In the explosive and dazzling world of artificial intelligence, the Large Language Model (LLM) is the undisputed star of the show. It is the brilliant “brain,” the source of the startling intelligence that allows a machine to converse, to reason, and to create. But there is an unsung hero in this story, a vast and powerful infrastructure that works silently in the background to make this magic possible in the real world.

For an AI to have a voice, it needs more than just a brain; it needs a body. It needs a sophisticated, high-performance nervous system that can connect it to the world of sound. This is the indispensable role of the modern voice API for developers.

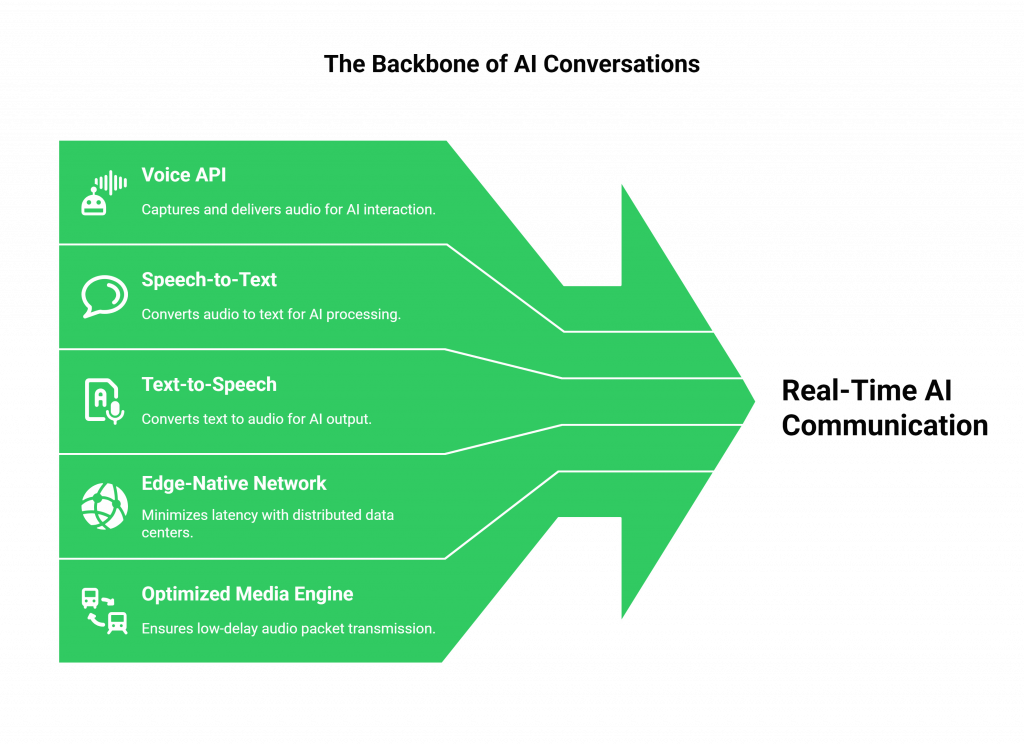

It is a common and dangerous misconception to view the voice API as a simple add-on or a commodity “pipe.” In reality, the core voice API stack is the architectural backbone upon which any serious, scalable, and reliable AI voice infrastructure is built.

It is the powerful and complex engine that handles the brutal, real-time demands of live conversation, freeing the AI’s intelligence to focus on what it does best: thinking. This guide will explore why the voice API for developers is not just a part of an AI voice system, but its most critical and foundational component.

The “Two-Sided Brain” Problem: Why AI and Telecom Are Different Worlds

Building a production-grade voice AI is not a single engineering challenge; it is a challenge of bridging two completely different and highly specialized technological universes.

The World of Artificial Intelligence

This is the world of data, algorithms, and models.

- The Focus: The primary challenge here is one of intelligence. How do you build and train a model that can understand language, that has a deep knowledge of a specific domain, and that can formulate a coherent and helpful response?

- The Environment: This is a world of asynchronous processing, of massive datasets, and of complex, computational logic. It is a world where a response time of a few seconds is often acceptable.

The World of Real-Time Telecommunications

This is the world of networks, protocols, and the unforgiving physics of real-time data transmission.

- The Focus: The primary challenge here is one of immediacy. How do you capture, transport, and reproduce a human voice from one side of the planet to the other with a delay of only a fraction of a second?

- The Environment: This is a world of continuous, high-frequency data streams, of stateful, long-lived sessions, and of a relentless battle against latency, jitter, and packet loss.
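
To make "jitter" concrete, here is a minimal Python sketch of the running interarrival jitter estimate described in RFC 3550, the RTP specification. The function name and the use of millisecond timestamps are simplifications chosen for illustration. Real RTP stacks work in media-clock timestamp units, and a voice API platform would normally do this measurement for you.

```python
from typing import Sequence


def interarrival_jitter(send_ms: Sequence[float], recv_ms: Sequence[float]) -> float:
    """Running jitter estimate in the style of RFC 3550, section 6.4.1.

    send_ms and recv_ms hold the send and arrival time of each packet
    (illustrative units: milliseconds).
    """
    jitter = 0.0
    for i in range(1, len(send_ms)):
        # How much the spacing between two packets changed in transit.
        d = (recv_ms[i] - recv_ms[i - 1]) - (send_ms[i] - send_ms[i - 1])
        # Smooth the estimate with a gain of 1/16, as the RFC specifies.
        jitter += (abs(d) - jitter) / 16.0
    return jitter


# Packets sent every 20 ms; the third one arrives 16 ms late.
sent = [0, 20, 40, 60]
received = [50, 70, 106, 110]
print(interarrival_jitter(sent, received))
```

Evenly spaced arrivals give an estimate of zero. Any packet that arrives early or late pushes the estimate up, which is the signal a jitter buffer uses to decide how much audio to hold back.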

A machine learning engineer is an expert in the first world. A network engineer is an expert in the second. It is exceptionally rare to find a team that has world-class expertise in both. The voice API for developers is the powerful abstraction layer that solves this problem.

The Voice API as the “Great Abstraction Layer”

The most important role of a modern voice API for developers is to act as a powerful layer of abstraction. It takes the entire, monumentally complex world of real-time telecommunications and hides it behind a simple, elegant, and programmable interface that a software developer can understand and control.

A platform like FreJun AI has already invested the years of engineering and the capital required to build a globally distributed, carrier-grade AI voice infrastructure. The core voice API stack is the set of tools that allows your application to use that infrastructure on demand. This abstraction is a powerful catalyst for innovation.

The following table shows the division of labor that the API enables.

| The Complex Task | Handled by the Voice API Platform? | Handled by Your Application? |
| --- | --- | --- |
| Connecting to the Global Telephone Network | Yes | No |
| Managing Phone Numbers & Carrier Contracts | Yes | No |
| Handling Low-Level Protocols (SIP/RTP) | Yes | No |
| Real-Time Audio Processing (Jitter, Packet Loss) | Yes | No |
| Ensuring Low-Latency Global Connectivity | Yes | No |
| Building the AI’s Intelligence (LLM) | No | Yes |
| Designing the Conversational Logic | No | Yes |
| Integrating with Business Systems (CRM) | No | Yes |

Ready to build your AI on a foundation that handles all the voice complexity for you? Sign up for FreJun AI and explore our powerful, developer-first platform.

How This “Backbone” Enables Real-Time AI Communication

This architectural separation is what enables the high-speed, conversational loop of a modern AI agent. The voice API for developers is not a passive component; it is an active, high-performance participant in the real-time AI communication workflow.

Providing the “Senses” for the AI

For an AI to have a conversation, it needs “ears” to hear and a “mouth” to speak. The voice API provides these senses.

- The Ears (Hearing): The API’s real-time media streaming feature is what captures the live, raw audio from the phone call and provides it as a clean, continuous stream to your application. This is the essential input for your Speech-to-Text (STT) engine.

- The Mouth (Speaking): The API’s call control features allow your application to inject a new audio stream, the one generated by your Text-to-Speech (TTS) engine, back into the live call for the user to hear.
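
The hear-think-speak loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not FreJun AI's actual API: `run_turn`, `fake_stt`, `fake_llm`, and `fake_tts` are hypothetical names, and the three stand-in functions only mimic the shape of real STT, LLM, and TTS services so the example runs on its own.

```python
from typing import Callable, Iterable, Iterator


def run_turn(
    inbound_audio: Iterable[bytes],
    transcribe: Callable[[bytes], str],
    generate_reply: Callable[[str], str],
    synthesize: Callable[[str], Iterator[bytes]],
) -> Iterator[bytes]:
    """Consume one caller utterance and yield the audio to play back."""
    # "Ears": gather the audio frames streamed from the live call.
    utterance = b"".join(inbound_audio)
    # Brain: speech-to-text, then the model decides what to say.
    reply = generate_reply(transcribe(utterance))
    # "Mouth": stream synthesized frames back toward the call.
    yield from synthesize(reply)


# Hypothetical stand-ins so the sketch is self-contained.
def fake_stt(audio: bytes) -> str:
    return audio.decode("ascii")


def fake_llm(text: str) -> str:
    return f"You said: {text}"


def fake_tts(text: str, frame_size: int = 8) -> Iterator[bytes]:
    data = text.encode("ascii")
    for start in range(0, len(data), frame_size):
        yield data[start:start + frame_size]


outbound = list(run_turn([b"hel", b"lo"], fake_stt, fake_llm, fake_tts))
print(outbound)
```

In a real deployment the inbound frames would come from the platform's media stream and the outbound frames would be handed back through its call control features. The application code in the middle stays the same either way.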

The High-Speed, Low-Latency “Nervous System”

The connection between these senses and the brain must be as close to instantaneous as possible. A delay of even a few hundred milliseconds can make a conversation feel unnatural.

- The Edge-Native Advantage: A modern voice platform like FreJun AI’s Teler engine is a globally distributed, edge-native network. It processes the call at a data center that is physically close to the end user. This proximity is the single most important factor in minimizing network latency, which is a major killer of the conversational experience.

- The Optimized Media Engine: The platform’s internal voice streaming engine is a highly specialized piece of software that is obsessively optimized for the sole purpose of moving real-time audio packets with the lowest possible delay.
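
A simple latency budget shows why the network legs matter. The sketch below is illustrative only: every stage name and every number is an assumption made up for the example, not a measurement of any platform, and the 800 ms target is a hypothetical ceiling chosen to make the comparison visible.

```python
from typing import Mapping

# Every number here is an illustrative assumption, not a measurement.
NEARBY_EDGE_MS = {
    "caller_to_edge": 40,
    "speech_to_text": 150,
    "llm_first_token": 250,
    "tts_first_audio": 120,
    "edge_to_caller": 40,
}

# Same pipeline, but the call is processed in a distant region,
# so both network legs take longer.
DISTANT_REGION_MS = {**NEARBY_EDGE_MS, "caller_to_edge": 150, "edge_to_caller": 150}

TARGET_MS = 800  # hypothetical ceiling for a natural-feeling reply


def total_latency_ms(stages: Mapping[str, int]) -> int:
    """Sum the per-stage delays for one conversational turn."""
    return sum(stages.values())


def slowest_stage(stages: Mapping[str, int]) -> str:
    """Name the stage that contributes the most delay."""
    return max(stages, key=lambda name: stages[name])


for label, stages in (("nearby edge", NEARBY_EDGE_MS), ("distant region", DISTANT_REGION_MS)):
    total = total_latency_ms(stages)
    verdict = "within" if total <= TARGET_MS else "over"
    print(f"{label}: {total} ms ({verdict} the {TARGET_MS} ms target)")
```

With these assumed figures, the AI stages are identical in both cases. Only the two network legs change, and that alone is enough to push the turn over the target.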

What is FreJun AI’s Role in This AI Voice Stack?

At FreJun AI, we have a clear and focused vision. We are not an AI company. We are a voice infrastructure company. Our entire platform is built on the philosophy that our job is to provide the most powerful, reliable, and developer-friendly “body” for your AI “brain.”

- We Are the Foundational Backbone: Our Teler engine is the carrier-grade, globally scalable AI voice infrastructure. We handle the immense, 24/7/365 complexity of the global voice network.

- We Are a Radically Developer-First Platform: We are obsessed with creating the best possible tools for developers. Our APIs are designed to be simple, powerful, and elegant, allowing you to control our massive global network with a few lines of code.

- We Are a Flexible, Model-Agnostic Bridge: We believe that the future of AI is too big and is moving too fast for any single company to own. Our platform is designed to be a completely open and flexible bridge, allowing you to connect the best-in-class AI models from any provider in the world. This is our core promise: “We handle the complex voice infrastructure so you can focus on building your AI.”
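
One way an application can keep its own side of a model-agnostic design is to code against a small interface rather than a specific vendor. The sketch below uses Python's `typing.Protocol` for this. `ReplyModel`, `EchoModel`, `ShoutModel`, and `handle_transcript` are hypothetical names invented for illustration; they are not part of any FreJun AI SDK or any model provider's library.

```python
from typing import Protocol


class ReplyModel(Protocol):
    """Anything that can turn a caller transcript into a reply."""

    def reply(self, transcript: str) -> str: ...


class EchoModel:
    # Stand-in for one provider's model.
    def reply(self, transcript: str) -> str:
        return f"You said: {transcript}"


class ShoutModel:
    # Stand-in for a different provider's model.
    def reply(self, transcript: str) -> str:
        return transcript.upper()


def handle_transcript(model: ReplyModel, transcript: str) -> str:
    """Application logic depends only on the interface, not the vendor."""
    return model.reply(transcript)


print(handle_transcript(EchoModel(), "hi"))
print(handle_transcript(ShoutModel(), "hi"))
```

Swapping providers then means writing one small adapter class, while the call-handling code stays untouched.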

Conclusion

The brilliant intelligence of a modern Large Language Model is only one half of the equation for a successful voice AI. The other, equally important half is the powerful and reliable infrastructure that gives that intelligence a voice and connects it to the world in real time.

The voice API for developers is this foundational backbone. It is the sophisticated, high-performance AI voice infrastructure that handles the immense, underlying complexity of telecommunications, allowing developers to focus on what they do best: building the intelligence of the conversation.

For any business looking to build a serious, scalable, and production-grade voice AI system, the choice of its core voice API stack is the most important architectural decision it will make.

Want to do a deep architectural dive into how our platform can provide the foundational backbone for your AI voice application? Schedule a demo with FreJun Teler.

Frequently Asked Questions (FAQs)

The main role of a voice API in a voice AI system is to act as the foundational “backbone,” handling the complex, real-time telecommunications so that the developer can focus on the AI’s intelligence.

An AI voice infrastructure is a complete, end-to-end technology stack that powers every aspect of a voice AI system. It includes the voice API, the carrier network, and real-time media processing engines.

The core voice API stack refers to the essential, foundational voice communication platform that an AI’s intelligence (the STT, LLM, and TTS models) is built upon.

A voice API enables real-time AI communication by providing a low-latency, real-time media streaming feature that allows the AI to “hear” and “speak” in a live conversation.

You do not need to be a telecom expert. The primary purpose of a voice API for developers is to abstract away the telecom complexity, allowing any proficient software developer to build voice applications.

One of the biggest challenges is bridging the gap between the world of AI (data and models) and the world of real-time telecommunications (networks and protocols).

Low latency is critical because it minimizes the delay in the conversation. A fast, responsive AI feels intelligent, while a slow, laggy AI feels frustrating and broken.