The rise of the voice bot is one of the most significant technological shifts in modern business communication. We are rapidly moving into a world where a customer’s first, and often only, interaction with a company is a conversation with an AI. This presents an incredible opportunity for efficiency and scale, but it also opens a Pandora’s box of complex ethical questions.

For a business leader, the process of building voice bots is no longer just a technical or an operational challenge; it is a profound ethical one. The decisions you make about how your AI listens, speaks, and makes decisions will have a direct and lasting impact on your brand’s integrity and the trust of your customers.

This is not a theoretical, futuristic debate. The ethical missteps of early AI implementations have already led to significant public backlash, regulatory scrutiny, and brand damage for some of the world’s largest companies.

As voice AI becomes more powerful and more deeply integrated into our daily lives, the need for a strong, proactive, and clearly defined ethical framework is no longer a “nice-to-have”; it is a non-negotiable requirement for responsible innovation. This guide will explore the critical ethical issues that every leader must consider when building voice bots.

Is Your AI Being Deceptive? The Ethics of Transparency

This is the first and most fundamental ethical question you must answer. When a customer calls your company and an AI answers, does the customer know they are speaking to a bot?

The Dangers of Undisclosed AI

The technology for creating hyper-realistic, human-sounding AI voices is advancing at a breathtaking pace. It is now possible to build a voice bot that is, for a short time, indistinguishable from a human. The temptation to deploy such a bot without disclosing its nature is a significant ethical pitfall.

- The Problem: An undisclosed AI can leave customers feeling deceived and manipulated. If a customer believes they are having a genuine human interaction, only to discover later that they were “tricked” by a machine, the result can be a profound and lasting breach of trust. Bad actors can also use undisclosed AI voices for malicious purposes, which makes it even more important for legitimate businesses to set a clear ethical standard through transparent AI disclosure.

- The Ethical Framework: The principle of “informed consent” is paramount. A customer has a right to know who, or what, they are speaking to.

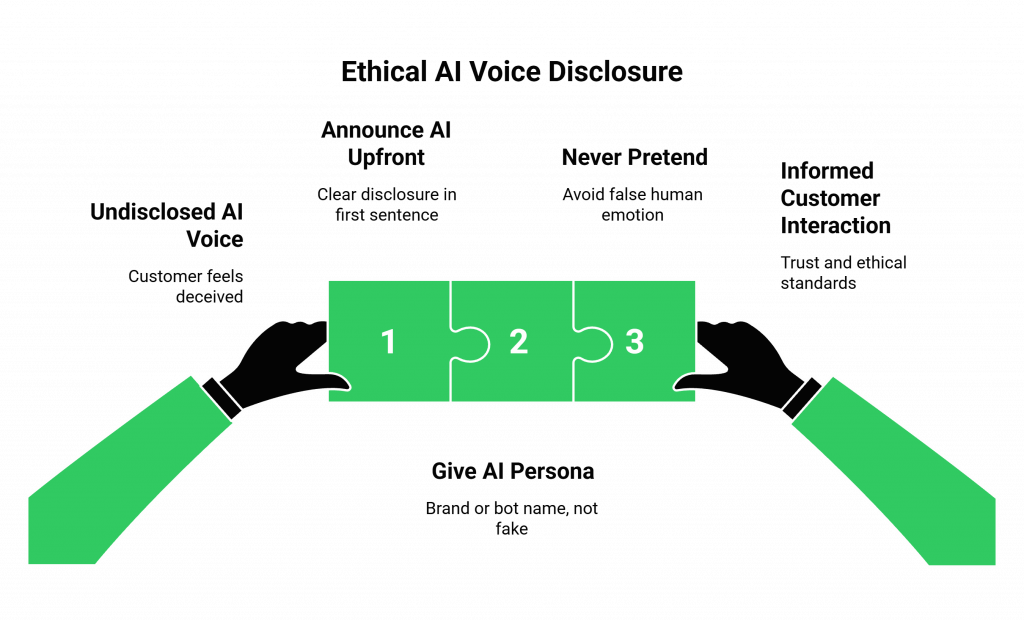

Best Practices for Transparent AI Disclosure

- Announce the AI Upfront: The very first sentence the bot speaks should be a clear and simple disclosure, such as, “Hello, you’ve reached our automated voice assistant,” or “Hi, I’m an AI-powered agent here to help you today.”

- Give the Bot a Clear “AI” Persona: Do not try to give your bot a fake human name and backstory. Give it a name that is clearly a brand or a bot name. This sets the right expectation from the start.

- Never Pretend: The bot’s script should never contain phrases that falsely imply human emotion or experience, such as “I understand how you feel” or “I’ve had that happen to me too.”
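
The practices above can be checked automatically before a script goes live. The following is a minimal sketch, assuming the bot's script is available as plain text; the marker and phrase lists, and the function names `greeting_discloses_ai` and `find_forbidden_phrases`, are illustrative assumptions rather than part of any particular platform.

```python
import re

# Hypothetical markers that count as an AI disclosure; tune these to your brand voice.
DISCLOSURE_MARKERS = ("automated", "ai-powered", "virtual assistant", "voice assistant")

# Phrases that falsely imply human emotion or experience (taken from the guidance above).
FORBIDDEN_PHRASES = ("i understand how you feel", "i've had that happen to me")


def normalize(text: str) -> str:
    """Lowercase the text and straighten curly apostrophes so matching is consistent."""
    return text.lower().replace("\u2019", "'")


def greeting_discloses_ai(greeting: str) -> bool:
    """Return True if the very first sentence of the greeting discloses the AI."""
    first_sentence = re.split(r"[.!?]", normalize(greeting))[0]
    return any(marker in first_sentence for marker in DISCLOSURE_MARKERS)


def find_forbidden_phrases(script_lines: list[str]) -> list[str]:
    """Return every script line that contains a phrase implying human experience."""
    return [
        line
        for line in script_lines
        if any(phrase in normalize(line) for phrase in FORBIDDEN_PHRASES)
    ]
```

A check like this could run as part of script review, so that a greeting without an upfront disclosure is caught before deployment. Simple substring matching will miss paraphrases, so it complements human review rather than replacing it.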

Are You Protecting Your Customer’s Most Personal Data: Their Voice?

A voice conversation is an incredibly rich and personal data stream. It contains not only the words a person says but also their tone of voice, their emotional state, their accent, and their unique vocal biometric signature. Handling this voice data ethically is a critical responsibility.

The Principles of Privacy-First Voice Design

A privacy-first voice design is one that is built on the principles of data minimization and purpose limitation.

- Data Minimization: Only collect and store the voice data that is absolutely necessary to perform the required function. Do you really need to store the full audio recording of every call, or do you just need the transcript? If you do store the audio, for how long do you need to keep it?

- Purpose Limitation: Be crystal clear with your customers about why you are recording or processing their voice data (e.g., “This call may be recorded for quality and training purposes”). Do not use the data for any other purpose without their explicit consent.

- Anonymization and Redaction: For the data you do store, implement robust processes to anonymize it or redact any Personally Identifiable Information (PII) as quickly as possible.
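
To make the redaction step concrete, here is a minimal sketch that masks a few common PII types in a transcript before it is stored. The regular expressions and the `redact_transcript` name are assumptions for illustration only; production systems generally need a vetted PII detection tool and locale-specific rules.

```python
import re

# Illustrative patterns only. Order matters: card numbers are masked before
# the looser phone pattern gets a chance to match the same digits.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "CARD": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact_transcript(text: str) -> str:
    """Replace each detected PII span with a bracketed label such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text
```

Running redaction before the transcript is written to storage, rather than as a later batch job, keeps the window in which raw PII exists as short as possible.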

This table summarizes a simple framework for ethical data handling in voice AI.

| Data Principle | Key Question for Leaders | Best Practice |
| --- | --- | --- |
| Transparency | Do my customers know what data is being collected and why? | Provide a clear and easy-to-understand privacy policy specifically for your voice interactions. |
| Minimization | Are we only collecting and storing the data we absolutely need? | Default to storing transcripts instead of raw audio wherever possible. Have a strict data retention policy. |
| Security | Is the voice data protected from unauthorized access? | Ensure all voice data, both in transit and at rest, is encrypted using strong protocols. |
| Control | Can my customers access, review, and delete their voice data? | Provide a clear process for customers to request access to or deletion of their personal data, in line with regulations like GDPR. |
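
A strict retention policy is easier to enforce when it is expressed in code. The sketch below assumes a hypothetical `VoiceRecord` type and made-up retention windows (30 days for audio, 365 days for transcripts); the right values depend on your own legal and business requirements.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Assumed retention windows, shorter for raw audio than for transcripts.
RETENTION = {
    "audio": timedelta(days=30),
    "transcript": timedelta(days=365),
}


@dataclass
class VoiceRecord:
    record_id: str
    kind: str  # "audio" or "transcript"
    created_at: datetime


def expired_records(records: list[VoiceRecord], now: datetime) -> list[VoiceRecord]:
    """Return the records that have outlived the retention window for their kind."""
    return [r for r in records if now - r.created_at > RETENTION[r.kind]]


# Example: find what a scheduled cleanup job would delete today.
now = datetime.now(timezone.utc)
records = [VoiceRecord("rec-1", "audio", now - timedelta(days=45))]
to_delete = expired_records(records, now)
```

A scheduled job could call `expired_records` and delete whatever it returns. The same lookup by customer would also support the deletion requests described in the Control row.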

Is Your AI Perpetuating Harmful Biases?

This is one of the most subtle and most dangerous ethical challenges in the world of AI. The AI models that power your voice bot, especially the speech-to-text (STT) model and the large language model (LLM), are trained on vast datasets of human language. If those datasets contain biases (and they all do), the models will learn those biases and can even amplify them.

The Challenge of Bias Reduction in Speech Models

- The Problem: A speech-to-text model might have a higher error rate for speakers with certain regional accents, or for speakers of a particular gender or ethnicity. This can lead to a frustrating and exclusionary experience where the voice bot simply “doesn’t understand” certain groups of your customers. An LLM might generate responses that contain subtle, harmful stereotypes. Addressing both problems is a major focus of responsible AI guidelines.

- The Ethical Framework: The principle of “fairness and equity” requires that your AI system should work equally well for all of your customers, regardless of their background or how they speak.

Best Practices for Bias Reduction

- Audit Your Models: Actively test your chosen STT model and LLM with a diverse range of voices, accents, and dialects to identify any performance disparities.

- Choose Inclusive Vendors: Partner with AI and voice infrastructure providers who are transparent about their efforts in bias reduction in speech models and who are committed to building more equitable AI.

- Implement a “Human in the Loop”: Have a clear process for regularly reviewing a sample of your AI’s conversations (the transcripts, not necessarily the audio) to look for and correct any instances of biased or inappropriate responses.
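
One way to run the audit described above is to compare word error rate (WER) across speaker groups. The following is a minimal sketch, assuming you have human-verified reference transcripts paired with the STT output and a group label for each sample; the function names and the 0.05 tolerance are illustrative assumptions.

```python
from collections import defaultdict


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref, hyp = reference.lower().split(), hypothesis.lower().split()
    prev = list(range(len(hyp) + 1))  # distances from an empty reference
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1] / max(len(ref), 1)


def wer_by_group(samples: list[tuple[str, str, str]]) -> dict[str, float]:
    """Average per-utterance WER for each group.

    Each sample is (group, reference_transcript, stt_output).
    """
    scores = defaultdict(list)
    for group, reference, hypothesis in samples:
        scores[group].append(word_error_rate(reference, hypothesis))
    return {group: sum(vals) / len(vals) for group, vals in scores.items()}


def flag_disparities(group_wer: dict[str, float], tolerance: float = 0.05) -> list[str]:
    """Return groups whose WER exceeds the best-performing group's by more than tolerance."""
    best = min(group_wer.values())
    return sorted(g for g, wer in group_wer.items() if wer - best > tolerance)
```

Averaging per-utterance WER is a simplification; some teams prefer a corpus-level rate weighted by utterance length. Either way, the important step is to report the metric per group rather than as a single overall number, since an aggregate can hide a large gap for one accent or dialect.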

Ready to build your voice bot on a platform that is committed to the principles of responsible AI? Sign up for FreJun AI.

Do Your Customers Have a Way Out? The Ethics of Escalation

The final ethical consideration is about control. No matter how intelligent your AI is, there will always be situations where a customer has a complex, emotional, or unique problem that requires the nuance and empathy of a human being.

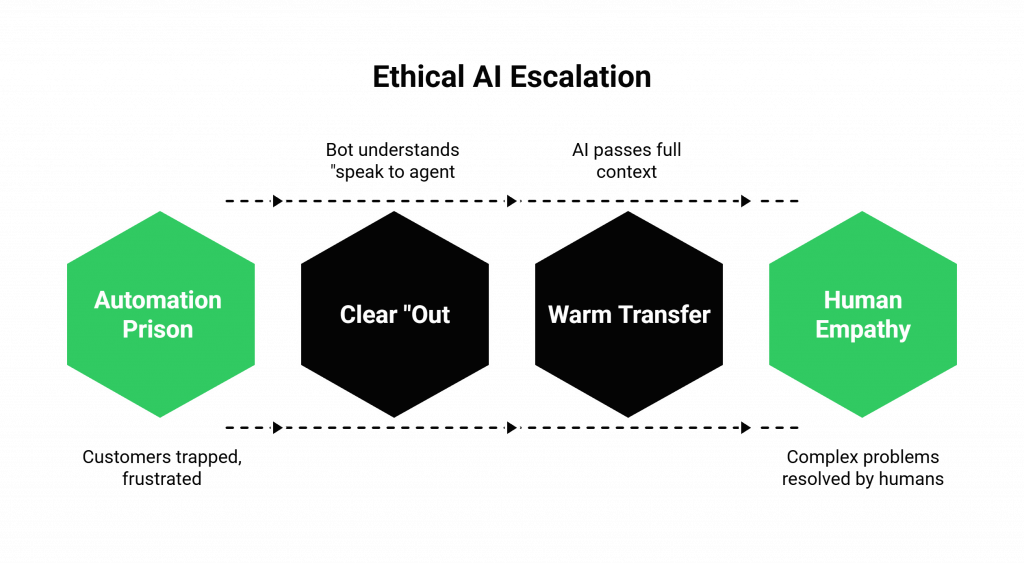

The Trap of the “Automation Prison”

- The Problem: Some businesses, in their pursuit of cost reduction, design their automated systems to be a maze with no exit. The customer is forced to go in circles, unable to reach a human agent, leading to extreme frustration.

- The Ethical Framework: A customer should always have the right to choose to speak with a human. An ethical voice bot is a helpful guide, not a prison guard.

Best Practices for Graceful Escalation

- Provide a Clear “Out”: At any point in the conversation, a customer should be able to say a simple phrase like “speak to an agent” or “I need a person,” and the bot should immediately understand this intent and initiate a transfer.

- Ensure a “Warm” Transfer: The escalation should be seamless. The AI should pass the full context of its conversation, along with the customer’s account details, directly to the human agent. This respects the customer’s time and allows the human agent to pick up the conversation exactly where the bot left off.
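
The two practices above, an always-available exit and a warm transfer, can be sketched together. This example assumes a hypothetical `Conversation` object and a simple phrase list; a production bot would more likely rely on its intent model to detect escalation requests, and the handoff payload fields shown here are assumptions, not a specific platform's format.

```python
from dataclasses import dataclass, field

# Illustrative phrases that signal the customer wants a human agent.
ESCALATION_PHRASES = (
    "speak to an agent",
    "talk to an agent",
    "speak to a human",
    "i need a person",
    "real person",
    "representative",
)


@dataclass
class Conversation:
    customer_id: str
    turns: list[tuple[str, str]] = field(default_factory=list)  # (speaker, text)


def wants_human(utterance: str) -> bool:
    """Return True if the utterance contains any escalation phrase."""
    text = utterance.lower()
    return any(phrase in text for phrase in ESCALATION_PHRASES)


def build_handoff(conversation: Conversation) -> dict:
    """Package the full conversation so the agent can pick up where the bot left off."""
    customer_turns = [text for speaker, text in conversation.turns if speaker == "customer"]
    return {
        "customer_id": conversation.customer_id,
        "transcript": list(conversation.turns),
        "last_customer_message": customer_turns[-1] if customer_turns else "",
    }


def handle_turn(conversation: Conversation, utterance: str) -> dict:
    """Record the customer's turn and escalate immediately when asked."""
    conversation.turns.append(("customer", utterance))
    if wants_human(utterance):
        return {"action": "transfer", "context": build_handoff(conversation)}
    return {"action": "continue"}
```

The escalation check runs on every turn, before any other dialogue logic, so the exit is available at any point in the conversation rather than only from a specific menu.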

Conclusion

The journey of building voice bots is as much an ethical exploration as it is a technical one. The choices we make as leaders and developers in this new frontier will have a lasting impact on the relationship between businesses and their customers.

By embedding the core principles of transparency, privacy, fairness, and human-centricity into the very foundation of our voice AI strategies, we can ensure that this powerful technology is used not just to create efficiency, but to build trust.

A truly successful voice bot is one that is not only intelligent in its design but is also deeply ethical in its application.

Want to discuss how our platform can help you build a voice bot that is not only powerful but also aligns with your company’s ethical commitments? Schedule a demo with our team at FreJun Teler.

Frequently Asked Questions (FAQs)

While all are important, the most fundamental issue is transparency. You must provide a transparent AI disclosure to ensure that customers are always aware they are speaking to an AI and not a human.

Responsible AI guidelines are a set of principles and best practices that a company commits to following in its development and deployment of artificial intelligence. These guidelines typically cover areas like fairness, transparency, accountability, and privacy.

Privacy-first voice design is specifically concerned with the unique and highly sensitive nature of voice data. It goes beyond standard data privacy to include considerations about the storage of vocal biometric data, the analysis of emotional tone, and the explicit need for consent for call recording.

Eliminating bias is difficult, but teams can reduce it by measuring disparities continuously and actively mitigating their impact.

Ethical data handling for a voice bot follows clear principles. It collects only necessary data through minimization. It uses data strictly for its stated purpose. Strong encryption ensures security at every stage. Customers retain control over their own data.

The laws are evolving rapidly across many jurisdictions. In some places, disclosure is becoming a legal requirement. Certain states prohibit using bots to sell products or services without disclosing that they are automated. Even where not required by law, disclosure remains a strong ethical best practice.

You must partner with a voice infrastructure provider that takes security seriously. This means they must encrypt all voice communication in transit, using protocols like TLS for signaling and SRTP for the media stream itself.