MindOS gives developers a way to create autonomous AI agents, or “Minds,” each with a distinct personality, memory, and set of skills. You can design a character, give it knowledge, and define its behavior.

However, these brilliant minds are born silent, confined to text-based interfaces. To truly bring your creation to life, you need to give it a voice. This is where a VoIP Calling API Integration for MindOS becomes essential.

Imagine a user being able to pick up their phone and have a real, spoken conversation with the unique AI character you designed.

This tutorial is a developer-focused guide to the technical architecture and the step-by-step process required to make this happen, transforming your MindOS agent from a silent entity into an interactive, voice-enabled personality.


What is MindOS?

MindOS is a platform for creating and deploying lifelike, autonomous AI agents called “Minds.” Unlike generic chatbots, these agents are designed to have persistent personalities, long-term memory, and the ability to perform tasks using tools.

Key Features of MindOS

- Persona & Memory: You can define a Mind’s personality, background, and communication style. It maintains memory across conversations, allowing for stateful and context-aware interactions.

- Actions (Tools): You can equip your Minds with “Actions,” which are essentially tools that connect to external APIs. This allows your agent to perform tasks like looking up data, booking appointments, or interacting with other services.

- API Access: Crucially for this tutorial, every Mind you create can be reached through a simple, secure API. This API is the endpoint we will use to connect our voice system to the agent’s brain.


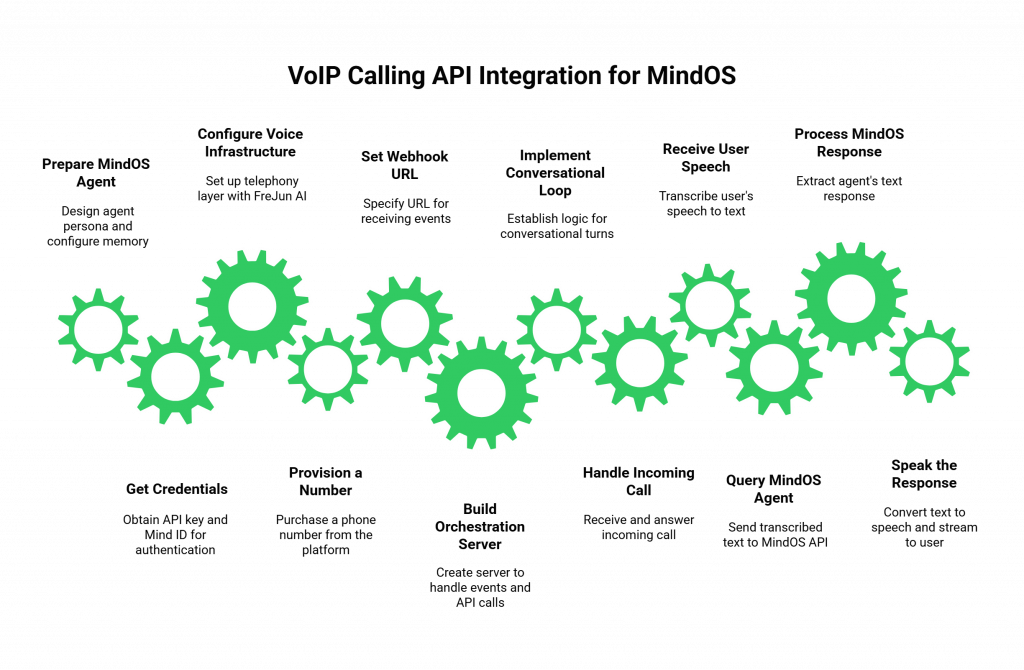

Step-by-Step Setup Guide for VoIP Calling API Integration with MindOS

This is the core of the VoIP Calling API Integration for MindOS. Follow these steps to build the bridge between voice and your AI’s mind.

Step 1: Prepare Your MindOS Agent

Before you write any code, your AI agent needs to be ready.

- Create Your Mind: In the MindOS Studio, design your agent. Define its persona, give it any necessary background knowledge, and configure its memory.

- Get Your Credentials: Find your MindOS API Key and the unique Mind ID for the agent you want to voice-enable. These will be used to authenticate and direct your API requests.
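One common way to keep these credentials out of source code is to read them from environment variables when the server starts. The sketch below assumes the hypothetical variable names `MINDOS_API_KEY` and `MINDOS_MIND_ID`; MindOS does not prescribe them.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class MindOSConfig:
    """Credentials for the Mind you are voice-enabling."""
    api_key: str
    mind_id: str


def load_mindos_config(env: Optional[Mapping[str, str]] = None) -> MindOSConfig:
    """Read credentials from environment variables (hypothetical names)."""
    env = os.environ if env is None else env
    names = ("MINDOS_API_KEY", "MINDOS_MIND_ID")
    missing = [name for name in names if not env.get(name)]
    if missing:
        # Fail at startup rather than on the first live phone call.
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return MindOSConfig(api_key=env["MINDOS_API_KEY"], mind_id=env["MINDOS_MIND_ID"])
```

Calling `load_mindos_config()` with no argument reads the real process environment; passing a dictionary makes the function easy to test.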

Step 2: Configure Your Voice Infrastructure

This step sets up the telephony layer.

- Sign Up: Create an account with a developer-first voice platform like FreJun AI.

- Provision a Number: Purchase a phone number from the platform’s dashboard.

- Set Your Webhook URL: This is the most critical configuration. In your phone number’s settings, you must specify a webhook URL. This URL must point to a public endpoint on your orchestration server (the application you are about to build). For local development, a tool like ngrok is perfect for exposing your local server to the internet.
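Before pasting a URL into the dashboard, a quick local check can catch the most common mistake: pointing the webhook at an address that only your own machine can reach. This is a sketch only. Requiring HTTPS is our assumption rather than a documented FreJun AI rule, and the check cannot tell whether your server is actually running behind the URL.

```python
import ipaddress
from typing import List
from urllib.parse import urlparse


def check_webhook_url(url: str) -> List[str]:
    """Return problems that would stop a voice platform from reaching this URL.

    An empty list means the URL looks publicly reachable.
    """
    problems = []
    parsed = urlparse(url)
    if parsed.scheme != "https":
        problems.append("use https")  # our assumption, not a documented platform rule
    host = parsed.hostname or ""
    if not host:
        problems.append("missing hostname")
    elif host == "localhost" or host.endswith(".localhost"):
        problems.append("localhost is not reachable from the internet")
    else:
        try:
            ip = ipaddress.ip_address(host)
        except ValueError:
            ip = None  # a domain name, such as an ngrok hostname
        if ip is not None and (ip.is_private or ip.is_loopback):
            problems.append("private or loopback IP address")
    return problems
```

For example, a raw `http://localhost:5000/webhook` URL is flagged twice, while the HTTPS hostname that ngrok prints for your tunnel passes.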


Step 3: Build the Orchestration Server

This is the central piece of code you will write. It acts as a middleman, receiving events from the voice platform and communicating with the MindOS API. You can use any backend framework like Python/Flask or Node.js/Express.
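A minimal sketch of the orchestration server's shape, using only Python's standard library so it runs without installing Flask. It assumes the voice platform POSTs a JSON body with an `event` field (here `call.incoming` and `speech.transcribed`) and accepts a JSON reply such as `{"action": "answer"}`. These payload formats are hypothetical, not FreJun AI's documented webhook schema, so map them to the events your platform actually sends.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def handle_incoming_call(event: dict) -> dict:
    # Tell the voice platform to answer (hypothetical instruction format).
    return {"action": "answer"}


def handle_transcript(event: dict) -> dict:
    # Placeholder reply; the conversational loop replaces this with a MindOS query.
    return {"action": "speak", "text": f"You said: {event.get('text', '')}"}


# Hypothetical event names; use the ones your voice platform documents.
HANDLERS = {
    "call.incoming": handle_incoming_call,
    "speech.transcribed": handle_transcript,
}


def route_event(event: dict) -> dict:
    """Dispatch one webhook event to its handler based on its 'event' field."""
    handler = HANDLERS.get(event.get("event"))
    if handler is None:
        return {"action": "ignore"}
    return handler(event)


class WebhookHandler(BaseHTTPRequestHandler):
    """Receives POSTed JSON events and replies with JSON instructions."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        try:
            event = json.loads(self.rfile.read(length) or b"{}")
        except ValueError:  # malformed JSON or undecodable bytes
            event = None
        if not isinstance(event, dict):
            self.send_error(400, "Body must be a JSON object")
            return
        body = json.dumps(route_event(event)).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def run(port: int = 5000) -> None:
    """Start the server; expose the port with ngrok during local development."""
    HTTPServer(("0.0.0.0", port), WebhookHandler).serve_forever()
```

Calling `run()` blocks and serves requests on port 5000. Keeping the routing in a plain `route_event` function means the same logic can move unchanged into a Flask or Express route later.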

Step 4: Implement the Conversational Loop

Within your webhook handler, you will implement the logic for a full conversational turn.

- Handle the Incoming Call: When a user calls, your webhook receives an event. Your code responds by instructing the voice platform to answer the call.

- Receive User Speech: The user speaks, and your webhook receives an event containing the text transcribed by the speech-to-text (STT) service.

- Query Your MindOS Agent: Take the transcribed text and pass it to a helper function (for example, one named query_mindos) that makes a POST request to the MindOS API with the user’s message.

- Process the MindOS Response: The MindOS API will return a JSON object. Extract the agent’s text response from the output field.

- Speak the Response: Send this text to your text-to-speech (TTS) service, then instruct your voice platform to stream the resulting audio back to the caller. This completes the loop.
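The loop above can be sketched in Python with the standard library. The endpoint URL, the `Authorization: Bearer` header, and the request fields `mind_id` and `message` are hypothetical placeholders, not the documented MindOS API; only the `output` response field and the per-call `session_id` come from this tutorial. The `opener` parameter exists so the HTTP call can be replaced in tests.

```python
import json
import urllib.request

# Hypothetical endpoint; use the URL given in the MindOS API documentation.
MINDOS_API_URL = "https://api.example.com/mindos/query"


def build_mindos_request(api_key: str, mind_id: str, message: str,
                         session_id: str) -> urllib.request.Request:
    """Build the POST request for one turn (field and header names are assumptions)."""
    payload = {"mind_id": mind_id, "message": message, "session_id": session_id}
    return urllib.request.Request(
        MINDOS_API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )


def query_mindos(api_key: str, mind_id: str, message: str, session_id: str,
                 opener=urllib.request.urlopen, timeout: float = 15.0) -> str:
    """Send the caller's transcribed speech to MindOS and return the reply text."""
    request = build_mindos_request(api_key, mind_id, message, session_id)
    with opener(request, timeout=timeout) as response:
        data = json.loads(response.read())
    return data["output"]  # the agent's text lives in the 'output' field


def handle_turn(transcript: str, api_key: str, mind_id: str, session_id: str,
                opener=urllib.request.urlopen) -> dict:
    """One conversational turn: transcript in, hypothetical 'speak' instruction out."""
    reply = query_mindos(api_key, mind_id, transcript, session_id, opener=opener)
    # Your TTS step and the voice platform's streaming call would consume this.
    return {"action": "speak", "text": reply}
```

A generous `timeout` is deliberate: a Mind that runs Actions may take longer to answer than one that replies directly, and timing out mid-turn leaves the caller with no response at all.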


Why Is FreJun AI the Ideal Voice Infrastructure for MindOS?

MindOS creates a complex, stateful personality. To make that personality feel real in a conversation, the interaction must be instant. This is where FreJun AI excels. Our philosophy is “We handle the complex voice infrastructure so you can focus on building your AI.”

For a MindOS developer, FreJun is the perfect foundation because our entire platform is engineered for the ultra-low latency required to make an AI character feel responsive and alive. Our simple, developer-friendly APIs make building the orchestration server a clean and straightforward process.

Conclusion

MindOS provides the tools to build a sophisticated AI brain with personality and memory. A VoIP Calling API Integration for MindOS provides the voice that brings that brain to life.

By following this tutorial, you can bridge the gap between your agent’s complex internal world and the real, spoken world of your users, transforming your digital creations into truly interactive and engaging characters.


Frequently Asked Questions (FAQs)

What is the orchestration server?

The orchestration server is the custom application you build. It acts as the central controller, receiving events from the voice platform and making the necessary API calls to MindOS, the STT service, and the TTS service to manage the conversation.

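Can my MindOS agent still use its Actions during a phone call?

Yes. When your server sends a query to the MindOS API, the agent executes its full logic, including calling any Actions you have configured. Your orchestration server waits for the final text response before passing it to the TTS service, so the time an Action takes adds to the caller’s wait.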

How does the agent remember what was said earlier in the same call?

You need to manage the session_id. When you make an API call to MindOS, include a unique session ID for that phone call. By using the same session ID for every turn in a single call, MindOS can access the conversation’s history and use its memory correctly.
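A small in-memory store is one way to keep exactly one session ID per call. This sketch assumes your voice platform attaches a call identifier to each webhook event (called `call_id` here, a hypothetical name); the `call-<uuid>` session format is our own choice, not something MindOS requires.

```python
import threading
import uuid
from typing import Dict


class CallSessions:
    """Map each phone call to one MindOS session ID for the call's lifetime."""

    def __init__(self) -> None:
        self._sessions: Dict[str, str] = {}
        self._lock = threading.Lock()  # webhook requests may be handled concurrently

    def session_for(self, call_id: str) -> str:
        """Return this call's session ID, creating one on the first turn."""
        with self._lock:
            if call_id not in self._sessions:
                self._sessions[call_id] = f"call-{uuid.uuid4()}"
            return self._sessions[call_id]

    def end_call(self, call_id: str) -> None:
        """Forget the mapping when the caller hangs up."""
        with self._lock:
            self._sessions.pop(call_id, None)
```

Because the mapping lives in process memory, it only works while a single server instance handles every turn of a call; a deployment with several instances would need a shared store instead.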

What is the biggest technical challenge when voice-enabling a MindOS agent?

Latency. The delay between when the user stops speaking and the MindOS agent starts replying must be as short as possible (ideally under a second). A low-latency voice infrastructure is non-negotiable for an immersive experience.
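To see how close a turn comes to that one-second target, time each stage separately. In this sketch, `think` and `synthesize` are placeholders for your MindOS query and TTS call. The timings cover only those two stages, not speech recognition or audio delivery over the call, so the delay the caller actually hears will be longer.

```python
import time
from typing import Callable, Dict, Tuple

LATENCY_BUDGET_S = 1.0  # the "ideally under a second" target


def timed_turn(transcript: str,
               think: Callable[[str], str],
               synthesize: Callable[[str], bytes]) -> Tuple[bytes, Dict[str, float]]:
    """Run one turn and return the audio plus per-stage timings in seconds."""
    start = time.perf_counter()
    reply = think(transcript)          # placeholder for the MindOS query
    after_mind = time.perf_counter()
    audio = synthesize(reply)          # placeholder for the TTS request
    end = time.perf_counter()
    timings = {
        "mindos_s": after_mind - start,
        "tts_s": end - after_mind,
        "total_s": end - start,
    }
    if timings["total_s"] > LATENCY_BUDGET_S:
        print(f"Turn took {timings['total_s']:.2f}s, over the {LATENCY_BUDGET_S:.1f}s budget")
    return audio, timings
```

Logging the split between `mindos_s` and `tts_s` shows which stage to optimize first. If TTS dominates, streaming audio to the caller as it is generated, rather than waiting for the full clip, is a common way to shorten the perceived delay.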

Do I need to host the orchestration server myself?

Yes. This component is your own custom code. You will need to host it on a cloud platform (like AWS, Google Cloud, or Heroku) so that it has a public URL that the voice platform’s webhooks can reach.