This guide provides a technical overview for developers on how to connect a VoIP Calling API to AssemblyAI’s audio intelligence models. It covers the two primary integration patterns: real-time streaming for live analysis and asynchronous processing for post-call analysis, enabling you to transform raw phone call audio into structured, actionable data.

A VoIP Calling API and AssemblyAI are two distinct, complementary layers of a voice application stack, and each has a separate job.

- VoIP Calling API (The Transport Layer): This is your telecommunications service, such as FreJun AI. Its role is to manage phone numbers, handle the live call connection, and provide the raw audio either as a real-time stream or a final recording.

- AssemblyAI (The Analysis Layer): This is your AI engine. Its role is to process the audio provided by the VoIP API and convert it into structured data, such as transcripts, summaries, and sentiment scores.


Integration Pattern 1: Real-Time Streaming Analysis

This pattern is for applications that require live transcription and intelligence during an active call. It is the more complex of the two patterns and is essential for interactive use cases.

Use Cases: Real-time agent assist, live compliance monitoring, and interactive voice agents (IVAs).

Real-Time Workflow for Developers

This integration requires your application server to act as a high-speed proxy between two WebSocket connections.

- Receive Incoming Call: Your VoIP provider sends a webhook to your server’s endpoint when a user calls your number.

- Establish Two WebSockets:

  - Connection A (VoIP Platform -> Your Server): Your server responds to the webhook, instructing the VoIP platform to open a WebSocket and begin streaming raw call audio to your server.

  - Connection B (Your Server -> AssemblyAI): Your server simultaneously opens a separate WebSocket connection to AssemblyAI’s real-time transcription endpoint.

- Proxy the Audio Data: As your server receives audio data chunks from Connection A, it immediately forwards them through Connection B to AssemblyAI. This must be done with minimal processing to maintain low latency.

- Receive Live Transcripts: Your server listens on Connection B for real-time JSON messages from AssemblyAI containing partial and final transcripts. This text can then be used by your application’s live logic to provide real-time feedback or conversational responses.
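The proxy steps above can be sketched in a transport-agnostic way. This is a minimal sketch, not a definitive implementation: the function names are illustrative, the two WebSocket connections are stood in for by plain async iterators and an async send callable, and the `text` and `is_final` message fields are hypothetical placeholders. Check AssemblyAI’s current streaming documentation for the real message schema and audio format requirements.

```python
import asyncio
import json
from typing import AsyncIterator, Awaitable, Callable


async def forward_audio(
    voip_audio: AsyncIterator[bytes],
    send_to_stt: Callable[[bytes], Awaitable[None]],
) -> int:
    """Forward each audio chunk from Connection A to Connection B untouched."""
    forwarded = 0
    async for chunk in voip_audio:
        # No buffering or re-encoding here, to keep added latency minimal.
        await send_to_stt(chunk)
        forwarded += 1
    return forwarded


async def read_transcripts(
    stt_messages: AsyncIterator[str],
    on_partial: Callable[[str], None],
    on_final: Callable[[str], None],
) -> None:
    """Dispatch JSON messages arriving on Connection B.

    ASSUMPTION: "text" and "is_final" are hypothetical field names used
    only for illustration; the real schema may differ.
    """
    async for raw in stt_messages:
        message = json.loads(raw)
        text = message.get("text", "")
        if not text:
            continue  # skip messages that carry no transcript text
        if message.get("is_final"):
            on_final(text)
        else:
            on_partial(text)


async def bridge_call(voip_audio, send_to_stt, stt_messages, on_partial, on_final) -> int:
    """Run both directions concurrently for the lifetime of one call."""
    forwarded, _ = await asyncio.gather(
        forward_audio(voip_audio, send_to_stt),
        read_transcripts(stt_messages, on_partial, on_final),
    )
    return forwarded
```

In a real server, `voip_audio` and `stt_messages` would be backed by the two WebSocket connections, and `on_final` would hand the text to your application’s live logic. Running the two loops concurrently keeps transcript handling from stalling the audio path.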


Integration Pattern 2: Asynchronous Post-Call Analysis

This pattern is simpler, more robust, and widely used. It suits applications that analyze calls after they have concluded.

Use Cases: Call center analytics, sales intelligence dashboards, automated quality assurance, and market research.

Asynchronous Workflow for Developers

This integration uses call recordings and standard REST API calls, making it easier to implement.

- Record the Call: You handle an inbound or outbound call via your VoIP API with the recording feature enabled.

- Receive the Recording URL: After the call ends, your VoIP provider sends a webhook to your server. The webhook payload contains a secure URL to the final audio recording file (e.g., MP3/WAV).

- Submit to AssemblyAI: Your server’s webhook handler makes a POST request to AssemblyAI’s asynchronous transcription endpoint. You pass the recording URL in the request body. In this same request, you can specify additional models to run, such as summarization or sentiment analysis.

- Receive the Full Analysis: AssemblyAI processes the audio file in the background. Once complete, it sends a webhook to the endpoint you specified in your request. This notification identifies the finished transcript and its status; your server then retrieves the complete, structured JSON, with the transcript and all requested intelligence, from AssemblyAI’s transcript endpoint.
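The submission step above can be sketched with the standard library alone. This is a minimal sketch under stated assumptions: `recording_url` is a hypothetical key for the VoIP webhook payload (use your provider’s actual field name), `build_transcript_request` is an illustrative helper, and the request fields (`audio_url`, `webhook_url`, `sentiment_analysis`, `summarization`) reflect AssemblyAI’s REST API as commonly documented. Verify them against the current API reference before relying on them.

```python
import json
import urllib.request

ASSEMBLYAI_TRANSCRIPT_URL = "https://api.assemblyai.com/v2/transcript"


def build_transcript_request(
    voip_payload: dict, api_key: str, callback_url: str
) -> urllib.request.Request:
    """Build the POST request that submits a finished recording for analysis."""
    # ASSUMPTION: "recording_url" is a hypothetical payload key.
    recording_url = voip_payload["recording_url"]
    body = {
        "audio_url": recording_url,    # where AssemblyAI downloads the audio
        "webhook_url": callback_url,   # where AssemblyAI notifies completion
        "sentiment_analysis": True,    # optional extra models, requested in
        "summarization": True,         # the same call as the transcript
    }
    return urllib.request.Request(
        ASSEMBLYAI_TRANSCRIPT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"authorization": api_key, "content-type": "application/json"},
        method="POST",
    )
```

Inside your webhook handler you would send the request with `urllib.request.urlopen(request)` and store the returned transcript ID. Building the request separately from sending it keeps the handler easy to unit test.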


The Critical Role of Your VoIP Provider

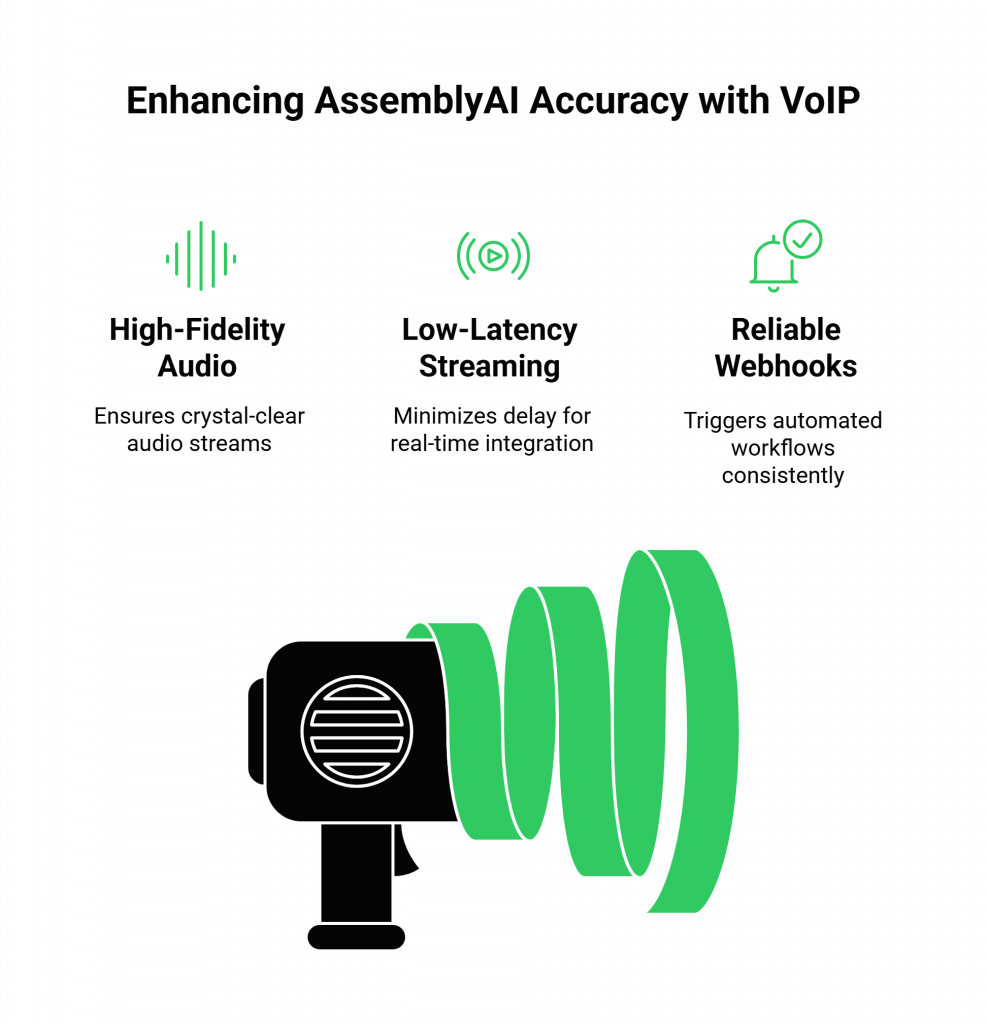

The accuracy of AssemblyAI’s models depends heavily on the quality of the input audio. A professional VoIP provider is essential for:

- High-Fidelity Audio: Providing crystal-clear audio streams and recordings to maximize AssemblyAI’s accuracy.

- Low-Latency Streaming: Delivering the audio for real-time integrations with minimal delay.

- Reliable Webhooks: Ensuring the automated post-call workflow is triggered consistently.

For these reasons, a developer-focused platform like FreJun AI is an ideal transport layer, providing the high-quality audio input necessary for AssemblyAI’s analysis engine to perform optimally.

Conclusion: Choose the Right Pattern for Your Use Case

If you correctly integrate a high-quality VoIP API with AssemblyAI, you can transform raw telephone conversations into structured, actionable data for any application.

- For live, in-call features, implement the real-time streaming pattern using WebSockets.

- For post-call analytics and reporting, implement the asynchronous pattern using call recordings and webhooks.


Frequently Asked Questions (FAQs)

AssemblyAI alone is not a complete conversational agent. It provides the real-time “ears” (STT). To build a conversational agent, you must combine it with an LLM for logic and a Text-to-Speech (TTS) service for the “voice,” and orchestrate the conversation yourself.
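The orchestration described above can be sketched as a single conversational turn. This is a minimal sketch with entirely hypothetical names: `generate_reply` stands in for whichever LLM client you use and `synthesize_speech` for your TTS service. Neither is part of AssemblyAI or any specific SDK.

```python
from typing import Callable, List, Tuple

History = List[Tuple[str, str]]  # (speaker, text) pairs, oldest first


def handle_turn(
    caller_text: str,
    history: History,
    generate_reply: Callable[[History], str],
    synthesize_speech: Callable[[str], bytes],
) -> bytes:
    """Turn one final transcript into audio for the agent's reply."""
    history.append(("caller", caller_text))  # the STT output is the "ears"
    reply = generate_reply(history)          # the LLM supplies the logic
    history.append(("agent", reply))
    return synthesize_speech(reply)          # the TTS supplies the "voice"
```

Your server would call `handle_turn` each time a final transcript arrives and stream the returned audio back to the caller through the VoIP platform.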

The real-time streaming pattern is significantly more complex due to the need to manage two persistent WebSocket connections and proxy data with low latency. The asynchronous pattern is more robust and simpler as it uses standard REST APIs.

In a single asynchronous API call, you can get a transcript plus analysis from models for summarization, sentiment analysis, topic detection, entity detection (PII, products, etc.), and speaker diarization.

Audio quality is the most critical factor. High-quality, clear audio will result in significantly higher accuracy from AssemblyAI. Compressed or noisy audio will degrade performance.