AI-driven voice interactions are rapidly becoming the default interface for customer support, sales, and enterprise workflows. However, building effective voice agents requires more than just advanced language models; it demands robust voice recognition, low-latency streaming, and seamless integration with TTS and LLM systems. This blog explores how a Voice Recognition SDK improves AI interaction accuracy, supports multi-turn conversations, and enables natural voice AI experiences.

By understanding the role of voice infrastructure and the technical challenges involved, developers, product managers, and engineering leads can design AI agents that respond quickly, maintain context, and scale reliably across global applications.

Why Are AI-Driven Interactions Moving Toward Voice Interfaces?

AI interactions are no longer limited to text boxes and chat windows. Instead, voice is becoming the preferred interface for real-world use cases. People speak faster than they type. More importantly, voice allows users to interact with AI while multitasking, driving, or working.

As of 2025, global deployment of voice assistants has exceeded 8.4 billion devices worldwide, indicating that voice interfaces are now a mainstream interaction channel rather than a niche feature.

Because of this shift, businesses are now building natural voice AI systems that can listen, understand, and respond in real time. However, voice-based AI is not simply “text AI with audio added.” It introduces new technical challenges that directly affect user experience.

As a result, the quality of AI-driven interactions increasingly depends on how well voice input is captured and processed.

This is where a Voice Recognition SDK plays a central role.

What Is A Voice Recognition SDK And How Is It Different From Basic Speech To Text?

At a basic level, speech-to-text converts spoken audio into written text. However, a Voice Recognition SDK goes much further than that.

Basic Speech To Text APIs Usually:

- Accept pre-recorded audio files

- Process speech after the user finishes talking

- Return a single transcription result

- Work well for offline or non-interactive use cases

A Voice Recognition SDK, On The Other Hand:

- Streams live audio in real time

- Produces partial and final transcripts continuously

- Manages audio sessions and connections

- Handles latency, jitter, and packet loss

- Feeds text instantly into downstream AI systems

Because of this, a voice recognition SDK is not just a transcription tool. Instead, it becomes the foundation of a conversational AI SDK, enabling real-time, two-way communication.

In simple terms, speech-to-text helps AI read. A voice recognition SDK helps AI listen.

How Does Voice Recognition Affect AI Interaction Accuracy?

AI interaction accuracy depends on one key factor: how correctly the AI understands user intent. In voice-based systems, intent understanding starts with transcription quality.

Even small transcription errors can lead to:

- Wrong intent classification

- Incorrect tool execution

- Broken conversation flow

- Loss of user trust

For example, consider the difference between:

- “Cancel my appointment tomorrow”

- “Confirm my appointment tomorrow”

If a voice recognition SDK misinterprets a single word, the AI may perform the opposite action.

Voice Recognition Directly Impacts:

- Intent detection accuracy

- Entity extraction (dates, names, numbers)

- Context continuity across turns

- Confidence scores passed to the LLM

Therefore, improving AI interaction accuracy is not only about choosing a better language model. It also depends on choosing a voice recognition SDK that can handle real-world speech reliably.

Why Is Real Time Voice Recognition Critical For Natural AI Conversations?

Human conversations happen in real time. People interrupt each other, pause mid-sentence, and change direction quickly. AI systems must match this behavior to feel natural.

However, batch-based transcription creates delays. These delays break conversational flow and make AI sound robotic.

Real Time Voice Recognition Enables:

- Streaming partial transcripts as the user speaks

- Faster LLM response generation

- Interrupt handling and barge-in support

- Reduced silence between turns

Because of this, real-time voice recognition is essential for building natural voice AI systems.

Latency Benchmarks That Matter:

| Component | Acceptable Range |

| Audio Capture – STT | < 150 ms |

| STT – LLM Input | < 50 ms |

| LLM – Response Start | < 200 ms |

| Total Turn Delay | < 500 ms |

If latency exceeds these thresholds, conversations feel slow and unnatural. Therefore, a well-designed voice recognition SDK focuses heavily on low-latency streaming.

How Do Voice Recognition SDKs Fit Into An AI Voice Agent Architecture?

Voice agents are often described as “AI that talks.” In reality, they are complex systems made up of multiple components.

A Typical AI Voice Agent Includes:

- Voice Recognition (STT)

- Large Language Model (LLM)

- Context Management or RAG

- Tool Calling and Business Logic

- Text To Speech (TTS)

- Voice Streaming Infrastructure

Among these components, the voice recognition SDK sits at the very beginning of the pipeline.

Simplified Flow:

- User speaks into a call or app

- Voice recognition SDK streams audio

- Partial text is generated continuously

- Text is sent to the LLM

- LLM decides what to say or do next

Because of this placement, voice recognition controls how quickly and accurately the AI can respond. If this layer fails, every downstream component suffers.

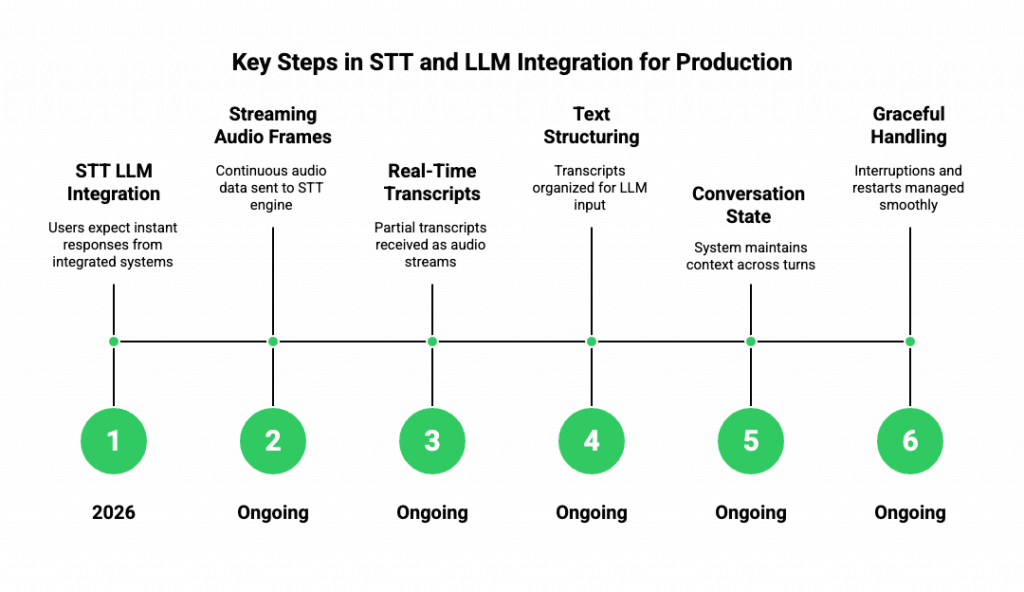

How Does STT And LLM Integration Work In Production Systems?

In production environments, STT and LLM integration must be fast, stable, and predictable. This becomes even more important as teams move toward STT LLM integration in 2026, where users expect instant responses.

Key Integration Steps:

- Streaming audio frames to the STT engine

- Receiving partial transcripts in real time

- Structuring text input for the LLM

- Maintaining conversation state across turns

- Handling interruptions and restarts gracefully

A strong voice recognition SDK supports:

- Word-level timestamps

- Confidence scoring

- Language detection

- Speaker separation (when required)

As a result, LLMs receive cleaner, structured input. This leads to better reasoning, fewer retries, and more stable AI behavior.

What Technical Challenges Do Teams Face When Building Voice Based AI?

Although voice AI looks simple on the surface, engineering teams face several challenges when building production systems.

Common Challenges Include:

- Variable network quality

- Background noise and echo

- Accents and speaking styles

- Concurrent session scaling

- Long-running voice connections

Additionally, voice workloads are continuous. Unlike HTTP requests, audio streams stay open for long periods. This increases infrastructure complexity.

Therefore, teams must treat voice recognition as a core system component, not an add-on feature.

Why Does Voice Recognition SDK Choice Matter More Than The AI Model?

Many teams focus heavily on selecting the best LLM. However, real-world deployments show that voice quality often matters more.

Even the most advanced AI model cannot fix:

- Missing words

- Delayed transcripts

- Broken audio streams

Because of this, a reliable voice recognition SDK improves:

- User satisfaction

- Task completion rates

- AI response consistency

- Overall system stability

In short, better voice input leads to better AI output.

Why Do Calling-First Platforms Fall Short For AI Voice Use Cases?

Many organizations initially rely on traditional cloud calling or IVR platforms to enable AI interactions. While these platforms excel at connecting calls and managing basic call flows, they often struggle when integrated with AI systems.

Key Limitations:

- No real-time streaming for AI – Audio is often processed in batches, delaying AI responses

- Limited context management – They do not maintain conversational state for multi-turn dialogues

- Vendor-locked integrations – Only compatible with proprietary AI tools or fixed speech engines

- Poor error handling – Packet loss, network jitter, or dropped connections can break the conversation

In contrast, a conversational AI SDK designed for modern voice agents addresses all these challenges by focusing on low-latency, real-time streaming, and robust integration.

Simply adding AI to a call isn’t enough. Teams need an infrastructure layer built for AI-first conversations.

How Does A Voice Infrastructure Layer Improve AI-Driven Interactions?

A dedicated voice infrastructure layer acts as the backbone for natural voice AI. It separates the voice transport from the AI logic, ensuring each component performs optimally.

Benefits Include:

- Low-latency streaming: Ensures AI can respond naturally without awkward pauses

- Session stability: Maintains long-running voice connections with failover handling

- Model-agnostic support: Integrates with any STT, TTS, or LLM engine

- Scalability: Handles hundreds or thousands of concurrent conversations

- Data integrity and security: Ensures sensitive conversations are encrypted and logged reliably

By decoupling voice infrastructure from AI, teams can focus on improving AI interaction accuracy without worrying about network issues or voice delivery.

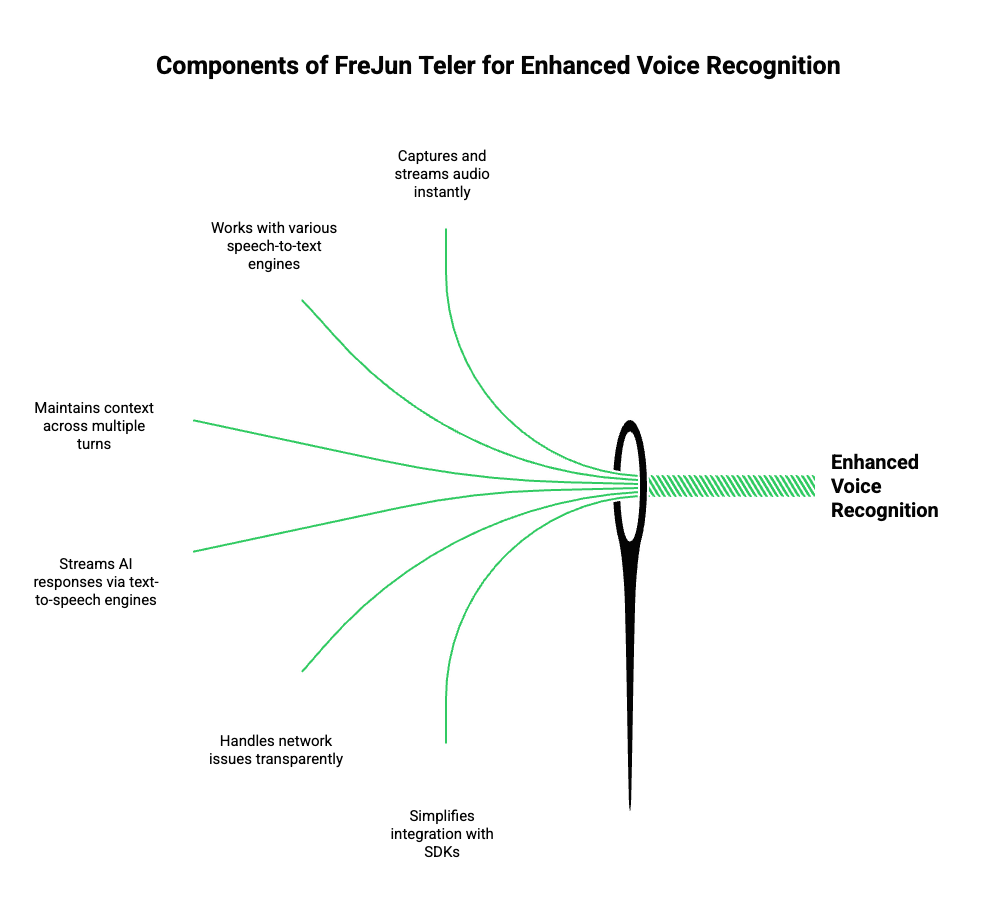

How Does FreJun Teler Enable Better Voice Recognition For AI Systems?

Here’s where FreJun Teler comes in. It is a global voice infrastructure platform designed specifically for AI agents and LLMs, supporting real-time, high-fidelity conversations.

How Teler Works:

- Real-Time Streaming: Captures audio from any inbound or outbound call and streams it to your AI system instantly

- STT Flexibility: Works with any speech-to-text engine, ensuring maximum accuracy

- LLM Integration: Supports STT LLM integration 2026, maintaining context across multiple turns

- TTS Compatibility: Streams AI responses back via any text-to-speech engine

- Resilient Infrastructure: Handles packet loss, jitter, and network variability transparently

- Developer Tools: Offers SDKs for web, mobile, and backend systems to simplify integration

Technical Advantages:

- Partial Transcript Streaming: Reduces response latency and enables dynamic interaction

- Context Management: Keeps conversation history for multi-turn dialogues

- Interrupt Handling: Supports natural back-and-forth without dropped words

- Scalable Architecture: Can manage thousands of concurrent AI voice agents worldwide

With Teler, building a natural voice AI system becomes a matter of connecting the right AI logic to a robust voice layer.

How Can Teams Implement Teler With Any LLM And Voice Stack?

Integrating Teler into an AI voice agent follows a simple but powerful architecture. This approach is vendor-neutral, allowing teams to use their preferred AI models, STT, and TTS engines.

Step-by-Step Flow:

- User Speaks: Audio is captured via Teler SDK in real time

- STT Processing: Audio is sent to any STT engine (cloud or custom)

- AI Decision: Text is passed to the LLM, which can call tools or query knowledge sources

- TTS Output: AI-generated text is converted to speech and streamed back via Teler

- Context Management: Multi-turn dialogue context is preserved for consistent responses

Implementation Tips:

- Use partial transcript streaming to reduce end-to-end latency

- Maintain a conversation buffer for context-sensitive AI

- Handle interruptions gracefully for realistic dialogue flow

- Monitor AI interaction accuracy with confidence thresholds

This approach ensures that voice recognition SDKs and AI models work together seamlessly to produce natural voice AI experiences.

What Use Cases Benefit Most From Voice Recognition SDKs?

Voice recognition SDKs, when paired with AI, unlock a wide range of use cases:

Inbound AI Agents:

- 24/7 customer support with multi-turn conversation

- Intelligent IVRs that understand natural language queries

- AI-powered receptionists for enterprise workflows

Outbound AI Agents:

- Personalized notifications and reminders

- Lead qualification calls using real-time AI

- Surveys and feedback collection with contextual responses

Enterprise Applications:

- AI-enabled help desks and operations support

- Global multilingual interactions

- Tools that combine AI reasoning with real-time voice

How Will Voice Recognition SDKs Shape AI Interactions In The Next Few Years?

As AI systems become more capable, voice recognition will define how humans and AI interact.

Trends to Watch:

- Real-time, interruptible AI agents that feel truly conversational

- Infrastructure-first architectures, separating AI logic from voice transport

- Cross-platform SDKs allowing consistent AI experiences across web, mobile, and telephony

- Multilingual, global voice AI capable of understanding accents, dialects, and context

By focusing on voice infrastructure and SDK design, organizations can future-proof their AI systems for 2026 and beyond.

What Are The Key Takeaways For Founders, PMs, And Engineers?

- Voice recognition SDKs are not optional: They are the foundation of AI interaction accuracy

- Latency and streaming matter more than raw AI power: Real-time partial transcripts enable natural conversations

- STT–LLM integration defines system reliability: Proper architecture ensures multi-turn dialogues succeed

- Infrastructure-first approach scales better: Decoupling voice delivery from AI logic prevents bottlenecks

- FreJun Teler simplifies implementation: Provides a reliable, scalable voice layer compatible with any AI stack

Final Thoughts

Voice recognition SDKs are the foundation of high-quality AI-driven interactions. By ensuring accurate, low-latency transcription and seamless integration with LLMs and TTS engines, they allow AI agents to understand intent, maintain context, and respond naturally. Traditional calling platforms often fail to meet these requirements, whereas a robust voice infrastructure layer solves latency, scaling, and reliability challenges. FreJun Teler provides a global, model-agnostic voice layer that connects any AI, STT, or TTS system, enabling developers to deploy production-grade AI voice agents efficiently. Teams can now focus on intelligence rather than infrastructure.

Schedule your demo today to experience how FreJun Teler makes AI voice agents seamless and reliable.

FAQs –

- What is a voice recognition SDK?

A toolkit for streaming, capturing, and processing real-time audio for AI applications, enabling accurate STT and natural conversation. - Why is low latency important for AI voice agents?

Low latency ensures responsive interactions, reduces awkward pauses, and maintains natural conversation flow in multi-turn AI dialogues. - Can Teler work with any LLM?

Yes, Teler is model-agnostic and supports seamless integration with all LLMs, preserving context across multiple conversation turns. - How does STT affect AI interaction accuracy?

Accurate speech-to-text prevents misinterpretation, ensures correct intent detection, and improves downstream AI responses and tool execution reliability. - Is real-time streaming necessary for voice AI?

Yes, it enables partial transcript updates, dynamic responses, and uninterrupted conversation, creating natural, human-like AI interactions. - Can I scale voice AI with many concurrent users?

Teler’s infrastructure supports thousands of simultaneous conversations while maintaining low latency and consistent transcription accuracy. - How do voice recognition SDKs handle background noise?

They incorporate noise suppression, speaker separation, and adaptive algorithms to maintain high transcription accuracy in real-world environments. - What is a multi-turn context in AI voice agents?

Maintaining conversation memory across turns allows AI to respond intelligently, track tasks, and provide context-aware interactions. - Can I integrate any TTS engine with Teler?

Yes, Teler streams audio from any TTS engine, enabling flexible, natural voice AI responses without modifying AI logic. - How fast can I deploy AI voice agents using Teler?

Teler’s SDKs and infrastructure allow developers to launch production-grade AI voice agents in days, not months.