Voice-based AI interactions are becoming a core part of how products and services engage users. As language models grow stronger and speech systems mature, teams are increasingly shifting from text-first bots to real-time voice agents. However, building reliable conversational voice systems involves much more than connecting an LLM to a speech model. It requires managing live audio streams, handling interruptions, and maintaining conversational flow across networks and devices. This is where voice chat SDKs emerge as a critical foundation.

Understanding how they work, what problems they solve, and how they fit into modern AI stacks is essential for any team planning to build scalable AI voice agents.

Why Are AI Voice Agents Suddenly Everywhere?

Over the last few years, AI has moved from experiments to production use. Initially, most AI systems lived inside chat interfaces. Today, however, voice is becoming the preferred interaction layer for many real-world use cases.

There are clear reasons for this shift.

First, speech is the most natural interface for humans. People speak faster than they type, and they expect immediate responses. As a result, businesses are now looking beyond chatbots and toward voice automation technology to handle support, sales, and operational calls.

Second, recent progress in large language models has improved reasoning, context handling, and response quality. At the same time, speech-to-text and text-to-speech systems have reached a level where real conversations are finally possible.

However, AI models alone did not make this transition happen. Instead, the missing piece was infrastructure that could reliably connect AI systems to live voice conversations. This is where voice chat SDKs entered the picture.

Because of this combination, AI voice agents are no longer limited to demos. They are now handling real customer calls at scale.

What Exactly Is An AI Voice Agent From A Technical Perspective?

Although AI voice agents may sound simple from the outside, their internal design is very structured. In fact, a modern voice agent is a system composed of several independent components that work together in real time.

At a high level, an AI voice agent includes:

- Speech-to-Text (STT): Converts live audio into text with minimal delay. For streaming transcription, Google Cloud recommends a 100 ms audio frame size as a tradeoff between latency and efficiency, which makes it a practical design point for production voice agents.

- Large Language Model (LLM): Understands intent, maintains context, and generates responses.

- Retrieval-Augmented Generation (RAG): Pulls information from documents, databases, or APIs.

- Tool Or Function Calling: Allows the agent to trigger actions such as bookings, ticket creation, or CRM updates.

- Text-to-Speech (TTS): Converts generated text into natural, streamed audio.
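To make the 100 ms frame size concrete, the sketch below splits raw audio into fixed-duration frames before they are streamed to an STT engine. It assumes 16 kHz, 16-bit, mono linear PCM, a common input format for speech recognition; the helper names `frame_bytes` and `frame_pcm` are illustrative, not part of any SDK.

```python
from typing import Iterator


def frame_bytes(sample_rate_hz: int, frame_ms: int, sample_width: int = 2, channels: int = 1) -> int:
    """Number of bytes in one frame of linear PCM audio."""
    samples_per_frame = sample_rate_hz * frame_ms // 1000
    return samples_per_frame * sample_width * channels


def frame_pcm(audio: bytes, sample_rate_hz: int = 16_000, frame_ms: int = 100) -> Iterator[bytes]:
    """Yield fixed-duration frames (assumes 16-bit mono PCM); a trailing partial frame is yielded as-is."""
    size = frame_bytes(sample_rate_hz, frame_ms)
    for start in range(0, len(audio), size):
        yield audio[start:start + size]


if __name__ == "__main__":
    one_second = bytes(32_000)  # 1 s of silence at 16 kHz, 16-bit mono
    print(frame_bytes(16_000, 100))        # 3200
    print(len(list(frame_pcm(one_second))))  # 10
```

At 16 kHz, each 100 ms frame carries 1,600 samples, or 3,200 bytes; at an 8 kHz telephony rate the same duration is 1,600 bytes.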

Despite this modular design, these components must behave like a single system. Therefore, coordination becomes critical. Audio cannot wait for slow processing, and responses cannot arrive out of order.

This is where infrastructure design matters more than individual models.

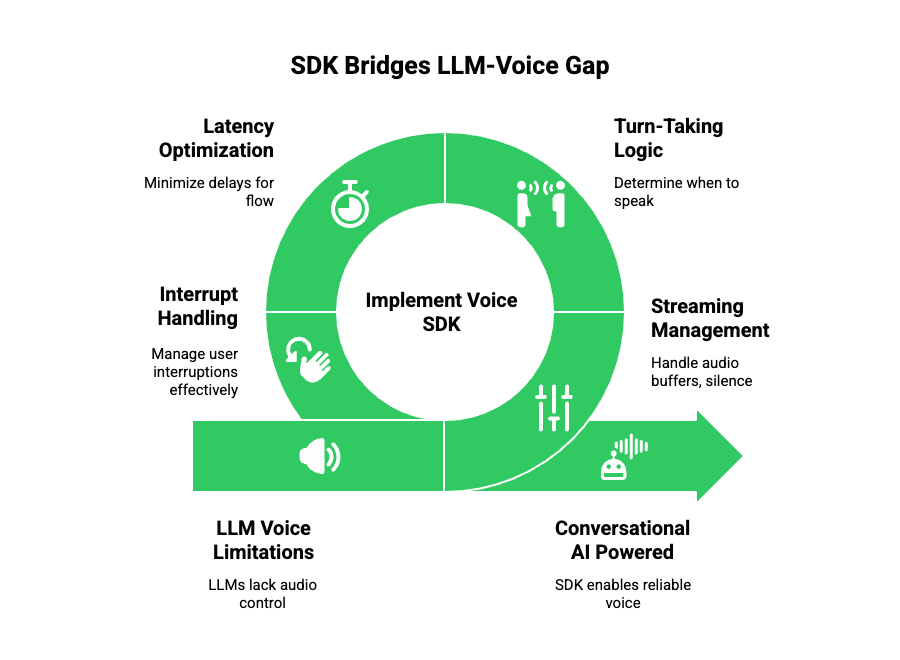

Why Can’t LLMs Alone Handle Voice Conversations?

At first glance, it may seem that an advanced LLM should be enough to power voice agents. However, this assumption breaks down quickly in real-world scenarios.

LLMs are designed for text processing. They are not built to manage live audio streams.

Because of that, several challenges appear immediately:

- No Native Streaming Control: LLMs do not manage audio buffers, silence detection, or speech boundaries.

- No Turn-Taking Logic: Voice conversations require knowing when to speak and when to listen.

- High Sensitivity To Latency: Even small delays can break conversational flow.

- Interrupt Handling Is External: Voice users interrupt often, and LLM APIs do not manage this behavior.
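Because an LLM never sees raw audio, something upstream has to decide whether each frame contains speech at all. The sketch below is a deliberately crude, energy-based voice activity check on little-endian 16-bit PCM frames. The threshold of 500 is an arbitrary assumption, and production systems generally rely on trained voice activity detection models rather than a fixed energy cutoff.

```python
import math
import struct


def rms_16bit(frame: bytes) -> float:
    """Root-mean-square amplitude of a little-endian 16-bit PCM frame."""
    count = len(frame) // 2
    if count == 0:
        return 0.0
    samples = struct.unpack(f"<{count}h", frame[: count * 2])
    return math.sqrt(sum(s * s for s in samples) / count)


def is_speech(frame: bytes, threshold: float = 500.0) -> bool:
    """Crude energy-based speech decision; the default threshold is an assumption."""
    return rms_16bit(frame) >= threshold
```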

As a result, teams quickly realize that a voice layer must sit between the AI and the user. That layer is responsible for timing, streaming, and audio reliability.

This is exactly the role that an SDK for conversational AI plays.

What Is A Voice Chat SDK And Why Is It Critical For AI Voice Agents?

A voice chat SDK is a software layer that manages real-time voice communication so AI systems do not have to.

In simple terms, it connects AI logic to live conversations.

More specifically, a voice chat SDK handles:

- Real-time audio capture and playback

- Bidirectional media streaming

- Call lifecycle events

- Network instability and jitter

- Device and platform differences

Because of this abstraction, developers can focus on AI behavior instead of telephony complexity.

Moreover, voice chat SDKs are essential for scalability. Handling a few test calls is easy. Handling thousands of simultaneous calls requires a different level of engineering.

That is why most production AI voice agents rely on an AI voice agent SDK rather than custom-built voice stacks.

How Do Voice Chat SDKs Enable Real-Time, Natural Conversations?

The biggest difference between scripted IVR systems and modern AI voice agents is conversation quality. People expect natural interaction, not rigid menus.

To achieve this, voice chat SDKs focus heavily on timing and flow.

Key technical responsibilities include:

Turn-Taking Coordination

- Detects when a caller stops speaking

- Avoids overlapping speech

- Determines when the AI should respond
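Turn-taking often comes down to one question: has the caller been quiet long enough to count as finished? The sketch below is a simple silence-timeout endpointer that consumes one speech/no-speech decision per frame. The 100 ms frame size and 700 ms silence threshold are assumptions for illustration, not values taken from any particular SDK.

```python
class EndOfTurnDetector:
    """Declares end of turn after enough consecutive silence following speech."""

    def __init__(self, frame_ms: int = 100, silence_ms: int = 700) -> None:
        self.frame_ms = frame_ms
        self.silence_ms = silence_ms  # assumed threshold; real systems tune this
        self.heard_speech = False
        self.silent_ms = 0

    def push(self, speech: bool) -> bool:
        """Feed one frame's speech decision; return True when the caller's turn ends."""
        if speech:
            self.heard_speech = True
            self.silent_ms = 0
            return False
        if not self.heard_speech:
            return False  # leading silence: the caller has not started talking yet
        self.silent_ms += self.frame_ms
        if self.silent_ms >= self.silence_ms:
            self.heard_speech = False  # reset for the next turn
            self.silent_ms = 0
            return True
        return False
```

A shorter threshold makes the agent feel snappier but risks cutting callers off mid-thought; a longer one feels safer but slower.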

Streaming-Based Responses

- TTS output is streamed, not generated fully upfront

- Responses begin playing within a few hundred milliseconds

- Long pauses are avoided
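Streaming typically means the agent does not wait for the complete LLM reply before speaking. One common approach is to forward text to TTS in sentence-sized pieces as tokens arrive. The sketch below assumes the LLM exposes its output as an iterable of text tokens and treats `.`, `?`, and `!` as sentence boundaries, a simplification that can misfire on abbreviations or decimals. The TTS call itself is left out.

```python
from typing import Iterable, Iterator

SENTENCE_END = (".", "?", "!")  # simplified boundary rule (assumption)


def sentence_chunks(tokens: Iterable[str]) -> Iterator[str]:
    """Group streamed LLM tokens into sentence-sized chunks for incremental TTS."""
    buffer = ""
    for token in tokens:
        buffer += token
        if buffer.rstrip().endswith(SENTENCE_END):
            yield buffer.strip()
            buffer = ""
    if buffer.strip():
        yield buffer.strip()  # flush a trailing partial sentence


if __name__ == "__main__":
    stream = ["Sure", ",", " your", " booking", " is", " confirmed", ".", " Anything", " else", "?"]
    for chunk in sentence_chunks(stream):
        print(chunk)  # each chunk could be handed to a streaming TTS engine right away
```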

Latency Budget Management

As a rough guide, a smooth interaction targets:

- STT latency under 300 ms

- AI processing under 500 ms

- TTS stream start under 200 ms

Voice SDKs coordinate these steps so the user experiences a single seamless exchange.

As a result, voice agents feel conversational rather than robotic.
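Added together, these targets give a budget of roughly one second from the end of the caller's speech to the first audible reply, assuming the stages run back to back. The sketch below checks measured per-stage latencies against those targets; the stage names and the sample measurements are hypothetical. In practice, streaming lets stages overlap, so the real end-to-end figure can be lower than the simple sum.

```python
from __future__ import annotations

# Per-stage targets from the list above, in milliseconds.
BUDGET_MS = {"stt": 300, "llm": 500, "tts_first_audio": 200}


def check_budget(measured_ms: dict[str, float]) -> list[str]:
    """Return the stages that exceeded their target; unmeasured stages are treated as 0 ms."""
    return [stage for stage, limit in BUDGET_MS.items() if measured_ms.get(stage, 0.0) > limit]


if __name__ == "__main__":
    print(f"end-to-end target: {sum(BUDGET_MS.values())} ms")  # end-to-end target: 1000 ms
    sample = {"stt": 250.0, "llm": 620.0, "tts_first_audio": 150.0}  # hypothetical measurements
    print(check_budget(sample))  # ['llm']
```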

What Technical Problems Do Voice Chat SDKs Solve At Scale?

When AI voice agents move into production, several non-obvious problems emerge. Fortunately, most of these are handled by next-gen voice platforms built around SDKs.

Audio-Related Problems

- Background noise

- Variable microphone quality

- Packet loss on unstable networks

SDKs stabilize audio streams and improve STT accuracy by maintaining consistent input quality.
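One common technique for coping with unstable networks is a jitter buffer: packets are held briefly, reordered by sequence number, and gaps are filled rather than stalling playback. The sketch below is a simplified version under stated assumptions: a fixed depth of 3 packets, silence as the concealment strategy, and 20 ms frames at 8 kHz. Real implementations, such as the one in WebRTC, adapt the buffer depth and use more sophisticated loss concealment.

```python
import heapq
from typing import List, Optional, Tuple


class JitterBuffer:
    """Reorders packets by sequence number and conceals gaps with silence."""

    def __init__(self, depth: int = 3, silence: bytes = b"\x00" * 320) -> None:
        self.depth = depth      # packets to hold before playout starts (assumed)
        self.silence = silence  # filler for lost packets (assumed 20 ms at 8 kHz, 16-bit mono)
        self.heap: List[Tuple[int, bytes]] = []
        self.next_seq: Optional[int] = None

    def push(self, seq: int, payload: bytes) -> None:
        if self.next_seq is not None and seq < self.next_seq:
            return  # arrived too late: already played or concealed
        heapq.heappush(self.heap, (seq, payload))

    def pop(self) -> Optional[bytes]:
        """Return the next frame to play, or None while still filling the buffer."""
        if self.next_seq is None:
            if len(self.heap) < self.depth:
                return None
            self.next_seq = self.heap[0][0]
        while self.heap and self.heap[0][0] < self.next_seq:
            heapq.heappop(self.heap)  # discard duplicates of already-played packets
        if self.heap and self.heap[0][0] == self.next_seq:
            _, payload = heapq.heappop(self.heap)
        else:
            payload = self.silence  # packet missing: conceal instead of stalling
        self.next_seq += 1
        return payload
```

In a real media pipeline, `pop` would be called on a fixed playout clock (for example, every 20 ms), so a lost packet costs a brief gap instead of a frozen stream.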

Conversation-Level Problems

- Users interrupt mid-response

- Silence needs to be interpreted correctly

- Context must not reset unexpectedly

Voice chat SDKs keep the session alive and surface events such as speech, silence, and interruptions so the AI backend can respond appropriately.
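Interruptions ("barge-in") are commonly handled by cancelling in-flight playback as soon as caller speech is detected, then handing the turn back to the caller. The sketch below uses Python's asyncio. `play_response` is a hypothetical stand-in for an SDK's playback API, and a timed sleep simulates the speech-detected event that a real voice layer would emit.

```python
import asyncio
from typing import List


async def play_response(chunks: List[str], played: List[str]) -> None:
    """Hypothetical stand-in for streaming TTS audio to the caller, chunk by chunk."""
    for chunk in chunks:
        played.append(chunk)
        await asyncio.sleep(0.05)  # simulated playback time per chunk


async def handle_turn(chunks: List[str], barge_in_after: float) -> List[str]:
    """Play a response, but stop as soon as the caller starts speaking."""
    played: List[str] = []
    playback = asyncio.create_task(play_response(chunks, played))
    caller_spoke = asyncio.create_task(asyncio.sleep(barge_in_after))  # simulated speech event
    done, _ = await asyncio.wait({playback, caller_spoke}, return_when=asyncio.FIRST_COMPLETED)
    if caller_spoke in done and not playback.done():
        playback.cancel()  # barge-in: stop talking immediately
        try:
            await playback
        except asyncio.CancelledError:
            pass
    caller_spoke.cancel()
    return played


if __name__ == "__main__":
    print(asyncio.run(handle_turn(["Your order", "shipped on", "Monday", "and will"], barge_in_after=0.12)))
```

The list of chunks actually played is returned so the backend can record what the caller heard before interrupting, which matters when deciding how to continue.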

Infrastructure-Level Problems

- Concurrent call scaling

- Regional latency differences

- Failover and resilience

Without SDKs, each of these would require custom engineering. Together, these capabilities form the backbone of voice automation technology.
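Part of concurrent call scaling is admission control: each live session holds resources such as a media stream and STT and TTS connections, so a single worker usually caps how many calls it serves at once, and capacity grows by adding workers. The sketch below shows the per-worker cap with `asyncio.Semaphore`. The `SessionLimiter` class, the limit of 2, and the simulated call durations are illustrative assumptions.

```python
import asyncio


class SessionLimiter:
    """Caps concurrent voice sessions on one worker; extra calls wait for a free slot."""

    def __init__(self, max_sessions: int) -> None:
        self._slots = asyncio.Semaphore(max_sessions)
        self.active = 0
        self.peak = 0

    async def run_session(self, call_id: str, duration_s: float) -> str:
        async with self._slots:
            self.active += 1
            self.peak = max(self.peak, self.active)
            try:
                await asyncio.sleep(duration_s)  # stand-in for streaming a live call
            finally:
                self.active -= 1
        return call_id


async def simulate_calls() -> SessionLimiter:
    limiter = SessionLimiter(max_sessions=2)
    await asyncio.gather(*(limiter.run_session(f"call-{i}", 0.01) for i in range(5)))
    return limiter


if __name__ == "__main__":
    result = asyncio.run(simulate_calls())
    print(result.peak)  # 2
```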

How Can Teams Combine Any LLM And Any STT Or TTS Using A Voice SDK?

One of the most important architectural advantages of voice chat SDKs is model flexibility.

A well-designed SDK does not force teams into a single AI provider. Instead, it acts as a neutral transport layer.

Because of this:

- LLMs can be swapped without changing voice logic

- STT and TTS engines can be optimized independently

- RAG systems can evolve alongside business needs

This separation is crucial for product teams. It allows rapid experimentation without breaking the voice experience.

As a result, many modern teams now design voice agents as loosely coupled systems, with the SDK acting as the stable core.
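The "neutral transport layer" idea can be expressed as narrow interfaces: the pipeline depends only on abstract STT, LLM, and TTS roles, so a provider can be swapped without touching the rest of the system. The sketch below uses `typing.Protocol`. The interface names and the fake providers are hypothetical, not any vendor's API, and real pipelines would stream rather than process whole utterances synchronously.

```python
from typing import Protocol


class SpeechToText(Protocol):
    def transcribe(self, audio: bytes) -> str: ...


class LanguageModel(Protocol):
    def reply(self, text: str) -> str: ...


class TextToSpeech(Protocol):
    def synthesize(self, text: str) -> bytes: ...


class VoicePipeline:
    """Depends only on the three roles, never on a specific vendor."""

    def __init__(self, stt: SpeechToText, llm: LanguageModel, tts: TextToSpeech) -> None:
        self.stt, self.llm, self.tts = stt, llm, tts

    def handle(self, audio: bytes) -> bytes:
        return self.tts.synthesize(self.llm.reply(self.stt.transcribe(audio)))


# Hypothetical fake providers for local testing; real ones would wrap vendor SDKs.
class FakeSTT:
    def transcribe(self, audio: bytes) -> str:
        return audio.decode("utf-8")


class UppercaseLLM:
    def reply(self, text: str) -> str:
        return text.upper()


class FakeTTS:
    def synthesize(self, text: str) -> bytes:
        return text.encode("utf-8")
```

For example, `VoicePipeline(FakeSTT(), UppercaseLLM(), FakeTTS()).handle(b"hello")` returns `b"HELLO"`; replacing `UppercaseLLM` with any class that has a matching `reply` method requires no change to `VoicePipeline`.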

Where Does Conversational Context Live In Voice AI Systems?

Despite common assumptions, conversational context does not live inside the voice SDK.

Instead:

- The SDK maintains a persistent connection

- The backend manages conversation state and memory

This separation is intentional.

Voice SDKs ensure that the same session remains intact across turns. Meanwhile, the AI backend tracks context, user data, and decision history.

Because of this design, long conversations remain coherent, even when they involve complex logic or external tools.
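With this split, the backend typically keys conversation state by a session or call identifier that the voice layer supplies. The sketch below is a minimal in-memory version; the `call_id` naming and the role/content message format are assumptions. A production system would usually keep this state in a shared store so that any backend worker can serve the next turn.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Per-call memory kept by the AI backend, not by the voice SDK."""
    messages: list[dict[str, str]] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        self.messages.append({"role": role, "content": content})


class ConversationStore:
    def __init__(self) -> None:
        self._by_call: defaultdict[str, Conversation] = defaultdict(Conversation)

    def turn(self, call_id: str, user_text: str, assistant_text: str) -> Conversation:
        """Record one user/assistant exchange for the given call."""
        convo = self._by_call[call_id]
        convo.add("user", user_text)
        convo.add("assistant", assistant_text)
        return convo

    def end_call(self, call_id: str) -> None:
        self._by_call.pop(call_id, None)  # discard memory when the call ends
```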

How Is FreJun Teler Designed For AI-First Voice Architectures?

Until now, we have discussed voice chat SDKs without naming specific platforms. This distinction matters because not all voice platforms are built with AI as the primary use case.

FreJun Teler is designed specifically for AI-first voice systems. Rather than focusing on calling workflows or static call logic, it focuses on acting as a real-time voice transport layer for AI agents.

From a technical perspective, Teler handles:

- Real-time audio streaming for inbound and outbound calls

- Stable, low-latency bidirectional media pipelines

- Global voice connectivity across VoIP and telephony networks

- Session-level reliability required for AI conversations

As a result, engineering teams can treat voice as infrastructure instead of application logic. Meanwhile, AI systems operate independently without being tightly coupled to telephony behavior.

This separation simplifies system design and reduces long-term maintenance risk.

How Does FreJun Teler Fit Into A Modern AI Voice Agent Stack?

To understand Teler’s role clearly, it helps to look at the full system flow.

End-To-End Voice Agent Flow

- A user places or receives a phone call

- FreJun Teler streams live audio in real time

- Audio is forwarded to the AI backend

- Speech-to-text converts audio into text

- The LLM processes intent and context

- Tools or RAG systems are invoked if required

- Text-to-speech generates response audio

- Teler streams audio back to the caller
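The split between transport and logic in this flow can be sketched with two queues: the transport side only moves audio frames in and out, while the backend decides what to say. The sketch below is hypothetical and does not model FreJun Teler's actual API. For brevity it answers every inbound frame, whereas a real backend would buffer audio until the end of the caller's turn before running STT, the LLM, tools or RAG, and TTS.

```python
from __future__ import annotations

import asyncio
from typing import Callable, Optional


async def backend(inbound: asyncio.Queue, outbound: asyncio.Queue,
                  respond: Callable[[bytes], bytes]) -> None:
    """AI side: consumes caller audio, produces response audio."""
    while True:
        frame: Optional[bytes] = await inbound.get()
        if frame is None:  # transport signalled hang-up
            await outbound.put(None)
            return
        await outbound.put(respond(frame))  # STT -> LLM/tools/RAG -> TTS would run here


async def simulated_call(frames: list[bytes], respond: Callable[[bytes], bytes]) -> list[bytes]:
    """Transport side: forwards audio both ways without interpreting it."""
    inbound: asyncio.Queue = asyncio.Queue()
    outbound: asyncio.Queue = asyncio.Queue()
    worker = asyncio.create_task(backend(inbound, outbound, respond))
    for frame in frames:
        await inbound.put(frame)
    await inbound.put(None)
    played: list[bytes] = []
    while (out := await outbound.get()) is not None:
        played.append(out)  # would be streamed back to the caller
    await worker
    return played


if __name__ == "__main__":
    print(asyncio.run(simulated_call([b"hi", b"there"], lambda f: f.upper())))
```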

Throughout this entire process, Teler never interprets conversation logic. Instead, it focuses on audio transport, latency control, and call stability.

Because of this design, teams can plug in:

- Any LLM

- Any STT engine

- Any TTS model

- Any RAG or tool-calling setup

This modularity is essential when building next-gen voice platforms that must evolve over time.

Why Are Traditional Calling Platforms Struggling With AI Voice Agents?

Traditional calling platforms were built for humans, not for AI systems. As a result, several limitations appear when they are used for conversational AI.

Key Limitations Of Legacy Platforms

| Area | Traditional Platforms | AI-First SDKs |
| --- | --- | --- |
| Audio Streaming | Buffered, delayed | Real-time, low latency |
| Turn Handling | Limited | Continuous, adaptive |
| Model Flexibility | Restricted | Fully model-agnostic |
| Context Support | Minimal | Backend-driven |
| Scalability | Call-centric | Session-centric |

Because these platforms focus on call flows rather than conversations, developers often end up building complex workarounds.

In contrast, an AI voice agent SDK treats every call as a live conversation pipeline. As a result, it aligns better with how AI systems operate.

What Real-World Use Cases Are Driving Voice Chat SDK Adoption?

While the technology is complex, the business use cases are clear. Voice chat SDKs are now being used across multiple industries.

Common Use Cases

- Inbound AI Receptionists: Handle repeat queries, appointments, and routing.

- AI-Powered Support Agents: Resolve common issues without human escalation.

- Outbound Voice Automation: Run personalized reminders, follow-ups, and qualification calls.

- Agent Assistance: Provide real-time prompts and summaries to human agents.

Across all of these scenarios, voice automation technology reduces wait times and operational cost. At the same time, it improves consistency and availability.

What Should Founders And Engineering Leaders Look For In A Voice Chat SDK?

Choosing a voice chat SDK is not just a technical decision. It is also a long-term platform decision.

When evaluating options, teams should consider:

Technical Criteria

- Real-time streaming capability

- End-to-end latency guarantees

- SDK maturity and documentation

- Observability and logging support

Product-Level Criteria

- Model and provider flexibility

- Global voice coverage

- Scalability under concurrent load

Operational Criteria

- Reliability and uptime

- Security controls

- Support during integration

Because voice infrastructure becomes deeply embedded, switching later can be expensive. Therefore, early evaluation is critical.

How Are Voice Chat SDKs Shaping The Future Of Conversational AI?

Looking ahead, voice is becoming the default interface for AI systems. Screens are optional, but speech is universal.

As this shift continues:

- IVRs will be replaced by conversational agents

- Scripts will be replaced by reasoning

- Static flows will give way to adaptive dialogue

In this future, SDKs for conversational AI play the same role that web frameworks played for applications. They standardize complexity while enabling innovation.

FreJun Teler fits naturally into this future by focusing on the voice layer while letting AI systems evolve independently.

Final Thoughts

Voice AI has moved far beyond experimentation and pilots. Today, it is becoming foundational infrastructure for how modern businesses communicate, support users, and scale operations. However, deploying successful AI voice agents requires more than capable language models. Teams must solve for real-time audio streaming, latency control, conversational flow, and reliable voice transport at scale. This is precisely where voice chat SDKs play a critical role, turning complex voice automation into structured, production-ready systems.

For founders, product managers, and engineering teams building with LLMs today, choosing the right voice infrastructure determines whether an idea remains a prototype or becomes a dependable platform. FreJun Teler provides the real-time voice layer that allows AI agents to speak, listen, and scale globally with confidence.

Schedule a demo to see how FreJun Teler powers production-grade AI voice agents.

FAQs

- What is a voice chat SDK?

A voice chat SDK manages real-time audio streaming so AI systems can hold natural voice conversations without handling telephony logic.

- How is an AI voice agent different from an IVR?

AI voice agents understand intent, manage context, and respond dynamically, unlike IVRs that follow fixed scripts and menus.

- Can I use any LLM with a voice chat SDK?

Generally, yes, if the SDK is model-agnostic. Because such an SDK only transports audio, it can work with any LLM, STT, or TTS provider.

- Why is low latency important for voice agents?

Even small delays disrupt conversational flow, making interactions feel unnatural or broken to end users.

- Does a voice chat SDK manage conversation logic?

No. The SDK handles voice transport; conversation logic and context live in your AI backend.

- How do voice agents handle user interruptions?

Voice SDKs detect speech activity and streaming signals, allowing AI systems to pause, stop, or adapt responses.

- Are voice chat SDKs scalable for enterprise use?

Yes. They are designed to handle thousands of concurrent real-time voice sessions reliably.

- What industries benefit most from AI voice agents?

Customer support, sales, healthcare, logistics, fintech, and appointment-based services see strong value from voice automation.

- Is WebRTC commonly used in voice chat SDKs?

Yes. WebRTC enables low-latency, real-time audio streaming and is a core technology in modern voice SDKs.

- Where does FreJun Teler fit in the AI voice stack?

FreJun Teler provides the real-time voice infrastructure layer connecting AI systems with global telephony networks.