Turning your chatbot into a real-time voice assistant is the next step in conversational AI, but it’s also one of the hardest. Voice is not just text with sound: it requires low-latency streaming, telephony integration, and complex infrastructure. This guide is for developers who want to launch a production-grade Voice Assistant Chatbot on top of an existing chatbot API. With FreJun as your voice transport layer, you get reliable call streaming and media handling, so you can focus on building the logic, not the plumbing.

Table of contents

- The Next Frontier for AI: Moving from Text to Real-Time Voice

- The Hidden Hurdle: Why Building Voice Infrastructure is an Engineering Nightmare

- Introducing FreJun AI: The Voice Transport Layer for Your AI

- Anatomy of a Modern Voice AI Stack

- FreJun AI vs. In-House Infrastructure: A Developer’s Comparison

- Best Practices for Developing a Production-Grade Voice Assistant Chatbot

- Final Thoughts: Building a Truly Conversational Experience with FreJun

- Frequently Asked Questions

The Next Frontier for AI: Moving from Text to Real-Time Voice

Your organization has developed a sophisticated AI. It’s been trained on your data, understands your business logic, and can resolve complex user queries through a text-based chat interface. The next logical step is to give it a voice: to turn it from a chatbot into a fully fledged voice agent that can handle real-time phone conversations.

This is where many development roadmaps hit a wall. The transition from text to voice is not a simple feature update; it’s an entirely new engineering domain. A Voice Assistant Chatbot that feels natural, responsive, and intelligent demands a robust, low-latency infrastructure that can handle the complexities of real-time audio streaming and telephony. Developers are experts at building AI logic, but they are often unprepared for the unique challenges of building and maintaining the voice plumbing required to make that AI talk.

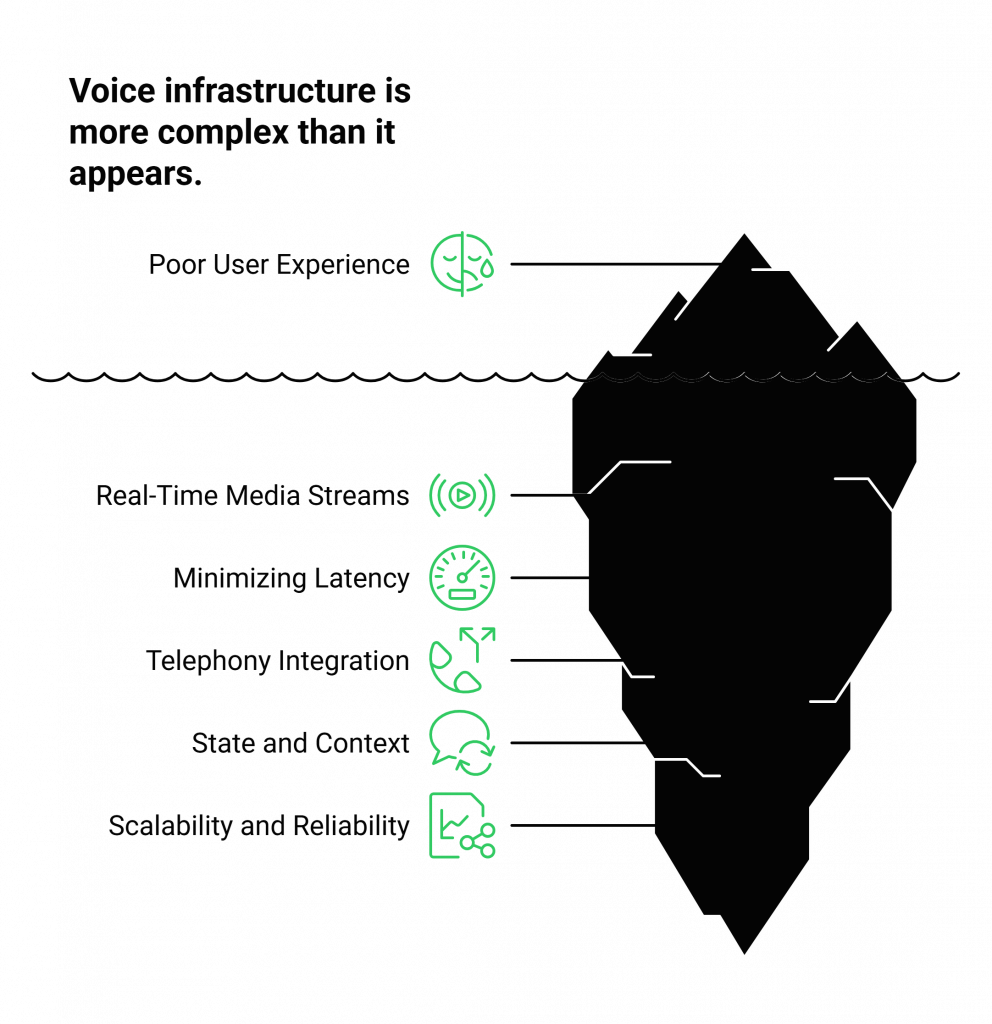

The Hidden Hurdle: Why Building Voice Infrastructure is an Engineering Nightmare

On the surface, creating a voice agent seems straightforward: capture user audio, send it to a Speech-to-Text (STT) service, process the text with a Large Language Model (LLM), generate a response, and play it back using a Text-to-Speech (TTS) service. However, the reality is a tangled web of infrastructure challenges that can derail projects, inflate budgets, and lead to a poor user experience.
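The following Python sketch shows a single conversational turn of that loop. The `VoicePipeline` class and the `fake_stt`, `fake_llm`, and `fake_tts` stubs are hypothetical placeholders. They are not FreJun or vendor APIs. In a real system, each stub would wrap the SDK of the STT, LLM, or TTS provider you choose.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Function types for the three stages; swap in real provider clients.
Transcriber = Callable[[bytes], str]      # STT: raw audio -> text
Responder = Callable[[List[dict]], str]   # LLM: chat history -> reply text
Synthesizer = Callable[[str], bytes]      # TTS: text -> raw audio


@dataclass
class VoicePipeline:
    """Hypothetical turn runner: STT -> LLM -> TTS, with the history kept in memory."""
    transcribe: Transcriber
    generate_reply: Responder
    synthesize: Synthesizer
    history: List[dict] = field(default_factory=list)

    def handle_turn(self, user_audio: bytes) -> bytes:
        """Run one conversational turn and return the audio to play back."""
        user_text = self.transcribe(user_audio)
        self.history.append({"role": "user", "content": user_text})
        reply_text = self.generate_reply(self.history)
        self.history.append({"role": "assistant", "content": reply_text})
        return self.synthesize(reply_text)


# Hypothetical stub providers so the sketch runs without any external service.
def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")  # pretend the "audio" is already text


def fake_llm(history: List[dict]) -> str:
    return f"You said: {history[-1]['content']}"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


pipeline = VoicePipeline(fake_stt, fake_llm, fake_tts)
print(pipeline.handle_turn(b"where is my order"))  # b'You said: where is my order'
```

Each stage sits behind a plain function type. That separation lets you swap one provider for another without rewriting the turn logic.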

Developers who attempt to build this layer from scratch quickly discover how difficult it is:

- Managing Real-Time Media Streams: Capturing and transmitting audio from a live phone call is not like handling a simple data request. It requires a persistent, low-latency connection (often via WebSockets) that can stream raw audio data reliably without dropouts or delays.

- Minimizing Latency: The single biggest killer of a conversational AI is latency. Awkward pauses between a user speaking and the AI responding break the flow and frustrate users. Optimizing the entire stack, from audio capture to AI processing to voice playback, to shave off every possible millisecond is a monumental task.

- Integrating with Telephony: Connecting your application to the global telephone network (PSTN) involves complex protocols, carrier negotiations, and regulatory compliance.

- Maintaining State and Context: During a live call, your application needs to manage the dialogue state perfectly. The underlying connection must be stable enough to allow your backend to track context, handle interruptions, and manage the conversational flow without losing its place.

- Scalability and Reliability: A proof-of-concept that works for a single call can crumble under the pressure of hundreds or thousands of concurrent calls.
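Real-time media streams usually carry audio as small, fixed-duration frames rather than one large file. The sketch below shows the frame-size arithmetic and a simple chunker. It assumes 16-bit linear PCM, mono, at 8 kHz, with 20 ms frames. These are common telephony values, but they are assumptions here. Check which encoding and sample rate your transport actually delivers; G.711 μ-law, for example, uses one byte per sample.

```python
from typing import Iterator

SAMPLE_RATE_HZ = 8_000   # assumption: narrowband telephony audio
BYTES_PER_SAMPLE = 2     # assumption: 16-bit linear PCM, mono
FRAME_MS = 20            # assumption: 20 ms frames, a common real-time choice


def frame_size_bytes(sample_rate_hz: int = SAMPLE_RATE_HZ,
                     bytes_per_sample: int = BYTES_PER_SAMPLE,
                     frame_ms: int = FRAME_MS) -> int:
    """Bytes in one frame: samples per frame times bytes per sample."""
    samples_per_frame = sample_rate_hz * frame_ms // 1000
    return samples_per_frame * bytes_per_sample


def iter_frames(pcm: bytes, frame_bytes: int) -> Iterator[bytes]:
    """Split a PCM buffer into fixed-size frames, zero-padding the final one."""
    for start in range(0, len(pcm), frame_bytes):
        frame = pcm[start:start + frame_bytes]
        if len(frame) < frame_bytes:
            frame += b"\x00" * (frame_bytes - len(frame))  # pad with silence
        yield frame


size = frame_size_bytes()
print(size)  # 320: 160 samples x 2 bytes per 20 ms frame
one_second = b"\x01\x00" * SAMPLE_RATE_HZ  # 1 s of constant-valued samples
print(len(list(iter_frames(one_second, size))))  # 50 frames per second
```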


Introducing FreJun AI: The Voice Transport Layer for Your AI

This is precisely the problem FreJun AI was built to solve. We believe that developers should focus on building the best possible AI, not on the intricacies of voice infrastructure. FreJun AI is a developer-first platform that provides a robust, real-time voice transport layer, abstracting away the complexity of telephony and media streaming.

Our core value proposition is simple: We handle the complex voice infrastructure so you can focus on building your AI.

FreJun AI is not another LLM or a closed-off chatbot builder. FreJun’s API is model-agnostic. This means you bring your own AI. Whether you use OpenAI, Google Dialogflow, a custom-trained model for STT, or a premium voice from ElevenLabs for TTS, FreJun serves as the reliable, high-speed conduit that connects your AI services to any phone call. You maintain complete control over your AI stack, while we ensure the conversation is delivered with speed and clarity.

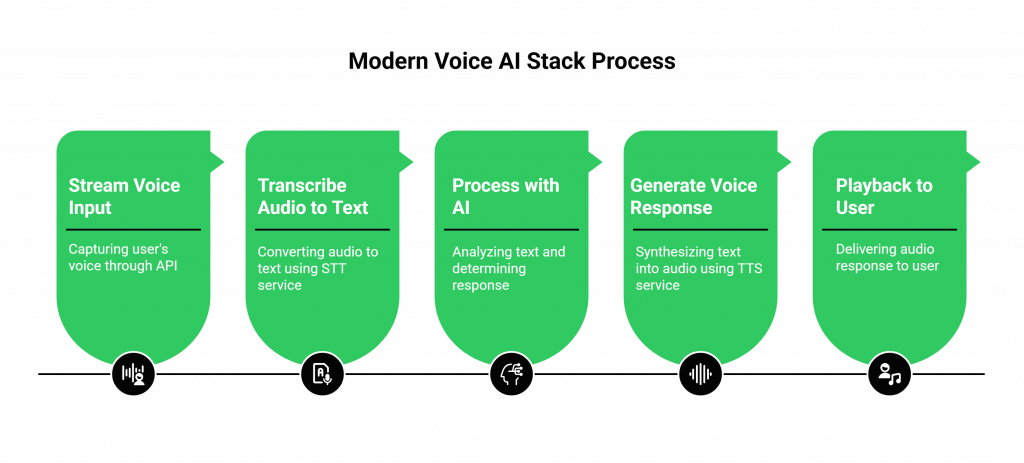

Anatomy of a Modern Voice AI Stack

With FreJun AI as the foundation, building a powerful AI voice chatbot assistant becomes a clear, three-step process. Our architecture is designed to give you maximum control over your AI logic while we manage the call itself.

Step 1: Stream Voice Input

It all starts with capturing the user’s voice. When a call is initiated, either inbound to a number you provision through FreJun or outbound via our API, we establish a real-time, low-latency audio stream. Our API captures every word clearly and pipes the raw audio data directly to the endpoint you specify. This is where you connect your chosen STT service to transcribe the user’s speech into text.
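Before audio reaches your STT service, your backend often needs to decide when the caller has finished speaking. This is called endpointing. Many STT providers offer endpointing of their own. The sketch below is a simple energy-based alternative that assumes 16-bit little-endian PCM frames. The `Endpointer` class is hypothetical, and the threshold and silence length are illustrative values you would tune on real call audio.

```python
import array
import math
import sys
from typing import List, Optional


def frame_rms(frame: bytes) -> float:
    """Root-mean-square energy of a 16-bit little-endian PCM frame."""
    samples = array.array("h")
    samples.frombytes(frame)
    if sys.byteorder == "big":
        samples.byteswap()  # wire audio is assumed little-endian
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


class Endpointer:
    """Hypothetical helper: buffers speech and returns it after enough trailing silence."""

    def __init__(self, threshold: float = 500.0, silence_frames: int = 25):
        self.threshold = threshold            # illustrative RMS level separating speech from silence
        self.silence_frames = silence_frames  # 25 x 20 ms frames = 500 ms (assumed frame size)
        self._buffer: List[bytes] = []
        self._silent_run = 0

    def push(self, frame: bytes) -> Optional[bytes]:
        """Feed one frame; return the whole utterance once the caller pauses."""
        if frame_rms(frame) >= self.threshold:
            self._buffer.append(frame)
            self._silent_run = 0
            return None
        if not self._buffer:
            return None  # still waiting for speech to start
        self._buffer.append(frame)  # keep short pauses inside the utterance
        self._silent_run += 1
        if self._silent_run >= self.silence_frames:
            utterance = b"".join(self._buffer)
            self._buffer.clear()
            self._silent_run = 0
            return utterance
        return None


speech = (2000).to_bytes(2, "little", signed=True) * 160  # one loud 20 ms frame
silence = bytes(320)                                       # one silent 20 ms frame
ep = Endpointer(silence_frames=3)
for frame in [speech, speech, silence, silence, silence]:
    utterance = ep.push(frame)
print(len(utterance))  # 1600: two speech frames plus three trailing silent frames
```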

Step 2: Process with Your AI

Once the audio is transcribed, the text is sent to your AI brain. This is your domain. You use your preferred LLM or NLP platform to understand the user’s intent, access backend systems or APIs for information (like checking an order status), and decide on the appropriate response. Throughout this process, FreJun maintains the stable call connection, acting as a reliable transport layer. Your application retains full control over the dialogue state, allowing you to manage complex, multi-turn conversations.
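The sketch below shows one way your backend might carry state across turns while resolving the order-status example above. The keyword match stands in for real LLM or NLP intent detection. `lookup_order_status`, `FAKE_ORDERS`, and the five-digit order-number format are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, Optional

# Hypothetical backend data and lookup; in production this would call your order API.
FAKE_ORDERS: Dict[str, str] = {"12345": "shipped"}


def lookup_order_status(order_id: str) -> Optional[str]:
    return FAKE_ORDERS.get(order_id)


@dataclass
class DialogueState:
    intent: Optional[str] = None    # what the caller is trying to do
    order_id: Optional[str] = None  # slot collected across turns


def handle_turn(state: DialogueState, text: str,
                lookup: Callable[[str], Optional[str]] = lookup_order_status) -> str:
    """Update the dialogue state with one transcribed utterance and return a reply."""
    if "order" in text.lower() and state.intent is None:
        state.intent = "order_status"  # keyword match stands in for real intent detection
    match = re.search(r"\b\d{5}\b", text)  # hypothetical five-digit order numbers
    if match:
        state.order_id = match.group()

    if state.intent != "order_status":
        return "How can I help you today?"
    if state.order_id is None:
        return "Sure. What is your order number?"
    order_id = state.order_id
    state.intent, state.order_id = None, None  # task complete; reset for the next request
    status = lookup(order_id)
    if status is None:
        return f"I couldn't find order {order_id}."
    return f"Order {order_id} has {status}."


state = DialogueState()
print(handle_turn(state, "I want to check my order"))  # Sure. What is your order number?
print(handle_turn(state, "It's 12345"))                # Order 12345 has shipped.
```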

Step 3: Generate the Voice Response

After your AI formulates a text response, you pipe it to your chosen TTS service to synthesize the audio. The final step is to stream that generated audio back to the FreJun API. We handle the low-latency playback to the user over the live call, completing the conversational loop seamlessly. This entire round trip is optimized to minimize delay, creating a natural and fluid interaction.
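When you stream synthesized audio back, you can send it in frames at roughly real-time pace instead of all at once. This keeps your side responsive if you later need to stop playback mid-sentence. The sketch assumes 20 ms frames of 8 kHz, 16-bit mono PCM, which works out to 320 bytes each. `send` is a hypothetical coroutine standing in for whatever call writes a frame to your media stream. Check FreJun's documentation to confirm whether it expects paced frames or buffers them for you.

```python
import asyncio
from typing import Awaitable, Callable, List

FRAME_BYTES = 320      # assumption: 20 ms of 8 kHz, 16-bit mono PCM
FRAME_SECONDS = 0.020


async def stream_audio(audio: bytes,
                       send: Callable[[bytes], Awaitable[None]],
                       frame_bytes: int = FRAME_BYTES,
                       frame_seconds: float = FRAME_SECONDS) -> int:
    """Send audio in fixed-size frames at roughly real-time pace; return frames sent."""
    loop = asyncio.get_running_loop()
    start = loop.time()
    sent = 0
    for offset in range(0, len(audio), frame_bytes):
        await send(audio[offset:offset + frame_bytes])
        sent += 1
        # Wait until the next frame is due, measured from the start so drift does not accumulate.
        delay = start + sent * frame_seconds - loop.time()
        if delay > 0:
            await asyncio.sleep(delay)
    return sent


async def demo() -> List[bytes]:
    received: List[bytes] = []

    async def fake_send(frame: bytes) -> None:  # hypothetical stand-in for the media-stream write
        received.append(frame)

    await stream_audio(bytes(320 * 5), fake_send)  # 100 ms of silence
    return received


frames = asyncio.run(demo())
print(len(frames))  # 5
```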


FreJun AI vs. In-House Infrastructure: A Developer’s Comparison

When deciding how to voice-enable your AI, the choice is between building the infrastructure yourself or leveraging a dedicated platform. The following table illustrates why partnering with FreJun is the more strategic choice.

| Feature / Consideration | Building In-House (DIY) | Using FreJun AI’s Transport Layer |
| --- | --- | --- |
| Initial Setup Time | Months of development, integration, and testing. | Days. Get started immediately with our API & SDKs. |
| Latency Management | A constant, complex battle across the entire stack. | Engineered from the ground up for low-latency conversations. |
| Telephony Integration | Requires deep PSTN knowledge and carrier management. | Fully managed. Instantly provision numbers and manage calls via API. |
| Developer Focus | Divided between AI logic and infrastructure maintenance. | 100% focused on AI logic, context management, and user experience. |
| Scalability | Requires significant investment in redundant, geo-distributed servers. | Built on resilient, geographically distributed infrastructure for high availability. |
| Maintenance Overhead | Ongoing efforts to manage servers, security, and carrier relations. | Zero infrastructure maintenance. We handle all of it. |
| Model Flexibility | Locked into the models and services you initially integrate. | Model-agnostic. Swap STT, LLM, or TTS providers via API at any time. |

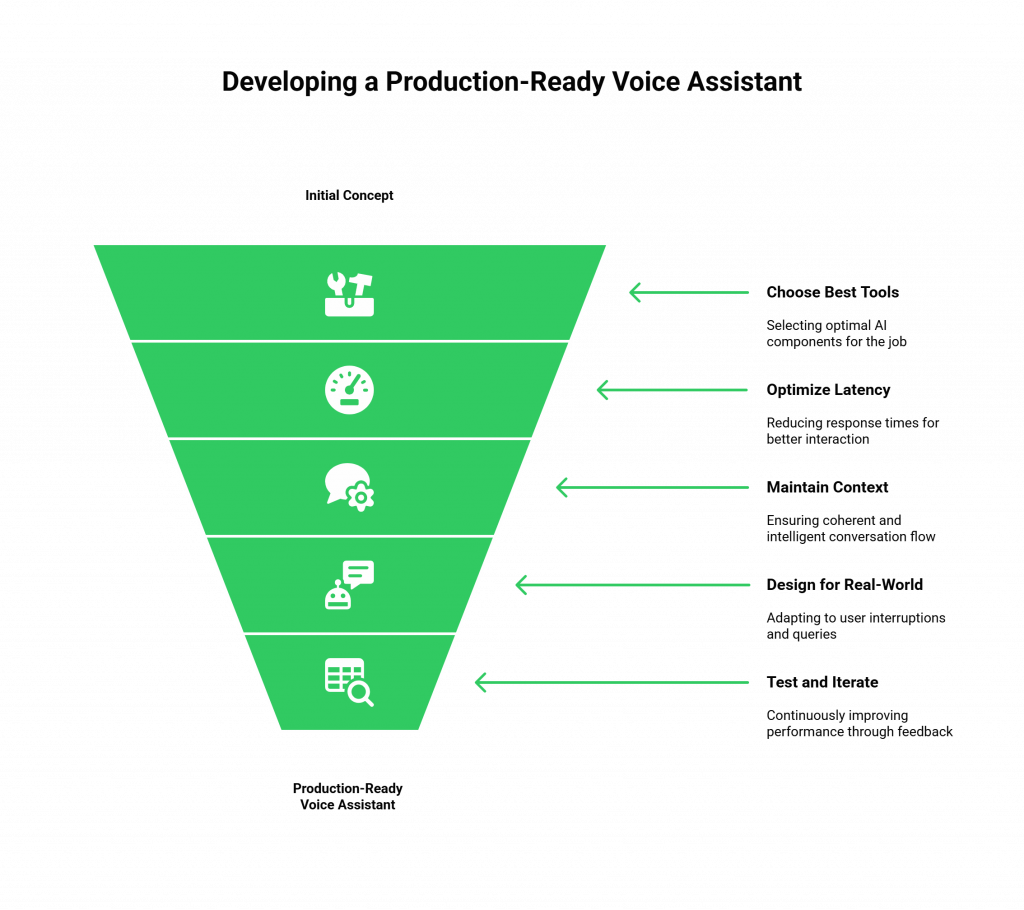

Best Practices for Developing a Production-Grade Voice Assistant Chatbot

Leveraging FreJun’s infrastructure frees you to concentrate on what makes your voice agent truly effective. Follow these best practices to move from a simple concept to a production-ready conversational voice assistant chatbot.

Choose the Best Tools for the Job

The most powerful feature of a model-agnostic platform is freedom of choice. Don’t settle for a one-size-fits-all solution. With FreJun, you can select the best-in-class service for each part of your AI stack:

- STT: Choose a transcription service optimized for your specific domain or language.

- LLM/NLP: Use the large language model that best suits your conversational logic, whether it’s OpenAI, Google Dialogflow, or a custom model.

- TTS: Select a voice that aligns with your brand, from a provider like ElevenLabs, for a truly natural-sounding interaction.
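To keep these choices swappable, you can select providers from configuration at startup. In this sketch, `REGISTRY`, `register`, `build_stack`, and the `echo-*` providers are all hypothetical. Real entries would wrap each vendor's SDK.

```python
from typing import Any, Callable, Dict

# Hypothetical registry: stage -> provider name -> factory returning a client callable.
# The echo stubs only make the sketch runnable; real entries would wrap vendor SDKs.
REGISTRY: Dict[str, Dict[str, Callable[[], Any]]] = {
    "stt": {"echo-stt": lambda: (lambda audio: audio.decode("utf-8"))},
    "llm": {"echo-llm": lambda: (lambda text: f"Echo: {text}")},
    "tts": {"echo-tts": lambda: (lambda text: text.encode("utf-8"))},
}


def register(stage: str, name: str, factory: Callable[[], Any]) -> None:
    """Add a provider without touching the call-handling code."""
    REGISTRY[stage][name] = factory


def build_stack(config: Dict[str, str]) -> Dict[str, Any]:
    """Instantiate one client per stage from a config such as {"stt": "echo-stt", ...}."""
    stack = {}
    for stage, providers in REGISTRY.items():
        name = config.get(stage)
        if name not in providers:
            raise ValueError(f"No {stage} provider named {name!r}")
        stack[stage] = providers[name]()
    return stack


stack = build_stack({"stt": "echo-stt", "llm": "echo-llm", "tts": "echo-tts"})
reply_audio = stack["tts"](stack["llm"](stack["stt"](b"hello")))
print(reply_audio)  # b'Echo: hello'
```

With this layout, switching providers is a configuration change, not a code change.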

Obsess Over Latency

Even with FreJun’s low-latency infrastructure, your AI processing time matters. Optimize your backend logic to ensure responses are generated as quickly as possible. Cache common queries, streamline API calls, and design efficient decision trees. Remember, every 100 ms you save makes the conversation feel more natural.
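Caching common queries is often the easiest of these wins. Python's `functools.lru_cache` has no expiry, so this sketch adds a small time-to-live cache for lookups whose answers change rarely. `ttl_cache` and `fetch_store_hours` are hypothetical, and the 60-second TTL is an illustrative value.

```python
import functools
import time
from typing import Any, Callable, Dict, List, Tuple


def ttl_cache(ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
    """Cache results per positional arguments, reusing them for ttl_seconds."""
    def decorator(func: Callable[..., Any]) -> Callable[..., Any]:
        entries: Dict[Tuple[Any, ...], Tuple[float, Any]] = {}

        @functools.wraps(func)
        def wrapper(*args: Any) -> Any:
            now = clock()
            hit = entries.get(args)
            if hit is not None and now - hit[0] < ttl_seconds:
                return hit[1]  # still fresh: skip the backend round trip
            value = func(*args)
            entries[args] = (now, value)
            return value

        return wrapper
    return decorator


backend_calls: List[str] = []


@ttl_cache(ttl_seconds=60)  # illustrative TTL
def fetch_store_hours(location: str) -> str:
    """Hypothetical slow backend call, standing in for e.g. a store-hours API."""
    backend_calls.append(location)
    return f"{location}: 9am-6pm"


fetch_store_hours("downtown")
fetch_store_hours("downtown")
print(len(backend_calls))  # 1: the second lookup is served from the cache
```

This cache is unbounded and keys only on positional arguments. In production, cap its size and decide which answers are safe to reuse across callers.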

Maintain Meticulous Conversational Context

Your backend application is the single source of truth for the conversation. FreJun provides the stable channel, but it’s your responsibility to track the dialogue state. Design your system to handle interruptions, non-sequiturs, and multi-intent queries gracefully. This is what separates a rigid IVR from an intelligent Voice Assistant Chatbot.
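Interruptions show why this matters. If a caller cuts the bot off, the conversation history should record what the caller actually heard, not the full reply the LLM generated. Otherwise, the model may later assume the caller knows something they never heard. This sketch estimates the spoken portion from playback time and an assumed speaking rate. The `Conversation` class is hypothetical. Word-level timestamps from your TTS provider, where available, would be more accurate.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Conversation:
    """Hypothetical history store that trims replies the caller interrupted."""
    messages: List[dict] = field(default_factory=list)
    words_per_second: float = 2.5  # assumption: rough TTS speaking rate; tune per voice

    def add_user(self, text: str) -> None:
        self.messages.append({"role": "user", "content": text})

    def add_assistant(self, text: str, played_seconds: float, interrupted: bool) -> None:
        """Record the reply, trimmed to the words likely heard if the caller barged in."""
        if interrupted:
            heard = int(played_seconds * self.words_per_second)
            text = " ".join(text.split()[:heard] + ["[interrupted]"])
        self.messages.append({"role": "assistant", "content": text})


convo = Conversation()
convo.add_user("What are your opening hours?")
convo.add_assistant("We are open from nine to six on weekdays and ten to four on Saturdays.",
                    played_seconds=2.0, interrupted=True)
print(convo.messages[-1]["content"])  # We are open from nine [interrupted]
```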

Design for Real-World Conversations

Users won’t always follow the “happy path.” They will interrupt the bot, change their minds, or ask clarifying questions. Use FreJun’s developer-first SDKs to build a system that can handle these real-world dynamics. Implement features like barge-in (allowing users to speak over the bot) to create a more human-like interaction.
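Barge-in generally means listening while the bot speaks and stopping playback as soon as the caller starts talking. The asyncio sketch below runs playback as a task and cancels it when a speech event fires. `play_reply` and `speak_with_barge_in` are hypothetical. In a real deployment, the event would come from your endpointer or STT provider. If your transport supports it, you would also clear any audio already queued on the call.

```python
import asyncio
from typing import List


async def play_reply(frames: List[bytes], sent: List[bytes], frame_seconds: float = 0.02) -> None:
    """Hypothetical playback loop: 'sends' one frame per frame interval."""
    for frame in frames:
        sent.append(frame)  # stand-in for writing the frame to the call
        await asyncio.sleep(frame_seconds)


async def speak_with_barge_in(frames: List[bytes], user_started_speaking: asyncio.Event) -> List[bytes]:
    """Play a reply, but stop as soon as the caller starts talking; return frames sent."""
    sent: List[bytes] = []
    playback = asyncio.create_task(play_reply(frames, sent))
    listener = asyncio.create_task(user_started_speaking.wait())
    # Resume as soon as either the reply finishes or the caller starts speaking.
    await asyncio.wait({playback, listener}, return_when=asyncio.FIRST_COMPLETED)
    for task in (playback, listener):
        if not task.done():
            task.cancel()  # barge-in stops playback; a finished reply stops listening
    await asyncio.gather(playback, listener, return_exceptions=True)
    return sent


async def demo_barge_in() -> int:
    speech = asyncio.Event()
    # Simulate the caller interrupting about 50 ms into a 1-second reply.
    asyncio.get_running_loop().call_later(0.05, speech.set)
    sent = await speak_with_barge_in([bytes(320)] * 50, speech)
    return len(sent)


print(asyncio.run(demo_barge_in()))  # only the frames sent before the interruption (well under 50)
```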

Test, Measure, and Iterate

Use real-world data and user feedback to continuously improve your voice agent. A/B test different prompts, voices, and conversational flows to see what performs best. Implement automated testing to guard against AI hallucinations or incorrect responses. With FreJun handling the infrastructure, you can dedicate your testing resources to the AI’s performance.
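A lightweight starting point for automated testing is to replay scripted conversations against your turn-handling logic at the text level, with no audio or telephony involved. `toy_agent` and `run_script` are hypothetical; in practice, you would pass in your real handler. Substring checks are crude, so treat this as a smoke test alongside human review and richer evaluations.

```python
from typing import Callable, List, Tuple

# A scripted test case: (caller utterance, phrases the reply must contain).
Script = List[Tuple[str, List[str]]]


def toy_agent() -> Callable[[str], str]:
    """Hypothetical stand-in for your real turn handler; keeps state in a closure."""
    state = {"awaiting_order_id": False}

    def reply(text: str) -> str:
        if state["awaiting_order_id"] and text.strip().isdigit():
            state["awaiting_order_id"] = False
            return f"Looking up order {text.strip()} now."
        if "order" in text.lower():
            state["awaiting_order_id"] = True
            return "Sure, what is your order number?"
        return "Sorry, I can help with orders. What would you like to do?"

    return reply


def run_script(make_agent: Callable[[], Callable[[str], str]], script: Script) -> List[str]:
    """Replay a conversation against a fresh agent and collect any failures."""
    agent = make_agent()
    failures = []
    for turn, (utterance, expected) in enumerate(script, start=1):
        answer = agent(utterance)
        for phrase in expected:
            if phrase.lower() not in answer.lower():
                failures.append(f"turn {turn}: expected {phrase!r} in {answer!r}")
    return failures


happy_path: Script = [
    ("I want to check my order", ["order number"]),
    ("12345", ["12345"]),
]
off_script: Script = [
    ("What's the weather like?", ["orders"]),  # should steer back instead of guessing
]

for name, script in [("happy_path", happy_path), ("off_script", off_script)]:
    print(name, run_script(toy_agent, script) or "passed")
```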


Final Thoughts: Building a Truly Conversational Experience with FreJun

The goal of creating a Voice Assistant Chatbot is not simply to automate a task; it’s to provide a seamless, intuitive, and effective communication channel for your users. The quality of that experience is directly tied to the quality of the underlying infrastructure. Lag, dropped words, and robotic delays can undermine even the most intelligent AI.

Choosing FreJun AI is a strategic decision to prioritize the user experience. By offloading the complex, mission-critical voice layer to our platform, you de-risk your project and accelerate your time to market. You empower your developers to do what they do best: innovate.

Whether you are automating customer service, building intelligent IVRs, or deploying proactive outbound agents, FreJun provides the robust foundation you need. Our comprehensive SDKs, developer-first tooling, and dedicated integration support ensure your journey from concept to production is smooth and successful.

Give FreJun AI a Try Today!

Frequently Asked Questions

Does FreJun AI provide its own AI models?

No. FreJun AI is a voice transport layer. We are model-agnostic and do not provide our own AI services like STT, TTS, or LLMs. Our platform is designed to connect the AI models and services of your choice to the telephone network with low latency. You bring your own AI, giving you full control over the logic and user experience.

What is a voice transport layer?

A voice transport layer is the foundational infrastructure that handles the real-time capture, streaming, and playback of audio over a call. It manages the complex telephony integration (connecting to phone numbers) and ensures a stable, low-latency media stream between the user and your backend AI services.

Can I use any STT, LLM, or TTS provider with FreJun?

Absolutely. Because FreJun is model-agnostic, you can integrate with any STT, LLM, NLP, or TTS provider that offers an API. Our platform simply provides the real-time audio stream, which you can direct to any service you choose, including those from major providers like OpenAI, Google, and ElevenLabs.

What kind of integration support does FreJun offer?

We offer dedicated integration support to ensure your success. Our team of experts is available to help with everything from pre-integration planning and architectural guidance to post-launch optimization. Our goal is to make the process of connecting your AI to our voice layer as seamless as possible.

Can FreJun handle enterprise-scale call volumes?

Yes. FreJun is built on a resilient, high-availability, and geographically distributed infrastructure. It can handle a high volume of concurrent calls, making it suitable for mission-critical, enterprise-grade deployments, from large-scale customer service automation to proactive outbound campaigns.