Live voice has become a critical interface for modern applications, especially as AI agents move beyond chat into real conversations. However, integrating real-time voice is not just another API task. It involves low-latency media streaming, call state management, telecom reliability, and seamless coordination with AI systems. This is where a voice API for developers plays a decisive role. Instead of dealing with SIP protocols, codecs, and carrier-level failures, teams can focus on building intelligent voice experiences.

This blog explains, step by step, how voice APIs simplify live voice integration and why they are becoming foundational infrastructure for AI-powered products.

Why Is Live Voice Integration Still Complex For Modern Applications?

At first glance, voice seems simple. A user speaks, the system listens, and a response is played back. However, under the hood, live voice integration involves multiple systems working together in real time.

According to industry forecasts, the Web Real-Time Communication (WebRTC) market, which underpins low-latency real-time voice and media, is projected to grow at a 33.5% CAGR and exceed USD 80 billion by 2034. That growth underscores rising demand for real-time voice interfaces in modern applications.

Unlike chat or REST APIs, voice is continuous, time-sensitive, and stateful.

Key challenges include:

- Real-time audio streaming: Voice is not a single request. It is a constant stream of audio packets.

- Low latency requirements: Delays above 200–300 ms break conversation flow.

- Bidirectional communication: Audio must flow both ways at the same time.

- Call state handling: Ringing, answered, muted, hold, disconnect.

- Network variation: Packet loss, jitter, and bandwidth changes.

- Protocol complexity: SIP, RTP, WebRTC, PSTN gateways.
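Network variation is usually absorbed by a jitter buffer, which holds a few packets, puts them back in sequence order, and conceals gaps before playback. The sketch below is a minimal illustration, not how any particular voice API implements it. It assumes each packet carries an integer sequence number (wraparound is ignored), fills a missing frame with silence, and uses a fixed depth; production buffers adapt their depth and rely on codec packet-loss concealment.

```python
from __future__ import annotations

import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class Packet:
    seq: int                               # sequence number (wraparound ignored in this sketch)
    payload: bytes = field(compare=False)  # encoded audio for one frame


class JitterBuffer:
    """Reorders packets and plays them out in sequence once `depth` packets are queued."""

    def __init__(self, depth: int = 3, silence: bytes = b"\x00" * 320):
        self.depth = depth        # packets to hold before releasing one
        self.silence = silence    # hypothetical filler: 20 ms of 16-bit linear PCM at 8 kHz
        self.heap: list[Packet] = []
        self.next_seq: int | None = None

    def push(self, packet: Packet) -> None:
        # Drop packets that arrive after their playout slot has already passed.
        if self.next_seq is not None and packet.seq < self.next_seq:
            return
        heapq.heappush(self.heap, packet)

    def pop(self) -> bytes | None:
        """Return the next frame, silence for a lost packet, or None while buffering."""
        # Discard duplicates that are already behind the playout point.
        while self.next_seq is not None and self.heap and self.heap[0].seq < self.next_seq:
            heapq.heappop(self.heap)
        if len(self.heap) < self.depth:
            return None
        if self.next_seq is None:
            self.next_seq = self.heap[0].seq
        if self.heap[0].seq == self.next_seq:
            frame = heapq.heappop(self.heap).payload
        else:
            frame = self.silence  # expected packet is missing: conceal the gap
        self.next_seq += 1
        return frame
```

A deeper buffer tolerates more jitter but adds latency to every frame, which is why the depth is a tuning trade-off rather than a fixed rule.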

Because of this, building live voice directly on raw telecom protocols becomes expensive and fragile very quickly.

Therefore, teams need a layer that hides this complexity while still giving them control.

What Exactly Is A Voice API For Developers?

A voice API for developers is a programmable interface that abstracts telecom networks and real-time audio handling into simple application-level events and streams.

Instead of managing SIP servers, RTP packets, and carrier routing, developers interact with:

- Call events

- Media streams

- Webhooks

- SDKs

In simple terms, a voice API sits between phone networks and application logic.

Conceptual Flow

Inbound audio: Caller → Telecom Network (PSTN / VoIP) → Voice API → Application Logic

Outbound audio: Application Logic → Voice API → Telecom Network (PSTN / VoIP) → Caller

As a result, developers focus on what should happen, not how telecom works.

What Does A Voice API Abstract For Engineering Teams?

A modern voice API removes multiple layers of infrastructure work. More importantly, it standardizes them.

Telecom Complexity Removed

- SIP signaling

- Call routing across carriers

- RTP media handling

- Codec negotiation (G.711, Opus, linear PCM)

- NAT traversal

- DTMF signaling

- Regional telecom differences

Application-Level Control Added

- Call answered / ended events

- Audio stream access

- Playback control

- Error handling

- Scaling hooks

Because of this abstraction, teams can iterate faster and ship voice features without telecom expertise.
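In practice, application-level control usually means your code reacts to call events delivered by webhook or over a socket. The sketch below is a minimal, provider-neutral dispatcher. The event names (`call.answered`, `dtmf.received`, `call.ended`) and payload fields (`type`, `call_id`, `digit`) are hypothetical; every voice API defines its own schema.

```python
import json
from typing import Callable

# Hypothetical event schema: {"type": "...", "call_id": "...", ...}.
# Real providers define their own event names and fields.
Handler = Callable[[dict], str]

HANDLERS: dict[str, Handler] = {}


def on(event_type: str):
    """Decorator that registers a handler for one event type."""
    def register(fn: Handler) -> Handler:
        HANDLERS[event_type] = fn
        return fn
    return register


@on("call.answered")
def handle_answered(event: dict) -> str:
    return f"start media stream for {event['call_id']}"


@on("dtmf.received")
def handle_dtmf(event: dict) -> str:
    return f"caller pressed {event['digit']}"


@on("call.ended")
def handle_ended(event: dict) -> str:
    return f"release resources for {event['call_id']}"


def dispatch(raw_body: str) -> str:
    """Parse a webhook body and route it to the matching handler."""
    event = json.loads(raw_body)
    handler = HANDLERS.get(event.get("type", ""))
    if handler is None:
        return "ignored"  # unknown events are acknowledged but not acted on
    return handler(event)
```

For example, `dispatch('{"type": "call.answered", "call_id": "abc"}')` returns `"start media stream for abc"`. Keeping handlers small and event-driven is what lets the same code serve one call or thousands.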

How Is A Voice API Different From A Calling Platform?

This distinction matters, especially for AI-driven systems.

| Capability | Calling Platforms | Voice APIs |
| --- | --- | --- |
| Primary focus | Human calls | Programmable voice |
| Media access | Limited | Full audio streams |
| Latency control | Basic | Fine-grained |
| AI integration | Indirect | Native |
| Custom logic | Restricted | Fully controlled |

While calling platforms focus on connecting humans, a live voice integration API focuses on connecting applications to voice.

Therefore, voice APIs are better suited for automation, AI agents, and custom workflows.

How Does A Voice Streaming API Enable Real-Time Conversations?

Traditional telephony often works with pre-recorded prompts or delayed responses. However, conversational systems require continuous streaming.

A voice streaming API provides:

- Live audio input as small frames

- Real-time playback of generated audio

- Event-based signaling for call state
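To see what "small frames" means in numbers, the sketch below computes frame sizes and packet rates. It assumes 20 ms frames, a common packetization interval, and compares G.711 (8 kHz, 8 bits per sample) with 16-bit linear PCM at 16 kHz. The exact format depends on the voice API and speech engines you use.

```python
def frame_bytes(sample_rate_hz: int, bytes_per_sample: int, frame_ms: int = 20) -> int:
    """Bytes of raw audio in one mono frame."""
    samples_per_frame = sample_rate_hz * frame_ms // 1000
    return samples_per_frame * bytes_per_sample


def frames_per_second(frame_ms: int = 20) -> int:
    """How many frames are sent each second in one direction."""
    return 1000 // frame_ms


# G.711 (mu-law or A-law): 8 kHz, 1 byte per sample.
g711 = frame_bytes(8_000, 1)       # 160 bytes per 20 ms frame
# 16-bit linear PCM at 16 kHz, a format commonly accepted by STT engines.
pcm16k = frame_bytes(16_000, 2)    # 640 bytes per 20 ms frame

print(g711, pcm16k, frames_per_second())  # 160 640 50
```

At 50 frames per second in each direction, a single call exchanges about 100 small media packets every second, which is why voice is handled as a continuous stream rather than as request/response.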

Why Streaming Matters

Without streaming:

- Speech feels delayed

- AI responses sound robotic

- Interruptions are not handled well

With streaming:

- Users can speak naturally

- Users can interrupt the AI mid-response (barge-in), and the AI can ask clarifying questions

- Conversations feel human

As a result, voice streaming APIs are the foundation of real-time calling SDKs used in modern applications.

Why Is Low Latency Critical In Live Voice Integration?

Latency directly affects user trust.

Even small delays change how intelligent the system seems to users.

Typical Latency Budget

| Stage | Target Latency |
| --- | --- |
| Audio capture | < 50 ms |
| STT processing | 50–150 ms |
| LLM reasoning | 100–300 ms |
| TTS generation | 50–200 ms |
| Playback | < 50 ms |

If any layer slows down, the entire conversation feels broken.

Therefore, a voice API must:

- Stream audio efficiently

- Avoid buffering delays

- Maintain stable connections

This is why general messaging APIs cannot replace real-time voice APIs.
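Because the stages in the latency budget run one after another, their delays add up. The sketch below sums the table's targets into a rough end-to-end range. It treats each "< 50 ms" entry as 0 to 50 ms and ignores network transit, which a real budget would also need to include.

```python
# Target ranges from the latency budget table, in milliseconds (low, high).
BUDGET_MS = {
    "audio_capture": (0, 50),
    "stt": (50, 150),
    "llm": (100, 300),
    "tts": (50, 200),
    "playback": (0, 50),
}


def total_latency(budget: dict[str, tuple[int, int]]) -> tuple[int, int]:
    """Sum best-case and worst-case latency across sequential stages."""
    low = sum(lo for lo, _ in budget.values())
    high = sum(hi for _, hi in budget.values())
    return low, high


print(total_latency(BUDGET_MS))  # (200, 750)
```

With these numbers, even the best case already reaches the 200 to 300 ms range where conversation starts to feel delayed, so each stage should stream partial output to the next instead of waiting for complete results.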

How Do Voice APIs Fit Into AI Voice Agent Architectures?

Voice agents are often described as a single system. In reality, they are a pipeline of components.

A typical AI voice agent includes:

- STT (Speech-to-Text): Converts audio to text

- LLM: Manages dialogue and reasoning

- RAG: Provides context from data sources

- Tool calling: Triggers actions

- TTS (Text-to-Speech): Converts responses to audio

The voice API does not replace these components. Instead, it acts as the transport layer.

Architecture Overview

Caller ↔ Voice API ↔ STT → LLM → Tools / RAG → TTS ↔ Voice API ↔ Caller

Because of this separation:

- Developers can swap models easily

- AI logic remains independent

- Voice infrastructure stays stable

This flexibility is critical for long-term scalability.
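One way to get this separation in code is to program against small interfaces and inject concrete providers. The sketch below uses Python's `typing.Protocol`. The interface names and the `FakeSTT`, `UpperLLM`, and `BytesTTS` stand-ins are hypothetical placeholders for real STT, LLM, and TTS clients, and for brevity it processes whole utterances rather than streaming audio.

```python
from typing import Protocol


class SpeechToText(Protocol):
    def transcribe(self, audio: bytes) -> str: ...


class LanguageModel(Protocol):
    def reply(self, text: str) -> str: ...


class TextToSpeech(Protocol):
    def synthesize(self, text: str) -> bytes: ...


class VoicePipeline:
    """Audio in from the voice API, audio out to the voice API; providers are swappable."""

    def __init__(self, stt: SpeechToText, llm: LanguageModel, tts: TextToSpeech):
        self.stt, self.llm, self.tts = stt, llm, tts

    def handle_utterance(self, audio: bytes) -> bytes:
        text = self.stt.transcribe(audio)
        answer = self.llm.reply(text)
        return self.tts.synthesize(answer)


# Hypothetical stand-ins; replace with real provider clients.
class FakeSTT:
    def transcribe(self, audio: bytes) -> str:
        return audio.decode("utf-8")


class UpperLLM:
    def reply(self, text: str) -> str:
        return text.upper()


class BytesTTS:
    def synthesize(self, text: str) -> bytes:
        return text.encode("utf-8")


pipeline = VoicePipeline(FakeSTT(), UpperLLM(), BytesTTS())
print(pipeline.handle_utterance(b"hello"))  # b'HELLO'
```

Swapping a model means passing a different object with the same method; neither the pipeline nor the voice layer changes.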

Why Are Voice APIs Preferred Over WebRTC Alone?

WebRTC is powerful. However, it solves only part of the problem.

WebRTC handles:

- Browser-based media transport

- Peer-to-peer audio

Voice APIs handle:

- PSTN connectivity

- Global phone routing

- Call lifecycle management

- Carrier failover

Therefore, many production systems use WebRTC for media transport and a voice API for telecom integration.

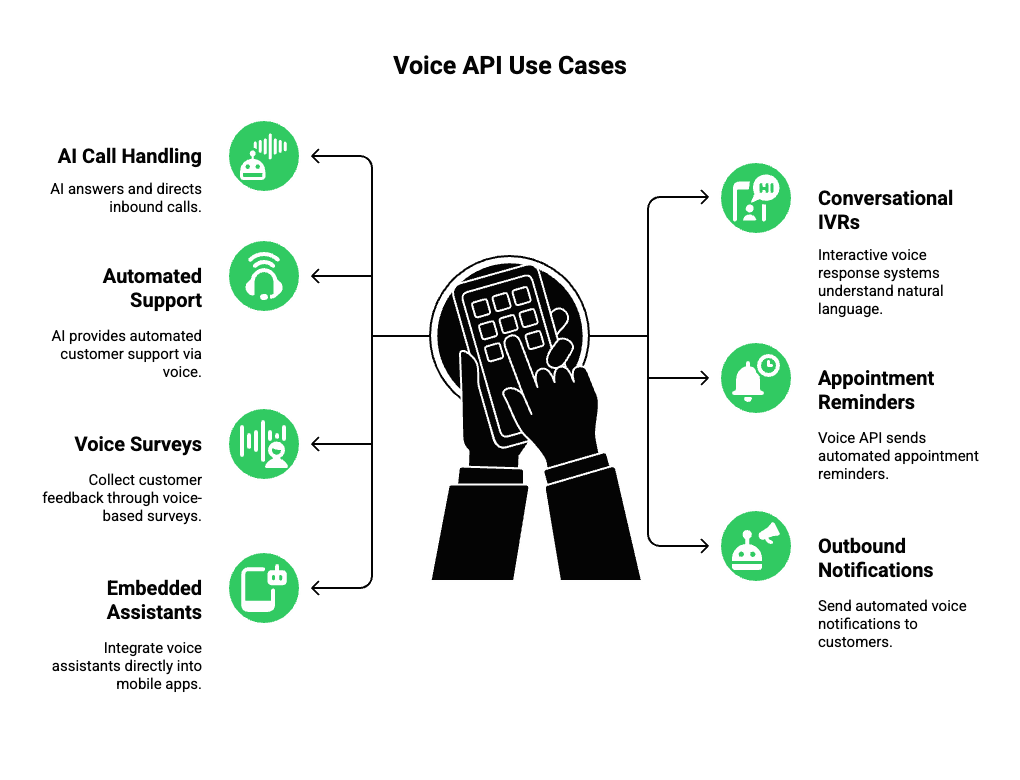

What Are Common Use Cases For Voice APIs Today?

Voice APIs support a wide range of real-world applications.

Common Use Cases

- AI-powered inbound call handling

- Conversational IVRs

- Automated customer support

- Appointment reminders

- Voice-based surveys

- Outbound notifications

- Embedded voice assistants in apps

Because the same voice API can power all these flows, teams avoid rebuilding infrastructure for each use case.

Why Voice APIs Are Becoming Core Infrastructure

Voice is no longer just a communication channel. It is becoming a product interface.

As AI agents move from chat to calls:

- Reliability becomes critical

- Latency becomes visible

- Infrastructure choices matter more

Therefore, teams increasingly treat voice APIs as core backend infrastructure, similar to databases or message queues.

How Do Teams Move From Prototype To Production Voice Systems?

Once teams understand the role of a voice API, the next challenge is execution. A simple demo call is easy. However, production systems demand far more discipline.

At this stage, most engineering teams encounter the same friction points:

- Handling hundreds or thousands of concurrent calls

- Maintaining audio quality across regions

- Managing failures without breaking conversations

- Preserving conversational context across long calls

Therefore, moving from prototype to production is less about AI models and more about infrastructure reliability.

What Makes Live Voice Integration Hard To Scale?

Scaling voice is different from scaling web traffic.

Voice traffic is:

- Stateful

- Time-sensitive

- Sensitive to packet loss

Common Scaling Challenges

- Concurrency spikes: Campaigns or outages can create sudden load.

- Regional latency: Calls must route to the nearest media edge.

- Audio consistency: Even brief packet loss can clip or distort speech.

- Failure recovery: Calls cannot simply “retry” like HTTP requests.

Because of this, scaling voice requires a platform that understands media, not just messages.
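One way to absorb concurrency spikes is admission control: each worker accepts calls up to a fixed limit and turns away the rest, so calls already in progress keep their audio quality. The asyncio sketch below assumes a hypothetical `handle_call` coroutine standing in for a live conversation and a limit of 2 chosen only for the demo. A plain counter is safe here because asyncio runs on a single thread and the check-and-increment contains no `await`.

```python
import asyncio
from typing import Awaitable, Callable


class CallAdmission:
    """Caps concurrent calls per worker; extra calls are rejected immediately."""

    def __init__(self, max_calls: int):
        self.max_calls = max_calls
        self.active = 0

    async def run(self, call_id: str, handler: Callable[[str], Awaitable[str]]) -> str:
        if self.active >= self.max_calls:
            # A real system might queue the call or route it to another region instead.
            return f"{call_id}: rejected (at capacity)"
        self.active += 1
        try:
            return await handler(call_id)
        finally:
            self.active -= 1  # free the slot even if the handler fails


async def handle_call(call_id: str) -> str:
    await asyncio.sleep(0.01)  # hypothetical stand-in for a live conversation
    return f"{call_id}: completed"


async def main() -> list[str]:
    admission = CallAdmission(max_calls=2)
    calls = [admission.run(f"call-{i}", handle_call) for i in range(3)]
    return await asyncio.gather(*calls)


print(asyncio.run(main()))
```

Rejecting early is deliberate: a voice call cannot be slowed down the way an HTTP request can, so overloading a worker degrades every call on it.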

How Does A Real-Time Calling SDK Help With Scale?

A real-time calling SDK simplifies scaling by standardizing how applications interact with voice.

Instead of custom handling per call, developers rely on:

- Consistent media stream interfaces

- Event-driven call state updates

- Managed concurrency limits

- Built-in buffering and backpressure control

As a result, engineering teams gain predictability even under heavy load.
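Buffering and backpressure control can be illustrated with a bounded frame queue. For live audio, stale frames are worse than missing ones, so this sketch drops the oldest frame when the consumer (for example, a slow STT connection) falls behind, which keeps latency bounded. The class name and the default of 5 frames (100 ms at 20 ms per frame) are assumptions for illustration.

```python
from __future__ import annotations

from collections import deque


class FrameQueue:
    """Bounded audio queue: when full, the oldest frame is discarded."""

    def __init__(self, max_frames: int = 5):
        # A deque with maxlen evicts from the left when appending to a full queue.
        self.frames: deque[bytes] = deque(maxlen=max_frames)
        self.dropped = 0

    def put(self, frame: bytes) -> None:
        if len(self.frames) == self.frames.maxlen:
            self.dropped += 1  # count evictions so they can be monitored
        self.frames.append(frame)

    def get(self) -> bytes | None:
        return self.frames.popleft() if self.frames else None


q = FrameQueue(max_frames=3)
for i in range(5):
    q.put(bytes([i]))
print(q.dropped, q.get())  # 2 b'\x02'
```

Exposing the `dropped` count matters as much as the dropping itself: a rising number is an early signal that a downstream stage cannot keep up.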

This is where a well-designed live voice integration API becomes essential.

How Do Voice APIs Handle Reliability And Failures?

Failures in voice systems are unavoidable. However, good infrastructure prevents them from becoming visible.

Common Failure Scenarios

- Network jitter

- Temporary carrier issues

- Regional outages

- Short STT or TTS delays

A production-ready voice API mitigates these issues by:

- Maintaining persistent connections

- Automatically rerouting media

- Handling partial failures gracefully

- Preserving call context

Therefore, users experience continuity even when systems degrade.
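Handling short STT or TTS delays gracefully often comes down to timeouts. The sketch below wraps a slow dependency (a hypothetical `slow_tts` coroutine) in `asyncio.wait_for` and falls back to a short pre-recorded prompt, so the caller hears something instead of dead air. The 0.05 s timeout and the fallback bytes are placeholders; real systems tune timeouts per stage.

```python
import asyncio

FALLBACK_AUDIO = b"<one moment please>"  # placeholder for a pre-recorded prompt


async def slow_tts(text: str, delay_s: float) -> bytes:
    await asyncio.sleep(delay_s)  # simulates a TTS provider that may stall
    return text.encode("utf-8")


async def synthesize_with_fallback(text: str, delay_s: float, timeout_s: float = 0.05) -> bytes:
    """Return synthesized audio, or fallback audio if the provider is too slow."""
    try:
        return await asyncio.wait_for(slow_tts(text, delay_s), timeout=timeout_s)
    except asyncio.TimeoutError:
        return FALLBACK_AUDIO


print(asyncio.run(synthesize_with_fallback("hi", delay_s=0.0)))  # b'hi'
print(asyncio.run(synthesize_with_fallback("hi", delay_s=1.0)))  # b'<one moment please>'
```

`asyncio.wait_for` cancels the slow task on timeout, so a stalled provider does not keep consuming resources in the background.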

How Does FreJun Teler Simplify Live Voice Integration For Developers?

This is where FreJun Teler fits naturally into the architecture described so far.

FreJun Teler is a global voice infrastructure layer built for AI-driven conversations. It focuses on the hardest part of voice systems: real-time, low-latency media transport across telecom networks.

Instead of acting as a calling product, Teler operates as a voice API for developers.

What Teler Handles

- Real-time audio streaming

- Call lifecycle management

- Low-latency media transport

- Global telecom connectivity

- Failover and reliability

What Developers Control

- LLM selection

- STT and TTS engines

- Conversational logic

- Context and memory

- Tool calling and workflows

Because of this separation, teams can evolve their AI stack without touching voice infrastructure.

How Does Teler Work With Any LLM, STT, Or TTS Stack?

Modern AI systems change quickly. Therefore, flexibility matters.

Teler is designed to be model-agnostic.

This means:

- Any LLM can be connected

- Any STT provider can be used

- Any TTS engine can generate responses

Typical Integration Flow

Incoming Call → Teler Voice API → STT Engine → LLM + RAG + Tools → TTS Engine → Teler Voice API → Caller

Because Teler only manages the voice layer, it does not interfere with AI logic. As a result, teams avoid vendor lock-in.

Why Is Conversational Context Easier To Manage With A Voice API?

Context is critical for voice agents. Unlike chat, users cannot scroll back.

A stable voice API enables:

- Persistent connections

- Session-based state tracking

- Clear event boundaries

Therefore, applications can:

- Maintain dialogue history

- Handle interruptions

- Resume conversations smoothly

This is especially important for long-running calls such as support or onboarding.
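Session-based state tracking can be as simple as one object per call, keyed by call ID. The sketch below keeps dialogue history and handles an interruption (barge-in) by recording only the part of the agent's reply the caller actually heard. The class and function names are illustrative, not a specific SDK's API, and it assumes the application can tell how many characters of the reply were played (for example, from TTS word timestamps).

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class CallSession:
    call_id: str
    history: list[tuple[str, str]] = field(default_factory=list)  # (speaker, text)

    def user_said(self, text: str) -> None:
        self.history.append(("user", text))

    def agent_said(self, text: str, played_chars: int | None = None) -> None:
        """Record the agent's turn; if interrupted, keep only what the caller heard."""
        if played_chars is not None:
            text = text[:played_chars] + " [interrupted]"
        self.history.append(("agent", text))


SESSIONS: dict[str, CallSession] = {}


def session_for(call_id: str) -> CallSession:
    """Fetch or create the session for a call; state lives as long as the call."""
    if call_id not in SESSIONS:
        SESSIONS[call_id] = CallSession(call_id)
    return SESSIONS[call_id]


s = session_for("call-42")
s.user_said("I need to change my booking")
s.agent_said("Sure, which date would you like instead?", played_chars=5)
s.user_said("Next Monday")
print(session_for("call-42").history[1])  # ('agent', 'Sure, [interrupted]')
```

Storing only what was heard keeps the LLM's context honest: the model should not assume the caller received a sentence they talked over.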

How Does Teler Support Enterprise-Grade Reliability?

Enterprise voice systems demand more than basic uptime.

FreJun Teler is built with:

- Distributed media infrastructure

- Geographic redundancy

- Secure transport protocols

Key Reliability Benefits

- High availability across regions

- Consistent audio quality

- Predictable latency

- Controlled failure handling

As a result, AI voice agents remain usable even under heavy load.

How Do Voice APIs Reduce Time To Market?

Building voice infrastructure internally can take months.

Teams must:

- Set up carriers

- Configure SIP servers

- Manage codecs

- Build monitoring tools

By contrast, using a voice API for developers allows teams to:

- Integrate in days

- Focus on AI behavior

- Iterate faster

- Ship confidently

Therefore, product teams gain speed without sacrificing control.

When Should Teams Avoid Building Voice Infrastructure In-House?

In-house builds make sense only when voice infrastructure is the core business.

However, most teams benefit from a voice API when:

- Voice is one feature among many

- Reliability is critical

- Scaling is unpredictable

- AI models evolve rapidly

In these cases, outsourcing the voice layer reduces long-term risk.

How Do Voice APIs Fit Into Future AI-Driven Products?

Voice is becoming a primary interface.

As AI agents mature:

- Calls will replace forms

- Voice will replace menus

- Conversations will replace workflows

Because of this, infrastructure choices made today will shape future products.

A robust voice API ensures that systems remain adaptable as AI capabilities evolve.

What Is The Practical Takeaway For Founders And Engineering Leads?

To summarize:

- Live voice integration is complex

- Voice APIs abstract telecom complexity

- Real-time streaming is essential for AI

- Flexibility prevents lock-in

- Infrastructure reliability determines success

FreJun Teler fits into this ecosystem as a developer-focused voice API that enables scalable, low-latency, AI-powered conversations without forcing teams to become telecom experts.

How Can Teams Start Building With A Voice API Today?

The fastest path forward is simple:

- Choose your LLM

- Select your STT and TTS providers

- Use a production-ready voice API

- Focus on conversational logic

By separating voice infrastructure from AI intelligence, teams move faster and build systems that last.

Final Takeaway

Live voice integration is fundamentally an infrastructure challenge, not an AI problem alone. While LLMs, STT, and TTS define intelligence, a reliable voice API defines whether conversations actually work in real time. By abstracting telecom complexity, enabling low-latency voice streaming, and supporting scalable call handling, voice APIs allow teams to build production-ready voice agents faster and with far less risk.

FreJun Teler fits this model by acting as a dedicated voice infrastructure layer for AI-driven applications. It lets teams connect any LLM, any STT, and any TTS while handling real-time voice delivery at scale.

If you are building AI voice agents or real-time calling experiences, schedule a demo to see how Teler simplifies live voice integration from day one.

FAQs

1. What is a voice API for developers?

A voice API lets developers programmatically manage calls and real-time audio without handling telecom protocols or infrastructure directly.

2. How does a voice API simplify live voice integration?

It abstracts SIP, RTP, and media streaming so developers focus on application logic instead of telecom complexity.

3. Can voice APIs work with AI and LLMs?

Yes, voice APIs act as transport layers connecting STT, LLMs, and TTS into real-time conversational systems.

4. What is a live voice integration API used for?

It enables real-time inbound and outbound voice interactions for AI agents, support systems, and automated calling workflows.

5. How is a voice API different from a calling platform?

Calling platforms focus on human calls, while voice APIs provide programmable, real-time audio access for applications.

6. Do voice APIs support real-time streaming?

Yes. Modern voice APIs support bidirectional, low-latency voice streaming, which is essential for natural conversational experiences.

7. What industries benefit most from voice APIs?

Customer support, fintech, healthcare, logistics, and SaaS benefit most from scalable, AI-powered voice automation.

8. Can I use my own STT and TTS with a voice API?

Yes, most developer-focused voice APIs allow full flexibility to integrate any speech or synthesis engine.

9. Are voice APIs scalable for high call volumes?

Yes. Production-grade voice APIs are typically designed to handle thousands of concurrent calls with built-in routing, buffering, and reliability mechanisms.

10. When should teams avoid building voice infrastructure in-house?

When voice is not the core business, using a voice API reduces cost, risk, and long-term maintenance overhead.