Bulk calling at scale is no longer about how many calls you can place. Instead, it is about how reliably those calls connect, start audio, and sustain engagement. As volumes grow, delivery rates often decline due to rigid telephony systems, delayed audio, and limited real-time control. This is where modern Voice APIs change the equation. By exposing call states, enabling real-time streaming, and supporting intelligent retry logic, a Voice API for bulk calling allows teams to actively improve delivery outcomes.

This blog breaks down, step by step, how programmable voice infrastructure improves call delivery at scale, especially when paired with AI-driven voice agents.

Why Do Bulk Calling Campaigns Struggle With Delivery At Scale?

Bulk calling looks simple on the surface. You upload numbers, trigger calls, and expect them to connect. However, at scale, delivery becomes unpredictable.

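In telecom, connect performance is commonly measured by the Answer-Seizure Ratio (ASR): the percentage of call attempts that are answered. An ASR above 60% is considered excellent, whereas one under 40% is considered poor. Large-scale outbound campaigns often land in the lower range, which shows how common delivery problems are.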
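
As a rough illustration, the ASR figures above can be computed and bucketed in a few lines. This is a minimal sketch: the function names and the sample counts are hypothetical, and the "moderate" label for the 40-60% band is an assumption, since the text only names the two extremes.

```python
def answer_seizure_ratio(answered: int, seizures: int) -> float:
    """Return ASR as a percentage: answered calls / call attempts (seizures)."""
    if seizures <= 0:
        raise ValueError("seizures must be positive")
    if not 0 <= answered <= seizures:
        raise ValueError("answered must be between 0 and seizures")
    return 100.0 * answered / seizures


def classify_asr(asr_percent: float) -> str:
    """Bucket an ASR value using the thresholds quoted in this article."""
    if asr_percent > 60:
        return "excellent"
    if asr_percent < 40:
        return "poor"
    return "moderate"  # assumed label for the 40-60% band


# Hypothetical campaign: 10,000 attempts, 4,200 answered.
campaign_asr = answer_seizure_ratio(answered=4_200, seizures=10_000)
print(f"{campaign_asr:.1f}% -> {classify_asr(campaign_asr)}")
```

Tracking this number per carrier or per region, rather than per campaign, usually makes it easier to see where delivery is actually degrading.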

In practice, delivery rate is not just about how many calls are dialed. Instead, it reflects how many calls successfully connect, start audio, and remain active long enough to deliver a message or conversation.

Most delivery issues come from system limitations, not volume.

Common challenges include:

- Carrier throttling when call bursts exceed allowed limits

- Static retry rules that do not adapt to real outcomes

- Delayed audio playback that causes early hang-ups

- No visibility into why calls fail

- Inability to react in real time

As a result, teams often see delivery rates drop as volume increases. This happens because traditional systems treat calls as isolated events, not as part of a live system.

Therefore, improving delivery at scale requires control, feedback, and real-time decision-making. This is where a Voice API for bulk calling becomes essential.

What Is A Voice API For Bulk Calling And How Is It Different From Traditional Telephony?

A Voice API is a programmable interface that lets applications initiate, control, and monitor voice calls through code.

Unlike legacy telephony systems, a Voice API abstracts the complexity of:

- SIP signaling

- PSTN routing

- Media transport

- Call lifecycle events

As a result, developers work with APIs instead of telecom hardware.

Traditional Telephony Vs Voice API

| Aspect | Traditional Systems | Voice API For Bulk Calling |
| --- | --- | --- |
| Call Control | Manual or fixed logic | Fully programmable |
| Scaling | Hardware-bound | Elastic and software-driven |
| Retry Logic | Static | Dynamic and event-based |
| Monitoring | Limited reports | Real-time events |
| Delivery Optimization | Reactive | Proactive |

Because a Voice API exposes call states through events and streams, systems can respond immediately. Therefore, delivery logic becomes adaptive, not fixed.

This difference is critical when the goal is to improve call delivery in 2026 and beyond.

How Does A Voice API Control Call Delivery At A System Level?

To understand delivery improvement, it is important to understand how calls are controlled internally.

A voice call moves through multiple states:

- Call initiated

- Ringing

- Answered or failed

- Media started

- Call completed

Traditional systems only record the final outcome. In contrast, a Voice API exposes each state in real time.

Because of this, applications can:

- Detect early failures

- Stop wasting retries on unreachable numbers

- Change pacing dynamically

- Trigger alternate logic instantly

For example, if a call is answered but audio fails to start within a few hundred milliseconds, the system can terminate the call and retry it over a different route. Without event-level access to call state, this signal is not available.
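
The answered-but-silent case can be sketched as a small state tracker. This is an illustrative sketch, not any vendor's API: the `CallState` names mirror the states listed earlier, while `CallTracker`, `on_event`, and the 0.5 second threshold are assumptions chosen for the example.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class CallState(Enum):
    INITIATED = "initiated"
    RINGING = "ringing"
    ANSWERED = "answered"
    MEDIA_STARTED = "media_started"
    COMPLETED = "completed"
    FAILED = "failed"


@dataclass
class CallTracker:
    call_id: str
    max_silence_s: float = 0.5  # assumed threshold; tune from your own hang-up data
    state: CallState = CallState.INITIATED
    answered_at: Optional[float] = None

    def on_event(self, state: CallState, timestamp: float) -> None:
        """Record a state-change event delivered by the voice platform."""
        self.state = state
        if state is CallState.ANSWERED:
            self.answered_at = timestamp

    def audio_is_overdue(self, now: float) -> bool:
        """True if the call was answered but media has not started in time."""
        return (
            self.state is CallState.ANSWERED
            and self.answered_at is not None
            and now - self.answered_at > self.max_silence_s
        )


tracker = CallTracker(call_id="call-1")
tracker.on_event(CallState.RINGING, timestamp=0.0)
tracker.on_event(CallState.ANSWERED, timestamp=4.0)
if tracker.audio_is_overdue(now=4.8):
    print("no audio after answer: hang up and retry on another route")
```

In a real system the `now` value would come from a timer or the next incoming event, and the check would run per active call.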

Therefore, delivery improves because decisions are made during the call, not after.

How Does Real-Time Call Streaming Improve Connect And Answer Rates?

Reliable call streaming is one of the most overlooked factors in delivery.

A call may technically connect, yet still fail if the first audio arrives late. In outbound bulk calling, users often hang up if they hear silence for even a short duration.

What Reliable Call Streaming Means

Reliable call streaming refers to:

- Continuous low-latency audio transport

- Stable RTP or WebSocket streams

- Immediate media availability after answer

When streaming is delayed, users assume the call is broken. As a result, many answered calls end before the message is delivered, even though they connected successfully.

Why Streaming Directly Impacts Delivery

- Faster audio start reduces early hang-ups

- Clear audio increases trust

- Stable streams prevent mid-call drops

Therefore, a high-connect-rate Voice API must prioritize media reliability, not just signaling success.

In bulk calling, where thousands of calls start in parallel, streaming quality becomes a delivery multiplier.

How Does A Voice API Enable Intelligent Retry And Pacing At Scale?

Retry strategy is one of the strongest levers for improving delivery.

However, retries only help when they are context-aware.

A Voice API provides real-time outcomes such as:

- Busy

- No answer

- Call rejected

- Network failure

- Voicemail detected

Because of this, retry behavior can change based on why a call failed.

Intelligent Retry Patterns

- Retry busy numbers after a short delay

- Delay retries for no-answer outcomes

- Avoid repeated attempts on unreachable endpoints

- Switch routes after carrier-level failures
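
The retry patterns above can be expressed as a small outcome-to-policy table. This is a minimal sketch under stated assumptions: the outcome labels, delay values, and attempt cap are hypothetical, and a real platform will report outcomes under its own names.

```python
from typing import Optional

# Hypothetical outcome labels and delays; real platforms use their own names.
RETRY_DELAY_S = {
    "busy": 120,           # short delay: the line is likely to free up soon
    "no_answer": 3600,     # longer delay: try again at a different time
    "network_failure": 0,  # retry at once, but on a different route
}
NEVER_RETRY = {"rejected", "unreachable"}
MAX_ATTEMPTS = 3


def next_retry(outcome: str, attempt: int) -> Optional[dict]:
    """Return a retry plan for a failed call, or None to stop retrying."""
    if outcome in NEVER_RETRY or attempt >= MAX_ATTEMPTS:
        return None
    delay = RETRY_DELAY_S.get(outcome)
    if delay is None:
        return None  # unknown outcome: do not guess
    return {
        "delay_s": delay * attempt,  # back off linearly on repeated failures
        "switch_route": outcome == "network_failure",
    }


print(next_retry("busy", attempt=1))
print(next_retry("rejected", attempt=1))
```

Keeping the policy in data rather than in branching code makes it easier to adjust delays as outcome statistics accumulate.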

In addition, pacing can be adjusted dynamically.

Instead of pushing all calls at once, systems can:

- Throttle by region

- Respect carrier limits

- Spread load across time windows

As a result, bulk calling becomes predictable instead of bursty. Consequently, delivery rates remain stable even as volume increases.
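
One common way to implement this kind of pacing is a token bucket per region. The sketch below assumes hypothetical region keys and rate limits; actual limits depend on your carriers and are not stated in this article.

```python
from dataclasses import dataclass


@dataclass
class TokenBucket:
    rate_per_s: float   # sustained calls per second allowed
    capacity: float     # maximum burst size
    tokens: float = 0.0
    updated_at: float = 0.0

    def try_acquire(self, now: float) -> bool:
        """Refill based on elapsed time, then take one token if available."""
        elapsed = max(0.0, now - self.updated_at)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate_per_s)
        self.updated_at = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


# Hypothetical per-region limits; real values come from your carrier agreements.
buckets = {
    "us": TokenBucket(rate_per_s=10, capacity=20, tokens=20),
    "in": TokenBucket(rate_per_s=5, capacity=5, tokens=5),
}


def may_dial(region: str, now: float) -> bool:
    """Return True if a call to this region can be placed right now."""
    return buckets[region].try_acquire(now)


# Eight dial attempts in the same instant: only the burst capacity gets through.
placed = sum(may_dial("in", now=0.0) for _ in range(8))
print(placed)
```

Calls that are refused are not failures; they simply stay in the queue until the bucket refills, which is what turns a burst into a steady flow.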

How Do LLMs, STT, And TTS Change Delivery Outcomes In Bulk Calling?

Modern bulk calling is no longer limited to playing recorded messages.

Today, voice agents are built using:

- Speech-to-Text (STT)

- Large Language Models (LLMs)

- Text-to-Speech (TTS)

- Retrieval and tool calling

This stack allows calls to respond dynamically instead of following fixed scripts.

Why Conversations Improve Delivery

When calls feel responsive:

- Users stay on the line longer

- Early hang-ups decrease

- Engagement increases

However, AI alone does not improve delivery. It must be paired with a Voice API that supports real-time streaming and low-latency media handling.

If STT input arrives late or TTS playback is delayed, users disengage. Therefore, delivery improvement depends on both AI logic and transport quality.

How Does A Voice API Act As The Transport Layer For Voice Agents?

In a modern architecture, responsibilities are clearly separated.

- The Voice API handles calls, media, and events

- The application handles logic, AI, and decisions

This separation is important because it allows teams to change models without touching telephony.

Typical Data Flow

- Call audio is streamed from the phone

- Audio is forwarded to an STT engine

- Text is processed by an LLM

- Response text is converted to speech

- Audio is streamed back to the call

Because this loop runs continuously, transport reliability directly affects delivery.

If the Voice API drops streams or delays packets, the entire conversation breaks. Therefore, reliable call streaming is a core requirement, not an optimization.
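
The data flow above can be sketched as a loop over pluggable stages. This is a simplified, synchronous illustration: the type aliases, `run_voice_loop`, and the stub functions are all hypothetical stand-ins, and a production system would stream audio asynchronously rather than process whole chunks.

```python
from typing import Callable, Iterable, Iterator

# The three stages are plain callables so any vendor can be plugged in.
Transcriber = Callable[[bytes], str]   # STT: audio chunk -> text
Responder = Callable[[str], str]       # LLM: caller text -> reply text
Synthesizer = Callable[[str], bytes]   # TTS: reply text -> audio


def run_voice_loop(
    inbound_audio: Iterable[bytes],
    stt: Transcriber,
    llm: Responder,
    tts: Synthesizer,
) -> Iterator[bytes]:
    """Yield outbound audio for each inbound chunk that contains speech."""
    for chunk in inbound_audio:
        text = stt(chunk)
        if not text:
            continue  # silence or noise: nothing to answer
        yield tts(llm(text))


# Stub stages for illustration only; they stand in for real engines.
def fake_stt(chunk: bytes) -> str:
    return chunk.decode("utf-8").strip()


def fake_llm(text: str) -> str:
    return f"You said: {text}"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


replies = list(run_voice_loop([b"hello", b"  ", b"bye"], fake_stt, fake_llm, fake_tts))
print(replies)
```

Because the loop depends only on the three callables, swapping an STT, LLM, or TTS provider does not touch the code that handles call audio.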

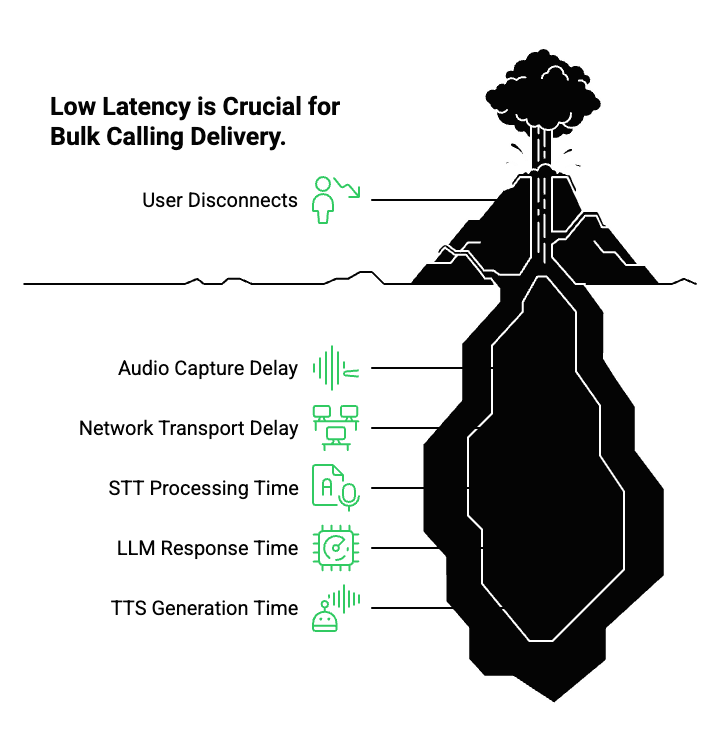

Why Does Low Latency Matter For Bulk Calling Delivery?

Latency determines how natural a call feels.

Even small delays add up across the stages of a call, and the cost multiplies when thousands of calls run in parallel.

Latency Components

- Audio capture delay

- Network transport delay

- STT processing time

- LLM response time

- TTS generation time

If total latency exceeds acceptable thresholds, users disconnect.

Therefore, a Voice API designed for bulk calling must minimize overhead at every step. Otherwise, connect rates decline despite successful dialing.
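
A simple latency budget makes these components concrete. The per-stage numbers and the 800 ms budget below are hypothetical placeholders, not measurements; the point is only to show how stage latencies sum into a per-turn total.

```python
# Hypothetical per-stage latencies in milliseconds for one conversational turn.
stage_latency_ms = {
    "audio_capture": 20,
    "network_transport": 60,
    "stt": 150,
    "llm": 350,
    "tts": 120,
}
BUDGET_MS = 800  # assumed target for a turn; set this from your own call data


def latency_report(stages: dict, budget_ms: int) -> dict:
    """Sum stage latencies and identify the slowest stage."""
    total = sum(stages.values())
    slowest = max(stages, key=stages.get)
    return {"total_ms": total, "over_budget": total > budget_ms, "slowest": slowest}


print(latency_report(stage_latency_ms, BUDGET_MS))
```

Measuring each stage separately shows where optimization effort pays off; in this made-up example the LLM stage accounts for half of the total.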

Where Does FreJun Teler Fit Into A Modern Bulk Calling Architecture?

At this stage, it becomes clear that improving delivery rates is not about dialing faster. Instead, it depends on how well the voice layer supports real-time decision-making, streaming, and AI interaction.

This is where FreJun Teler fits into the architecture.

FreJun Teler is a global voice infrastructure layer built to support AI-driven bulk calling. Rather than focusing only on call initiation, Teler is designed to manage call control, media streaming, and scalability as a single system.

How Teler Sits In The Stack

Teler operates as the voice layer between phone networks and your application.

- Phone networks connect to Teler

- Teler streams audio and events in real time

- Your application connects any LLM, STT, and TTS stack

- Logic stays fully under your control

Because of this separation, teams can evolve AI workflows without changing telephony infrastructure.

How Does FreJun Teler Improve Call Delivery At Scale?

Delivery improvement comes from how infrastructure behaves under load.

FreJun Teler focuses on four areas that directly impact delivery rates.

1. Real-Time Media Streaming By Design

Teler uses real-time streaming instead of delayed media handling. As a result:

- Audio starts immediately after answer

- STT receives clean, continuous input

- TTS playback begins without pauses

This reduces early hang-ups and improves perceived call quality. Consequently, connect rates stay high even during large campaigns.

2. Event-Driven Call Control

Every call state is exposed as an event.

Therefore, applications can:

- Detect failed routes instantly

- Stop retries that waste capacity

- Adjust pacing in real time

Instead of waiting for reports, delivery logic adapts while calls are active.
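
As a generic illustration of adapting pacing from live events, the sketch below adjusts a concurrency limit with an additive-increase, multiplicative-decrease rule. It is not Teler's API: the class, the 10% failure threshold, and the step sizes are all assumptions made for the example.

```python
class AdaptivePacer:
    """Additive-increase, multiplicative-decrease control of concurrent calls."""

    def __init__(self, start: int = 50, floor: int = 5, ceiling: int = 500) -> None:
        self.limit = start
        self.floor = floor
        self.ceiling = ceiling

    def on_window(self, attempts: int, route_failures: int) -> int:
        """Update the concurrency limit from one observation window of events."""
        if attempts == 0:
            return self.limit
        failure_rate = route_failures / attempts
        if failure_rate > 0.10:  # assumed threshold for carrier pushback
            self.limit = max(self.floor, self.limit // 2)
        else:
            self.limit = min(self.ceiling, self.limit + 10)
        return self.limit


pacer = AdaptivePacer()
print(pacer.on_window(attempts=100, route_failures=2))   # healthy window
print(pacer.on_window(attempts=100, route_failures=30))  # failing window
```

The limit grows slowly while routes are healthy and is halved as soon as failures spike, so the campaign backs off before throttling turns into sustained call loss.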

3. High-Concurrency Without Media Degradation

Bulk calling often fails when concurrency increases.

Teler is designed to handle large numbers of simultaneous calls while maintaining stable streaming. Because media and signaling scale together, quality does not drop as volume increases.

4. Model-Agnostic AI Integration

Teler does not lock teams into a specific AI provider.

You can connect:

- Any LLM

- Any STT engine

- Any TTS voice

As a result, delivery logic remains flexible. Teams can optimize AI performance without touching the voice layer.

How Does Reliable Call Streaming Translate To Higher Connect Rates?

It is important to connect infrastructure decisions to measurable outcomes.

Reliable call streaming improves delivery because:

- Users hear audio immediately

- Conversations feel responsive

- Fewer calls drop before engagement

In bulk calling, even small improvements multiply.

For example, improving early audio start by a few hundred milliseconds can significantly reduce hang-ups across thousands of calls. Therefore, reliable call streaming is not an enhancement. It is a delivery requirement.

How Do Teams Measure Delivery Improvements With A Voice API?

To improve delivery, teams must measure the right signals.

A modern Voice API exposes delivery metrics programmatically. This allows teams to monitor performance continuously.

Key Delivery Metrics To Track

- Call connect rate

- Answer rate

- Time to first audio

- Drop-off before first response

- Retry success rate

- Call completion rate

Because these metrics are available in real time, systems can adjust behavior automatically.
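
Several of these metrics can be derived from per-call records. The sketch below uses a hypothetical `CallRecord` shape, and its definitions are assumptions for illustration: connect rate counts calls the network delivered, answer rate counts calls that were picked up, and completion rate is taken over answered calls.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class CallRecord:
    connected: bool                       # the network delivered the call
    answered: bool                        # a person or machine picked up
    first_audio_ms: Optional[int] = None  # delay from answer to first audio
    completed: bool = False               # call reached its intended end


def delivery_metrics(records: Sequence[CallRecord]) -> dict:
    """Compute headline delivery metrics from a batch of call records."""
    total = len(records)
    if total == 0:
        raise ValueError("no call records")
    answered = [r for r in records if r.answered]
    audio = [r.first_audio_ms for r in answered if r.first_audio_ms is not None]
    return {
        "connect_rate": sum(r.connected for r in records) / total,
        "answer_rate": len(answered) / total,
        "avg_time_to_first_audio_ms": sum(audio) / len(audio) if audio else None,
        "completion_rate": (
            sum(r.completed for r in answered) / len(answered) if answered else None
        ),
    }


sample = [
    CallRecord(connected=True, answered=True, first_audio_ms=200, completed=True),
    CallRecord(connected=True, answered=True, first_audio_ms=400),
    CallRecord(connected=True, answered=False),
    CallRecord(connected=False, answered=False),
]
print(delivery_metrics(sample))
```

Computing these over short rolling windows, rather than once per campaign, is what lets retry and pacing logic react while calls are still being placed.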

Why Measurement Improves Delivery

When metrics are visible:

- Retry logic improves

- Pacing becomes smarter

- Failures decrease over time

Therefore, delivery improves not only because of infrastructure, but also because systems learn from outcomes.

How Does A Voice API Improve Delivery For Different Bulk Calling Use Cases?

Delivery challenges vary by use case. However, the underlying mechanics remain the same.

Outbound Notifications And Alerts

- Immediate audio playback prevents confusion

- Clear delivery confirmation reduces repeat calls

Lead Qualification And Sales Calls

- Responsive conversations keep prospects engaged

- AI-driven dialogue reduces early hang-ups

Inbound Automation

- Faster answer times improve caller experience

- Accurate routing reduces dropped calls

In each case, a Voice API for bulk calling provides control that traditional systems cannot.

How Does A Voice API Compare To Traditional Calling Platforms?

Traditional calling platforms were built for human agents, not for AI-driven scale.

Key Differences

| Capability | Traditional Platforms | Voice API With AI |
| --- | --- | --- |
| Media Streaming | Limited | Real-time |
| Retry Logic | Static | Adaptive |
| AI Integration | Complex | Native |
| Scalability | Constrained | Elastic |
| Delivery Optimization | Manual | Programmatic |

Because of these differences, delivery rates decline on legacy systems as volume grows. In contrast, API-driven systems improve delivery as logic becomes smarter.

What Does A Scalable Voice API Stack Look Like In 2026?

By 2026, bulk calling will no longer be script-based.

The standard stack will include:

- A Voice API for bulk calling

- Real-time call streaming

- AI-driven decision logic

- Continuous delivery monitoring

In this model, delivery rates are not guessed. They are engineered.

FreJun Teler supports this model by providing a voice layer that scales with AI, not against it.

Final Thoughts: Why Delivery Improves When Voice Becomes Programmable

Improving bulk call delivery at scale is not a dialing problem; it is an infrastructure problem. When voice systems expose real-time events, stream audio reliably, and adapt dynamically to call outcomes, delivery rates improve. Voice APIs enable this shift by turning telephony into a programmable layer rather than a fixed pipeline. As AI voice agents become standard, delivery success increasingly depends on how well voice infrastructure supports low-latency streaming, intelligent pacing, and real-time decision-making.

FreJun Teler is built for this reality. It provides the AI-ready voice layer teams need to run large-scale, conversational calling systems with predictable delivery outcomes.

FAQs

- What is a Voice API for bulk calling?

A Voice API lets developers programmatically control calls, media streams, retries, and events for scalable outbound and inbound calling.

- Why do delivery rates drop at high call volumes?

Delivery drops due to carrier limits, delayed audio, static retries, and lack of real-time call state awareness.

- How does real-time streaming improve call delivery?

It ensures audio starts immediately after answer, reducing early hang-ups and improving perceived call reliability.

- Can Voice APIs work with any LLM or AI model?

Yes, modern Voice APIs act as transport layers and remain model-agnostic to LLM, STT, and TTS choices.

- What metrics define call delivery success?

Connect rate, answer rate, time to first audio, call drop rate, and retry success rate define delivery performance.

- How does retry logic affect delivery rates?

Adaptive retries based on call outcomes avoid wasted attempts and improve successful connections over time.

- Is bulk calling possible without AI?

Yes, but AI improves engagement, reduces hang-ups, and increases delivery effectiveness through dynamic conversations.

- Why is low latency critical in voice calling?

High latency creates silence or delays, causing users to hang up before engagement begins.

- How does Teler differ from traditional calling platforms?

Teler is built for real-time streaming and AI workflows, not static scripts or agent-only calling models.

- Who should use an AI-ready Voice API like Teler?

Teams building scalable voice agents, automated calling systems, or AI-driven customer interactions.