The world of real-time communication is in the midst of a seismic shift. The simple phone call has been transformed from a basic utility into a rich, programmable, and intelligent experience. For developers and product leaders, the voice calling SDK is the primary toolkit for building this new future. But as we look toward 2026, the definition of a “good” SDK is evolving at a breakneck pace.

The features that were once considered cutting-edge are now table stakes. The voice calling SDK of tomorrow is not just about connecting calls; it is about enabling seamless global reach, powering sophisticated AI, and providing a flawless developer experience.

Choosing the right voice platform is one of the most critical infrastructure decisions a modern, tech-forward company will make. The capabilities of your chosen SDK will directly enable, or limit, your ability to innovate and deliver a superior customer experience.

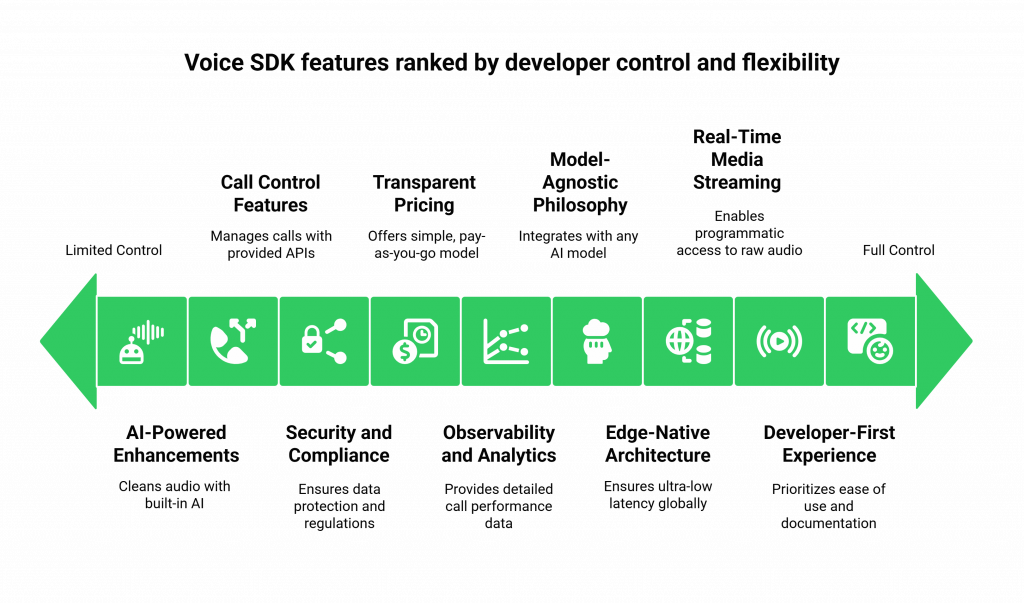

As we survey the 2026 voice API trends, a clear picture emerges of the non-negotiable features that separate the legacy providers from the true leaders. This guide breaks down the 10 voice calling SDK features that should be on every developer’s checklist.

Table of contents

- 1. Globally Distributed, Edge-Native Architecture for Ultra-Low Latency

- 2. Granular, Real-Time Media Streaming APIs

- 3. A Radically Developer-First Experience

- 4. Uncompromising, Carrier-Grade Reliability with Transparent SLAs

- 5. Advanced, AI-Powered Audio Quality Enhancements

- 6. A Truly Model-Agnostic Philosophy for AI Integration

- 7. Deep Observability and Granular Analytics

- 8. Robust, End-to-End Security and Global Compliance

- 9. Advanced Call Control and Conversational Features

- 10. Simple, Transparent, and Usage-Based Pricing

- Conclusion

- Frequently Asked Questions (FAQs)

1. Globally Distributed, Edge-Native Architecture for Ultra-Low Latency

Why it matters: In 2026, latency is the ultimate measure of quality. For both human-to-human and human-to-AI conversations, a noticeable delay is a fatal flaw. An SDK that relies on a centralized cloud architecture is obsolete.

What to look for: A truly modern voice calling SDK must be the front-end to a globally distributed network of Points of Presence (PoPs). This “edge-native” architecture ensures that a call is always handled at a data center physically close to the end-user, drastically reducing the round-trip time for audio packets. This is the single most important factor in achieving the sub-300ms latency required for natural-sounding conversations.
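
To make the distance argument concrete, here is a minimal sketch that estimates the best-case round-trip propagation delay from a caller to the closest PoP. The PoP names and coordinates are hypothetical, and the figure of roughly 200 km per millisecond for light in optical fibre is an approximation; real routes add routing, queuing, and processing delay on top.

```python
import math

# Hypothetical PoP locations as (latitude, longitude); a real provider publishes its own list.
POPS = {
    "frankfurt": (50.11, 8.68),
    "singapore": (1.35, 103.82),
    "virginia": (38.95, -77.45),
}

EARTH_RADIUS_KM = 6371.0
FIBRE_KM_PER_MS = 200.0  # approximation: light in fibre covers about 200 km per millisecond


def great_circle_km(a, b):
    """Haversine distance between two (lat, lon) points, in kilometres."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def propagation_rtt_ms(a, b):
    """Best-case round-trip time over fibre between two points, in milliseconds."""
    return 2 * great_circle_km(a, b) / FIBRE_KM_PER_MS


def nearest_pop(user, pops=POPS):
    """Return (name, best-case round-trip ms) for the PoP closest to the user."""
    name = min(pops, key=lambda n: great_circle_km(user, pops[n]))
    return name, propagation_rtt_ms(user, pops[name])


paris = (48.86, 2.35)
print(nearest_pop(paris))
print(propagation_rtt_ms(paris, POPS["singapore"]))
```

For a caller in Paris, the nearby PoP costs roughly 5 ms of round-trip propagation, while a PoP in Singapore would cost over 100 ms before any processing happens. That difference comes straight out of a sub-300ms budget.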

2. Granular, Real-Time Media Streaming APIs

Why it matters: The future of voice is intelligent. To build sophisticated voice communication tools for AI, developers need more than just call control; they need direct, programmatic access to the raw audio stream of a live call.

What to look for: The SDK must provide a robust, low-level API for real-time media streaming (often called media forking). This is the core capability that allows a developer to send the live audio to a Speech-to-Text (STT) engine for an AI to “hear,” and to inject a synthesized audio stream from a Text-to-Speech (TTS) engine for the AI to “speak.” Without this, building a truly interactive voice AI is impossible.
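
As a rough illustration of what a media fork hands to your code, the sketch below re-slices incoming audio chunks into fixed-size frames and forwards each one to a speech-to-text callback. The audio format (8 kHz, 16-bit linear PCM, 20 ms frames) and the names `frames`, `fork_to_stt`, and `send_to_stt` are assumptions for illustration, not any particular SDK's API.

```python
from typing import Callable, Iterable, Iterator

SAMPLE_RATE_HZ = 8000   # assumed narrowband telephony rate
BYTES_PER_SAMPLE = 2    # assumed 16-bit linear PCM
FRAME_MS = 20           # assumed packetisation interval
FRAME_BYTES = SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * FRAME_MS // 1000  # 320 bytes


def frames(chunks: Iterable[bytes], frame_bytes: int = FRAME_BYTES) -> Iterator[bytes]:
    """Re-slice arbitrarily sized network chunks into fixed-size audio frames."""
    buffer = b""
    for chunk in chunks:
        buffer += chunk
        while len(buffer) >= frame_bytes:
            yield buffer[:frame_bytes]
            buffer = buffer[frame_bytes:]
    # Any trailing partial frame is dropped in this sketch.


def fork_to_stt(chunks: Iterable[bytes], send_to_stt: Callable[[bytes], None]) -> int:
    """Forward every complete frame to an STT callback; return how many were sent."""
    count = 0
    for frame in frames(chunks):
        send_to_stt(frame)
        count += 1
    return count
```

In a real integration, `chunks` would be the live stream delivered by the platform and `send_to_stt` would write to your STT provider's streaming connection. The reverse direction (TTS audio injected into the call) follows the same framing logic.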

3. A Radically Developer-First Experience

Why it matters: The primary user of a voice calling SDK is a software developer. The entire experience, from the first line of code to debugging a production issue, must be designed to make their life easier and more productive. A clunky, poorly documented SDK is a direct tax on your engineering velocity.

What to look for:

- Comprehensive, Searchable Documentation: It must be more than just a list of endpoints. It should include clear guides, tutorials, and real-world code examples.

- A Rich Ecosystem of Idiomatic SDKs: The provider should offer well-maintained SDKs in all major languages (Python, Node.js, Java, Go, etc.) that feel natural to a developer working in that ecosystem.

- A Self-Service, API-Driven Platform: A developer should be able to sign up, get an API key, purchase a number, and make their first call in under 15 minutes, without ever having to speak to a salesperson.

4. Uncompromising, Carrier-Grade Reliability with Transparent SLAs

Why it matters: Voice is a mission-critical application. A dropped call is not just a bug; it is a lost sale or a frustrated customer. “Five nines” (99.999%) is the gold standard for a reason.

What to look for: Look for a provider that offers a financially backed Service Level Agreement (SLA) for uptime. This is a clear signal of their confidence in their infrastructure. The underlying platform should be built on a carrier-grade, highly redundant architecture. A recent ITIC survey on enterprise reliability found that for mission-critical applications, a single hour of downtime can cost a large enterprise over $300,000. Your voice platform must meet this high standard.
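
It helps to translate an uptime percentage into the downtime it actually permits. The short sketch below does that arithmetic; the function name is ours, and the calculation assumes a 365-day year.

```python
def downtime_budget_minutes(uptime_percent: float, period_days: float = 365.0) -> float:
    """Minutes of downtime permitted in the period at the given uptime percentage."""
    return (1 - uptime_percent / 100) * period_days * 24 * 60


for sla in (99.9, 99.99, 99.999):
    minutes = downtime_budget_minutes(sla)
    print(f"{sla}% uptime allows about {minutes:.1f} minutes of downtime per year")
```

At “five nines,” the budget is a little over five minutes per year, compared with almost nine hours at 99.9%.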

5. Advanced, AI-Powered Audio Quality Enhancements

Why it matters: The real world is a noisy place. Your users will be on calls from busy streets, in cars, or on spotty Wi-Fi connections. The SDK of 2026 must be intelligent enough to clean up this messy audio before it even reaches your application.

What to look for: The SDK should include or provide access to AI-powered features that run on the media server at the edge:

- Advanced Noise Cancellation: Algorithms that can identify and remove background noise (like traffic or a barking dog) from the audio stream in real-time.

- Intelligent Packet Loss Concealment (PLC): AI models that can predict and realistically fill in small gaps in the audio caused by network packet loss, making the audio sound much smoother.
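
For context on what the AI-based approach improves upon, here is a sketch of a classical, non-AI concealment baseline: when a frame is lost, replay the previous frame at reduced volume. This is deliberately not the AI technique described above. The frame format (16-bit PCM, 320-byte frames) and the attenuation factor are assumptions.

```python
from array import array


def conceal(frames, frame_bytes=320, attenuation=0.5):
    """Replace lost frames (None) with a faded copy of the previously output frame.

    Frames are assumed to be 16-bit linear PCM in native byte order.
    Consecutive losses fade further each time, trailing off toward silence.
    """
    out = []
    last = bytes(frame_bytes)  # start from silence if the very first frame is lost
    for frame in frames:
        if frame is None:
            samples = array("h", last)
            frame = array("h", (int(s * attenuation) for s in samples)).tobytes()
        out.append(frame)
        last = frame
    return out
```

Repetition like this is audible on gaps longer than a frame or two, which is the weakness that predictive models aim to address.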

Ready to build on a platform that defines the future of voice? Sign up for FreJun AI and explore our next-generation voice calling SDK.

6. A Truly Model-Agnostic Philosophy for AI Integration

Why it matters: The world of AI is moving at a blistering pace. The best LLM or TTS model today will almost certainly be superseded by a better one in six months. A voice calling SDK that locks you into its own proprietary, black-box AI models is a strategic dead end.

What to look for: Look for a deep, architectural commitment to being model-agnostic. The platform should be designed to be the “voice” for any AI “brain” you choose. It should provide open, flexible tools to connect to any STT, LLM, or TTS provider, giving you the freedom to always use the best-in-class models for your use case.
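
One way to picture model-agnostic design in application code is to have the call-handling logic depend only on small interfaces, so that any provider can be swapped in behind them. The sketch below uses Python's `typing.Protocol`; every class and method name here is hypothetical, and the stand-in providers exist only to make the example runnable.

```python
from typing import Protocol


class SpeechToText(Protocol):
    def transcribe(self, audio: bytes) -> str: ...


class LanguageModel(Protocol):
    def reply(self, prompt: str) -> str: ...


class TextToSpeech(Protocol):
    def synthesize(self, text: str) -> bytes: ...


class VoiceAgent:
    """Glue code that depends on the three interfaces, never on a specific vendor."""

    def __init__(self, stt: SpeechToText, llm: LanguageModel, tts: TextToSpeech):
        self.stt, self.llm, self.tts = stt, llm, tts

    def handle_turn(self, caller_audio: bytes) -> bytes:
        text = self.stt.transcribe(caller_audio)
        answer = self.llm.reply(text)
        return self.tts.synthesize(answer)


# Stand-in providers for demonstration; real ones would wrap a vendor's client library.
class EchoSTT:
    def transcribe(self, audio: bytes) -> str:
        return audio.decode("utf-8")


class UpperLLM:
    def reply(self, prompt: str) -> str:
        return prompt.upper()


class BytesTTS:
    def synthesize(self, text: str) -> bytes:
        return text.encode("utf-8")


agent = VoiceAgent(EchoSTT(), UpperLLM(), BytesTTS())
print(agent.handle_turn(b"hello"))
```

Replacing a model then means writing one new adapter class, with no change to `VoiceAgent` or to the telephony layer.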

7. Deep Observability and Granular Analytics

Why it matters: You cannot optimize what you cannot measure. When a user complains of a bad call, you need the tools to instantly diagnose the problem.

What to look for: The SDK and its underlying platform must provide a rich stream of data and analytics, all accessible via API:

- Detailed Call Detail Records (CDRs): Granular logs for every call, including all API requests and webhook events.

- Real-Time Quality Metrics: A live feed of key quality metrics such as jitter, packet loss, and Mean Opinion Score (MOS) for every active call.

- Comprehensive Webhook Eventing: Real-time notifications for every possible event in a call’s lifecycle, from “ringing” to “failed” to “quality warning.”

A report by Forrester on observability highlighted that organizations with mature observability practices are 2.4 times more likely to exceed their business goals.
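
To show what a jitter metric measures, the sketch below implements the running interarrival jitter estimate defined for RTP in RFC 3550 (section 6.4.1). It assumes you have send and receive timestamps for consecutive packets in the same unit (milliseconds here); how a given platform reports jitter may differ.

```python
def interarrival_jitter(send_times, recv_times):
    """Running jitter estimate per RFC 3550: J += (|D| - J) / 16.

    D is the change in transit time between consecutive packets.
    """
    jitter = 0.0
    for i in range(1, len(send_times)):
        d = (recv_times[i] - recv_times[i - 1]) - (send_times[i] - send_times[i - 1])
        jitter += (abs(d) - jitter) / 16
    return jitter


send = [0, 20, 40, 60]           # packets sent every 20 ms
steady = [50, 70, 90, 110]       # constant 50 ms transit time
spiky = [50, 80, 90, 110]        # second packet arrives 10 ms late

print(interarrival_jitter(send, steady))
print(interarrival_jitter(send, spiky))
```

The steady stream yields zero jitter. The spiky stream yields a positive value even though its first and last packets have the same 50 ms transit time.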

8. Robust, End-to-End Security and Global Compliance

Why it matters: A voice call is a stream of sensitive data. Protecting it is a non-negotiable legal and ethical requirement.

What to look for:

- Encryption by Default: Full support for both TLS (for signaling) and SRTP (for media) should be standard, not an optional extra.

- Compliance Certifications: The provider must demonstrate adherence to major global standards such as SOC 2 and ISO 27001, and must support the requirements of GDPR and HIPAA.
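
On the client side, “encryption by default” for signaling can be sketched with Python's standard `ssl` module. This covers only the TLS half (SRTP for media is negotiated by the media stack); the function name is ours, and TLS 1.2 as the floor is an assumption.

```python
import ssl


def signaling_tls_context() -> ssl.SSLContext:
    """Build a client TLS context for a signaling connection."""
    ctx = ssl.create_default_context()            # verifies certificates and hostnames by default
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse older protocol versions
    return ctx
```

A context like this can be passed to any standard-library client that accepts an `SSLContext`, so that a connection to a server with an invalid certificate fails instead of silently downgrading.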

9. Advanced Call Control and Conversational Features

Why it matters: A modern voice application is more than just a two-party call. It is a dynamic, multi-faceted interaction.

What to look for: The SDK should provide a rich set of APIs for advanced call control features that are essential for sophisticated applications:

- Seamless Call Transfer and Conferencing: The ability to add, remove, and manage multiple parties in a call programmatically.

- Intelligent Barge-In/Interruption: The ability to detect when a user starts speaking over the AI and to manage the conversational turn-taking gracefully.
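
Barge-in detection can be approximated with a simple energy check: if the caller's audio stays loud for several consecutive frames while the agent is speaking, treat it as an interruption. The sketch below is a deliberately simple stand-in for a proper voice activity detector; the threshold, the frame count, and the 16-bit PCM format are all assumptions.

```python
import math
from array import array


def rms(frame: bytes) -> float:
    """Root-mean-square level of a 16-bit PCM frame (native byte order)."""
    samples = array("h", frame)
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def detect_barge_in(caller_frames, agent_speaking, threshold=500.0, min_frames=3):
    """Return the index of the frame where barge-in is declared, or None.

    agent_speaking holds one boolean per caller frame, saying whether the
    agent's audio was playing at that moment.
    """
    loud_run = 0
    for i, (frame, speaking) in enumerate(zip(caller_frames, agent_speaking)):
        if speaking and rms(frame) >= threshold:
            loud_run += 1
            if loud_run >= min_frames:
                return i
        else:
            loud_run = 0
    return None
```

Requiring several consecutive loud frames avoids reacting to a cough or a door slam. Once barge-in is declared, the application would stop TTS playback and hand the turn back to the caller.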

10. Simple, Transparent, and Usage-Based Pricing

Why it matters: The business model must be as modern and flexible as the technology. Complex, multi-year contracts with hidden fees are a relic of the past.

What to look for: A simple, pay-as-you-go pricing model. You should only pay for what you actually use, with clear, per-minute rates for calls and transparent pricing for phone numbers. This aligns your costs directly with your business activity and eliminates the financial waste of paying for idle capacity.
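
A transparent model should be simple enough to reproduce in a few lines. The sketch below estimates a monthly bill from call durations and rented numbers; the rates are hypothetical, and rounding each call up to a whole minute is an assumption that varies by provider.

```python
import math


def monthly_cost(call_seconds, per_minute_rate, numbers, per_number_rate,
                 billing_increment_s=60):
    """Estimate a monthly bill: billed call minutes plus phone number rental.

    Each call is rounded up to the next billing increment (assumed 60 seconds).
    """
    billed_seconds = sum(
        math.ceil(s / billing_increment_s) * billing_increment_s for s in call_seconds
    )
    return billed_seconds / 60 * per_minute_rate + numbers * per_number_rate


# Three calls of 30 s, 61 s, and 120 s at a hypothetical 0.01 per minute,
# plus two numbers at a hypothetical 1.00 each per month.
print(round(monthly_cost([30, 61, 120], 0.01, 2, 1.00), 2))
```

The billing increment matters more than it looks: with per-minute rounding, the 61-second call above is billed as two minutes.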

This table provides a quick checklist of these 10 essential features.

| Feature Category | Essential Capability for 2026 |
| --- | --- |
| Performance | Globally Distributed, Edge-Native Architecture |
| AI Enablement | Granular, Real-Time Media Streaming APIs |
| Developer Experience | A Radically Developer-First Experience |
| Reliability | Uncompromising, Carrier-Grade Reliability with SLAs |
| Audio Quality | Advanced, AI-Powered Audio Quality Enhancements |
| Flexibility | A Truly Model-Agnostic Philosophy for AI |
| Visibility | Deep Observability and Granular Analytics |
| Security | Robust, End-to-End Security and Global Compliance |
| Functionality | Advanced Call Control and Conversational Features |
| Business Model | Simple, Transparent, and Usage-Based Pricing |

Conclusion

The voice calling SDK of 2026 is a far cry from the simple call-connection tools of the past. It is a sophisticated, multi-faceted platform that is at the very heart of the voice AI revolution. It is an intelligent, low-latency, and highly programmable bridge between the digital world of AI and the human world of conversation.

For any enterprise, developer, or product leader looking to build a truly modern and competitive voice experience, the ten features outlined above are not just a wish list; they are the new standard. Choosing an SDK that delivers on this new standard is the first and most important step in building the future of communication.

Want to see how the FreJun AI Voice Calling SDK measures up against these 10 critical features? Schedule a demo with our team at FreJun Teler.

Frequently Asked Questions (FAQs)

For an AI application, granular, real-time media streaming is arguably the most critical feature. It is the core capability that allows your AI to “hear” the caller and participate in a live conversation.

The biggest 2026 voice API trends are the move to edge-native architectures for ultra-low latency, the integration of AI-powered audio quality enhancements directly into the network, and an even deeper focus on providing a seamless, API-first developer experience.

The most important voice communication tools for AI are a low-latency voice network, a real-time media streaming API (for STT integration), and an API for injecting synthesized audio (for TTS integration).

Key SDK performance benchmarks to ask a provider about are their P95 latency (the value that 95% of calls stay at or below), their call setup time (the time from the API call to the phone ringing), and their Mean Opinion Score (MOS) for call quality.
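
To see why a percentile is more telling than an average, here is a small sketch that computes P95 with the nearest-rank method. The latency samples are made up for illustration, and providers may use a different percentile definition.

```python
import math


def percentile_nearest_rank(values, p):
    """Smallest sample such that at least p percent of samples are <= it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


# Made-up data: 90 calls at 100 ms and 10 calls at 400 ms.
latencies_ms = [100] * 90 + [400] * 10

print(sum(latencies_ms) / len(latencies_ms))      # mean
print(percentile_nearest_rank(latencies_ms, 95))  # P95
```

Here the mean is 130 ms, which looks healthy, while the P95 of 400 ms reveals that one call in ten is well outside a natural conversational range.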

Barge-in is the ability for a user to interrupt a voice agent (human or AI) while it is speaking. A modern SDK provides the real-time events necessary to detect this and manage the conversation gracefully.

The world of AI models is evolving incredibly fast. A model-agnostic platform gives you the freedom to always use the best-in-class AI models from any provider, preventing you from being locked into a single vendor’s potentially outdated technology.

Latency is the time an audio packet takes to travel from sender to receiver. Jitter is the variation in that delay from one packet to the next. High jitter means packets arrive in an uneven, spiky pattern, which can make audio sound choppy even if the average latency is low.

A developer-first experience means the entire platform is designed around the needs of a software developer. This includes self-service sign-up, clear and comprehensive documentation, robust SDKs, and a powerful, logical API as the primary interface.

By processing the call at a server that is physically closer to the end-user, an edge-native architecture dramatically reduces the distance that audio packets have to travel across the internet. This is the most effective way to lower latency and reduce the potential for packet loss.

The FreJun AI platform was architected from the ground up to embody these principles. Our Teler engine is a globally distributed, low-latency network. Our voice calling SDK and APIs are designed for a seamless developer experience and provide the deep, real-time media control required for sophisticated AI. We are built to be the foundational layer for the next generation of voice communication.