The world of AI is evolving rapidly, and multimodal AI agents are at the forefront of this transformation. Unlike traditional AI, these agents can process and respond across multiple modalities – voice, text, and visual inputs -allowing for natural, human-like interactions. For founders, product managers, and engineering leads, understanding the rise of multimodal AI is crucial for building intelligent, scalable solutions that automate complex tasks while maintaining context.

This blog explores the technical foundations, real-world applications, and future trends of multimodal AI agents, highlighting how platforms like FreJun Teler simplify deployment and accelerate AI adoption in enterprise environments.

What Are Multimodal AI Agents and Why Are They Important?

Multimodal AI agents are intelligent systems capable of processing and responding to multiple forms of data, such as text, audio, images, and video. Unlike single-modality AI, which operates solely on text or speech, these agents can interpret diverse inputs, understand context, and deliver outputs across several formats.

The growing importance of multimodal AI agents stems from their ability to create natural, human-like interactions and deliver personalized experiences. For businesses, this capability is a game-changer. Instead of rigid, text-only chatbots or basic voice systems, multimodal agents can interact in ways closer to how humans communicate. According to Gartner, 80% of enterprise software and applications will be multimodal by 2030, up from less than 10% in 2024, highlighting the accelerating shift towards integrated AI solutions.

Some reasons these agents are gaining traction include:

- Enhanced user experience: They can understand both spoken commands and visual cues, making interfaces more intuitive.

- Improved efficiency: They reduce the need for human intervention in complex tasks by understanding and responding across multiple channels.

- Cross-domain applicability: Industries such as healthcare, finance, education, and retail can leverage them for automated, context-aware assistance.

How Do Multimodal AI Agents Work Technically?

At a technical level, multimodal AI agents consist of several interconnected components that process input, reason over it, and generate output. Understanding this stack is essential for anyone aiming to implement or integrate these agents.

Core Components

- Large Language Models (LLMs) / AI Engine

- These models are responsible for reasoning, generating context-aware responses, and managing dialogue logic.

- Popular choices include GPT-based models, Claude, or open-source LLMs like LLaMA.

- LLMs act as the decision-making brain, handling both structured and unstructured data.

- These models are responsible for reasoning, generating context-aware responses, and managing dialogue logic.

- Speech-to-Text (STT)

- Converts voice inputs into text for the LLM to process.

- Accurate transcription is critical for understanding context and intent in real-time interactions.

- Converts voice inputs into text for the LLM to process.

- Text-to-Speech (TTS)

- Converts the AI’s textual output into natural, human-like speech.

- Voice quality, tone, and latency are key factors in user satisfaction.

- Converts the AI’s textual output into natural, human-like speech.

- Retrieval-Augmented Generation (RAG)

- Provides access to external knowledge sources to enrich responses.

- Ensures the AI agent does not rely solely on its pre-trained knowledge but can reference updated, relevant information.

- Provides access to external knowledge sources to enrich responses.

- Tool and Plugin Calls

- Enable the AI to perform actions beyond conversation, such as querying a database, triggering workflows, or integrating with third-party applications.

- Enable the AI to perform actions beyond conversation, such as querying a database, triggering workflows, or integrating with third-party applications.

Data Flow and Processing

A typical multimodal AI agent follows a layered architecture:

| Layer | Function |

| Input Layer | Captures data from multiple modalities: voice, text, images, or video. |

| Processing Layer | LLM/AI reasoning, context management, RAG integration, and tool execution. |

| Output Layer | Delivers output via text, speech, or visual responses, maintaining coherence across modalities. |

This structure ensures that the AI can process complex scenarios while maintaining contextual awareness, critical for professional and enterprise-grade applications.

Why Are Multimodal AI Agents Becoming the Future of AI?

The adoption of multimodal AI agents is accelerating across industries because they deliver unprecedented efficiency and engagement. Here’s why businesses are increasingly relying on them:

- Natural interaction patterns: Users can speak, type, or show visual cues, and the AI responds appropriately.

- Automation of complex tasks: Routine processes, customer support queries, and decision-making workflows can be handled without human intervention.

- Context-aware responses: Combining multiple modalities allows the AI to maintain a coherent understanding of the conversation, improving accuracy and relevance.

- Industry adoption:

- Healthcare: patient triage and automated advice.

- Retail: personalized shopping recommendations with voice and visual cues.

- Finance: automated customer support for queries and account management.

- Healthcare: patient triage and automated advice.

Transitioning from traditional single-modality AI to multimodal agents also reduces friction in user adoption. When users can interact naturally, whether through speech, text, or images, engagement and satisfaction improve significantly.

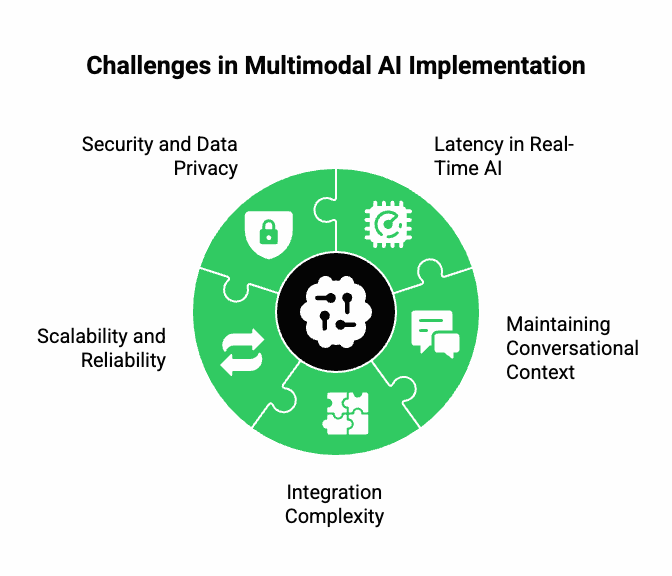

What Are the Common Challenges in Implementing Multimodal AI Agents?

Despite the advantages, implementing multimodal AI agents comes with several technical challenges:

- Latency in Real-Time Voice and Vision AI

- Processing audio, video, and text simultaneously can create delays.

- Ensuring low-latency responses is critical for live interactions, especially in customer service or sales.

- Processing audio, video, and text simultaneously can create delays.

- Maintaining Conversational Context

- Context tracking across multiple modalities requires advanced session management.

- Loss of context can lead to irrelevant or inconsistent responses.

- Context tracking across multiple modalities requires advanced session management.

- Integration Complexity

- Connecting LLMs, STT, TTS, RAG, and external tools into a seamless workflow demands robust APIs and infrastructure.

- Different providers may have varying protocols, making integration challenging.

- Connecting LLMs, STT, TTS, RAG, and external tools into a seamless workflow demands robust APIs and infrastructure.

- Scalability and Reliability

- Large-scale deployments require distributed systems to handle multiple simultaneous interactions.

- Systems must be resilient to network fluctuations or service interruptions.

- Large-scale deployments require distributed systems to handle multiple simultaneous interactions.

- Security and Data Privacy

- Handling sensitive data (audio, video, text) requires encryption, access controls, and compliance with regulatory standards.

- Handling sensitive data (audio, video, text) requires encryption, access controls, and compliance with regulatory standards.

Addressing these challenges is essential to deploy multimodal AI agents that deliver consistent and reliable performance.

Discover how AI transforms inbound calls, boosting efficiency, accuracy, and customer satisfaction in modern enterprise operations.

How Can FreJun Teler Simplify Multimodal AI Agent Deployment?

Implementing the technical infrastructure for real-time voice AI is often the most challenging part of multimodal AI deployment. This is where FreJun Teler provides a critical advantage.

What Teler Offers

- Global Voice Infrastructure: Teler connects any LLM or AI agent to cloud telephony or VoIP networks.

- Low-Latency STT/TTS Streaming: Ensures smooth, natural conversations without awkward pauses.

- Full Conversational Context: Maintains the state of ongoing dialogues, critical for complex interactions.

- Developer-First SDKs: Simplifies integration with web, mobile, or backend systems, reducing development overhead.

- Model-Agnostic Integration: Works with any LLM, TTS, or STT provider, offering flexibility for different enterprise needs.

Technical Benefits for Implementation

- Developers can focus on AI logic while Teler manages the voice infrastructure.

- Integration with Teler removes the need to handle complex telephony protocols.

- Scales seamlessly to support multiple simultaneous calls or interactions.

- Ensures secure, enterprise-grade deployment with high availability and reliability.

With Teler handling the voice infrastructure, multimodal AI agents can be applied across industries more effectively. Real-world applications now become feasible without the need to build telephony expertise in-house, enabling faster deployment and higher ROI.

How Can Multimodal AI Agents Be Applied in Real-World Scenarios?

Once the technical infrastructure is in place, multimodal AI agents can transform the way businesses interact with customers and automate internal processes. With voice, vision, and text working seamlessly together, organizations can deploy AI that is both intelligent and responsive.

1. Intelligent Voice Assistants

- AI receptionists or customer support agents capable of handling natural conversation.

- Examples of tasks:

- Answering frequently asked questions with context awareness.

- Escalating complex issues to human operators while maintaining context.

- Answering frequently asked questions with context awareness.

- Technical requirement: integration of LLM + STT + TTS + RAG to process multi-channel inputs and deliver consistent outputs.

2. Automated Outbound Campaigns

- Outbound calls, reminders, surveys, and feedback collection can be executed with human-like conversational design.

- Advantages:

- Scales campaigns without hiring additional staff.

- Personalizes interactions using AI to reference previous customer behavior or data.

- Scales campaigns without hiring additional staff.

- Key technologies:

- Voice AI for real-time interaction.

- LLM integration for dynamic, context-driven responses.

- Voice AI for real-time interaction.

3. Healthcare Applications

- AI agents can automate patient interactions:

- Scheduling appointments.

- Providing initial triage and instructions.

- Answering common medical queries with context-aware responses.

- Scheduling appointments.

- Integration of voice and vision AI allows agents to interpret spoken symptoms and visual reports, improving accuracy.

4. Enterprise Productivity Automation

- Internal support for HR, IT, and operations:

- Handling routine employee queries.

- Managing service tickets or workflow approvals.

- Providing instant, contextually aware guidance.

- Handling routine employee queries.

- Technical advantage: seamless STT/TTS pipelines with LLMs enable faster resolution while reducing human workload.

How Does FreJun Teler Support These Applications?

FreJun Teler serves as the foundation for real-time voice interactions in multimodal AI agents. While the LLM handles reasoning and decision-making, Teler manages the voice channel with high reliability and low latency.

Key Technical Advantages

- Seamless Integration with Any AI Model

- Teler is model-agnostic; it supports GPT-based models, Claude, LLaMA, and other proprietary or open-source LLMs.

- Developers retain full control over AI logic while Teler manages the voice delivery layer.

- Teler is model-agnostic; it supports GPT-based models, Claude, LLaMA, and other proprietary or open-source LLMs.

- Low-Latency Media Streaming

- Real-time STT/TTS pipelines ensure no perceptible delay between user input and AI response.

- Maintains conversation flow, reducing the risk of disengagement.

- Real-time STT/TTS pipelines ensure no perceptible delay between user input and AI response.

- Context Management

- Teler preserves the dialogue state for each session, critical for multimodal interactions spanning text, voice, and visual inputs.

- Teler preserves the dialogue state for each session, critical for multimodal interactions spanning text, voice, and visual inputs.

- Enterprise-Grade Security and Reliability

- Distributed infrastructure ensures high availability.

- Built-in security protocols protect sensitive data, including voice and textual interactions.

- Distributed infrastructure ensures high availability.

Table: Teler vs Traditional Telephony Platforms

| Feature | Traditional Telephony Platforms | FreJun Teler |

| LLM Integration | Limited | Full |

| STT/TTS Support | Basic | Advanced |

| Conversational Context Management | Minimal | Full |

| Low-Latency Streaming | Moderate | Optimized |

| Multi-Modal Input Support | No | Yes |

| Developer SDKs | Limited | Comprehensive |

What Are the Limitations of Competitors?

Most platforms in the voice domain focus exclusively on telephony or call management, without deep AI integration. Their limitations include:

- No LLM support: AI logic must be handled separately, increasing development complexity.

- Limited STT/TTS capabilities: Speech processing is often basic and cannot handle real-time, multi-turn conversations effectively.

- Minimal context tracking: Conversation memory is often stateless, leading to inconsistent responses.

- No multimodal support: Cannot process images, video, or integrate with other tools seamlessly.

FreJun Teler differentiates itself by bridging the gap between AI reasoning and real-time voice delivery, making multimodal AI agent deployment faster, scalable, and more reliable. Gartner predicts that by 2030, guardian agent technologies will capture 10 to 15% of the agentic AI market, underscoring the importance of secure and trustworthy AI systems.

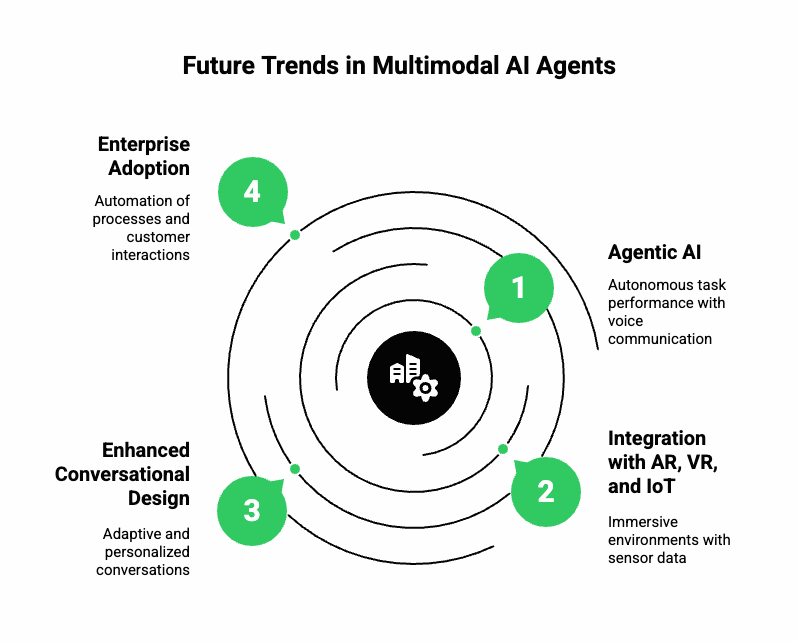

What Are the Future Trends in Multimodal AI Agents?

The rise of multimodal AI agents is only the beginning. The next generation of AI agents will leverage real-time, cross-modal interactions to perform increasingly complex tasks. Key trends include:

1. Agentic AI

- AI agents that autonomously perform multi-step tasks without human intervention.

- Integration with Teler ensures that these agents can communicate with humans over voice channels while maintaining context.

2. Integration with AR, VR, and IoT

- Multimodal agents will interact in immersive environments.

- Voice, vision, and sensor data integration will allow AI to provide richer, actionable insights.

3. Enhanced Conversational Design

- Conversations will become adaptive, context-aware, and personalized.

- Multimodal input allows AI agents to respond more naturally to human behavior, both in tone and content.

4. Enterprise Adoption

- Industries will increasingly rely on AI agents to automate internal processes, customer interactions, and outreach campaigns.

- Scalable and secure platforms like Teler will become essential infrastructure for multimodal AI deployments.

How Can Founders, Product Managers, and Engineering Leads Get Started?

Deploying multimodal AI agents may seem complex, but with the right approach, it becomes manageable. Here’s a roadmap:

- Identify Use Cases

- Determine which business processes can benefit from voice, vision, and text AI.

- Example: customer support, internal helpdesk, marketing outreach.

- Determine which business processes can benefit from voice, vision, and text AI.

- Select Your AI Stack

- Choose an LLM or AI agent that suits your task.

- Determine STT/TTS providers and RAG sources.

- Choose an LLM or AI agent that suits your task.

- Integrate Voice Infrastructure

- Use a platform like FreJun Teler to handle low-latency streaming, context tracking, and telephony integration.

- Use a platform like FreJun Teler to handle low-latency streaming, context tracking, and telephony integration.

- Design Conversational Flows

- Map out user interactions, edge cases, and fallback responses.

- Ensure that the AI can handle multi-turn, multi-modal conversations naturally.

- Map out user interactions, edge cases, and fallback responses.

- Pilot and Iterate

- Deploy small-scale tests to measure latency, accuracy, and user satisfaction.

- Refine AI responses and conversation design based on real-world feedback.

- Deploy small-scale tests to measure latency, accuracy, and user satisfaction.

- Scale Securely

- Expand usage across multiple channels or regions.

- Ensure security and compliance for enterprise-grade deployment.

- Expand usage across multiple channels or regions.

Learn the future of inbound call handling in 2025 and how AI-driven agents redefine customer service workflows.

Why Multimodal AI Agents Are a Strategic Investment

Implementing multimodal AI agents is not just a technical upgrade; it’s a strategic business decision:

- Operational efficiency: Automate repetitive or complex interactions.

- Enhanced customer experience: Provide fast, personalized, context-aware interactions.

- Scalability: Handle multiple channels, simultaneous calls, and diverse input types.

- Competitive differentiation: Early adopters gain a significant edge in customer engagement and internal productivity.

With platforms like FreJun Teler, integrating real-time voice with any LLM or AI agent becomes simpler and faster, allowing teams to focus on AI logic and conversational design rather than infrastructure.

Conclusion

The rise of multimodal AI agents represents a paradigm shift from traditional single-modality systems to intelligent, adaptive agents that interact naturally across voice, text, and visual inputs. For founders, product managers, and engineering leads, this is an opportunity to deploy AI that understands context, automates complex tasks, and scales seamlessly across business applications.

FreJun Teler serves as the critical backbone for these agents, offering enterprise-grade voice infrastructure that integrates effortlessly with any LLM, STT, TTS, and external tools. By managing real-time voice streaming, context tracking, and telephony integration, Teler allows teams to focus on building sophisticated multimodal AI experiences rather than infrastructure.

Schedule a demo with FreJun Teler today and launch human-like, real-time conversations in days – not months.

FAQs –

- What are multimodal AI agents?

AI systems that process text, voice, images, and video for natural, context-aware, multi-channel interactions. - How do multimodal agents differ from traditional AI?

They integrate multiple data types, maintaining context across modalities, unlike single-modality AI limited to text or voice. - What industries benefit most from multimodal AI?

Healthcare, retail, finance, customer service, and enterprise operations gain efficiency, personalization, and scalability. - Why is real-time voice integration important?

Ensures seamless conversation flow, reduces latency, and delivers human-like interactions critical for customer engagement. - Can any LLM be integrated with Teler?

Yes, Teler supports model-agnostic LLM integration, including GPT, Claude, LLaMA, and proprietary or open-source models. - How does Teler manage conversational context?

Teler preserves dialogue state across sessions, enabling consistent, multi-turn, multimodal conversations. - What is RAG in multimodal AI?

Retrieval-Augmented Generation fetches external knowledge to enhance AI responses beyond pre-trained capabilities. - How does Teler help scale AI deployments?

Provides enterprise-grade voice infrastructure, low-latency streaming, and SDKs for web, mobile, and backend integration. - Are multimodal AI agents secure for enterprise use?

Yes, they employ encryption, access controls, and compliance protocols, ensuring safe handling of sensitive data. - What’s the future of multimodal AI agents?

Real-time agentic AI, AR/VR integration, adaptive conversational design, and enterprise adoption will drive innovation and efficiency.