For years, the world of advanced AI has felt like a walled garden. The most powerful models, the most human-like voices, and the most intelligent “brains” were proprietary, locked away behind expensive, closed-source APIs. For developers, this meant a trade-off: either pay a premium to access the best technology or settle for less powerful alternatives.

That entire paradigm is collapsing. We are in the golden age of open-source AI. A new wave of incredibly powerful, community-driven models is not just catching up to proprietary counterparts; on some tasks, these models are surpassing them. This revolution is putting state-of-the-art tools into the hands of every developer, creating an unprecedented opportunity to build custom, powerful, and cost-effective open source voice agents.

This guide is for the builders. We will explore the open-source landscape, break down the essential components you need to get started, and show you how to assemble your very own voicebot from the ground up.


Why Should Developers Consider Open Source Voice Agents?

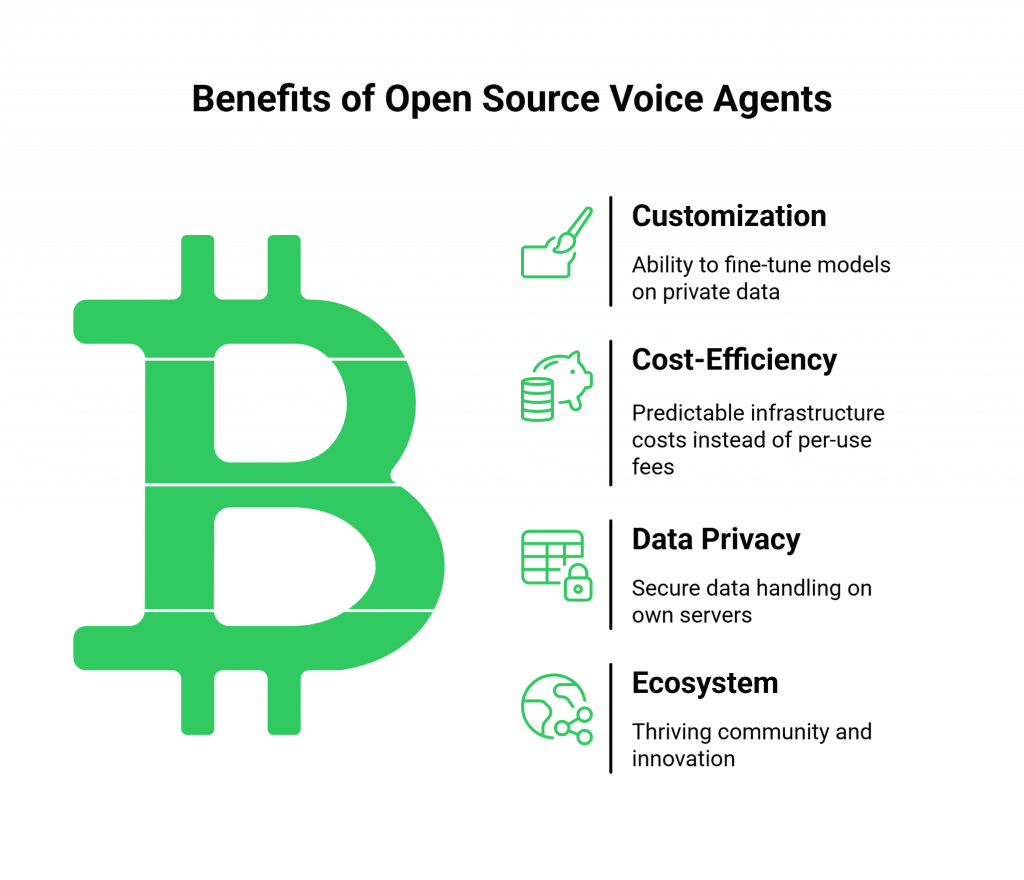

The decision to build open source voice agents is more than just a technical choice; it’s a strategic one. It’s about taking back control and building an AI that is truly yours.

- Unmatched Customization and Control: An open model is a blank canvas. You can fine-tune it on your own private data, making it a true expert in your specific domain. Proprietary models offer far less control: at best a provider-hosted fine-tuning service, and never access to the model weights themselves.

- Cost-Efficiency at Scale: The cost model is fundamentally different. Instead of paying a per-use or per-token fee for every single API call, you control the hardware. This shifts the cost from a variable operational expense to a more predictable infrastructure cost, which can be dramatically more affordable for high-volume applications.

- Ultimate Data Privacy: For businesses that handle sensitive information, this is the most compelling reason of all. By running your AI models on your own servers, you keep your users’ transcripts, prompts, and responses inside an environment you control instead of sending them to a third-party model provider.

- A Future-Proof, Thriving Ecosystem: The open-source AI community is one of the most vibrant and fastest-moving in all of tech. A recent survey by Andreessen Horowitz found that a majority of enterprises are already using open-source models, which is fueling an explosion of innovation. By building on open standards, you are future-proofing your work and tapping into a global community of builders.
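To make the cost argument concrete, here is a back-of-the-envelope sketch comparing a per-token API bill with a fixed monthly GPU bill. Every price in it is a hypothetical placeholder, not a quote from any provider; substitute your own numbers.

```python
# Back-of-the-envelope break-even between per-token API pricing and
# self-hosted GPUs. All prices are hypothetical placeholders.

def monthly_api_cost(tokens_per_month: float, usd_per_million_tokens: float) -> float:
    """Variable cost: grows linearly with usage."""
    return tokens_per_month / 1_000_000 * usd_per_million_tokens


def break_even_tokens(gpu_usd_per_month: float, usd_per_million_tokens: float) -> float:
    """Monthly token volume at which a fixed GPU bill equals the API bill."""
    return gpu_usd_per_month / usd_per_million_tokens * 1_000_000


if __name__ == "__main__":
    gpu_cost = 2_000.0   # hypothetical: one GPU server, USD per month
    api_price = 5.0      # hypothetical: USD per million tokens
    threshold = break_even_tokens(gpu_cost, api_price)
    print(f"Break-even at {threshold:,.0f} tokens/month")
    for volume in (100e6, 400e6, 1_000e6):
        api = monthly_api_cost(volume, api_price)
        print(f"{volume:>15,.0f} tokens: API ${api:,.0f} vs GPU ${gpu_cost:,.0f}")
```

Below the threshold the per-token API is cheaper; above it, the fixed GPU bill wins. The sketch leaves out engineering time, redundancy, and GPU utilization, all of which move the real break-even point.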


What Are the Core Components of an Open Source Voice Agent?

Building an open source voice agent is like assembling a high-performance “dream team” of specialized AI models. You get to pick the best player for each position.

- The “Brain” (The LLM): This is the core reasoning engine. The Large Language Model is responsible for understanding the user’s intent and formulating an intelligent response.

- The “Ears” (Speech-to-Text – STT): This is the model that listens to the raw audio of the caller’s voice and transcribes it into text for the brain to process.

- The “Mouth” (Text-to-Speech – TTS): This is the model that takes the brain’s text response and synthesizes it into natural-sounding, audible speech.

- The Communication Network (The Voice Infrastructure): This team of AI experts is useless if they can’t connect to a live phone call. This is the role of the voice infrastructure. A platform like FreJun AI is the essential communication network. It handles all the complex telephony and provides the ultra-low-latency, real-time audio streaming that allows your AI team to have a conversation.
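One way to keep each “player” swappable is to write the orchestration against small interfaces rather than against concrete models. The sketch below uses Python’s `typing.Protocol`; the method names `transcribe`, `respond`, and `synthesize` are hypothetical and not part of any library, and the toy classes stand in for real models so the example runs on its own.

```python
from typing import Protocol


class SpeechToText(Protocol):
    """The 'ears': raw audio in, transcript out."""
    def transcribe(self, audio: bytes) -> str: ...


class LanguageModel(Protocol):
    """The 'brain': user text in, reply text out."""
    def respond(self, user_text: str) -> str: ...


class TextToSpeech(Protocol):
    """The 'mouth': reply text in, synthesized audio out."""
    def synthesize(self, text: str) -> bytes: ...


def handle_turn(audio: bytes, stt: SpeechToText, llm: LanguageModel, tts: TextToSpeech) -> bytes:
    """One conversational turn: the voice infrastructure delivers `audio`
    and plays back whatever bytes this function returns."""
    transcript = stt.transcribe(audio)
    reply = llm.respond(transcript)
    return tts.synthesize(reply)


# Toy stand-ins (hypothetical) so the sketch runs without any models installed.
class EchoSTT:
    def transcribe(self, audio: bytes) -> str:
        return audio.decode("utf-8")


class UppercaseLLM:
    def respond(self, user_text: str) -> str:
        return f"You said: {user_text.upper()}"


class BytesTTS:
    def synthesize(self, text: str) -> bytes:
        return text.encode("utf-8")


if __name__ == "__main__":
    print(handle_turn(b"hello", EchoSTT(), UppercaseLLM(), BytesTTS()))
```

With this structure, swapping Whisper for another STT model, or Llama 3 for Gemma 2, means writing one new adapter class rather than touching the turn logic.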

Ready to start building with the power of open source? Sign up & Explore FreJun AI’s developer-first voice API


Which Open Source Tools Should You Know in 2024/2025?

The open-source ecosystem is vast. Here are some of the best-in-class tools that developers are using to build powerful open source voice agents.

“Brain” (LLMs)

- Meta’s Llama 3: A state-of-the-art family of models offering a fantastic balance of performance and size. The 8B version is a powerhouse of efficiency, while the 70B version rivals top proprietary models.

- Google’s Gemma Family: Models like Gemma 2 are designed for top-tier performance with a focus on efficiency, making them a highly flexible option.

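- Mistral AI’s Models: Known for their high performance, especially Mixtral, which uses a sparse “Mixture of Experts” (MoE) architecture. A router sends each token to only 2 of 8 expert sub-networks per layer, so only a fraction of the model’s total parameters is active for any given token, which keeps inference fast relative to the model’s overall size.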
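For readers new to Mixture of Experts, the core routing idea fits in a few lines: a gate scores every expert, only the top-k experts run, and their outputs are blended using a softmax over just those k scores. The toy below uses plain floats and scalar “experts” purely for illustration; a real MoE layer works on tensors, with a learned router and feed-forward experts.

```python
import math
from typing import Callable, Sequence


def top_k_gate(logits: Sequence[float], k: int) -> dict[int, float]:
    """Keep the k highest-scoring experts and softmax over just those scores."""
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    m = max(logits[i] for i in chosen)  # subtract the max for numerical stability
    exps = {i: math.exp(logits[i] - m) for i in chosen}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


def moe_forward(x: float, gate_logits: Sequence[float],
                experts: Sequence[Callable[[float], float]], k: int = 2) -> float:
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    weights = top_k_gate(gate_logits, k)
    return sum(w * experts[i](x) for i, w in weights.items())


if __name__ == "__main__":
    # Four toy experts; a real model has learned feed-forward networks here.
    experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x - 1, lambda x: x * x]
    logits = [0.1, 2.0, -1.0, 2.0]  # in a real model, computed by the router from x
    print(top_k_gate(logits, k=2))            # experts 1 and 3, each weighted 0.5
    print(moe_forward(3.0, logits, experts))  # 0.5 * 6 + 0.5 * 9 = 7.5
```

The compute saving comes from the fact that, with k = 2, only two expert functions are evaluated per input no matter how many experts the layer holds.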

“Ears” (STT)

- OpenAI’s Whisper: Whisper has become the gold standard for open-source STT. It offers remarkable accuracy across a wide range of languages and accents.
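Whisper expects 16 kHz mono audio as floating-point samples, while phone calls are often carried as 8 kHz G.711 audio. Assuming your voice platform delivers 8 kHz μ-law frames (check its documentation for the actual codec and sample rate), a minimal conversion step might look like this. The linear-interpolation upsampler is a deliberate simplification; a production system would use a proper resampler with filtering.

```python
def ulaw_to_pcm16(data: bytes) -> list[int]:
    """Decode G.711 mu-law bytes into 16-bit linear PCM samples."""
    samples = []
    for byte in data:
        u = ~byte & 0xFF                 # mu-law bytes are stored bit-inverted
        sign = u & 0x80
        exponent = (u >> 4) & 0x07
        mantissa = u & 0x0F
        magnitude = (((mantissa << 3) + 0x84) << exponent) - 0x84
        samples.append(-magnitude if sign else magnitude)
    return samples


def upsample_2x(samples: list[int]) -> list[float]:
    """8 kHz -> 16 kHz by inserting the midpoint between neighbours (naive)."""
    out: list[float] = []
    for i, s in enumerate(samples):
        out.append(float(s))
        nxt = samples[i + 1] if i + 1 < len(samples) else s
        out.append((s + nxt) / 2)
    return out


def telephony_to_whisper_input(data: bytes) -> list[float]:
    """mu-law at 8 kHz -> float samples in [-1, 1) at 16 kHz."""
    return [s / 32768.0 for s in upsample_2x(ulaw_to_pcm16(data))]


if __name__ == "__main__":
    frame = bytes([0xFF, 0x80, 0x00])    # silence, max positive, max negative
    print(ulaw_to_pcm16(frame))           # [0, 32124, -32124]
    print(len(telephony_to_whisper_input(bytes(160))))  # 20 ms at 8 kHz -> 320 samples
```

With the `openai-whisper` package, the resulting list can be wrapped in `numpy.array(..., dtype=numpy.float32)` and passed to `model.transcribe(...)`, which accepts an audio array as well as a file path.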

“Mouth” (TTS)

- Coqui TTS: A popular and highly flexible open-source library that allows you to train your own custom voices or use a variety of pre-trained, high-quality ones.

- Piper: A fast and lightweight neural text-to-speech system that is optimized for performance on local hardware.
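Streaming the “mouth” output back into a call usually means slicing synthesized audio into small fixed-duration frames rather than sending one large file. The sketch below assumes 16-bit mono PCM, an 8 kHz rate, and 20 ms frames; the format your voice platform actually expects is an assumption to check against its documentation. TTS engines such as Piper produce audio at the chosen voice’s own sample rate, so resampling may be needed before framing.

```python
def frame_pcm16(pcm: bytes, sample_rate: int = 8000, frame_ms: int = 20) -> list[bytes]:
    """Split 16-bit mono PCM into fixed-duration frames, zero-padding the last one."""
    bytes_per_frame = sample_rate * frame_ms // 1000 * 2   # 2 bytes per sample
    frames = []
    for start in range(0, len(pcm), bytes_per_frame):
        chunk = pcm[start:start + bytes_per_frame]
        if len(chunk) < bytes_per_frame:
            chunk += b"\x00" * (bytes_per_frame - len(chunk))  # pad with silence
        frames.append(chunk)
    return frames


if __name__ == "__main__":
    one_second = b"\x01\x00" * 8000                  # 8000 samples at 8 kHz
    frames = frame_pcm16(one_second + b"\x01\x00")   # plus one extra sample
    print(len(frames), len(frames[0]))               # 51 320
```

Fixed-size frames let the sender pace playback at real time (one frame every 20 ms here) and stop immediately if the caller interrupts.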

How Do You Assemble Your First Open Source Voice Agent?

Bringing these components together is an exciting engineering challenge. Here’s a high-level blueprint.

- Deploy Your “Brain”: The first step is to host your chosen LLM. This will typically involve deploying the model on a cloud server with a powerful GPU and exposing it as a secure API endpoint.

- Set Up Your “Senses”: Similarly, you will deploy your chosen STT and TTS models, making them accessible via APIs within your private network.

- Establish the Voice Infrastructure: This is the bridge to the outside world. With a developer-first platform like FreJun AI, you can get a phone number and configure a webhook in minutes. This is the “front door” for every incoming call.

- Write the Orchestration Logic: Your backend application code is the conductor. It’s a real-time loop that receives the live audio from FreJun AI, forwards it to your self-hosted STT, sends the transcript to your self-hosted LLM, sends the LLM’s response to your self-hosted TTS, and streams the final audio back to the user via FreJun AI.
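The orchestration loop in the last step can be sketched with `asyncio`: one coroutine consumes incoming audio frames, and each completed utterance flows through STT, the LLM, and TTS before the reply audio is queued for sending. Everything that touches the outside world here is a hypothetical stand-in: `fake_stt`, `fake_llm`, and `fake_tts` replace calls to your self-hosted model endpoints, and the in-memory queues replace FreJun AI’s audio stream, whose real protocol you would take from its documentation. End of utterance is signalled by a `None` marker, a simplification of real voice activity detection.

```python
import asyncio


# Hypothetical stand-ins for self-hosted model endpoints.
async def fake_stt(audio: bytes) -> str:
    await asyncio.sleep(0)              # pretend network/model latency
    return audio.decode("utf-8")


async def fake_llm(prompt: str) -> str:
    await asyncio.sleep(0)
    return f"Echo: {prompt}"


async def fake_tts(text: str) -> bytes:
    await asyncio.sleep(0)
    return text.encode("utf-8")


async def orchestrate(inbound: asyncio.Queue, outbound: asyncio.Queue) -> None:
    """Buffer caller audio until an utterance ends, then run STT -> LLM -> TTS."""
    buffer = bytearray()
    while True:
        frame = await inbound.get()
        if frame is None:               # hypothetical end-of-utterance marker
            if buffer:
                transcript = await fake_stt(bytes(buffer))
                reply = await fake_llm(transcript)
                await outbound.put(await fake_tts(reply))
                buffer.clear()
            continue
        if frame == b"":                # hypothetical end-of-call marker
            await outbound.put(None)
            return
        buffer.extend(frame)


async def demo() -> list[bytes]:
    inbound: asyncio.Queue = asyncio.Queue()
    outbound: asyncio.Queue = asyncio.Queue()
    for item in (b"hel", b"lo", None, b"bye", None, b""):
        inbound.put_nowait(item)
    await orchestrate(inbound, outbound)
    replies = []
    while (audio := outbound.get_nowait()) is not None:
        replies.append(audio)
    return replies


if __name__ == "__main__":
    print(asyncio.run(demo()))   # [b'Echo: hello', b'Echo: bye']
```

This version handles one turn at a time to keep the data flow easy to follow; a production loop would typically stream partial results between stages and handle the caller interrupting mid-reply.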


Conclusion

The era of being locked into a single AI provider is over. The rise of powerful open source voice agents has put the power back into the hands of developers. You now have the freedom to build a voice AI that is more customized, more secure, and more cost-effective than ever before.

By combining the best of the open-source world with the robust, low-latency, and flexible infrastructure of a platform like FreJun AI, you have the complete toolkit to build the next generation of voicebots.

Want to learn more about the infrastructure that powers the most flexible open source voice agents? Schedule a demo with FreJun AI today.


Frequently Asked Questions (FAQs)

Open source voice agents are conversational AI systems built using publicly available, open-source models for their core components (like the LLM, STT, and TTS). This gives developers full control over the code, the data, and the hardware.

The main advantages are customization (you can fine-tune the model), cost control (you pay for hardware, not per-use fees), and data privacy (you can host it on your own servers).

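For a real-time voice application, you will need a server with one or more powerful GPUs. The exact requirements depend mainly on the size of the LLM you choose and the numerical precision you run it at; quantized models need considerably less GPU memory.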
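A common rule of thumb for sizing that GPU is that the model weights alone need roughly parameters × bytes per parameter: 2 bytes at 16-bit precision, about 0.5 bytes with 4-bit quantization. The helper below applies that rule; the 20% overhead allowance for the KV cache and runtime is a rough assumption, and real usage varies with context length, batch size, and serving framework.

```python
def estimate_vram_gb(params_billion: float, bits_per_param: int, overhead: float = 0.2) -> float:
    """Rough GPU memory estimate: weights plus a fractional allowance for KV cache/runtime."""
    weight_gb = params_billion * bits_per_param / 8   # 1e9 params x bytes/param ~= GB
    return round(weight_gb * (1 + overhead), 1)


if __name__ == "__main__":
    for name, size in (("Llama 3 8B", 8), ("Llama 3 70B", 70)):
        for bits in (16, 4):
            print(f"{name} @ {bits}-bit: ~{estimate_vram_gb(size, bits)} GB")
```

Treat the output as a starting point for choosing a GPU tier, then confirm with the memory usage your serving stack actually reports.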

Fine-tuning is the process of taking a pre-trained open model and training it further on your own smaller, specialized dataset. This can make the model an expert in your specific industry’s jargon or your company’s tone of voice.
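Fine-tuning data is often prepared as JSON Lines, one example conversation per line. The `messages` layout with `role`/`content` keys shown here is a widely used convention, but the exact schema depends on the training tool you choose, so treat the field names as an assumption. The sample dialogue and the `train.jsonl` filename are invented for illustration.

```python
import json
from typing import Iterable


def to_chat_example(system: str, question: str, answer: str) -> dict:
    """One training example in a role/content 'messages' layout (schema is an assumption)."""
    return {
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


def write_jsonl(examples: Iterable[dict], path: str) -> int:
    """Write one JSON object per line; returns the number of lines written."""
    count = 0
    with open(path, "w", encoding="utf-8") as f:
        for example in examples:
            f.write(json.dumps(example, ensure_ascii=False) + "\n")
            count += 1
    return count


if __name__ == "__main__":
    system = "You are a friendly support agent for a hypothetical clinic."
    pairs = [
        ("Can I move my appointment?", "Of course. Which day works better for you?"),
        ("Do you take walk-ins?", "We do on weekday mornings, but booking ahead is faster."),
    ]
    n = write_jsonl((to_chat_example(system, q, a) for q, a in pairs), "train.jsonl")
    print(f"wrote {n} examples")
```

Writing the answers in your company’s own voice is what teaches the model that tone; the quality and consistency of these examples matter more than their sheer number.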

The choice depends on your needs. For maximum performance, you might choose a larger model like Llama 3 (70B) or Gemma 2 (27B). For efficiency on less powerful hardware, you might choose a smaller model like Llama 3 (8B) or one from the Phi-3 family.

It is more technically involved than using an all-in-one platform, as it requires you to manage your own servers and deployments. However, for a developer comfortable with cloud infrastructure and APIs, it is a very achievable and rewarding project.

A strong option is a world-class open-source STT model like OpenAI’s Whisper. Because it was trained on a massive and diverse dataset (roughly 680,000 hours of multilingual audio), it delivers strong performance across many accents.

FreJun AI provides the essential, cloud-based voice infrastructure. It handles the telephony (connecting to the phone network) and the ultra-low-latency streaming of audio between the user and your self-hosted application, acting as the secure and reliable bridge.

Yes, absolutely. RAG (Retrieval-Augmented Generation) is a technique that works well with open models. You can connect your self-hosted LLM to a self-hosted vector database so that its answers are grounded in your company’s specific knowledge rather than only in its training data.
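The retrieval half of RAG can be illustrated without any external services. The sketch below uses a bag-of-words vector and cosine similarity as a hypothetical stand-in for a real embedding model and vector database, and the prompt template is likewise an assumption. The principle carries over: find the most relevant passages and place them in front of the LLM.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def grounded_prompt(query: str, documents: list[str], k: int = 1) -> str:
    """Build an LLM prompt that puts the retrieved passages before the question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return (f"Answer using only the context below.\nContext:\n{context}\n"
            f"Question: {query}\nAnswer:")


if __name__ == "__main__":
    docs = [
        "Our support line is open Monday to Friday, 9am to 5pm.",
        "Refunds are processed within 5 business days.",
        "Premium plans include a dedicated account manager.",
    ]
    print(grounded_prompt("How long do refunds take?", docs))
```

In a real deployment, `embed` would call your embedding model and `retrieve` would query the vector database; the prompt-building step stays essentially the same.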

Latency can be very low in this setup. Because the AI models run on your own hardware, which can be hosted in a cloud region close to your users, you avoid routing every request through a public, multi-tenant AI service; this often results in lower latency.
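Because the stages run one after another, the delay a caller perceives is roughly the sum of each stage’s time to first output plus the network hops. The sketch below adds up such a budget; every number in it is a hypothetical placeholder to replace with your own measurements, not a benchmark of any model or of FreJun AI.

```python
from typing import Mapping


def total_latency_ms(stages: Mapping[str, float]) -> float:
    """Time from the caller finishing speaking to hearing the first reply audio."""
    return sum(stages.values())


def slowest_stage(stages: Mapping[str, float]) -> str:
    """The stage with the largest share of the budget, i.e. the first thing to optimize."""
    return max(stages, key=stages.__getitem__)


if __name__ == "__main__":
    budget = {                       # hypothetical placeholder values, in ms
        "network_in": 40,
        "end_of_speech_detection": 200,
        "stt_final_transcript": 150,
        "llm_first_token": 250,
        "tts_first_audio": 100,
        "network_out": 40,
    }
    print(f"total ~ {total_latency_ms(budget)} ms; biggest lever: {slowest_stage(budget)}")
```

Hosting close to your users shrinks the two network terms, while the model terms depend on your hardware and model size.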