In real-world settings, voice agents encounter a wide range of background noise that directly impacts their performance. Offices, factories, retail spaces, and public areas rarely offer a perfectly quiet environment. Even home environments can introduce unpredictable noises like traffic, pets, or appliances. These disturbances can cause speech recognition systems to misinterpret or completely miss user input, breaking the flow of conversation.

Modern speech models can exceed 97% transcription accuracy on clean audio, but accuracy degrades significantly in realistic noisy conditions, where background noise compounds recognition errors.

Key challenges include:

- Overlapping speech: When multiple people speak at the same time, STT engines often fail to separate the voices accurately.

- Environmental noise: Sounds such as traffic, construction, or background music can reduce transcription accuracy.

- Reverberation: Rooms with reflective surfaces distort the original speech signal.

- Low-quality microphones: Inexpensive or mobile devices may not capture audio clearly.

The consequences of these challenges are significant:

- Incorrect transcription leads to misunderstood commands or failed task execution.

- Latency in response can disrupt natural conversational flow.

- LLMs or AI agents may lose contextual understanding, resulting in irrelevant or incorrect answers.

Traditional voice platforms, while reliable for basic call handling, often fail in these scenarios. Most of them are designed for clear, controlled audio rather than noisy, unpredictable environments. Therefore, implementing a voice recognition SDK with noise-handling capabilities becomes essential.

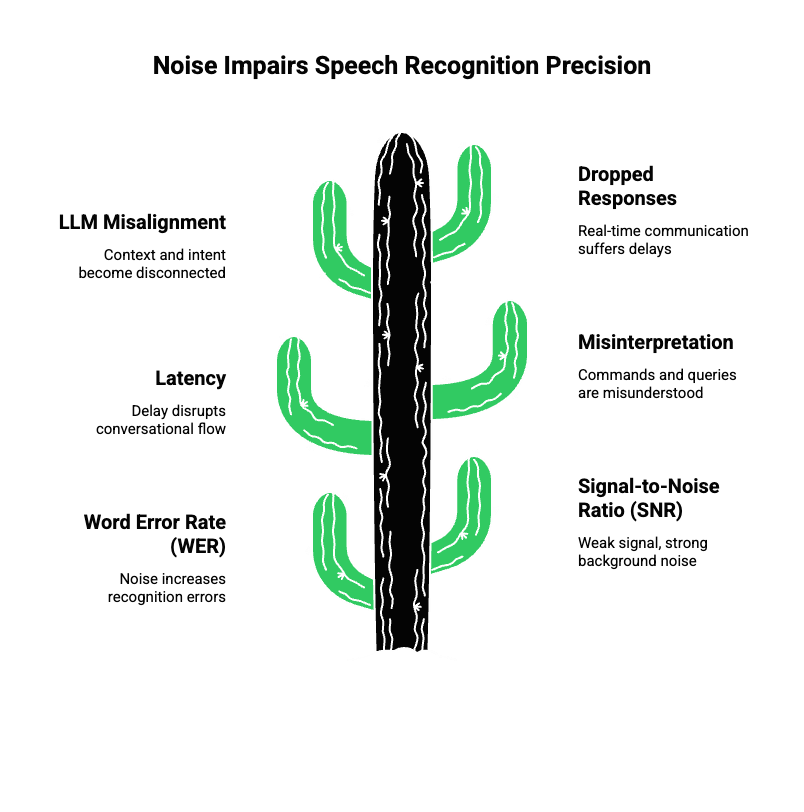

How Does Noise Affect High-Precision Speech Recognition?

Speech recognition accuracy is measured using several key metrics:

- Word Error Rate (WER): The number of substituted, deleted, and inserted words divided by the number of words in the reference transcript. Noise increases WER significantly if not managed properly.

- Signal-to-Noise Ratio (SNR): Lower SNR means the speech signal is weaker compared to background noise. High-precision systems aim to function effectively even with low SNR.

- Latency: Delay between spoken input and system response. High latency breaks conversational flow and reduces usability.
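As a rough illustration of the first two metrics, the sketch below computes WER as a word-level edit distance and SNR in decibels from average power. The function names `word_error_rate` and `snr_db` are illustrative and do not come from any particular SDK; production evaluations usually also normalize punctuation and numerals before scoring.

```python
import math


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / reference word count."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # Word-level Levenshtein distance, keeping only the previous row.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1] / len(ref)


def snr_db(signal_power: float, noise_power: float) -> float:
    """Signal-to-noise ratio in decibels from average signal and noise power."""
    if signal_power <= 0 or noise_power <= 0:
        raise ValueError("powers must be positive")
    return 10.0 * math.log10(signal_power / noise_power)


# One substituted word out of three gives a WER of about 0.33.
print(round(word_error_rate("check my order", "cancel my order"), 2))
# A signal with 100x the noise power sits at 20 dB SNR.
print(round(snr_db(100.0, 1.0), 1))
```

A single wrong word in a three-word command already yields a WER of one third, which is why short commands are especially sensitive to noise.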

Noise can cause:

- Misinterpretation of commands and queries.

- Misalignment between LLM context and user intent.

- Dropped or delayed responses in real-time communication.

For example, consider a customer calling a virtual assistant from a noisy environment. Without robust noise handling, the agent might misinterpret “Check my order” as “Cancel my order,” leading to errors and dissatisfaction.

Implementing high-precision speech SDKs helps keep transcription accuracy high, even in the presence of multiple noise sources. Modern noise-handling systems use adaptive algorithms that continuously analyze the audio and separate human speech from background sounds.

What Are The Core Technologies Behind Noise-Robust Voice Recognition?

Building a voice recognition SDK that handles noise with high precision requires a combination of advanced techniques. These technologies work together to ensure accurate and reliable performance:

Voice Activity Detection (VAD)

VAD identifies when speech occurs in an audio stream and distinguishes it from background noise. Modern AI-powered VADs, such as Quail VAD, offer:

- Real-time detection with minimal latency.

- Robust performance in multi-speaker and noisy scenarios.

- Ability to operate with any STT engine or AI agent.
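To make the idea concrete, here is a minimal energy-based VAD sketch. It is a simple stand-in and not how a learned, AI-powered VAD works internally: it marks a frame as speech when its RMS level crosses a fixed threshold and uses a short "hangover" so brief pauses do not split an utterance. The frame length, threshold, and hangover values are assumptions chosen for illustration.

```python
import math
from typing import List, Sequence, Tuple


def frame_rms(frame: Sequence[float]) -> float:
    """Root-mean-square level of one frame of samples."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def detect_speech(samples: Sequence[float],
                  frame_len: int = 160,
                  threshold: float = 0.02,
                  hangover_frames: int = 3) -> List[Tuple[int, int]]:
    """Return (start_sample, end_sample) pairs for detected speech segments."""
    segments: List[Tuple[int, int]] = []
    start = None   # sample index where the current segment began
    quiet = 0      # consecutive quiet frames seen inside a segment
    n_frames = len(samples) // frame_len
    for k in range(n_frames):
        frame = samples[k * frame_len:(k + 1) * frame_len]
        if frame_rms(frame) >= threshold:
            if start is None:
                start = k * frame_len
            quiet = 0
        elif start is not None:
            quiet += 1
            if quiet > hangover_frames:
                # The segment ended where the quiet run began.
                segments.append((start, (k - quiet + 1) * frame_len))
                start, quiet = None, 0
    if start is not None:
        # Audio ended mid-segment; trim any trailing quiet frames.
        segments.append((start, (n_frames - quiet) * frame_len))
    return segments


audio = [0.0] * 320 + [0.5] * 480 + [0.0] * 800
print(detect_speech(audio))  # [(320, 800)]
```

A fixed threshold like this breaks down when the noise floor changes, which is the gap that adaptive and model-based VADs are meant to close.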

Real-Time Audio Enhancement

Noise-robust systems use real-time audio enhancement techniques to clean the signal before transcription:

- Spectral subtraction: Estimates the spectrum of steady background noise and subtracts it from the signal.

- Neural denoising networks: Adaptively remove unpredictable noise.

- Echo cancellation: Removes far-end audio that the microphone picks up from the speaker, so echo and feedback do not distort recognition.

These methods significantly improve the clarity of speech signals for downstream processing.
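The sketch below illustrates spectral subtraction on a single frame, under simplifying assumptions: the noise magnitude spectrum is estimated from one noise-only frame, a naive O(n²) DFT replaces a real FFT, and there is no windowing or overlap-add. The helper names and the `floor` parameter are illustrative, not part of any library.

```python
import cmath
import math
from typing import List, Sequence


def dft(x: Sequence[float]) -> List[complex]:
    """Naive discrete Fourier transform (fine for short demo frames)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spec: Sequence[complex]) -> List[float]:
    """Inverse DFT, returning the real part of each sample."""
    n = len(spec)
    return [(sum(spec[k] * cmath.exp(2j * math.pi * k * t / n)
                 for k in range(n)) / n).real
            for t in range(n)]


def noise_magnitude(noise_frame: Sequence[float]) -> List[float]:
    """Per-bin magnitude estimate taken from a noise-only frame."""
    return [abs(v) for v in dft(noise_frame)]


def spectral_subtract(frame: Sequence[float],
                      noise_mag: Sequence[float],
                      floor: float = 0.05) -> List[float]:
    """Subtract the noise magnitude per bin, keeping the noisy phase."""
    cleaned = []
    for value, noise in zip(dft(frame), noise_mag):
        mag = abs(value)
        # The spectral floor avoids negative magnitudes after subtraction.
        new_mag = max(mag - noise, floor * mag)
        cleaned.append(cmath.rect(new_mag, cmath.phase(value)))
    return idft(cleaned)


n = 64
tone = [math.sin(2 * math.pi * 4 * t / n) for t in range(n)]       # "speech"
hum = [0.3 * math.sin(2 * math.pi * 10 * t / n) for t in range(n)]  # steady noise
noisy = [a + b for a, b in zip(tone, hum)]
cleaned = spectral_subtract(noisy, noise_magnitude(hum))
```

This only works well when the noise is stationary; for unpredictable noise, the neural denoising approach listed above is the usual choice.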

Low-Latency Streaming Pipelines

Maintaining real-time communication is critical for interactive voice agents. Streaming pipelines are optimized to:

- Deliver audio to STT engines and LLMs with minimal delay.

- Handle large-scale simultaneous calls without degradation.

- Support WebRTC or custom streaming protocols for smooth transmission.

Robust STT Models

STT engines designed for noisy environments include:

- Noise-adaptive training: Models trained on datasets with multiple noise types.

- Domain-specific adaptation: Custom fine-tuning for verticals like finance, healthcare, or logistics.

- Feedback loops: Continuous improvement based on real usage patterns.

Table: Noise-Handling Technologies vs. Benefits

| Technology | Key Benefit |
| --- | --- |
| Voice Activity Detection | Accurate detection of speech segments |
| Neural Denoising | Removes unpredictable noise in real-time |
| Echo Cancellation | Eliminates feedback and reverberation |
| Low-Latency Streaming | Real-time processing with minimal delay |
| Noise-Adaptive STT Models | High transcription accuracy in noisy settings |

Metrics to Track

For high-precision speech recognition, monitoring is essential:

- WER (Word Error Rate): Measures transcription accuracy.

- Latency: Should ideally be <150ms for real-time interactions.

- SNR (Signal-to-Noise Ratio): Higher values indicate better separation of speech from noise.

- Turn-taking Accuracy: Ensures the agent responds only after speech input is complete.
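One way to monitor the latency target is to summarize per-turn response times against a budget. The sketch below assumes latencies are already collected in milliseconds; `latency_report` and its 150 ms default are illustrative names and values, not part of a specific monitoring tool.

```python
import statistics
from typing import Dict, Sequence


def latency_report(latencies_ms: Sequence[float],
                   budget_ms: float = 150.0) -> Dict[str, float]:
    """Summarize response latencies against a real-time budget."""
    if len(latencies_ms) < 2:
        raise ValueError("need at least two latency samples")
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    p95 = statistics.quantiles(latencies_ms, n=100, method="inclusive")[94]
    over = sum(1 for v in latencies_ms if v > budget_ms) / len(latencies_ms)
    return {
        "p50": statistics.median(latencies_ms),
        "p95": p95,
        "over_budget": over,  # fraction of turns slower than the budget
    }


report = latency_report([100.0, 120.0, 130.0, 140.0, 400.0])
print(report)
```

Tracking a high percentile alongside the median matters because a few slow turns can break conversational flow even when the typical turn is fast.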

How Can Developers Build Noise-Resilient Voice Agents?

Designing a noise-resilient voice agent involves integrating multiple components into a unified architecture. A typical pipeline includes:

- Voice Input Capture: High-quality microphone or streaming input.

- Noise-Robust VAD: Detects when speech starts and ends.

- STT Engine: Converts speech to text, tuned for noisy environments.

- LLM or AI Agent: Processes text and determines appropriate response.

- TTS Engine: Converts AI response back to speech.

- Playback: Streams the output audio back to the user.

- Optional Layers: RAG (retrieval-augmented generation) or tool-calling for advanced workflows.
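The pipeline stages above can be sketched as a set of swappable callables. This is a structural illustration only: `VoicePipeline` and its fields are hypothetical names, and the lambdas are stubs standing in for real VAD, STT, LLM, and TTS components.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class VoicePipeline:
    """Each stage is a plain callable so any engine can be plugged in."""
    vad: Callable[[bytes], bool]   # True when the chunk contains speech
    stt: Callable[[bytes], str]    # audio -> transcript
    agent: Callable[[str], str]    # transcript -> reply text (LLM or agent)
    tts: Callable[[str], bytes]    # reply text -> audio for playback

    def handle_chunk(self, audio: bytes) -> Optional[bytes]:
        """Run one audio chunk through the pipeline; None means no reply."""
        if not self.vad(audio):
            return None            # skip silence and background noise
        text = self.stt(audio)
        if not text.strip():
            return None            # nothing intelligible was transcribed
        return self.tts(self.agent(text))


# Stub stages for demonstration; real deployments would wrap actual engines.
pipeline = VoicePipeline(
    vad=lambda audio: len(audio) > 0,
    stt=lambda audio: audio.decode("utf-8"),
    agent=lambda text: "You said: " + text,
    tts=lambda text: text.encode("utf-8"),
)
print(pipeline.handle_chunk(b"check my order"))  # b'You said: check my order'
```

Keeping each stage behind a simple function signature is what makes the pipeline model-agnostic: swapping an STT engine means replacing one callable.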

Developer Considerations:

- Use model-agnostic SDKs to plug in any LLM or TTS/STT combination.

- Optimize for low CPU and memory overhead.

- Ensure stable, low-latency streaming to maintain natural conversation.

- Implement metrics monitoring for continuous improvement.

Key Benefits:

- Enables AI agents to work reliably in noisy offices, public spaces, or remote environments.

- Provides flexibility to choose the best STT, TTS, and AI models.

- Reduces miscommunication, latency, and dropouts during live interactions.

Why Is FreJun Teler Ideal For Noise-Handling Voice Agents?

When building AI-first voice agents, the voice infrastructure layer plays a critical role. This is where FreJun Teler comes in. Teler acts as a real-time transport layer that ensures high-quality audio delivery, even in challenging environments.

Technical Advantages of Teler:

- Low-Latency Media Streaming: Every word reaches the AI agent in milliseconds.

- Noise-Resilient Capture: Maintains audio clarity even with overlapping speech or background sounds.

- Full Context Retention: Keeps conversational state intact for LLMs or AI agents.

- Flexible Integration: Works with any LLM, STT, or TTS without locking the developer into a specific AI stack.

Unlike traditional telephony platforms like Twilio or basic call SDKs, Teler is designed specifically for AI-driven voice agents, not just call routing. Its developer-first SDKs allow rapid deployment of voice agents capable of handling high-noise conditions, making it the backbone for real-world conversational AI applications.

Best Practices For Implementing Noise-Robust Voice Agents

Building a voice agent that handles noise with high precision requires more than just selecting the right SDK. Developers and engineering teams must focus on optimizing the pipeline, selecting the right models, and continuously monitoring performance.

How Can Developers Ensure Maximum Accuracy?

- Tune Voice Activity Detection (VAD) Parameters

  - Adjust thresholds based on the environment: quiet office vs. busy call center.

  - Monitor false positives and false negatives to maintain correct speech segmentation.

  - Use adaptive VAD that changes sensitivity in real time depending on background noise.

- Select Appropriate STT Models

  - Use noise-trained ASR models capable of handling multiple types of interference.

  - Prefer domain-adapted models for specific industries (finance, healthcare, logistics).

  - Implement continuous learning loops to retrain the model on real-world user audio.

- Optimize Streaming Pipelines

  - Choose protocols that minimize latency while maintaining audio fidelity.

  - Use real-time media streaming to reduce delays between speech input and AI response.

  - Implement packet loss recovery and jitter buffering to maintain a consistent audio stream.

- Integrate RAG and Tool-Calling Thoughtfully

  - For LLM-based agents, retrieval-augmented generation (RAG) ensures accurate answers even in complex queries.

  - Tool-calling frameworks should have retry logic to avoid failures when noise causes transcription errors.

- Monitor Key Metrics Continuously

  - WER (Word Error Rate): Lower is better; track changes as noise patterns evolve.

  - Latency: Aim for sub-150ms response times for real-time conversational flow.

  - Turn-Taking Accuracy: Ensure AI only responds after detecting the end of a user’s input.

  - Signal-to-Noise Ratio (SNR): Regularly evaluate audio quality to detect degradation.

By implementing these strategies, development teams can maintain high-precision voice recognition even in challenging environments.
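As an illustration of the jitter buffering mentioned among the streaming practices, the sketch below reorders packets by sequence number and emits a placeholder when a packet is judged lost. It assumes RTP-style increasing sequence numbers without wraparound; the class name, the `depth` parameter, and the empty-bytes loss marker are all illustrative choices.

```python
import heapq
from typing import List, Optional, Tuple

LOST = b""  # marker telling the caller to conceal a missing frame


class JitterBuffer:
    """Reorders audio packets and signals gaps once enough later packets arrive."""

    def __init__(self, depth: int = 3) -> None:
        self.depth = depth                        # packets to hold before giving up on a gap
        self._heap: List[Tuple[int, bytes]] = []  # min-heap ordered by sequence number
        self._next_seq: Optional[int] = None      # next sequence number to play

    def push(self, seq: int, payload: bytes) -> None:
        if self._next_seq is not None and seq < self._next_seq:
            return  # arrived after its slot was already played
        heapq.heappush(self._heap, (seq, payload))

    def pop(self) -> Optional[bytes]:
        """Next frame in order, LOST for a missing frame, or None while waiting."""
        if self._next_seq is None:
            if len(self._heap) < self.depth:
                return None  # still priming the buffer
            self._next_seq = self._heap[0][0]
        # Discard duplicates of frames that were already played.
        while self._heap and self._heap[0][0] < self._next_seq:
            heapq.heappop(self._heap)
        if self._heap and self._heap[0][0] == self._next_seq:
            self._next_seq += 1
            return heapq.heappop(self._heap)[1]
        if len(self._heap) >= self.depth:
            self._next_seq += 1
            return LOST  # enough later packets arrived; treat this one as lost
        return None


buf = JitterBuffer(depth=2)
buf.push(1, b"a")
buf.push(3, b"c")   # packet 2 is delayed
first = buf.pop()   # b"a"
buf.push(2, b"b")   # late packet arrives in time
print(first, buf.pop(), buf.pop())  # b'a' b'b' b'c'
```

A deeper buffer tolerates more reordering but adds delay, so the depth is a direct trade-off against the latency target.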

Where Can High-Precision Voice Recognition Make The Biggest Impact?

The practical applications of noise-robust voice agents are vast. Businesses that rely on real-time communication or high-volume customer interactions benefit the most.

Key Use Cases:

- Intelligent Receptionists and Front Desk Assistants

  - Automated AI agents answer calls, schedule appointments, and route queries.

  - Capable of understanding multiple speakers and background noise.

- Outbound Campaigns With Personalization

  - High-precision voice agents deliver reminders, confirmations, and notifications with human-like intonation.

  - Noise-resilient recognition ensures messages are transcribed and responded to accurately.

- Customer Support in Noisy Call Centers

  - Agents handle simultaneous calls in high-traffic environments.

  - Accurate transcription ensures seamless handoff between AI and human agents.

- Voice Interfaces in Vehicles and Public Spaces

  - Voice assistants in cars must operate amid engine noise, traffic, or passenger chatter.

  - Factory or warehouse assistants can process spoken commands despite machinery or background alarms.

- Healthcare and Telemedicine Applications

  - Doctors and patients communicate without disruptions, even in hospitals with constant ambient noise.

  - Transcriptions maintain accuracy for records, prescriptions, and guidance.

These use cases highlight the real-world value of implementing a noise-resilient voice agent. Companies that invest in high-quality voice recognition SDKs can significantly enhance operational efficiency and customer satisfaction.

How Can Engineering Teams Achieve High Precision in Production?

Achieving reliable results in production requires careful system design, monitoring, and iterative improvements.

Step-by-Step Recommendations:

- Design a Modular Pipeline

  - Keep VAD, STT, LLM, TTS, and RAG/tool-calling layers decoupled.

  - Modular design allows swapping or upgrading components without system-wide downtime.

- Implement Continuous Noise Monitoring

  - Collect samples from real deployment environments.

  - Adjust denoising algorithms or retrain models based on observed patterns.

- Prioritize Low-Latency Architecture

  - Stream audio in small chunks to reduce delay.

  - Use WebRTC or similar protocols for real-time audio transport.

- Integrate Error Handling and Retry Logic

  - Automatically retry failed API calls or mis-transcriptions.

  - Implement fallback logic for critical workflows.

- Optimize for Scalability

  - Design infrastructure to handle thousands of simultaneous calls.

  - Use geographically distributed servers to reduce latency for global deployments.

By following these steps, engineering teams can deploy AI-first voice agents capable of consistent, accurate performance, even in challenging noise conditions.
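The retry and fallback recommendation can be sketched as a small wrapper with exponential backoff. This is a generic pattern under stated assumptions, not any vendor's API: `call_with_retry`, its defaults, and the stub functions are hypothetical, and real code would catch narrower exception types than `Exception`.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retry(primary: Callable[[], T],
                    fallback: Callable[[], T],
                    attempts: int = 3,
                    base_delay_s: float = 0.1,
                    sleep: Callable[[float], None] = time.sleep) -> T:
    """Try `primary` up to `attempts` times, then use `fallback`."""
    for attempt in range(attempts):
        try:
            return primary()
        except Exception:  # illustrative; narrow this in real code
            if attempt < attempts - 1:
                # Exponential backoff: 0.1 s, 0.2 s, 0.4 s, ...
                sleep(base_delay_s * (2 ** attempt))
    return fallback()


calls = {"count": 0}


def flaky_transcribe() -> str:
    """Stub that fails twice before succeeding, like a transient API error."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary failure")
    return "check my order"


result = call_with_retry(flaky_transcribe,
                         lambda: "Sorry, could you repeat that?",
                         sleep=lambda seconds: None)  # skip real waiting in the demo
print(result)  # check my order
```

For a live conversation, the total retry time has to stay within the latency budget, so a short backoff and a spoken fallback prompt are usually preferable to many retries.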

What Advanced Strategies Enhance Noise-Robust Recognition?

For founders and product managers aiming for enterprise-grade voice agents, several advanced strategies provide additional reliability and precision:

1. Multi-Microphone Beamforming

- Combines input from multiple microphones to isolate the speaker’s voice.

- Reduces background noise while improving SNR.

2. Neural Audio Enhancement Models

- Deep learning models remove background noise and enhance speech clarity.

- Can operate in real time with minimal computational overhead.

3. Adaptive Voice Activity Detection

- Continuously adjusts VAD thresholds based on detected environment.

- Prevents false triggers in changing noise conditions.

4. Context-Aware AI Processing

- LLMs can leverage previous conversation history to correct misheard commands.

- RAG integration allows cross-checking noisy inputs against structured knowledge sources.

5. Dynamic STT Model Switching

- Use lightweight STT for low-noise settings and switch to noise-trained models in high-interference environments.

- Reduces latency while maintaining transcription accuracy.

These strategies ensure that voice agents operate reliably across multiple environments, maintaining high transcription accuracy, natural interaction flow, and AI context understanding.
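Dynamic STT model switching, for instance, can be driven by an estimated SNR. The sketch below uses two thresholds (hysteresis) so the selection does not flip back and forth when the SNR hovers near a single cutoff. The model labels and the 10 dB and 15 dB thresholds are assumptions for illustration only.

```python
from dataclasses import dataclass


@dataclass
class ModelSelector:
    """Chooses between a lightweight and a noise-robust STT model by SNR."""
    switch_to_robust_below_db: float = 10.0  # assumed threshold
    switch_to_light_above_db: float = 15.0   # assumed threshold
    current: str = "lightweight"

    def update(self, snr_db: float) -> str:
        """Feed the latest SNR estimate and get the model to use."""
        if self.current == "lightweight" and snr_db < self.switch_to_robust_below_db:
            self.current = "noise_robust"
        elif self.current == "noise_robust" and snr_db > self.switch_to_light_above_db:
            self.current = "lightweight"
        return self.current


selector = ModelSelector()
for snr in (20.0, 9.0, 12.0, 16.0):
    print(snr, selector.update(snr))
# 20.0 lightweight
# 9.0 noise_robust
# 12.0 noise_robust   (stays robust inside the hysteresis band)
# 16.0 lightweight
```

The gap between the two thresholds is the design choice that matters: a wider band means fewer switches but slower recovery to the faster model.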

What Future Trends Will Shape Noise-Resilient Voice Agents?

Voice AI is evolving rapidly, and the next generation of high-precision voice agents will leverage several trends:

- Self-Learning Noise Adaptation

  - STT models automatically learn from mis-transcriptions in noisy environments.

  - Continuous improvement reduces manual intervention.

- Multi-Modal Interaction

  - Voice combined with text, gestures, or visual context improves comprehension.

  - Helps disambiguate commands in noisy settings.

- Emotion and Intent Recognition

  - Detect user frustration or urgency even if audio quality is compromised.

  - Allows AI agents to adjust responses dynamically.

- AI-First Voice Platforms

  - Platforms like Teler provide real-time, low-latency, noise-resilient transport.

  - Ensures high-fidelity audio capture for LLMs, STT, and TTS integration.

  - Acts as a scalable backbone for building enterprise-grade, multi-use voice agents.

By aligning product roadmaps with these trends, companies can deliver future-proof voice solutions that perform reliably in real-world conditions.

How Can You Get Started With a Noise-Robust Voice Recognition SDK?

Implementing a high-precision voice agent can seem complex, but following a structured approach makes adoption straightforward:

- Choose a Noise-Resilient SDK

  - Ensure it supports VAD, STT, TTS, and real-time streaming.

  - Prefer model-agnostic SDKs compatible with any LLM.

- Integrate Into Your AI Agent Architecture

  - Pipeline: Voice Input → Noise-Robust VAD → STT → LLM → TTS → Playback

  - Optional: RAG and tool-calling layers for advanced workflows.

- Test Across Multiple Environments

  - Validate performance in quiet offices, call centers, public spaces, and noisy industrial areas.

  - Monitor WER, latency, SNR, and turn-taking accuracy.

- Leverage Analytics and Continuous Improvement

  - Use metrics to identify gaps in transcription accuracy.

  - Retrain models and adjust thresholds based on real-world usage.

- Deploy at Scale

  - Use cloud infrastructure to handle multiple simultaneous voice agents.

  - Ensure redundancy and geographically distributed servers for global reach.

By following these steps, engineering teams and product leaders can implement a robust, high-precision voice agent capable of operating in noisy environments, delivering consistent results and a natural user experience.
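For the multi-environment testing step, one common approach is to mix recorded noise into clean speech at a controlled SNR, so the same utterances can be evaluated at several noise levels. The sketch below shows the scaling arithmetic on plain lists of samples; `mix_at_snr` and `power` are illustrative helper names, and the synthetic signals stand in for real recordings.

```python
import math
from typing import List, Sequence


def power(samples: Sequence[float]) -> float:
    """Average power (mean of squared samples)."""
    return sum(s * s for s in samples) / len(samples)


def mix_at_snr(speech: Sequence[float],
               noise: Sequence[float],
               target_snr_db: float) -> List[float]:
    """Scale `noise` so speech-to-noise power matches the target, then add it."""
    if len(noise) < len(speech):
        raise ValueError("noise must be at least as long as speech")
    noise = noise[:len(speech)]
    p_speech, p_noise = power(speech), power(noise)
    if p_speech <= 0 or p_noise <= 0:
        raise ValueError("speech and noise must both be non-silent")
    # Solve 10*log10(p_speech / (gain^2 * p_noise)) = target_snr_db for gain.
    gain = math.sqrt(p_speech / (p_noise * 10 ** (target_snr_db / 10)))
    return [s + gain * n for s, n in zip(speech, noise)]


# Synthetic stand-ins for a clean utterance and a noise recording.
speech = [math.sin(0.1 * t) for t in range(1000)]
noise = [((t * 37) % 11 - 5) / 5 for t in range(1000)]

for snr in (20.0, 10.0, 0.0):
    mixed = mix_at_snr(speech, noise, snr)
    residual = [m - s for m, s in zip(mixed, speech)]
    measured = 10 * math.log10(power(speech) / power(residual))
    print(snr, round(measured, 2))
```

Running the same test set at several SNR levels and recording WER at each one gives a simple degradation curve to compare SDKs or model versions.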

Conclusion

In a world where voice agents interact across diverse, noisy environments, high‑precision speech recognition is no longer optional – it’s essential. Throughout this article, we examined how noise impacts accuracy, core technologies that enable reliable voice recognition, and best practices for building noise‑resilient AI agents. Engineering leaders and product teams must prioritize noise‑aware detectors, adaptive STT models, and low‑latency streaming to ensure dependable performance.

If your product requires real‑world conversational accuracy, FreJun Teler provides the transport and voice infrastructure layer built for real‑time noise‑robust interactions. With flexible SDKs, seamless integration with any LLM/STT/TTS stack, and optimized media streaming, Teler accelerates deployment while enhancing audio clarity.

Schedule a demo with FreJun Teler today and experience how your AI agents can achieve high-precision speech recognition, even in the noisiest environments.

FAQs

- What is a voice recognition SDK?

A voice recognition SDK provides tools for speech capture, analysis, and transcription, enabling developers to build real‑time, noise‑aware voice systems.

- Why is noise reduction STT important?

Noise reduction STT ensures accurate transcription in real environments, minimizing errors and improving user interactions across diverse audio conditions.

- How does VAD help noisy environments?

Voice Activity Detection identifies speech segments within noise, improving segment timing and bolstering downstream STT accuracy.

- Can any LLM integrate with noise‑robust SDKs?

Yes – model‑agnostic voice SDKs allow you to connect any LLM, supporting flexible choices for AI and dialogue logic.

- What metrics define STT quality?

Key metrics include Word Error Rate (WER), latency, signal‑to‑noise ratio (SNR), and conversational turn accuracy.

- Do noise‑aware models require retraining?

Yes – continuous real‑world data improves noise adaptation by retraining models to handle evolving environmental interference.

- What is clean audio AI?

Clean audio AI refers to systems that enhance or isolate speech signals, reducing noise for better recognition and interaction.

- How does streaming impact voice agents?

Low‑latency streaming delivers audio quickly to processing layers, preserving natural flow and responsiveness in real‑time conversations.

- Can RAG improve noisy recognition?

Yes – Retrieval‑Augmented Generation supports contextual recall, helping AI agents answer accurately despite noisy or incomplete input.

- What makes a high-precision speech SDK?

A high-precision speech SDK combines adaptive STT, robust VAD, enhancement models, and low‑latency streaming to excel in noisy conditions.