Voice is becoming the most natural interface between humans and software. However, building voice applications that feel fast, responsive, and human is still technically hard. While chat interfaces can tolerate delays, voice cannot. Even small pauses break trust and flow.

Because of this, developers building AI voice agents, real-time calling apps, or conversational systems increasingly rely on a voice API. But why exactly is a voice API essential? More importantly, why does low latency sit at the center of every successful voice experience?

This blog answers both questions step by step, from fundamentals to architecture, with a strong focus on real-time audio, streaming voice infrastructure, and developer-first design.

What Is A Voice API And Why Should Developers Care?

A voice API allows developers to programmatically send, receive, and control voice calls using software. Instead of managing telecom hardware, signaling protocols, and carrier contracts, developers interact with simple APIs and SDKs.

However, a modern voice API for developers is not just about placing calls. It is about handling real-time audio streams, routing them correctly, and making them usable by applications like AI agents.

At a high level, a voice API helps developers:

- Connect applications to PSTN, SIP, and VoIP networks

- Capture inbound and outbound audio streams

- Control call logic using code instead of switches

- Integrate speech systems like STT, TTS, and AI models

Because of this abstraction, teams can focus on building product logic rather than telecom plumbing. As a result, development speed improves, reliability increases, and scaling becomes easier.

With more than 62% of U.S. adults regularly using voice assistants and over 20% of global internet users engaging via voice search, the adoption of voice interfaces continues to climb – making voice APIs indispensable for real-time applications.

However, while basic voice APIs enable calling, low-latency voice APIs enable conversations. This distinction is critical.

Why Is Low Latency Crucial For Voice Applications?

Latency is the delay between a person finishing speaking and hearing the response. In voice applications, that round trip includes:

- Audio capture delay

- Network transmission time

- Speech recognition processing

- AI inference time

- Text-to-speech generation

- Audio playback buffering

Individually, these delays may look small. However, when combined, they define whether a voice app feels natural or broken.
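To make the point about combined delays concrete, here is a minimal sketch of a latency budget. The per-stage numbers are purely illustrative assumptions, not measurements from any real system, and the function names are hypothetical.

```python
# Hypothetical per-stage delays in milliseconds. These values are
# illustrative assumptions only, not measurements.
STAGE_DELAYS_MS = {
    "audio_capture": 20,
    "network_transmission": 60,
    "speech_recognition": 150,
    "ai_inference": 300,
    "text_to_speech": 120,
    "playback_buffering": 40,
}


def total_latency_ms(stages: dict[str, int]) -> int:
    """Add up every stage to get the end-to-end delay the caller hears."""
    return sum(stages.values())


def slowest_stage(stages: dict[str, int]) -> str:
    """Return the name of the stage that contributes the most delay."""
    return max(stages, key=stages.get)


if __name__ == "__main__":
    print(total_latency_ms(STAGE_DELAYS_MS))  # 690
    print(slowest_stage(STAGE_DELAYS_MS))     # ai_inference
```

With these assumed values, no single stage exceeds 300 ms, yet the total reaches 690 ms. That is why the whole pipeline has to be budgeted, not just one layer.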

Human Expectations In Voice Interactions

Humans are highly sensitive to timing in conversations. Even small delays change how responses are perceived.

| Latency Range | User Perception |
| --- | --- |
| <100 ms | Feels instant |
| 100–250 ms | Feels conversational |
| 250–400 ms | Feels slightly delayed |
| >400 ms | Feels unnatural |

Because of this, low latency is not just a performance metric; it is a user experience requirement.

Therefore, any real-time audio API that powers voice apps must minimize latency across the entire pipeline, not just the network layer.
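The perception bands in the table above can be encoded as a small helper, for example to label measured response times in a dashboard. This is a sketch only: the function name is hypothetical, and because the table does not say which band the exact boundaries (250 ms, 400 ms) belong to, the code assumes they fall into the lower band.

```python
def perceived_quality(latency_ms: float) -> str:
    """Map an end-to-end latency to the perception bands from the table.

    Assumption: boundary values (exactly 250 or 400 ms) count toward
    the faster band, since the table leaves this open.
    """
    if latency_ms < 0:
        raise ValueError("latency cannot be negative")
    if latency_ms < 100:
        return "Feels instant"
    if latency_ms <= 250:
        return "Feels conversational"
    if latency_ms <= 400:
        return "Feels slightly delayed"
    return "Feels unnatural"


if __name__ == "__main__":
    for sample in (80, 180, 300, 690):
        print(sample, "ms ->", perceived_quality(sample))
```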

How Do Real-Time Voice Apps Actually Work?

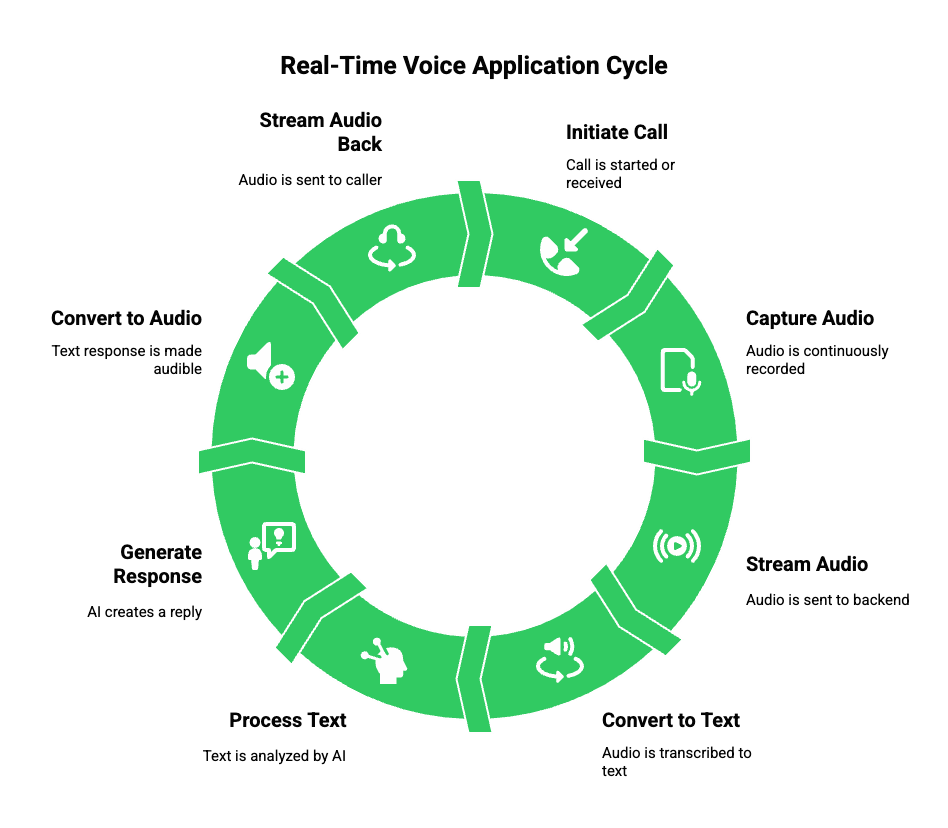

To understand why a low latency voice API is essential, it helps to look at how modern voice applications are built.

Most real-time voice apps follow this flow:

- A call is initiated or received

- Audio is captured continuously

- Audio is streamed to an application backend

- Speech-to-text converts audio into text

- An AI model processes the text

- A response is generated

- Text-to-speech converts the response to audio

- Audio is streamed back to the caller

This loop repeats continuously during the call.

Because of this structure, voice agents are often described as:

Voice Agent = STT + LLM + TTS + Context + Tool Calling

However, this pipeline only works when audio is streamed in real time. If audio is buffered until the speaker finishes, or processed in large chunks, latency increases rapidly.

Therefore, streaming voice infrastructure becomes the foundation of every real-time voice application.
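The flow described above can be sketched with plain Python generators. Everything here is a stand-in: the "frames" are words rather than audio, and `streaming_stt`, `respond`, and `synthesize` are hypothetical placeholders for real STT, LLM, and TTS services. The point is only the shape of the loop, where partial transcripts become available while audio is still arriving.

```python
from typing import Iterable, Iterator


def capture_frames(words: list[str]) -> Iterator[str]:
    """Stand-in for continuous audio capture: one 'frame' per spoken word."""
    for word in words:
        yield word


def streaming_stt(frames: Iterable[str]) -> Iterator[str]:
    """Stand-in STT: emit a growing partial transcript after every frame."""
    heard: list[str] = []
    for frame in frames:
        heard.append(frame)
        yield " ".join(heard)


def respond(transcript: str) -> str:
    """Stand-in for the LLM step (hypothetical echo logic)."""
    return f"You said: {transcript}"


def synthesize(text: str) -> bytes:
    """Stand-in TTS: pretend the encoded text is audio."""
    return text.encode("utf-8")


def handle_turn(words: list[str]) -> tuple[list[str], bytes]:
    """Run one conversational turn and return (partial transcripts, reply audio)."""
    partials: list[str] = []
    for partial in streaming_stt(capture_frames(words)):
        partials.append(partial)  # available before the speaker finishes
    final_transcript = partials[-1] if partials else ""
    return partials, synthesize(respond(final_transcript))


if __name__ == "__main__":
    partials, audio = handle_turn(["book", "a", "table"])
    print(partials)
    print(audio)
```

In a real call this turn handler would run once per caller utterance, with conversation context carried between turns.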

What Makes Voice Latency Hard To Solve?

Voice latency is not caused by a single factor. Instead, it is the result of multiple technical layers working together.

1. Network Transport

Voice traffic must travel across networks reliably and quickly. Delays can come from:

- Physical distance between users and servers

- Poor routing between carriers

- Packet loss and retransmissions

Because of this, global voice apps need geographically distributed infrastructure.

2. Audio Encoding And Codecs

Raw audio is large. To reduce bandwidth, audio must be compressed using codecs.

However, some codecs prioritize quality over speed. Others are optimized for real-time use.

For low latency voice apps:

- Codecs like Opus are preferred

- Smaller frame sizes reduce delay

- Continuous streaming avoids buffering pauses

Codec choice directly affects perceived responsiveness.
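The effect of frame size can be worked out with simple arithmetic. The sketch below uses uncompressed 16-bit mono PCM as an assumption, because its sizes are easy to verify by hand. A codec such as Opus would produce far smaller payloads, but the trade-off is the same: a frame cannot be sent until it has been fully captured, so the frame duration is a floor on capture delay, while shorter frames mean more packets per second.

```python
def pcm_frame_bytes(
    sample_rate_hz: int,
    frame_ms: int,
    bytes_per_sample: int = 2,  # assumption: 16-bit samples
    channels: int = 1,          # assumption: mono telephony audio
) -> int:
    """Size of one uncompressed PCM frame in bytes."""
    samples = sample_rate_hz * frame_ms // 1000
    return samples * bytes_per_sample * channels


def frames_per_second(frame_ms: int) -> int:
    """How many frames (and so packets) are sent each second."""
    return 1000 // frame_ms


if __name__ == "__main__":
    for frame_ms in (10, 20, 60):
        print(
            f"{frame_ms} ms frame: "
            f"{pcm_frame_bytes(8000, frame_ms)} bytes at 8 kHz, "
            f"{frames_per_second(frame_ms)} packets/s"
        )
```

For example, a 20 ms frame at 8 kHz holds 160 samples, or 320 bytes, and requires 50 packets per second.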

3. Transport Protocols

Traditional HTTP is not designed for real-time audio. It introduces request-response delays and buffering.

Instead, low latency voice APIs rely on:

- WebRTC for real-time media streaming

- WebSockets for continuous audio data

- RTP-based pipelines for voice transport

These protocols allow audio to flow continuously instead of waiting for full payloads.
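To illustrate why RTP-style transport suits continuous audio, here is a minimal sketch that packs and unpacks the 12-byte fixed RTP header defined in RFC 3550. Each packet carries its own sequence number and timestamp, so a receiver can play frames as they arrive instead of waiting for a complete payload. This is an illustration of the header layout only. It ignores padding, extensions, and CSRC lists, and it is not a description of how any particular voice platform implements its transport.

```python
import struct

RTP_VERSION = 2
HEADER_FORMAT = "!BBHII"  # network byte order, 12 bytes in total


def pack_rtp_header(
    payload_type: int, sequence: int, timestamp: int, ssrc: int, marker: bool = False
) -> bytes:
    """Build a fixed RTP header with no padding, extension, or CSRCs."""
    byte0 = RTP_VERSION << 6
    byte1 = (int(marker) << 7) | (payload_type & 0x7F)
    return struct.pack(
        HEADER_FORMAT,
        byte0,
        byte1,
        sequence & 0xFFFF,
        timestamp & 0xFFFFFFFF,
        ssrc & 0xFFFFFFFF,
    )


def unpack_rtp_header(data: bytes) -> dict:
    """Read the fixed header fields back out of a packet."""
    byte0, byte1, sequence, timestamp, ssrc = struct.unpack(HEADER_FORMAT, data[:12])
    return {
        "version": byte0 >> 6,
        "marker": bool(byte1 >> 7),
        "payload_type": byte1 & 0x7F,
        "sequence": sequence,
        "timestamp": timestamp,
        "ssrc": ssrc,
    }


if __name__ == "__main__":
    # Payload type 0 is PCMU; 160 timestamp ticks is 20 ms at 8 kHz.
    header = pack_rtp_header(payload_type=0, sequence=1, timestamp=160, ssrc=0x1234)
    print(len(header), unpack_rtp_header(header))
```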

4. Streaming vs Batch Processing

Batch processing waits for full audio segments before processing. While this works for offline transcription, it fails for conversations.

In contrast, streaming systems:

- Send partial audio frames

- Produce partial transcriptions

- Trigger AI responses earlier

As a result, total response time drops significantly.
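A simple model shows where the saving comes from. Assume a processing step needs a fixed fraction of the audio's duration (a "real-time factor", here a hypothetical 0.2). In batch mode, all of that work starts after the caller stops speaking. In streaming mode, earlier frames were already handled while the caller spoke, so only the last frame remains. The model and its numbers are assumptions for illustration, not benchmarks.

```python
def batch_delay_ms(utterance_ms: float, real_time_factor: float) -> float:
    """Delay after speech ends when processing starts only once the
    whole utterance has been received."""
    return utterance_ms * real_time_factor


def streaming_delay_ms(frame_ms: float, real_time_factor: float) -> float:
    """Delay after speech ends when every earlier frame was processed
    while the caller was still speaking."""
    if real_time_factor >= 1:
        # Slower-than-real-time processing would fall further and further behind.
        raise ValueError("streaming requires a real-time factor below 1")
    return frame_ms * real_time_factor


if __name__ == "__main__":
    # Assumed: a 3-second utterance, 20 ms frames, real-time factor 0.2.
    print("batch:", batch_delay_ms(3000, 0.2), "ms")
    print("streaming:", streaming_delay_ms(20, 0.2), "ms")
```

Under these assumptions the batch path adds roughly 600 ms after the caller stops, while the streaming path adds roughly 4 ms for the same step.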

Why Voice APIs Are Different From General APIs

Many developers assume voice APIs behave like standard REST APIs. However, this assumption causes design issues.

Voice APIs must handle:

- Stateful, long-lived connections

- Continuous media streams

- Bi-directional communication

- Real-time error recovery

Because of this, voice APIs require specialized infrastructure.

| Feature | Standard API | Voice API |
| --- | --- | --- |
| Connection | Short-lived | Persistent |
| Data | Request/response | Continuous streams |
| Latency Sensitivity | Low | Extremely high |
| Error Handling | Retries | Live recovery |

Therefore, choosing the best voice API for developers in 2026 is less about endpoints and more about architecture.

Why Developers Cannot Build This Themselves Easily

At first glance, building a custom voice stack seems possible. However, in practice, it introduces long-term complexity.

Developers must manage:

- Carrier integrations and SIP routing

- Media servers and scaling logic

- Jitter buffering and echo control

- Failover handling

- Global latency optimization

Meanwhile, AI teams still need to focus on:

- LLM orchestration

- Prompt design

- Context handling

- Tool calling

Because of this, a low latency voice API acts as a force multiplier. It removes infrastructure burden while preserving full control over AI logic.
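To give a sense of just one item on the infrastructure list above, here is a deliberately simplified jitter buffer. It holds a few packets and releases them in sequence order so that packets arriving out of order are played correctly. The class name and the `depth` parameter are hypothetical, and a production buffer would also handle sequence-number wraparound, lost packets, and adaptive depth, none of which this sketch attempts.

```python
import heapq


class JitterBuffer:
    """Reorder packets by sequence number, holding up to `depth` of them."""

    def __init__(self, depth: int = 2) -> None:
        self.depth = depth
        self._heap: list[tuple[int, bytes]] = []

    def push(self, sequence: int, payload: bytes) -> list[tuple[int, bytes]]:
        """Add a packet; return any packets that are now ready for playback."""
        heapq.heappush(self._heap, (sequence, payload))
        released = []
        while len(self._heap) > self.depth:
            released.append(heapq.heappop(self._heap))  # lowest sequence first
        return released

    def flush(self) -> list[tuple[int, bytes]]:
        """Release everything still buffered, in order (e.g. at call end)."""
        return [heapq.heappop(self._heap) for _ in range(len(self._heap))]


if __name__ == "__main__":
    buffer = JitterBuffer(depth=2)
    played = []
    for seq in (1, 3, 2, 5, 4):  # packets arrive out of order
        played += [s for s, _ in buffer.push(seq, b"frame")]
    played += [s for s, _ in buffer.flush()]
    print(played)  # [1, 2, 3, 4, 5]
```

Note the trade-off: a deeper buffer tolerates more reordering but adds playback delay, which is exactly the kind of tuning a voice API takes off the team's plate.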

How This Sets The Stage For AI Voice Agents

So far, we have established that:

- Voice apps demand low latency

- Latency comes from many layers

- Streaming voice infrastructure is essential

- Voice APIs abstract telecom complexity

What Problem Does FreJun Teler Solve For Engineering Teams?

Most voice platforms were designed for calling first and automation later. As a result, they struggle when used for real-time AI conversations.

FreJun Teler takes a different approach.

Instead of centering the product around calls, Teler is built as a streaming voice infrastructure layer designed specifically for AI agents and LLM-driven applications.

At its core, Teler solves three hard problems at once:

- Reliable real-time audio streaming

- Low latency media transport across networks

- Clean integration with any AI or speech stack

Because of this, engineering teams can treat voice as a real-time data stream rather than a telecom feature.

How Does FreJun Teler Fit Into A Modern Voice Architecture?

A typical real-time AI voice setup using Teler looks like this:

Caller Audio

↓

FreJun Teler (Streaming Voice Layer)

↓

Streaming STT

↓

LLM / AI Agent + Context + Tools

↓

TTS Engine

↓

FreJun Teler (Playback Stream)

↓

Caller

Although this flow looks simple, each step requires tight coordination to keep latency low.

What Teler Handles

FreJun Teler is responsible for:

- Capturing inbound and outbound audio in real time

- Streaming audio with minimal buffering

- Maintaining stable, long-lived connections

- Handling call state and media transport

- Delivering audio back to users with minimal delay

As a result, developers do not have to manage SIP stacks, RTP streams, or carrier routing.

What Developers Control

At the same time, developers maintain full ownership of:

- STT provider selection

- LLM or AI agent logic

- Context management and memory

- Tool calling and backend workflows

- TTS provider and voice selection

This separation is critical. It ensures flexibility while removing infrastructure burden.

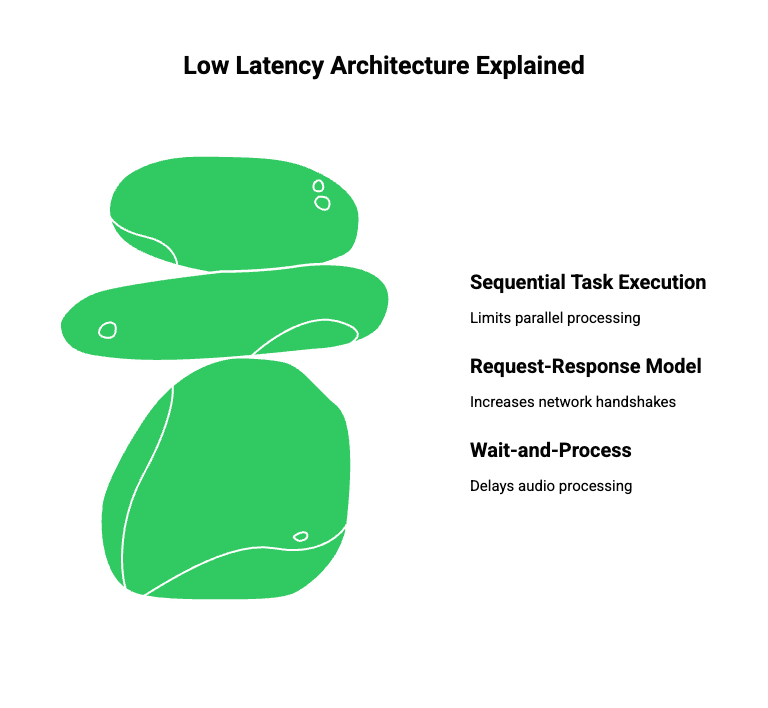

Why Is This Architecture Low Latency By Design?

Latency is reduced not by one feature, but by several architectural choices working together.

1. Continuous Audio Streaming

Teler streams audio as it is spoken, not after the speaker finishes. Because of this:

- STT can start processing immediately

- Partial transcripts can trigger early AI responses

- End-to-end delay drops significantly

This approach avoids the wait-and-process pattern seen in older systems.

2. Transport Optimized For Real Time

Instead of relying on request-response models, Teler uses persistent streaming connections. As a result:

- Network handshakes are minimized

- Audio packets flow continuously

- Jitter and packet loss are handled gracefully

This makes the platform suitable for real-time audio API use cases.

3. Parallel Processing Pipelines

While audio is still being captured, other parts of the system are already working.

For example:

- STT processes incoming frames

- The AI agent reasons over partial text

- TTS begins generating audio early

Because tasks run in parallel, perceived latency stays low.
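The idea of overlapping stages can be sketched with `asyncio` queues. This is a generic illustration, not Teler's implementation: the three stage functions are hypothetical stand-ins, frames are strings instead of audio, and the 10 ms sleep simulates waiting for the next frame to arrive.

```python
import asyncio


async def stt_stage(frames: list[str], out: asyncio.Queue, log: list[str]) -> None:
    """Stand-in STT: forward each frame as soon as it 'arrives'."""
    for frame in frames:
        await asyncio.sleep(0.01)  # simulated wait for the next audio frame
        log.append(f"stt:{frame}")
        await out.put(frame)
    await out.put(None)  # signal end of stream


async def agent_stage(inp: asyncio.Queue, out: asyncio.Queue, log: list[str]) -> None:
    """Stand-in agent: react to each partial text without waiting for the rest."""
    while (text := await inp.get()) is not None:
        log.append(f"agent:{text}")
        await out.put(text.upper())
    await out.put(None)


async def tts_stage(inp: asyncio.Queue, log: list[str]) -> list[bytes]:
    """Stand-in TTS: 'synthesize' each reply chunk as it becomes available."""
    audio = []
    while (text := await inp.get()) is not None:
        log.append(f"tts:{text}")
        audio.append(text.encode("utf-8"))
    return audio


async def run_pipeline(frames: list[str]) -> tuple[list[bytes], list[str]]:
    """Run all three stages concurrently, connected by queues."""
    log: list[str] = []
    to_agent: asyncio.Queue = asyncio.Queue()
    to_tts: asyncio.Queue = asyncio.Queue()
    _, _, audio = await asyncio.gather(
        stt_stage(frames, to_agent, log),
        agent_stage(to_agent, to_tts, log),
        tts_stage(to_tts, log),
    )
    return audio, log


if __name__ == "__main__":
    audio, log = asyncio.run(run_pipeline(["hi", "there"]))
    print(audio)
    print(log)
```

In the resulting log, the TTS entry for the first frame appears before the STT entry for the second frame, which is the overlap described above: downstream stages work while upstream audio is still arriving.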

How Does Teler Support Any LLM Or AI Agent?

One of the biggest challenges teams face is platform lock-in. Many voice solutions tie developers to specific AI models or speech providers.

FreJun Teler avoids this entirely.

Model-Agnostic By Design

Teler does not impose restrictions on:

- LLM providers

- Self-hosted models

- Fine-tuned internal agents

Whether teams use commercial APIs or private deployments, Teler works as the transport layer.
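On the application side, model-agnostic design usually means the backend codes against a small interface rather than a specific vendor. The sketch below uses `typing.Protocol` for that. All names (`ChatBackend`, `EchoBackend`, `CannedBackend`, `handle_transcript`) are hypothetical and are not part of any Teler SDK. A real backend would wrap a commercial API or a self-hosted model behind the same method.

```python
from typing import Protocol


class ChatBackend(Protocol):
    """Anything that can turn a transcript into a reply."""

    def reply(self, transcript: str) -> str: ...


class EchoBackend:
    """Hypothetical stand-in for a hosted LLM provider."""

    def reply(self, transcript: str) -> str:
        return f"You said: {transcript}"


class CannedBackend:
    """Hypothetical stand-in for a self-hosted or fine-tuned model."""

    def __init__(self, answer: str) -> None:
        self.answer = answer

    def reply(self, transcript: str) -> str:
        return self.answer


def handle_transcript(backend: ChatBackend, transcript: str) -> str:
    """The voice-facing code depends only on the interface, not the provider."""
    return backend.reply(transcript)


if __name__ == "__main__":
    for backend in (EchoBackend(), CannedBackend("One moment, please.")):
        print(handle_transcript(backend, "check my balance"))
```

Swapping providers then means passing a different object to `handle_transcript`, with no change to the audio transport.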

AI Agents As Stateful Systems

Modern voice agents are not simple question-answer bots. They require:

- Conversation memory

- Context tracking

- Tool execution

- Dynamic routing

Because Teler maintains a stable audio stream, backend systems can safely manage state without worrying about dropped sessions.
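As a rough sketch of what such backend state can look like, the class below keeps conversation memory, exposes a bounded context window for the model, and dispatches tool calls. The `CallSession` name, its fields, and the `lookup_order` tool are all hypothetical. Real agents typically persist this state and validate tool arguments.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class CallSession:
    """Per-call state held by the backend for the lifetime of the audio stream."""

    call_id: str
    history: list[tuple[str, str]] = field(default_factory=list)
    tools: dict[str, Callable[[str], str]] = field(default_factory=dict)

    def add_turn(self, role: str, text: str) -> None:
        """Conversation memory: record who said what."""
        self.history.append((role, text))

    def context_window(self, max_turns: int = 6) -> list[tuple[str, str]]:
        """Context tracking: the most recent turns to send to the model."""
        return self.history[-max_turns:]

    def run_tool(self, name: str, argument: str) -> str:
        """Tool execution: call a registered function and remember the result."""
        if name not in self.tools:
            raise KeyError(f"unknown tool: {name}")
        result = self.tools[name](argument)
        self.add_turn("tool", f"{name} -> {result}")
        return result


if __name__ == "__main__":
    session = CallSession(
        call_id="call-1",
        tools={"lookup_order": lambda order_id: f"order {order_id} shipped"},
    )
    session.add_turn("user", "Where is order 42?")
    print(session.run_tool("lookup_order", "42"))
    print(session.context_window())
```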

How Does This Compare To Traditional Voice Platforms?

Many existing voice platforms evolved from IVR and call-center tooling. While they work well for basic automation, they fall short for real-time AI conversations.

The difference becomes clear when compared side by side.

| Capability | Traditional Voice Platforms | FreJun Teler |
| --- | --- | --- |
| Audio Handling | Chunked or buffered | Continuous streaming |
| Latency Control | Limited | Core design goal |
| AI Integration | Add-on | Native |
| LLM Flexibility | Restricted | Fully agnostic |
| Developer Control | Partial | Full |

Because of this, teams building next-generation voice agents increasingly choose platforms designed for streaming first.

Why Is This The Best Voice API For Developers In 2026?

Voice applications are changing fast. Over the next few years, voice will shift from scripted flows to dynamic AI conversations.

To support this shift, the best voice API for developers in 2026 must meet several criteria:

- Real-time streaming by default

- Low and predictable latency

- Global infrastructure

- AI-first design

- Strong developer tooling

FreJun Teler aligns closely with these needs.

Instead of forcing AI into legacy telephony systems, it enables voice as a native interface for intelligent systems.

What Are Best Practices When Building With A Low Latency Voice API?

Even with the right platform, architecture decisions still matter. Therefore, teams should follow these best practices.

Design For Streaming From Day One

Avoid batch workflows. Always process audio as a stream.

Keep Pipelines Parallel

Do not wait for STT to complete before triggering AI logic.

Monitor Latency At Each Stage

Track:

- Network delay

- STT response time

- LLM inference time

- TTS generation speed
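One lightweight way to track these stages is a timing context manager wrapped around each step. The sketch below uses only the standard library. The `StageTimer` class is a hypothetical helper, and the `time.sleep` calls stand in for real STT and LLM work.

```python
import time
from contextlib import contextmanager


class StageTimer:
    """Accumulate wall-clock time per named pipeline stage."""

    def __init__(self) -> None:
        self.durations_ms: dict[str, float] = {}

    @contextmanager
    def stage(self, name: str):
        """Time the enclosed block and add it to the stage's running total."""
        start = time.perf_counter()
        try:
            yield
        finally:
            elapsed_ms = (time.perf_counter() - start) * 1000
            self.durations_ms[name] = self.durations_ms.get(name, 0.0) + elapsed_ms

    def slowest(self) -> str:
        """Name of the stage that has consumed the most time so far."""
        return max(self.durations_ms, key=self.durations_ms.get)


if __name__ == "__main__":
    timer = StageTimer()
    with timer.stage("stt"):
        time.sleep(0.01)  # placeholder for a real STT call
    with timer.stage("llm"):
        time.sleep(0.03)  # placeholder for a real LLM call
    print(timer.durations_ms)
    print("slowest stage:", timer.slowest())
```

Recording each stage separately makes it clear which one to optimize first, rather than guessing from a single end-to-end number.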

Plan For Scale Early

Use infrastructure that supports global routing and failover.

Separate Voice And Intelligence

Let the voice API handle transport. Let your backend handle intelligence.

Why A Voice API Is No Longer Optional For Voice Apps

At this point, the answer to the original question becomes clear.

A voice API for developers is essential because:

- Voice apps require real-time audio handling

- Low latency defines user experience

- Telecom infrastructure is complex

- AI agents need stable streaming connections

Without a purpose-built voice API, teams spend months solving infrastructure problems instead of shipping features.

Final Thoughts

Low latency is the defining factor of successful voice applications. Whether you are building AI voice agents, automated calling systems, or real-time conversational experiences, delays directly impact usability and trust. A modern voice API for developers removes telecom complexity while enabling real-time audio streaming, flexible AI integration, and scalable infrastructure. Instead of stitching together fragmented systems, teams can focus on intelligence, logic, and user experience.

FreJun Teler is purpose-built for this shift, providing streaming voice infrastructure designed for AI-first applications. If you are planning to deploy low latency voice apps or AI agents at scale, Teler helps you move faster, stay flexible, and maintain full technical control.

Schedule a Demo with FreJun Teler.

FAQs

1. What is a voice API for developers?

A voice API lets developers programmatically manage calls, audio streams, and real-time voice interactions using software instead of telecom hardware.

2. Why is low latency important in voice apps?

Low latency ensures conversations feel natural, responsive, and human, preventing awkward pauses that break user trust.

3. What is a low latency voice API?

It is a voice API optimized for real-time audio streaming with minimal delay across capture, processing, and playback.

4. How does real-time audio streaming work?

Audio is streamed continuously in small packets, allowing processing and responses before the speaker finishes talking.

5. Can I use any LLM with a voice API?

Often, yes. Voice APIs designed as transport layers remain agnostic to your choice of LLM or AI agent, although some platforms tie you to specific providers.

6. What makes voice APIs different from normal APIs?

Voice APIs manage persistent, stateful, real-time audio streams instead of short request-response interactions.

7. Do voice APIs support AI voice agents?

Yes. They enable AI agents by streaming audio to STT, LLMs, and TTS systems in real time.

8. Is building voice infrastructure in-house practical?

Usually no. Telecom routing, latency control, and global scaling add long-term complexity and cost.

9. What is streaming voice infrastructure?

It is infrastructure designed to handle continuous audio streams with low latency across networks and regions.

10. How does FreJun Teler help developers?

Teler provides real-time voice streaming, AI-agnostic integration, and scalable infrastructure for low-latency voice applications.