Building voice AI is no longer about adding speech to a chatbot. It requires careful system design that brings together telephony, real-time media streaming, and AI orchestration. As LLMs become more capable, the infrastructure supporting them must evolve as well. Programmable SIP plays a critical role in this transition by connecting AI systems to real phone networks in a reliable, low-latency way.

When voice is treated as infrastructure rather than a feature, teams gain the flexibility to build scalable, model-agnostic voice agents.

This shift is what enables production-ready voice automation, not just impressive demos.

Why Is Integrating LLMs With Voice And Telephony Still Hard?

Large Language Models are easy to demo in text. However, once you move them into real-world voice systems, complexity increases quickly. This is because voice is not just another input format. Instead, it is a real-time, stateful, and latency-sensitive interface.

Wider 5G and fixed-wireless deployments reduce transport latency and jitter in many regions, but application-level real-time guarantees still depend on careful infrastructure design.

To begin with, text-based systems work in request–response cycles. A user sends text, the model replies, and the interaction ends. Voice does not work this way. Voice conversations are continuous. People interrupt, pause, restart, and change context mid-sentence.

In addition, telephony introduces constraints that most AI developers never deal with:

- Strict latency budgets measured in milliseconds

- Continuous audio streams instead of discrete messages

- Network jitter, packet loss, and call drops

- Protocols like SIP and RTP that were never designed for LLMs

As a result, simply connecting an LLM to a speech-to-text and text-to-speech service does not create a reliable voice agent. Instead, developers need a system that can bridge AI reasoning with telephony-grade voice transport.

This is exactly where programmable SIP and proper voice infrastructure become critical.

What Does Programmable SIP Mean For AI And LLM Developers?

Before connecting LLMs to telephony, it is important to understand what SIP actually does. SIP, or Session Initiation Protocol, is responsible for setting up, managing, and terminating voice sessions. It does not carry audio itself. Instead, it coordinates how calls begin and end.

Audio is carried separately using RTP (Real-Time Transport Protocol). Together, SIP and RTP form the foundation of internet calling.
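To make the split concrete: a SIP INVITE typically carries an SDP body that says where the RTP audio should be sent. The sketch below parses a minimal SDP body to find that audio endpoint. The address, port, and the `parse_audio_endpoint` helper are illustrative assumptions, not part of any particular SIP library.

```python
from dataclasses import dataclass

# Illustrative SDP body of the kind carried inside a SIP INVITE.
# The address and port are made-up example values.
EXAMPLE_SDP = """v=0
o=alice 2890844526 2890844526 IN IP4 192.0.2.10
s=-
c=IN IP4 192.0.2.10
t=0 0
m=audio 49170 RTP/AVP 0
a=rtpmap:0 PCMU/8000
"""


@dataclass
class AudioEndpoint:
    address: str
    port: int
    payload_types: list


def parse_audio_endpoint(sdp: str) -> AudioEndpoint:
    """Extract where RTP audio should be sent from an SDP body (hypothetical helper)."""
    address = ""
    port = 0
    payload_types = []
    for line in sdp.splitlines():
        if line.startswith("c="):
            # c=<nettype> <addrtype> <connection-address>
            address = line[2:].split()[2]
        elif line.startswith("m=audio"):
            # m=<media> <port> <proto> <fmt> ...
            parts = line[2:].split()
            port = int(parts[1])
            payload_types = [int(p) for p in parts[3:]]
    if not address or not port:
        raise ValueError("SDP has no usable audio endpoint")
    return AudioEndpoint(address, port, payload_types)
```

SIP only negotiates this endpoint. The audio itself then flows over RTP to the address and port that were agreed.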

Traditionally, SIP was rigid and hardware-focused. However, modern programmable SIP changes this model completely.

Programmable SIP allows developers to:

- Control call flows using APIs

- Stream audio in real time

- Route calls dynamically

- Integrate external systems during live calls

As a result, SIP becomes more than a calling protocol. It becomes a voice transport layer for AI systems.

For AI teams, this matters because:

- LLMs cannot speak SIP

- Telephony networks cannot talk to LLMs

- A programmable layer is required between them

In other words, programmable SIP is what enables LLM SIP integration at scale.

How Do LLM-Powered Voice Agents Actually Work End To End?

To understand how to connect an LLM to telephony, you first need a clear mental model of the full voice agent pipeline.

At a high level, a production-grade voice agent looks like this:

- A user speaks on a phone call

- Audio is streamed in real time

- Speech-to-text converts audio to text

- The LLM processes the text

- Tools or RAG systems are invoked if needed

- The response is converted to speech

- Audio is streamed back to the caller

However, these steps do not run one after another in a strict sequence. They run continuously and concurrently for the whole call. That distinction is critical.

Core Components In A Voice Agent

| Component | Role In The System |
| --- | --- |
| SIP Infrastructure | Call setup, routing, and session control |
| Media Streaming | Real-time audio transport |
| STT | Converts speech into text |
| LLM | Reasoning, intent handling, response generation |
| Tool Calling | Fetches external data or triggers actions |
| RAG | Grounds responses using private knowledge |
| TTS | Converts text back to speech |

Because audio never stops flowing, the system must handle partial transcripts, intermediate responses, and interruptions.

This is why voice agents are not just “LLMs with audio.” They are distributed real-time systems.

Where Does SIP Infrastructure Sit In The LLM Voice Stack?

Many teams misunderstand where SIP fits in the architecture. SIP does not replace AI logic, and it should not be mixed with it either.

Instead, SIP infrastructure sits below the AI layer and above the network layer.

Its responsibilities include:

- Accepting inbound and outbound calls

- Maintaining session state

- Streaming audio reliably

- Handling retries and failures

- Managing call lifecycle events

Meanwhile, the LLM focuses on:

- Understanding intent

- Managing conversation logic

- Deciding what to say next

Because of this separation, a good design principle is simple:

LLMs should never manage telephony directly.

Instead, developers should treat SIP as a transport abstraction. This abstraction exposes clean audio streams and call events that AI systems can consume safely.

This approach is the foundation of any scalable SIP API for AI development.

What Architectural Patterns Are Used For LLM And SIP Integration?

Once the layers are clear, the next question is how to connect them. In practice, several patterns exist. However, not all of them work well for voice.

Pattern 1: Synchronous Request–Response (Not Recommended)

This approach sends full audio clips to STT, waits for transcription, sends text to the LLM, waits for a response, then plays audio back.

Why it fails:

- High latency

- No interruption handling

- Feels robotic

Pattern 2: Streaming Pipeline Architecture (Recommended)

In this model, audio is streamed continuously. Partial transcripts are processed, and responses can start before the user finishes speaking.

Why it works:

- Low latency

- Natural turn-taking

- Supports barge-in
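A minimal sketch of the streaming idea, assuming a stand-in audio source and a fake streaming STT stage (`audio_frames` and `partial_transcripts` are hypothetical names, and each "frame" is just a word so the example stays readable). The point is that downstream logic sees a growing transcript while the caller is still speaking.

```python
import asyncio
from typing import AsyncIterator


async def audio_frames(words: list) -> AsyncIterator[str]:
    """Stand-in for a live audio stream: yields one 'frame' per spoken word."""
    for word in words:
        await asyncio.sleep(0)  # yield control, as real network I/O would
        yield word


async def partial_transcripts(frames: AsyncIterator[str]) -> AsyncIterator[str]:
    """Hypothetical streaming STT: emits a growing transcript after each frame."""
    heard = []
    async for frame in frames:
        heard.append(frame)
        yield " ".join(heard)


async def run_pipeline(words: list) -> list:
    """Collect every partial so later stages can act before the utterance ends."""
    partials = []
    async for text in partial_transcripts(audio_frames(words)):
        partials.append(text)
    return partials


if __name__ == "__main__":
    for partial in asyncio.run(run_pipeline(["book", "a", "table"])):
        print(partial)
```

In a real system each partial would be forwarded to intent detection or the LLM instead of being collected in a list.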

Pattern 3: Event-Driven State Machines

Here, call events trigger state transitions. The LLM operates within defined conversational states.

Why teams use it:

- Predictable behavior

- Easier debugging

- Strong control for enterprise flows
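One way to picture this pattern is a small transition table. The states and event names below are assumptions chosen for illustration. They are not taken from any specific SIP API.

```python
from enum import Enum, auto


class CallState(Enum):
    RINGING = auto()
    LISTENING = auto()
    THINKING = auto()
    SPEAKING = auto()
    ENDED = auto()


# Allowed transitions, keyed by (current state, event name).
# Event names are hypothetical.
TRANSITIONS = {
    (CallState.RINGING, "answered"): CallState.LISTENING,
    (CallState.LISTENING, "utterance_complete"): CallState.THINKING,
    (CallState.THINKING, "response_ready"): CallState.SPEAKING,
    (CallState.SPEAKING, "playback_done"): CallState.LISTENING,
    (CallState.SPEAKING, "barge_in"): CallState.LISTENING,
}


def next_state(state: CallState, event: str) -> CallState:
    """Return the state after an event; unknown events leave the state unchanged."""
    if event == "hangup":
        return CallState.ENDED  # a hangup ends the call from any state
    return TRANSITIONS.get((state, event), state)
```

Because every transition is listed explicitly, unexpected events are ignored rather than pushing the call into an undefined state, which is where the predictability comes from.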

Pattern 4: Agent-Based Architecture With Tool Calling

In this pattern, the LLM acts as an agent that decides when to speak, listen, or call tools.

Why it matters:

- Supports complex workflows

- Works well with RAG

- Enables long-running conversations

Most production systems combine streaming pipelines with agent-based logic.
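For Pattern 4, the core of the agent loop is a dispatcher that turns a model decision into an action. The sketch below assumes the LLM returns a small dictionary with an `action` field. That decision format, the `register_tool` decorator, and the `lookup_order` tool are all hypothetical.

```python
from typing import Any, Callable

# Registry of callable tools, keyed by function name.
TOOLS: dict = {}


def register_tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    TOOLS[fn.__name__] = fn
    return fn


@register_tool
def lookup_order(order_id: str) -> dict:
    # Hypothetical business lookup; a real system would query a database or API.
    return {"order_id": order_id, "status": "shipped"}


def handle_decision(decision: dict) -> tuple:
    """Map a (stubbed) model decision to speak, tool_result, or listen."""
    action = decision.get("action")
    if action == "speak":
        return ("speak", decision["text"])
    if action == "call_tool":
        fn = TOOLS.get(decision["name"])
        if fn is None:
            # Unknown tool: fall back to a safe spoken reply.
            return ("speak", "Sorry, I can't help with that yet.")
        return ("tool_result", fn(**decision.get("args", {})))
    return ("listen", None)  # anything else means: keep listening
```

A tool result would normally be fed back to the model so it can phrase the spoken answer.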

What Technical Challenges Appear In Production Voice AI Systems?

Once teams move beyond demos, real problems surface. These issues are not theoretical. They appear quickly in live environments.

Latency Budgeting

Every millisecond counts. Typical targets include:

- STT: <200 ms

- LLM reasoning: <300 ms

- TTS startup: <150 ms

If one component slows down, the conversation feels broken.
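As a quick worked check, the targets above can be written down as a budget and compared against measurements. This sketch treats the targets as upper bounds and ignores network transit, which is an additional cost. The helper names and the sample measurements are made up for illustration.

```python
# Per-stage targets from the list above, in milliseconds.
BUDGET_MS = {"stt": 200, "llm": 300, "tts_startup": 150}


def sequential_total_ms(budget: dict = BUDGET_MS) -> int:
    """Worst case if the stages ran strictly one after another."""
    return sum(budget.values())


def stages_over_budget(measured_ms: dict, budget: dict = BUDGET_MS) -> dict:
    """Return how many milliseconds each slow stage exceeded its target by."""
    return {
        stage: measured_ms[stage] - limit
        for stage, limit in budget.items()
        if stage in measured_ms and measured_ms[stage] > limit
    }


if __name__ == "__main__":
    print(sequential_total_ms())  # 200 + 300 + 150 = 650
    print(stages_over_budget({"stt": 180, "llm": 420, "tts_startup": 150}))
```

Run back to back, the three targets add up to 650 ms. Streaming lets the stages overlap, so the delay a caller perceives can be lower than that sum.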

Interrupt Handling

Humans interrupt constantly. Voice agents must:

- Stop speaking immediately

- Discard partial responses

- Resume listening without resetting context
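The three requirements above can be sketched with `asyncio` task cancellation. Here playback is simulated with short sleeps, and barge-in is modeled as an `asyncio.Event` that some voice-activity detector would set. Both are assumptions for illustration. The function returns what was actually played, so the conversation history can record what the caller heard instead of being reset.

```python
import asyncio


async def play_chunks(chunks: list, played: list) -> None:
    """Stand-in for streaming TTS audio into the call, one chunk at a time."""
    for chunk in chunks:
        played.append(chunk)
        await asyncio.sleep(0.05)  # pretend each chunk takes 50 ms to play


async def speak_with_barge_in(chunks: list, barge_in: asyncio.Event) -> list:
    """Play chunks until finished or until barge_in is set; return what was played."""
    played = []
    playback = asyncio.create_task(play_chunks(chunks, played))
    interrupt = asyncio.create_task(barge_in.wait())
    await asyncio.wait({playback, interrupt}, return_when=asyncio.FIRST_COMPLETED)
    # Cancel whichever task is still pending: playback on barge-in,
    # or the watcher when playback finished normally.
    for task in (playback, interrupt):
        if not task.done():
            task.cancel()
    await asyncio.gather(playback, interrupt, return_exceptions=True)
    return played


async def demo() -> list:
    barge_in = asyncio.Event()
    # Simulate the caller starting to talk roughly 120 ms into playback.
    asyncio.get_running_loop().call_later(0.12, barge_in.set)
    words = ["Your", "order", "has", "shipped", "and", "will", "arrive"]
    return await speak_with_barge_in(words, barge_in)
```

The unplayed remainder of the response is simply dropped, which matches the "discard partial responses" requirement.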

Conversation State Management

Calls can drop. Networks fail. Systems must recover:

- Session state

- Conversation memory

- Tool execution results
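A minimal sketch of recoverable state, assuming the three items above are kept in one serializable record per call. The `CallSession` shape is hypothetical, and where the JSON is stored (a cache, a database) is left open.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class CallSession:
    call_id: str                                       # session state
    turns: list = field(default_factory=list)          # conversation memory
    tool_results: dict = field(default_factory=dict)   # completed tool calls by id

    def to_json(self) -> str:
        """Serialize the session so it can be stored outside the process."""
        return json.dumps(asdict(self))

    @classmethod
    def from_json(cls, raw: str) -> "CallSession":
        """Rebuild a session after a dropped call or a process restart."""
        return cls(**json.loads(raw))
```

Saving a snapshot after every turn and every tool call means a reconnecting caller can continue from the last completed step instead of starting over.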

Audio Quality And Noise

Real calls include:

- Background noise

- Accents

- Silence

- Crosstalk

This directly affects STT accuracy and LLM behavior.

Because of these challenges, infrastructure decisions matter as much as model choice.

How Can Developers Design Voice Agents That Feel Natural And Reliable?

Finally, good voice agents are not just technically correct. They also feel natural.

To achieve this, teams should focus on:

- Streaming TTS instead of full-sentence playback

- Short, incremental responses

- Clear pause handling

- Explicit confirmation for critical actions

- Deterministic fallback paths

In addition, observability is essential:

- Call transcripts

- Latency metrics

- Error logs

- Drop-off points

Without visibility, improving a voice system becomes guesswork.
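For latency metrics in particular, a small recorder is often enough to get started. The sketch below times each stage with a context manager and reports a nearest-rank percentile. `LatencyRecorder` and the stage names are assumptions, and a production system would more likely export these samples to a metrics backend.

```python
import math
import time
from collections import defaultdict
from contextlib import contextmanager


def percentile(samples: list, pct: int):
    """Nearest-rank percentile of a non-empty list of samples."""
    if not samples:
        raise ValueError("no samples recorded")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


class LatencyRecorder:
    def __init__(self):
        self.samples_ms = defaultdict(list)  # stage name -> list of durations

    @contextmanager
    def measure(self, stage: str):
        """Time the enclosed block and record its duration in milliseconds."""
        start = time.perf_counter()
        try:
            yield
        finally:
            self.samples_ms[stage].append((time.perf_counter() - start) * 1000)

    def p95(self, stage: str) -> float:
        return percentile(self.samples_ms[stage], 95)
```

Usage would look like `with recorder.measure("stt"): ...` around each stage, with `recorder.p95("stt")` checked against the latency budget.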

How Can Developers Implement LLM Voice Agents Using Programmable SIP?

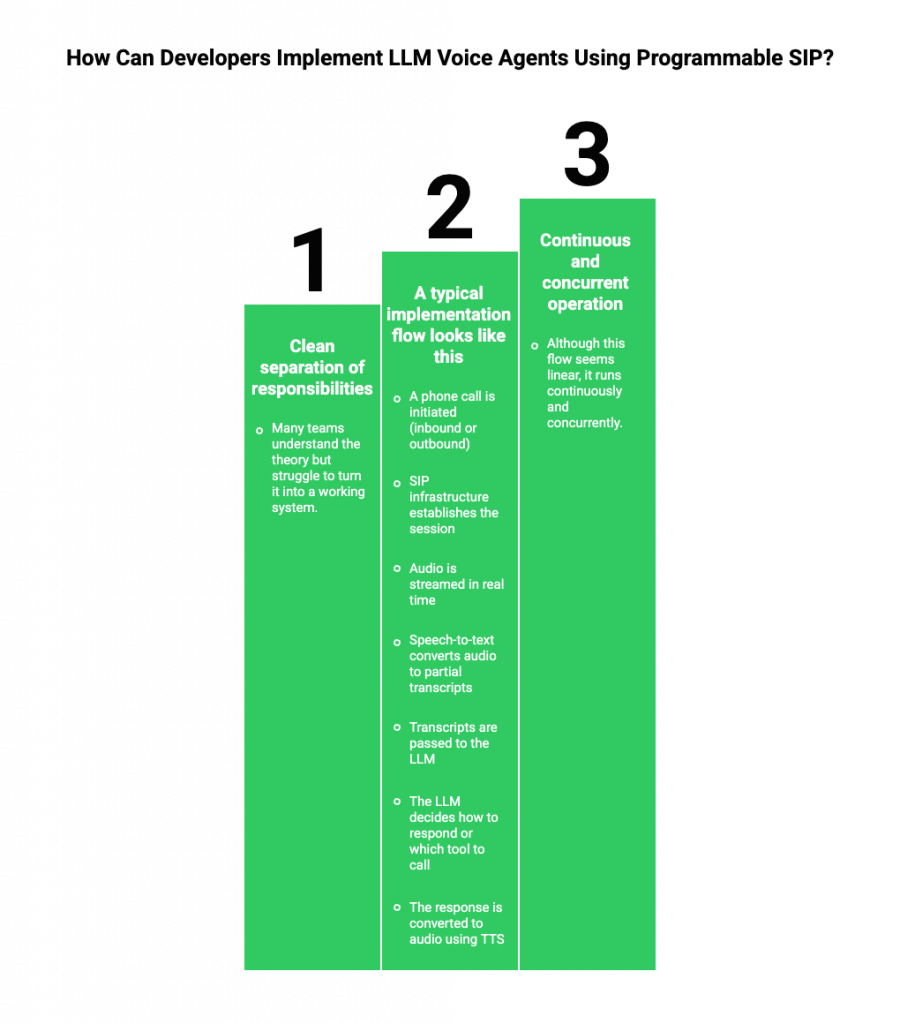

Once the architecture is clear, the next challenge is execution. Many teams understand the theory but struggle to turn it into a working system.

At a practical level, implementing an LLM-powered voice agent requires clean separation of responsibilities. Each component should do one job well.

A typical implementation flow looks like this:

- A phone call is initiated (inbound or outbound)

- SIP infrastructure establishes the session

- Audio is streamed in real time

- Speech-to-text converts audio to partial transcripts

- Transcripts are passed to the LLM

- The LLM decides how to respond or which tool to call

- The response is converted to audio using TTS

- Audio is streamed back into the live call

Although this flow seems linear, it runs continuously and concurrently. Therefore, the system must be built for streaming from the first step.

Key Design Principle

Do not tightly couple AI logic with telephony logic.

Instead, treat telephony as infrastructure and AI as application logic. This separation allows teams to swap LLMs, STT engines, or TTS providers without rewriting the calling layer.
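In code, this separation usually shows up as narrow interfaces. The sketch below uses `typing.Protocol` so that any STT, LLM, or TTS implementation with the right method can be plugged in. The interface names, method names, and the toy implementations are all assumptions, and the single-shot `handle_audio` call is a simplification of what would be a streaming loop in practice.

```python
from typing import Protocol


class SpeechToText(Protocol):
    def transcribe(self, audio: bytes) -> str: ...


class LanguageModel(Protocol):
    def reply(self, transcript: str) -> str: ...


class TextToSpeech(Protocol):
    def synthesize(self, text: str) -> bytes: ...


class VoiceAgent:
    """Application logic that depends only on the interfaces above."""

    def __init__(self, stt: SpeechToText, llm: LanguageModel, tts: TextToSpeech):
        self.stt, self.llm, self.tts = stt, llm, tts

    def handle_audio(self, audio: bytes) -> bytes:
        return self.tts.synthesize(self.llm.reply(self.stt.transcribe(audio)))


# Toy stand-ins so the sketch runs without any external service.
class FakeSTT:
    def transcribe(self, audio: bytes) -> str:
        return audio.decode("utf-8")


class ShoutingLLM:
    def reply(self, transcript: str) -> str:
        return transcript.upper()


class FakeTTS:
    def synthesize(self, text: str) -> bytes:
        return text.encode("utf-8")
```

Swapping a provider then means writing one new class. `VoiceAgent` and the calling layer stay untouched.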

How Does Programmable SIP Enable Real-Time LLM Conversations?

Programmable SIP is what makes real-time voice AI possible at scale. Without it, developers are forced to work around rigid calling systems.

With programmable SIP, developers can:

- Stream audio packets as they arrive

- React to call events instantly

- Route calls dynamically

- Maintain session continuity across services

More importantly, programmable SIP exposes live media streams, not static recordings. This is essential because a low-latency pipeline depends on feeding the STT engine and the LLM incremental input instead of waiting for a complete recording.

As a result, programmable SIP becomes the backbone for:

- Low-latency speech recognition

- Partial LLM responses

- Interrupt handling

- Natural turn-taking

This is why programmable SIP is now a core requirement for any serious LLM SIP integration.
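To give a sense of what "audio packets as they arrive" means in numbers, the sketch below computes the size of one audio frame and splits a buffer into frames. It assumes 8 kHz narrowband audio, 20 ms frames, and 16-bit linear PCM. These are common telephony values but still assumptions here, and the actual codec and frame size depend on what the call negotiates.

```python
from typing import Iterator

SAMPLE_RATE_HZ = 8000   # narrowband telephony sample rate (assumed)
FRAME_MS = 20           # a common packetization interval (assumed)
BYTES_PER_SAMPLE = 2    # 16-bit linear PCM (assumed)


def frame_size_bytes(rate_hz: int = SAMPLE_RATE_HZ,
                     frame_ms: int = FRAME_MS,
                     bytes_per_sample: int = BYTES_PER_SAMPLE) -> int:
    """Bytes in one frame: samples per frame times bytes per sample."""
    return rate_hz * frame_ms // 1000 * bytes_per_sample


def split_frames(pcm: bytes, frame_bytes: int) -> Iterator[bytes]:
    """Yield fixed-size frames; a shorter trailing frame is yielded as-is."""
    for start in range(0, len(pcm), frame_bytes):
        yield pcm[start:start + frame_bytes]
```

With these values one frame is 160 samples, or 320 bytes, and one second of audio arrives as 50 frames. Each frame can be forwarded to STT immediately instead of waiting for the utterance to finish.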

How Does FreJun Teler Fit Into An LLM And SIP Architecture?

At this point, it is useful to introduce FreJun Teler and clarify its role in the stack.

FreJun Teler is a global programmable SIP and real-time media infrastructure platform designed specifically for AI-driven voice systems. It does not provide an LLM, a chatbot builder, or pre-defined call flows. Instead, it focuses on doing one thing well: handling the voice layer for AI agents.

What FreJun Teler Does

FreJun Teler acts as the transport and control layer between telephony networks and AI systems.

Specifically, it provides:

- Programmable SIP for inbound and outbound calls

- Real-time, low-latency audio streaming

- A stable session layer for conversational context

- APIs and SDKs designed for AI workloads

Because of this, developers can connect:

- Any LLM

- Any speech-to-text provider

- Any text-to-speech engine

without being locked into a specific AI stack.

In simple terms:

Developers bring the intelligence. Teler handles the voice infrastructure.

How Can Developers Connect Any LLM With Teler And Their AI Stack?

One of the biggest advantages of using infrastructure like Teler is flexibility. Teams are not forced to change how they build AI.

A typical integration looks like this:

Step-By-Step Integration Flow

- Call Setup
  - A call enters or leaves through SIP
  - Teler establishes the session

- Audio Streaming
  - Audio is streamed in real time
  - Developers receive audio frames instantly

- Speech Recognition
  - Audio is forwarded to an STT engine
  - Partial transcripts are generated

- LLM Processing
  - Transcripts are sent to the LLM
  - Context is preserved across turns

- Tool Calling And RAG (Optional)
  - The LLM fetches external data
  - Business systems are queried

- Speech Synthesis
  - The response is converted to audio
  - Streaming TTS is used for low latency

- Audio Playback
  - Teler streams audio back into the call
  - The loop continues

This pattern allows teams to build custom AI behavior while relying on proven voice infrastructure.

How Does This Approach Scale Beyond MVPs?

Many voice AI systems work during demos but fail under real traffic. This usually happens because voice infrastructure was treated as an afterthought.

Programmable SIP infrastructure changes this.

Scaling Considerations Enabled By Programmable SIP

- Concurrent Calls
  - Handle thousands of live conversations safely

- Geographic Distribution
  - Route calls closer to users
  - Reduce latency across regions

- Fault Tolerance
  - Recover from dropped calls
  - Maintain session integrity

- Traffic Spikes
  - Scale during campaigns or peak hours

Because FreJun Teler is built as infrastructure, these concerns are handled at the platform level. As a result, engineering teams can focus on improving AI behavior instead of firefighting call issues.
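As an illustration of what geographic routing with failover involves, the sketch below picks the lowest-latency region among those currently healthy. The region names and latency figures are invented. This shows the general idea only and says nothing about how Teler implements routing internally.

```python
def pick_region(latency_ms: dict, healthy: set) -> str:
    """Choose the healthy region with the lowest measured latency."""
    candidates = {region: ms for region, ms in latency_ms.items() if region in healthy}
    if not candidates:
        raise RuntimeError("no healthy region available")
    return min(candidates, key=candidates.get)


if __name__ == "__main__":
    # Hypothetical per-region latency measurements, in milliseconds.
    latency = {"mumbai": 35, "frankfurt": 120, "virginia": 210}
    print(pick_region(latency, {"mumbai", "frankfurt", "virginia"}))
    print(pick_region(latency, {"frankfurt", "virginia"}))  # mumbai is down
```

Routing closer to users and recovering from a failed region reduce to the same decision: filter by health first, then minimize latency.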

How Is This Different From Traditional Calling Platforms?

It is important to understand how this model differs from legacy calling platforms.

Traditional calling platforms are built around:

- Phone numbers

- IVRs

- Agents

- Call logs

AI is often added later as a feature.

In contrast, AI-first voice infrastructure assumes:

- The LLM controls the conversation

- Voice is a transport medium

- Calls are just sessions for AI agents

The table below summarizes how the two models differ:

| Traditional Platforms | AI-First SIP Infrastructure |
| --- | --- |
| Fixed call flows | Dynamic, model-driven logic |
| IVR-centric | Conversation-centric |
| Limited streaming | Real-time media access |
| Hard to customize | Fully programmable |

As a result, teams building AI voice agents need infrastructure designed for AI from day one.

What Should Teams Evaluate Before Choosing A SIP API For AI Development?

Before committing to a platform, teams should evaluate several factors carefully.

Key Evaluation Checklist

- Does it support real-time media streaming?

- Can it handle partial transcripts and responses?

- Is it model-agnostic?

- Does it expose low-level call events?

- Can it scale across regions?

- Does it support long-running conversations?

Answering “yes” to these questions is critical for production systems.

What Does The Future Of LLM-Driven Voice Infrastructure Look Like?

Voice is becoming a primary interface again, this time powered by AI. As LLMs improve, voice agents will handle more complex tasks with less friction.

Looking ahead, we can expect:

- Continuous, long-running voice agents

- Deeper integration with enterprise systems

- Multi-modal conversations combining voice and text

- Infrastructure that fades into the background

In this future, programmable SIP will remain foundational. Without reliable voice transport, even the best LLMs cannot deliver good experiences.

How Can Teams Get Started Faster With LLM And SIP Voice Agents?

To move quickly without cutting corners, teams should:

- Start with streaming-first designs

- Separate AI logic from telephony logic

- Choose model-agnostic infrastructure

- Build observability early

- Treat voice as a system, not a feature

Platforms like FreJun Teler exist to remove the hardest infrastructure barriers, allowing teams to focus on building useful, intelligent voice agents.

Final Thought

Integrating LLMs with programmable SIP infrastructure is not just a technical task. It is a system design challenge that spans networking, real-time media handling, and AI orchestration. When these layers are designed together, teams can build voice agents that respond instantly, handle interruptions, and operate reliably over real phone calls. This approach allows LLMs to move beyond text interfaces and become active participants in real conversations.

FreJun Teler is built to support this exact shift by providing programmable SIP and real-time media streaming designed for AI workloads. If you are building voice agents with any LLM, STT, or TTS stack, Teler simplifies the voice infrastructure so your team can focus on intelligence, not telephony.

FAQs

- What is programmable SIP in simple terms?

Programmable SIP lets developers control calls, media streams, and routing using APIs instead of fixed telephony configurations.

- Can I connect any LLM to telephony using SIP?

Yes, with programmable SIP, any LLM can process call audio through streaming pipelines without managing telephony directly.

- Do I need to understand SIP protocols deeply?

Not necessarily. Modern SIP APIs abstract protocol complexity while exposing real-time call and media control.

- Why is streaming important for voice AI?

Streaming reduces latency, enables interruptions, and allows AI to respond naturally during live conversations.

- Can I use my existing STT and TTS providers?

Yes. A model-agnostic SIP infrastructure allows integration with any STT and TTS services.

- How does this differ from IVR systems?

IVRs follow fixed scripts, while LLM voice agents dynamically reason, respond, and adapt in real time.

- Is programmable SIP suitable for enterprise scale?

Yes, it supports concurrent calls, geographic routing, and high availability when designed as infrastructure.

- What latency is acceptable for natural conversations?

Sub-second, ideally under 300 milliseconds end-to-end, to maintain conversational flow.

- Can voice agents handle interruptions and corrections?

Yes, when built with streaming audio and real-time LLM processing.

- When should teams adopt programmable SIP?

As soon as voice becomes a core product interface, not just an experimental feature.