For developers in the Microsoft ecosystem, Semantic Kernel is an SDK worth knowing. It is an orchestrator that lets you build sophisticated AI applications by chaining together plugins, functions, and AI models, so you can create an agent that reasons, plans, and executes complex digital tasks.

But what happens when the next step in your workflow requires a conversation? This is the point where integrating a VoIP calling API with Microsoft’s Semantic Kernel becomes not just a feature, but a necessity.

Imagine the AI-powered planner you built could not only devise a plan to solve a customer’s issue but could also execute that plan by picking up the phone and having a conversation.

This guide will provide a technical blueprint for developers on how to bridge this gap, transforming your silent Semantic Kernel orchestrator into a powerful, voice-enabled agent that can act autonomously in the real world.

What is Semantic Kernel?

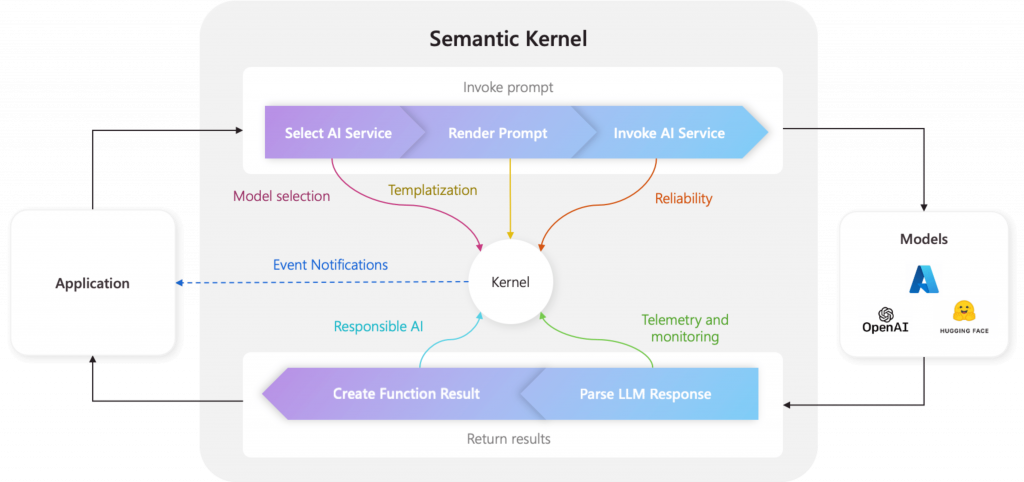

Semantic Kernel (SK) is an open-source SDK from Microsoft that lets you build AI agents that can interact with your own code and external services. It is not just a simple LLM wrapper; it’s a full-fledged framework for creating complex AI workflows.

Key Components of Semantic Kernel

- The Kernel: The core orchestrator that processes requests.

- Plugins: The fundamental building blocks. A plugin is a collection of functions that you can expose to the AI. These can be “semantic functions” (natural language prompts) or “native functions” (your own C# or Python code).

- Planners: A planner takes a user’s goal, analyzes the available plugins, and automatically generates a step-by-step plan to achieve that goal. In recent SK releases, this role is largely handled by the model’s function calling rather than by dedicated planner classes.

- Connectors: These allow SK to connect to various AI models (like Azure OpenAI) and memory sources.

In essence, SK provides the “brain” for an AI that can reason and use tools. Now, let’s give that brain a voice.
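To make the idea of a plugin with a “native function” concrete, here is a minimal sketch in plain Python. The `DatabasePlugin` class, its `get_order_status` method, and the in-memory order store are illustrative assumptions modeled on the order-status scenario used later in this guide, not code from the SK SDK. In a real SK application the method would additionally be registered with the Kernel; that wiring is omitted so the sketch runs on its own.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    order_id: str
    status: str


class DatabasePlugin:
    """Hypothetical plugin: a plain class whose methods are the tools the AI may call."""

    def __init__(self, orders: dict[str, Order]) -> None:
        # In-memory stand-in for a real database (assumption for this sketch).
        self._orders = orders

    def get_order_status(self, order_id: str) -> str:
        """Return a short sentence that can be read aloud to the caller."""
        order = self._orders.get(order_id)
        if order is None:
            return f"I could not find an order with the number {order_id}."
        return f"Your order #{order.order_id} is currently {order.status}."


plugin = DatabasePlugin({"12345": Order("12345", "out for delivery")})
print(plugin.get_order_status("12345"))
```

Returning a complete, speakable sentence rather than a raw status code is a deliberate choice here: in a voice setting, the function's output may be passed almost directly to a TTS service.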

The “Silent Orchestrator” Problem

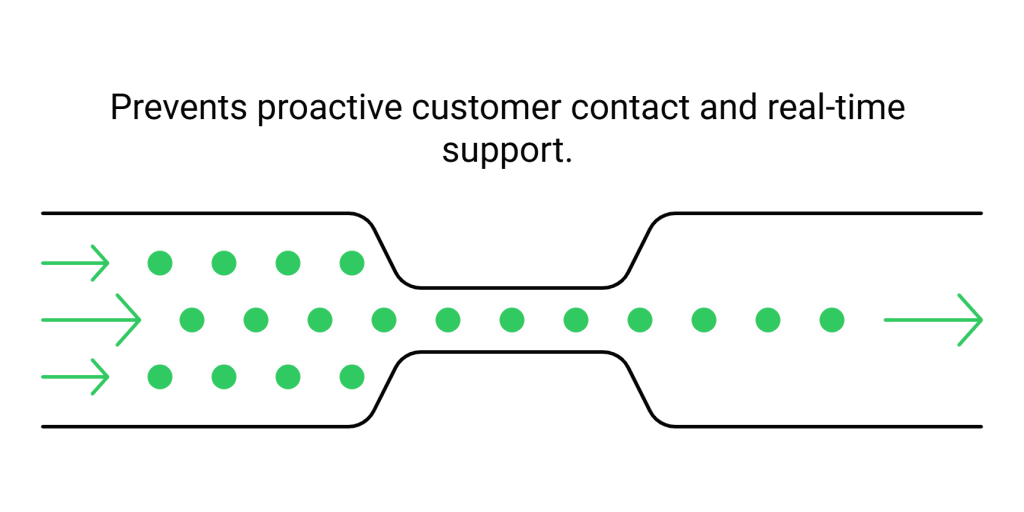

A standard Semantic Kernel application is incredibly powerful at automating digital tasks. It can call a CRM API, query a database, or summarize a document. However, its ability to interact with the world is limited to these digital interfaces. It cannot:

- Call a customer to proactively notify them of an issue found by a monitoring plugin.

- Receive an inbound support call and use its planner to troubleshoot a problem in real-time.

- Automate an outbound sales call where the conversation’s direction is unpredictable.

This is exactly the limitation that integrating a VoIP calling API with Semantic Kernel is designed to overcome.

Step-by-Step Guide to VoIP Calling API Integration for Semantic Kernel

The steps below describe the core data flow of a VoIP calling API integration with Semantic Kernel. Your primary task will be to build the application logic that orchestrates this loop.

- A Call is Received: A user calls a number provided by your voice platform. The platform answers and sends a webhook to your application’s endpoint.

- The User Speaks: The user states their goal, e.g., “I need to check the status of my recent order.”

- Audio is Transcribed (STT): The voice platform streams the user’s audio to an STT service, which returns a text transcript.

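- The Kernel is Invoked: This is the key step. Your application takes the transcribed text (“I need to check the status of my recent order.”) and passes it to your Semantic Kernel instance. In the C# SDK, free-text input like this typically goes through Kernel.InvokePromptAsync(), since Kernel.InvokeAsync() expects a specific function to run.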

- SK Executes the Plan: The Kernel’s planner sees the goal. It identifies that it has a DatabasePlugin with a function called GetOrderStatus. It creates a plan to execute this function, calls your native C# or Python code to query the database, and gets the result.

- A Response is Generated: The Kernel returns the final result as a text string, such as “Your order #12345 is currently out for delivery.”

- Text is Synthesized (TTS): Your application sends this string to a TTS service, which generates the audio.

- The Agent Speaks: The voice platform streams this audio back to the user on the call. The loop is now ready for the user’s next turn.
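The loop above can be sketched as a single “turn” function. This is a minimal sketch under stated assumptions: `handle_turn`, the three callable types, the fallback reply, and the fake services are all hypothetical names introduced for illustration. In a real application, `invoke_kernel` would wrap a call into the Semantic Kernel SDK, and `transcribe` and `synthesize` would wrap your chosen STT and TTS services.

```python
import asyncio
from typing import Awaitable, Callable

# Hypothetical interfaces: each is an async callable supplied by your integration code.
Transcribe = Callable[[bytes], Awaitable[str]]    # STT: audio in, text out
InvokeKernel = Callable[[str], Awaitable[str]]    # Semantic Kernel: text in, text out
Synthesize = Callable[[str], Awaitable[bytes]]    # TTS: text in, audio out

FALLBACK_REPLY = "Sorry, I didn't catch that. Could you repeat it?"


async def handle_turn(
    audio: bytes,
    transcribe: Transcribe,
    invoke_kernel: InvokeKernel,
    synthesize: Synthesize,
) -> bytes:
    """Run one conversational turn: STT, then the Kernel, then TTS."""
    transcript = await transcribe(audio)
    if not transcript.strip():
        # Nothing intelligible was heard, so skip the Kernel and ask the caller to repeat.
        reply = FALLBACK_REPLY
    else:
        reply = await invoke_kernel(transcript)
    return await synthesize(reply)


# Fake services so the sketch runs without any external dependency.
async def fake_transcribe(audio: bytes) -> str:
    return audio.decode("utf-8")


async def fake_invoke_kernel(text: str) -> str:
    if "order" in text.lower():
        return "Your order #12345 is currently out for delivery."
    return "How can I help you today?"


async def fake_synthesize(text: str) -> bytes:
    return text.encode("utf-8")


audio_out = asyncio.run(
    handle_turn(
        b"I need to check the status of my recent order.",
        fake_transcribe,
        fake_invoke_kernel,
        fake_synthesize,
    )
)
print(audio_out.decode("utf-8"))
```

Passing the three services in as callables keeps the turn logic independent of any particular vendor, which also makes it easy to test with fakes like the ones shown.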

Use Cases Powered by Voice-Enabled Plugins

A successful VoIP calling API integration with Semantic Kernel turns your plugins into real-time, interactive tools.

- Intelligent Support Agents: A user calls in. The SK agent uses a KnowledgeBasePlugin to answer questions, and if needed, a TicketSystemPlugin to create a new support ticket by talking to the user.

- Autonomous Sales Assistants: An outbound call is placed to a new lead. The agent uses a CRMPlugin to pull the lead’s details, has a qualifying conversation, and uses a CalendarPlugin to book a demo, all orchestrated by the planner.

- Proactive Monitoring Agents: A MonitoringPlugin detects a server outage. The planner decides the correct action is to call the on-call engineer, using the voice integration to place the call and deliver the alert message.
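As an illustration of the proactive monitoring case, the sketch below shows the shape such a function might take. Everything here is hypothetical: `HealthCheck`, `build_alert_message`, `alert_on_call_engineer`, and the `place_call` callable stand in for your monitoring data and your voice platform's outbound-call API. The decision to call is a plain `if` in this sketch; in an SK application, the planner would make that decision and invoke the function as a tool.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical signature for an outbound call: (phone_number, message_to_speak).
PlaceCall = Callable[[str, str], None]


@dataclass(frozen=True)
class HealthCheck:
    server: str
    is_up: bool


def build_alert_message(check: HealthCheck) -> Optional[str]:
    """Return the message to speak, or None when no alert is needed."""
    if check.is_up:
        return None
    return f"Alert: server {check.server} is not responding. Please investigate."


def alert_on_call_engineer(
    check: HealthCheck, on_call_number: str, place_call: PlaceCall
) -> bool:
    """Place a call only when the check failed; report whether a call was placed."""
    message = build_alert_message(check)
    if message is None:
        return False
    place_call(on_call_number, message)
    return True


# Record calls in a list instead of dialing a real number.
placed: list[tuple[str, str]] = []
alert_on_call_engineer(
    HealthCheck("web-01", is_up=False),
    "+15550100",
    lambda number, message: placed.append((number, message)),
)
print(placed)
```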

Conclusion

Microsoft’s Semantic Kernel gives you the power to build AI that can plan and act. Integrating a VoIP calling API gives that AI a voice to act in the real world.

By connecting your Kernel’s powerful orchestration capabilities to a real-time voice interface, you transform your application from a silent, digital workflow engine into an autonomous agent that can communicate, solve problems, and execute tasks in the most human way possible.

Frequently Asked Questions (FAQs)

Does Semantic Kernel have built-in voice or telephony capabilities?

No. Semantic Kernel is a text-in, text-out SDK. You must integrate it with external voice services: a voice infrastructure platform for telephony, an STT service for listening, and a TTS service for speaking.

What connects the voice platform to Semantic Kernel?

Your custom code (e.g., an ASP.NET Core Web API) acts as the central orchestrator. It receives webhooks from the voice platform, makes calls to the Semantic Kernel, and communicates with the STT/TTS services.

Which programming languages can I use?

Semantic Kernel officially supports C#, Python, and Java. You therefore typically write your orchestration application in one of these languages so that it integrates natively with the SK SDK.

How important is latency in a voice integration?

It is critical. Conversational latency is the total time it takes to transcribe the user’s speech, process it through the Kernel (including any plugin executions), convert the response back to speech, and play it to the caller. This total must stay very low (around a second) for the conversation to feel natural.
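One way to keep an eye on that budget is to time each stage of a turn separately. The sketch below is an assumption-laden illustration: `LatencyTracker` is a hypothetical helper, the stage names are arbitrary labels, and the 1.0 second default simply mirrors the “around a second” target mentioned above. The `time.sleep` calls stand in for real STT, Kernel, and TTS work.

```python
import time
from contextlib import contextmanager
from typing import Iterator, Optional


class LatencyTracker:
    """Hypothetical helper that accumulates per-stage timings for one turn."""

    def __init__(self, budget_seconds: float = 1.0) -> None:
        self.budget_seconds = budget_seconds
        self.stages: dict[str, float] = {}

    @contextmanager
    def stage(self, name: str) -> Iterator[None]:
        """Time the enclosed block and add the elapsed seconds to the named stage."""
        start = time.perf_counter()
        try:
            yield
        finally:
            elapsed = time.perf_counter() - start
            self.stages[name] = self.stages.get(name, 0.0) + elapsed

    @property
    def total(self) -> float:
        return sum(self.stages.values())

    def over_budget(self) -> bool:
        return self.total > self.budget_seconds

    def slowest_stage(self) -> Optional[str]:
        """Name of the stage that took longest, or None if nothing was timed."""
        if not self.stages:
            return None
        return max(self.stages, key=lambda name: self.stages[name])


tracker = LatencyTracker(budget_seconds=1.0)
with tracker.stage("stt"):
    time.sleep(0.01)   # stand-in for transcription
with tracker.stage("kernel"):
    time.sleep(0.02)   # stand-in for Kernel and plugin execution
with tracker.stage("tts"):
    time.sleep(0.01)   # stand-in for speech synthesis

print(f"total={tracker.total:.3f}s over_budget={tracker.over_budget()}")
```

Knowing which stage dominates tells you where to optimize first, for example by streaming TTS output or trimming slow plugin calls.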