For years, the promise of conversational AI felt just out of reach. We were given automated phone systems that were little more than frustrating, audio-based flowcharts. These early bots were “brittle”: they could follow a script, but the moment you deviated, the entire conversation would shatter. They lacked understanding, context, and the fluid intelligence that defines human interaction.

The game has now fundamentally changed. The engine driving this revolution is the Large Language Model (LLM), and when applied to voice, it creates a new class of AI with unprecedented capabilities. A voice LLM is not just an incremental upgrade; it’s a complete paradigm shift. It transforms an AI voicebot from a rigid script-reader into a dynamic, reasoning conversational partner.

This isn’t just about making bots sound better; it’s about making them think better. This guide will explore the specific, measurable ways a voice LLM elevates the performance of a conversational AI voice assistant, turning a once-frustrating technology into a powerful tool for customer engagement.

What Makes a Voice LLM Different from Older AI?

To appreciate the leap forward, you must first understand the old world. Traditional automated voice systems were built on “rule-based” logic. A developer had to manually map out every single possible path a conversation could take. This is like writing a choose-your-own-adventure book with thousands of pages. It’s incredibly rigid and can’t handle anything unexpected.

A voice LLM is entirely different. It’s not a flowchart; it’s a massive neural network that has been trained on a vast corpus of human language. This gives it an intuitive grasp of grammar, context, and nuance. It’s the difference between a robot that can only follow a pre-programmed path and an expert guide who knows the entire landscape and can create a new path for you on the fly.

How Does a Voice LLM Specifically Improve Performance?

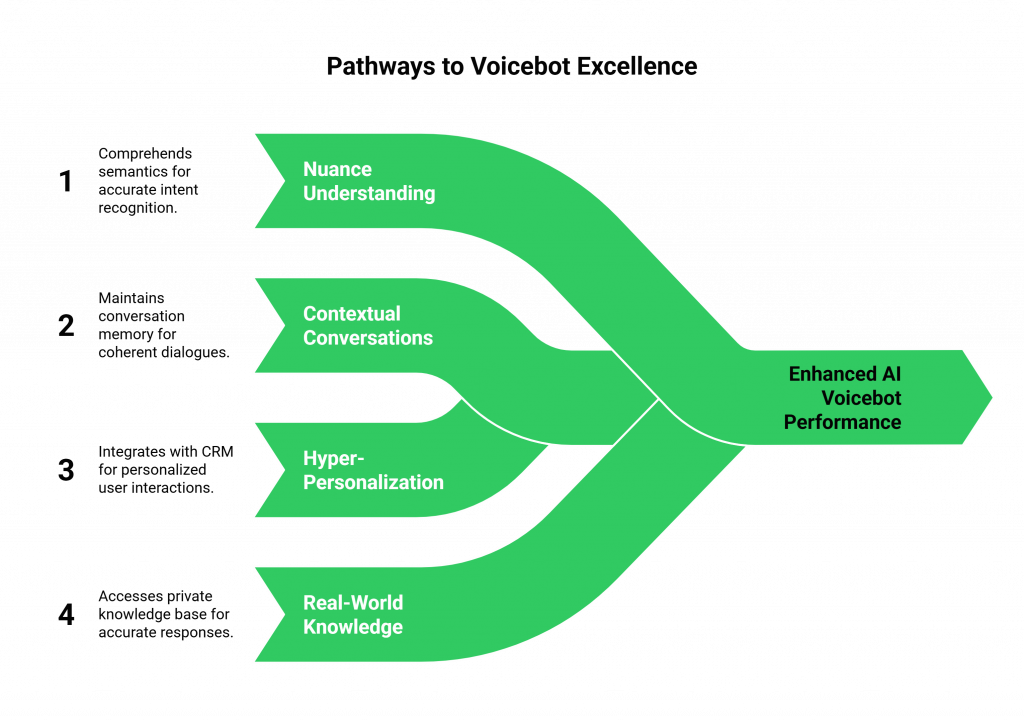

The “intelligence” of a voice LLM isn’t a vague concept; it translates into concrete, measurable improvements in the performance and effectiveness of your AI voicebot.

How Does It Understand Nuance and Complex Intent?

Older systems relied on simple keyword spotting. A voice LLM, on the other hand, understands semantics, the real meaning behind the words.

- Old Way: A user says, “My bill is wrong.” The bot looks for the keyword “bill” and sends them to the billing department.

- New Way with a Voice LLM: A user says, “I was looking at my last statement, and the charge for this month seems way higher than it should be.” The voice LLM understands the complete context. It recognizes the user’s frustration, identifies the core issue as a “billing dispute,” and can even ask intelligent follow-up questions like, “I can help with that. Are you referring to the charge from May 15th?”
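To make the contrast concrete, here is a minimal Python sketch of the old keyword-spotting approach. The `KEYWORD_ROUTES` table and the `route_by_keyword` function are hypothetical names invented for this illustration, not code from any real product. It shows why the approach is brittle: the second utterance describes a billing problem without ever using the word “bill,” so the router finds nothing to match.

```python
from typing import Optional

# Hypothetical routing table: keyword -> department (illustrative only).
KEYWORD_ROUTES = {
    "bill": "billing",
    "refund": "billing",
    "password": "tech_support",
}


def route_by_keyword(utterance: str) -> Optional[str]:
    """Return the department for the first keyword found, or None."""
    words = utterance.lower().replace(",", " ").replace(".", " ").split()
    for word in words:
        if word in KEYWORD_ROUTES:
            return KEYWORD_ROUTES[word]
    return None  # No keyword matched: the caller falls through to a fallback.


short = "My bill is wrong."
long_form = (
    "I was looking at my last statement, and the charge for this month "
    "seems way higher than it should be."
)

print(route_by_keyword(short))      # billing
print(route_by_keyword(long_form))  # None
```

A voice LLM avoids this failure because it classifies the meaning of the whole sentence rather than matching individual words.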

How Does It Enable Contextual, Multi-Turn Conversations?

This is one of the most powerful performance enhancements. A voice LLM can be architected to have “memory.” It can track the state of the conversation, remembering what was said just moments ago. This eliminates the number one frustration for customers: having to repeat themselves.

- Without Context: “What is your order number?” … “Okay, I see that order. What is the order number again?”

- With Context: “What is your order number?” … “Okay, I see order 12345. Are you calling to check on the shipping status for that order?”

This ability to have a coherent, multi-turn dialogue is a hallmark of a high-performance conversational AI voice assistant.
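One common way to give a model this kind of “memory” is to keep the transcript in the application and send the whole history back on every turn. The sketch below assumes that design. `Conversation` and `fake_llm` are hypothetical names, and `fake_llm` is only a stand-in for a real model call so the example runs on its own.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]  # {"role": "user" | "assistant", "content": "..."}


class Conversation:
    """Keeps the running transcript and replays it to the model each turn."""

    def __init__(self, llm: Callable[[List[Message]], str]) -> None:
        self.llm = llm  # any callable that maps history -> reply
        self.history: List[Message] = []

    def user_says(self, text: str) -> str:
        self.history.append({"role": "user", "content": text})
        reply = self.llm(self.history)  # the model sees every earlier turn
        self.history.append({"role": "assistant", "content": reply})
        return reply


def fake_llm(history: List[Message]) -> str:
    """Stand-in for a real model: reports which user turns it can see."""
    user_turns = [m["content"] for m in history if m["role"] == "user"]
    return f"I have {len(user_turns)} user turn(s); first was: {user_turns[0]!r}"


chat = Conversation(fake_llm)
chat.user_says("My order number is 12345.")
print(chat.user_says("When will it ship?"))
```

Because the second call still carries the first user turn, the model never needs to ask for the order number again.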

How Does It Achieve Hyper-Personalization at Scale?

A voice LLM integrates with external tools, most importantly your CRM, which lets it move beyond generic scripts and deliver deeply personalized conversations. When a known customer calls, the AI instantly accesses their entire history.

- Personalized Greeting: “Welcome back, Sarah. Are you calling about the laptop you purchased last week?”

This level of personalization is a massive driver of growth. A report from McKinsey found that companies that excel at personalization generate a stunning 40% more revenue from those activities than their slower-moving competitors.
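The CRM lookup behind a personalized greeting might be sketched as follows. Everything here is an assumption made for illustration: the `CustomerRecord` fields, the in-memory `FAKE_CRM` dictionary, the phone number, and the function names. A real deployment would call your CRM’s own API and would more likely pass the record to the voice LLM as context than fill in a fixed template.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class CustomerRecord:
    first_name: str
    last_purchase: str


# Hypothetical in-memory CRM keyed by caller phone number (illustrative data).
FAKE_CRM: Dict[str, CustomerRecord] = {
    "+15550100": CustomerRecord(first_name="Sarah", last_purchase="laptop"),
}

GENERIC_GREETING = "Hello, thanks for calling. How can I help you today?"


def lookup_customer(phone: str) -> Optional[CustomerRecord]:
    """Return the caller's record, or None for an unknown number."""
    return FAKE_CRM.get(phone)


def build_greeting(phone: str) -> str:
    """Personalize the greeting when the caller is known."""
    customer = lookup_customer(phone)
    if customer is None:
        return GENERIC_GREETING
    return (
        f"Welcome back, {customer.first_name}. "
        f"Are you calling about the {customer.last_purchase} you purchased recently?"
    )


print(build_greeting("+15550100"))
print(build_greeting("+15550199"))
```

The fallback branch matters as much as the personalized one: an unknown caller should get a normal greeting, not an error.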

How Does It Dynamically Access Real-World Knowledge?

A standard LLM’s knowledge is static and general. To be a true expert, your AI voicebot needs to know your business. This is achieved with a technique called Retrieval-Augmented Generation (RAG). When you connect your voice LLM to your private knowledge base, it can “look up” current information before answering a question, which grounds its answers in your actual policies and makes factual errors far less likely.
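The retrieve-then-generate flow can be sketched in a few lines of Python. This is a toy under stated assumptions: the knowledge-base snippets are invented, and retrieval uses simple word overlap, whereas production systems typically use embedding-based vector search. The names `KNOWLEDGE_BASE`, `retrieve`, and `build_prompt` are hypothetical.

```python
import re
from typing import List, Set

# Hypothetical knowledge-base snippets (invented for this example).
KNOWLEDGE_BASE: List[str] = [
    "Refunds are issued to the original payment method within 5 business days.",
    "Standard shipping takes 3 to 7 business days.",
    "Support is available by phone from 9am to 6pm on weekdays.",
]


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: List[str], k: int = 1) -> List[str]:
    """Rank documents by how many words they share with the question."""
    q = tokenize(question)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, docs: List[str]) -> str:
    """Put the retrieved snippets in front of the question for the model."""
    context = "\n".join(f"- {d}" for d in retrieve(question, docs))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


print(build_prompt("How long does shipping take?", KNOWLEDGE_BASE))
```

The prompt that reaches the model now contains the shipping policy itself, so the answer can be drawn from your documents rather than from the model’s general training data.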

Ready to build a truly high-performance voice AI? Explore FreJun AI’s low-latency voice infrastructure. Sign up Now!

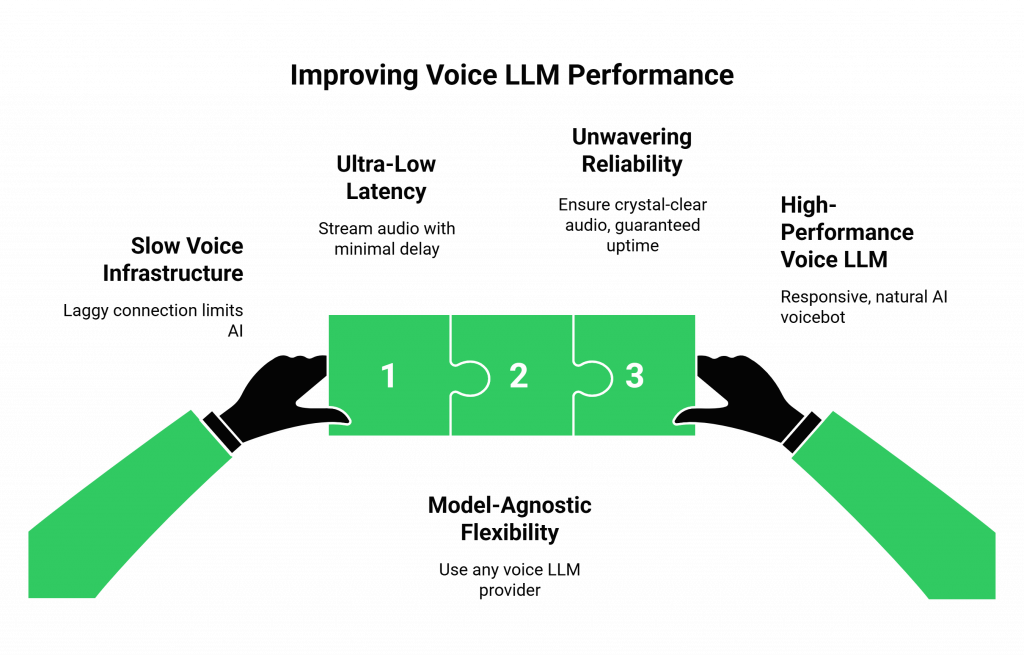

What is the Role of Voice Infrastructure in LLM Performance?

A voice LLM can generate a response in a fraction of a second, but that speed is wasted if the “nervous system” connecting it to a live phone call is slow and laggy. The performance of your AI depends directly on the performance of your voice infrastructure.
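A rough latency budget shows why transport matters. The sketch below assumes a speech-to-text, LLM, text-to-speech pipeline with audio travelling once in each direction. The stage names and every number are placeholders chosen to illustrate the arithmetic, not measurements of any real system.

```python
from typing import Dict

# Placeholder stage timings in milliseconds. These are NOT measurements;
# they are round numbers chosen only to illustrate the arithmetic.
MODEL_STAGES: Dict[str, int] = {
    "speech_to_text": 200,
    "llm": 300,
    "text_to_speech": 150,
}


def turn_latency_ms(transport_one_way_ms: int, stages: Dict[str, int]) -> int:
    """Total delay for one turn: audio in, model stages, audio out."""
    return transport_one_way_ms + sum(stages.values()) + transport_one_way_ms


fast_network = turn_latency_ms(50, MODEL_STAGES)
slow_network = turn_latency_ms(400, MODEL_STAGES)

print(fast_network)  # 750
print(slow_network)  # 1450
```

With identical model stages, the slower network almost doubles the delay the caller hears in this example, because the transport cost is paid twice per turn.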

This is where a high-performance, developer-first platform like FreJun AI is the essential foundation. Our philosophy is simple: “We handle the complex voice infrastructure so you can focus on building your AI.”

- Ultra-Low Latency: We are obsessed with speed. Our entire global network is engineered to stream audio back and forth with the lowest possible latency. This is what allows your voice LLM to feel responsive and natural, eliminating the awkward pauses that kill a conversation.

- Model-Agnostic Flexibility: We don’t lock you into a single AI provider. FreJun AI is a model-agnostic platform, giving you the freedom to use the most powerful and cost-effective voice LLM on the market, whether it’s from Google, OpenAI, or an open-source model you host yourself.

- Unwavering Reliability: A high-performance AI needs a reliable connection. Our enterprise-grade infrastructure ensures crystal-clear audio and guaranteed uptime, so your AI voicebot is always available to your customers.
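On the application side, model-agnostic design usually means coding against a small interface instead of one vendor’s SDK. The sketch below is a generic illustration of that idea and is not FreJun AI’s API. `TextModel`, `EchoModel`, `ShoutModel`, and `handle_turn` are hypothetical names, and the two toy classes stand in for real provider adapters.

```python
from typing import Protocol


class TextModel(Protocol):
    """Anything with a complete() method can serve as the model."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Toy stand-in for one provider's adapter."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class ShoutModel:
    """Toy stand-in for a second provider's adapter."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


def handle_turn(model: TextModel, transcript: str) -> str:
    """Call-handling code depends only on the interface, not the vendor."""
    return model.complete(transcript)


print(handle_turn(EchoModel(), "hi"))   # echo: hi
print(handle_turn(ShoutModel(), "hi"))  # HI
```

Swapping providers then means writing one new adapter class, with no changes to the call-handling code.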

Conclusion

The voice LLM is the engine of the modern conversational AI voice assistant. It replaces the rigid, brittle logic of the past with a new level of intelligence, understanding, and adaptability. This isn’t just about better technology; it’s about a better customer experience. The demand for this is clear, with the conversational AI market expected to grow by over 23% annually, reaching nearly $50 billion by 2028.

By enhancing performance through a deeper understanding of nuance, enabling contextual memory, and providing hyper-personalization, a voice LLM can transform your customer support from a cost center into a powerful driver of satisfaction and loyalty. And when this brilliant “brain” is paired with a high-performance “nervous system,” the possibilities are truly limitless.

Want to learn more about the infrastructure that powers the most intelligent voice LLMs? Schedule a demo with FreJun AI today.

Frequently Asked Questions (FAQs)

A voice LLM is a Large Language Model that has been specifically adapted for use in a voice-based conversational AI. It’s the “brain” that allows an AI voice agent to understand natural human speech, have a contextual conversation, and generate fluent, human-like spoken responses.

The biggest benefit of a voice LLM over a traditional menu-driven phone system is its ability to understand natural language intent. A user can describe their problem in their own words instead of navigating a rigid “press 1, press 2” menu.

A voice LLM keeps track of a conversation by maintaining a “state” or memory of the current call. With each new turn, the application sends the entire conversation history back to the voice LLM, giving it the full context it needs to provide a coherent response.

Yes, a voice LLM can respond to a customer’s mood. Modern voice LLM systems can incorporate sentiment analysis. They can analyze the words a customer uses to determine if their sentiment is positive, negative, or neutral, and then adapt the conversation accordingly.

RAG (Retrieval-Augmented Generation) improves performance by improving factual accuracy. It allows the AI voicebot to “look up” information in your private, up-to-date knowledge base before answering, which greatly reduces the risk of it “hallucinating” or giving incorrect information.

A larger model does not necessarily mean a slower conversation. While larger models can have a slightly higher processing time, this is often negligible. The biggest factor in the overall response time (latency) is the speed of the voice infrastructure that transports the audio back and forth.

The goal is often to augment human agents, not replace them. A voice LLM is well suited to handling high-volume, repetitive queries, which frees up your highly skilled human agents to focus on the most complex, emotional, or high-value customer interactions.

FreJun AI provides the ultra-low-latency voice infrastructure, which is the “nervous system” for the AI’s brain. Our platform’s speed and reliability deliver the voice LLM’s intelligence in real time, making conversations feel natural and responsive.