For a developer building voice AI, the real test isn’t how smart the response is, but how fast it arrives. Even the smartest AI feels broken if there’s a three-second pause before answering. That silence frustrates users and ruins the experience. This delay, called latency, is the biggest enemy of natural, human-like conversation.

At its core, a voice AI is a real-time data processing challenge. It’s the high-stakes, high-speed task of capturing the analog chaos of a human voice and transforming it into a structured, digital response in the blink of an eye. The key to solving this challenge is audio streaming.

This guide is for the developer in the trenches. We will move beyond a surface-level overview and take a deep architectural dive into the world of real-time audio streaming.

We will dissect the modern AI pipeline, explore the critical role of the websocket API, and explain how a high-performance voice APIs for developers platform is the essential foundation for achieving the ultra-low latency your application demands.

Table of contents

- Why is “Batch Processing” the Enemy of a Real-Time Conversation?

- What is the Technical Journey of a Spoken Word in a Streaming AI Pipeline?

- How Does a WebSocket API Make This Real-Time Stream Possible?

- What is the Role of a Voice API in This Streaming Architecture?

- What are the Best Practices for Handling a Live Audio Stream?

- Conclusion

- Frequently Asked Questions (FAQs)

Why is “Batch Processing” the Enemy of a Real-Time Conversation?

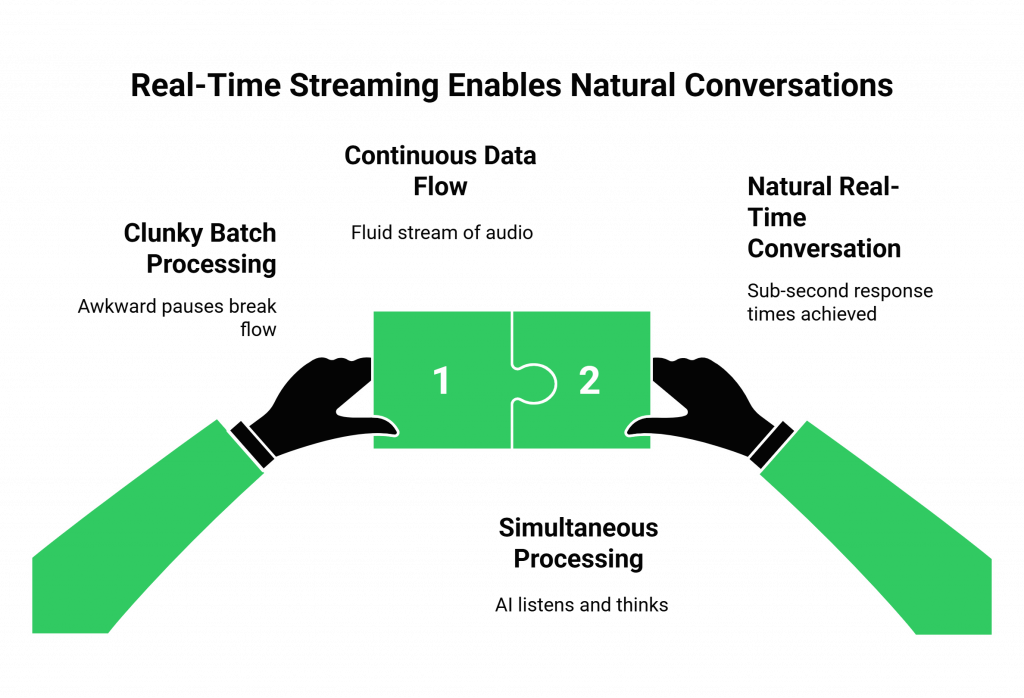

To understand why streaming is so revolutionary, you first have to understand the old, broken model: batch processing.

Imagine trying to have a conversation where you must first wait for the other person to finish their entire paragraph, then write it down, think of a response, and only afterward begin speaking. In other words, that’s how a batch-processing voice AI operates.

It first records several seconds of your speech, then sends the entire audio file to the AI, and afterward waits for the complete response before finally playing it back. As a result, the interaction becomes clunky and turn-based, filled with long, awkward pauses that break the natural conversational flow.

Real-time audio streaming is the exact opposite. It’s a continuous, fluid flow of data. Think of it like a live video call versus sending a video file.

A streaming architecture allows your AI to “listen” and “think” simultaneously, processing the user’s voice word-by-word as it’s being spoken. This is the only way to achieve the sub-second response times that a natural conversation requires. The demand for this level of immediacy is a defining characteristic of the modern consumer.

A recent HubSpot report found that a remarkable 90% of customers rate an “immediate” response as important or very important when they have a customer service question.

Also Read: Voice Assistant Chatbot Use Cases in 2025

What is the Technical Journey of a Spoken Word in a Streaming AI Pipeline?

To achieve this real-time effect, a single spoken word goes on an incredible, high-speed journey through a modern AI pipeline. Understanding this data flow is the key to building a low-latency system.

- The Capture: A user speaks into their phone or web browser. The sound waves are captured by the microphone.

- The Ingress: The audio is received by your voice infrastructure provider. This is the “on-ramp” to your system.

- The Stream (The WebSocket Tunnel): This is the critical first leg of the journey. The voice platform doesn’t wait; it immediately opens a persistent websocket API connection to your backend server and begins streaming the raw audio data packet by packet.

- The Relay (Your Backend Server): Your server’s job is to be a high-speed traffic controller. It receives the audio packets from the voice platform and instantly forwards them to your chosen streaming Speech-to-Text (STT) engine.

- The Transcription: The STT engine receives the audio stream and, in real-time, sends back a stream of text, a live, rolling transcript of the conversation.

- The Cognition: Your server analyzes this live transcript, detects when the user has finished their sentence, and then calls your LLM with the full context of the conversation.

- The Synthesis: The LLM returns a text response. Your server immediately starts streaming this text to a streaming Text-to-Speech (TTS) engine.

- The Egress: The TTS engine sends back a stream of generated audio packets. Your server forwards these packets back down the open WebSocket to the voice platform, which then plays them to the user.

This entire, eight-step round trip happens in a fraction of a second.

How Does a WebSocket API Make This Real-Time Stream Possible?

The hero of this entire story is the WebSocket. A traditional HTTP API is like sending a letter; you send a request, and you wait for a response. The connection is temporary. A websocket API is like having an open phone line; it’s a persistent, two-way connection where both the client and the server can send data to each other at any time, without having to establish a new connection for every message.

This persistent, bidirectional flow is what makes real-time audio streaming possible. It allows for a continuous, low-overhead stream of tiny audio packets, which is far more efficient than sending a series of large, individual HTTP requests. A professional voice platform uses a websocket API as the primary method for delivering live audio to your application.

Also Read: Best Practices for Voice API Integration in SaaS

What is the Role of a Voice API in This Streaming Architecture?

If the WebSocket is the open phone line, the voice APIs for developers platform is the entire global telecommunications company that makes that phone line work. It is the master abstraction layer that hides a world of complexity.

This is the core value of a platform like FreJun AI. We handle the connections to the global telephone network, the management of phone numbers, and the translation of arcane telecom protocols into a clean, digital stream.

FreJun Teler (FreJun AI) operate a globally distributed network of high-performance WebSocket servers, ensuring that no matter where your user is, their audio can be streamed to your application with the lowest possible latency.

We automatically handle the dozens of different audio formats and codecs used in the telephony world and deliver a clean, standardized raw audio stream to your server, so you don’t have to worry about decoding.

This powerful abstraction is a massive accelerator for development. The API economy is a dominant force in modern software, with the 2023 Postman “State of the API” report revealing that developers now spend a majority of their time, nearly 60% of their work week, working directly with APIs.

Ready to start working with a clean, real-time audio stream? Sign up for FreJun AI and get your API keys in minutes.

What are the Best Practices for Handling a Live Audio Stream?

Once you have the stream coming into your server, building a resilient application requires some specific best practices.

- Asynchronous Processing: Your server’s main thread should focus on the real-time task of routing audio packets, while slower, non-real-time tasks, like logging conversations to a database, run asynchronously in separate processes or threads to prevent blocking the audio stream.

- Intelligent Utterance Detection: Don’t just rely on a simple silence timer to detect when a user is done speaking. A more advanced approach involves analyzing the live transcript from the STT. Looking for grammatical cues or a pause after a complete sentence can be a much faster and more accurate way to trigger the “thinking” part of your AI pipeline.

- Graceful Error Handling: What happens if the WebSocket connection drops for a moment? Your application needs to have a retry logic to attempt to reconnect, and a clear “fallback” plan (like playing an error message to the user) if the connection cannot be re-established.

Also Read: Best AI Call Agent Platforms for Lead Qualification

Conclusion

A real-time voice AI is, at its heart, a real-time streaming data problem. The illusion of a natural, intelligent conversation is a direct result of the speed and reliability of the underlying AI pipeline. The ability to get a clean, raw, and ultra-low-latency stream of audio is the absolute prerequisite for building a high-quality voice experience.

The modern voice APIs for developers is the key that unlocks this capability. It is the powerful and elegant abstraction that allows any developer to harness the complexity of global, real-time communication and build the next generation of conversational AI.

Want to see our low-latency audio streaming architecture in action? Schedule a demo for FreJun Teler!

Also Read: What Is an Auto Caller? Features, Use Cases, and Top Tools in 2025

Frequently Asked Questions (FAQs)

Batch processing involves recording a chunk of audio, sending the whole file, and waiting for a response. Audio streaming continuously sends audio data in real time, enabling the AI to process speech as it’s spoken and reduce latency significantly.

A WebSocket API uses the WebSocket protocol to maintain a persistent, two-way connection between the client and server, allowing real-time applications like voice to send data instantly without repeatedly creating new HTTP connections.

The AI pipeline is the sequence of real-time processes that a user’s utterance goes through. It typically streams the audio, transcribes it with an STT, processes and generates a response with an LLM, and then converts that response back to audio using a TTS.

Low latency is critical because humans are very sensitive to delays in a conversation. A long pause makes the AI feel slow and robotic. An ultra-low-latency response is essential for a conversation to feel natural and fluid.

No. This is the main benefit of using a high-level voice APIs for developers. The platform handles all the deep, complex networking (like NAT traversal, packet loss concealment, and jitter buffers), providing you with a clean, simple stream to work with.

A codec (coder-decoder) is an algorithm used to compress and decompress digital audio data. The telephony world uses many different codecs. A good voice API platform automatically handles all the codec translation for you.

Look for a provider that explicitly focuses on being a voice APIs for developers platform, has a globally distributed network, offers a clear and simple websocket API for audio, and has a strong reputation for low latency and high reliability.

Full-duplex audio means that data can be sent and received at the same time, just like a natural phone call. This is a critical feature for enabling advanced capabilities like user interruption (“barge-in”).

FreJun AI provides the essential, high-performance voice infrastructure. We manage the connection to the user (via phone or web) and provide the clean, raw, ultra-low-latency audio streaming to your backend application via our websocket API. We are the specialized “nervous system” for your AI’s brain.

The first step is to choose your voice infrastructure provider and get an API key. Then, follow their “getting started” guide to set up a simple webhook and establish your first real-time audio streaming connection.