The launch of OpenAI’s latest model has sent a shockwave through the developer community. The power and flexibility of AI voice agents using OpenAI GPT-4.5 are setting a new standard for what’s possible in automated, human-like interaction. With its native ability to process audio and generate speech in real time, GPT-4.5 provides the “brain” for a new class of intelligent, context-aware assistants that can understand not just words, but also tone and emotion.

Table of contents

- What Makes GPT-4.5 a Game-Changer for Voice?

- The Hidden Challenge: A Brilliant AI Without a Voice

- FreJun: The Voice Infrastructure Layer for Your GPT-4.5 Agent

- DIY Telephony vs. A FreJun-Powered Agent: A Comparison

- How to Build a Complete AI Voice Agent (Step-by-Step)?

- Best Practices for a Flawless Implementation

- Final Thoughts

- Frequently Asked Questions (FAQ)

The path to creating this AI brain has never been clearer. However, a critical and often underestimated challenge still prevents these agents from reaching their full potential: an AI brain, no matter how powerful, is useless if it has no way to connect to the real world. This guide walks you through not only how to build the AI core of your voice agent but also how to solve the infrastructure problem that separates a promising prototype from a scalable, enterprise-ready solution.

What Makes GPT-4.5 a Game-Changer for Voice?

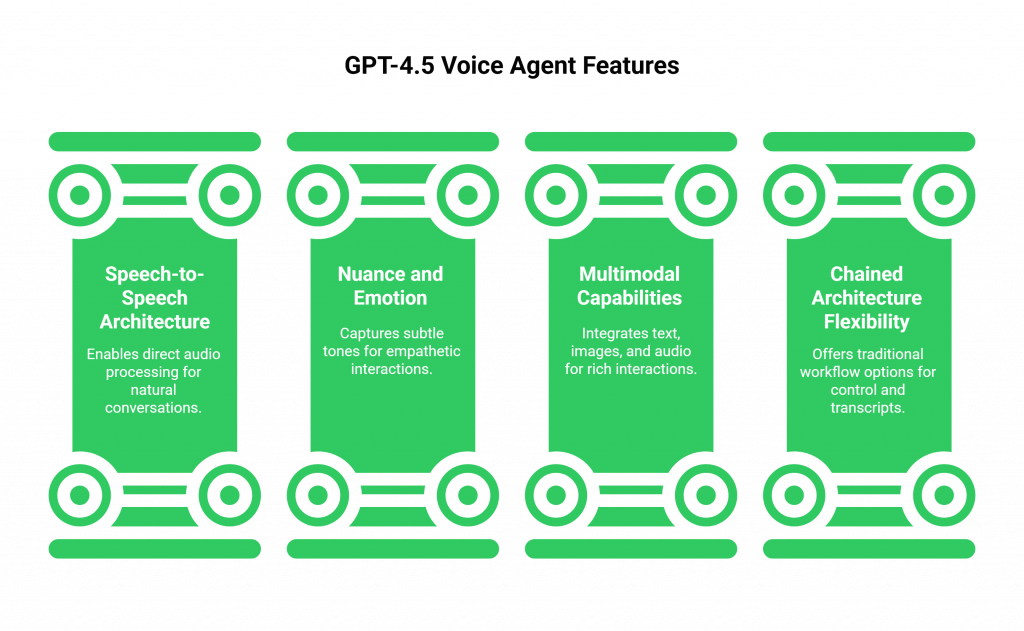

Building AI voice agents using OpenAI GPT-4.5 offers a distinct advantage over piecing together separate components. It represents a fundamental shift in capability. Key features include:

- Speech-to-Speech Architecture: OpenAI’s Realtime API can process audio input directly and return audio output with low latency. This bypasses the traditional multi-step pipeline of speech recognition (ASR), then a language model (LLM), then text-to-speech (TTS), resulting in a much more natural and fluid conversational flow.

- Nuance and Emotion: By processing audio natively, the model can capture nuances like tone and emotion, allowing for more empathetic and engaging interactions.

- Multimodal Capabilities: The model can simultaneously analyze text, images, and audio, opening the door for rich, context-aware voice agent interactions that can, for example, discuss an image a user has sent.

- Chained Architecture Flexibility: For workflows where more control or a text transcript is needed, developers still have the option to use a traditional “chained” approach, combining a separate speech-to-text service with the GPT-4.5 text-based API.
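To make the "chained" option above concrete, here is a minimal sketch of one conversational turn that runs speech-to-text, then a text model, then text-to-speech, keeping the transcript at each stage. The stage signatures and the `ChainedTurn` type are assumptions made for illustration, and the stand-in stages replace real service calls so the sketch runs on its own.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical stage signatures; each would wrap a real service in practice.
Transcriber = Callable[[bytes], str]   # speech-to-text (ASR)
Responder = Callable[[str], str]       # text-based LLM call
Synthesizer = Callable[[str], bytes]   # text-to-speech (TTS)


@dataclass
class ChainedTurn:
    """Result of one conversational turn in the chained pipeline."""
    transcript: str     # what the caller said, as text
    reply_text: str     # what the model answered, as text
    reply_audio: bytes  # synthesized audio to play back


def run_chained_turn(audio: bytes, transcribe: Transcriber,
                     respond: Responder, synthesize: Synthesizer) -> ChainedTurn:
    """Run ASR, then the LLM, then TTS, keeping the text at each stage."""
    transcript = transcribe(audio)
    reply_text = respond(transcript)
    reply_audio = synthesize(reply_text)
    return ChainedTurn(transcript, reply_text, reply_audio)


# Stand-in stages so the sketch runs without any external service.
def fake_transcribe(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_respond(text: str) -> str:
    return f"You said: {text}"


def fake_synthesize(text: str) -> bytes:
    return text.encode("utf-8")


turn = run_chained_turn(b"hello", fake_transcribe, fake_respond, fake_synthesize)
```

The trade-off is visible in the return type: the chained approach gives you a transcript and reply text to log or moderate, at the cost of three sequential hops per turn.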

The Hidden Challenge: A Brilliant AI Without a Voice

You’ve designed a brilliant agent. It’s powered by GPT-4.5, it’s connected to your business systems, and it’s ready to revolutionize your customer service. Now, you need it to answer a phone call. This is where most projects hit a formidable wall.

The entire ecosystem of AI tools, including the OpenAI API itself, is designed to provide the “brain” for your agent. They do not provide the underlying infrastructure needed to connect that brain to the Public Switched Telephone Network (PSTN). To make your agent answer a phone call, you would have to build a highly specialized and complex voice infrastructure stack from the ground up. This involves solving a host of non-trivial engineering problems:

- Telephony Protocols: Managing SIP (Session Initiation Protocol) trunks and carrier relationships.

- Real-Time Media Servers: Building and maintaining dedicated servers to handle raw audio streams from thousands of concurrent calls.

- Call Control and State Management: Architecting a system to manage the entire lifecycle of every call, from ringing and connecting to holding and terminating.

- Network Resilience: Engineering solutions to mitigate the jitter, packet loss, and latency inherent in voice networks that can destroy the quality of a real-time conversation.

This is the hidden challenge. Your team, expert in AI and application development, is suddenly forced to become telecom engineers. The project stalls, and the brilliant agent you built remains trapped, unable to be reached by the millions of customers who rely on the telephone.
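To give a sense of just one item on that list, call control and state management, here is a deliberately small sketch of a call lifecycle state machine. The states and allowed transitions are assumptions chosen to mirror the lifecycle described above (ringing, connecting, holding, terminating); a production system would track far more, such as transfers, timeouts, and media negotiation.

```python
from enum import Enum


class CallState(Enum):
    RINGING = "ringing"
    CONNECTED = "connected"
    ON_HOLD = "on_hold"
    TERMINATED = "terminated"


# Allowed transitions; an assumption for illustration, not a telephony standard.
TRANSITIONS = {
    CallState.RINGING: {CallState.CONNECTED, CallState.TERMINATED},
    CallState.CONNECTED: {CallState.ON_HOLD, CallState.TERMINATED},
    CallState.ON_HOLD: {CallState.CONNECTED, CallState.TERMINATED},
    CallState.TERMINATED: set(),
}


class Call:
    """Tracks the state of a single call and rejects invalid transitions."""

    def __init__(self, call_id: str) -> None:
        self.call_id = call_id
        self.state = CallState.RINGING
        self.history = [CallState.RINGING]

    def transition(self, new_state: CallState) -> None:
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(
                f"{self.state.value} -> {new_state.value} is not allowed"
            )
        self.state = new_state
        self.history.append(new_state)


call = Call("demo-call-1")
call.transition(CallState.CONNECTED)
call.transition(CallState.ON_HOLD)
call.transition(CallState.CONNECTED)
call.transition(CallState.TERMINATED)
```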

FreJun: The Voice Infrastructure Layer for Your GPT-4.5 Agent

This is the exact problem FreJun was built to solve. We are not another AI platform. We are the specialized voice infrastructure layer that connects the powerful AI voice agents using OpenAI GPT-4.5 to the global telephone network.

FreJun provides a simple, developer-first API that handles all the complexities of telephony, so you can focus on building the best AI possible.

- We are AI-Agnostic: You bring your own “brain.” FreJun integrates seamlessly with any backend, allowing you to connect directly to the OpenAI API.

- We Manage the Voice Transport: We handle the phone numbers, the SIP trunks, the media servers, and the low-latency audio streaming.

- We Guarantee Reliability and Scale: Our globally distributed, enterprise-grade infrastructure ensures your phone line is always online and ready to handle high call volumes.

FreJun provides the robust “body” that allows your AI “brain” to have a real, meaningful conversation with the outside world via the telephone.

DIY Telephony vs. A FreJun-Powered Agent: A Comparison

| Feature | The Full DIY Approach (Including Telephony) | Your GPT-4.5 Backend + FreJun |
| --- | --- | --- |
| Infrastructure Management | You build, maintain, and scale your own voice servers, SIP trunks, and network protocols. | Fully managed. FreJun handles all telephony, streaming, and server infrastructure. |
| Scalability | Extremely difficult and costly to build a globally distributed, high-concurrency system. | Built-in. Our platform elastically scales to handle any number of concurrent calls on demand. |
| Development Time | Months, or even years, to build a stable, production-ready telephony system. | Weeks. Launch your globally scalable voice agent in a fraction of the time. |
| Developer Focus | Divided 50/50 between building the AI and wrestling with low-level network engineering. | 100% focused on building the best possible conversational experience with GPT-4.5. |
| Maintenance & Cost | Massive capital expenditure and ongoing operational costs for servers, bandwidth, and a specialized DevOps team. | Predictable, usage-based pricing with no upfront capital expenditure and zero infrastructure maintenance. |

How to Build a Complete AI Voice Agent (Step-by-Step)?

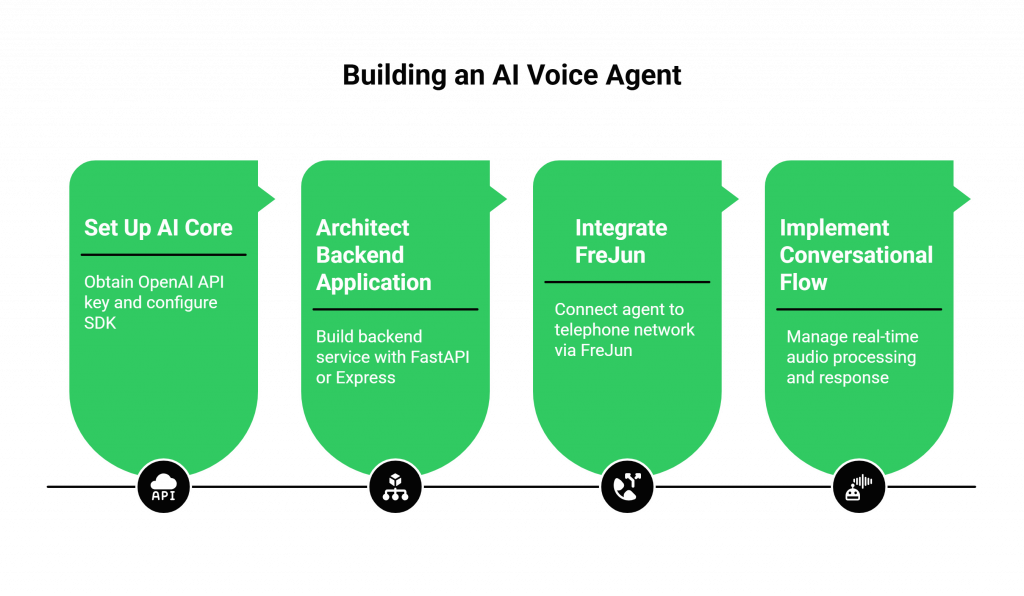

This guide outlines the modern, scalable architecture for building AI voice agents using OpenAI GPT-4.5 that can handle real phone calls.

Step 1: Set Up Your AI Core with the OpenAI SDK

First, get your OpenAI API key and set it up as an environment variable. Using the OpenAI Python SDK, you can begin to build the core logic of your agent. This is where you will define its personality, instructions, and any tools it can use.
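As a rough starting point for this step, the sketch below reads the key from the `OPENAI_API_KEY` environment variable and collects the agent's instructions and tools in one place. `AgentConfig`, `load_api_key`, and the `lookup_order` tool are hypothetical names invented for this example, and the model name is a placeholder you would replace with an audio-capable model available to your account. The tool description follows the JSON-schema shape used by OpenAI function calling.

```python
import os
from dataclasses import dataclass, field


def load_api_key(env=os.environ) -> str:
    """Read the OpenAI API key from the environment, failing loudly if absent."""
    key = env.get("OPENAI_API_KEY", "").strip()
    if not key:
        raise RuntimeError("Set the OPENAI_API_KEY environment variable first")
    return key


@dataclass
class AgentConfig:
    """Hypothetical container for the agent's persona and tools."""
    model: str          # placeholder; use a model you have access to
    instructions: str   # the agent's personality and rules
    tools: list = field(default_factory=list)


# Tool name and parameters are invented for this example.
lookup_order_tool = {
    "type": "function",
    "function": {
        "name": "lookup_order",
        "description": "Look up the status of a customer's order.",
        "parameters": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
    },
}

config = AgentConfig(
    model="YOUR_MODEL_NAME",
    instructions=(
        "You are a friendly support agent. "
        "Keep answers short and easy to follow when spoken aloud."
    ),
    tools=[lookup_order_tool],
)
```

Keeping the configuration in a plain data object like this makes it easy to pass the same instructions and tools to whichever OpenAI endpoint your backend ends up calling.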

Step 2: Architect Your Backend Application

Using your preferred framework (like Python with FastAPI or Node.js with Express), build a backend service that will orchestrate the conversation. This service will be the central hub that communicates with both FreJun and the OpenAI API.

Step 3: Integrate FreJun for the Voice Channel

This is the critical step that connects your agent to the telephone network.

- Sign up for FreJun and instantly provision a virtual phone number.

- Use FreJun’s server-side SDK in your backend to handle incoming WebSocket connections from our platform.

- In the FreJun dashboard, configure your new number’s webhook to point to your backend’s API endpoint.

Step 4: Implement the Real-Time Conversational Flow

When a customer dials your FreJun number, your backend will spring into action:

- FreJun establishes a WebSocket connection and streams the live audio to your backend.

- Your backend receives the raw audio stream and forwards it directly to the GPT-4.5 audio endpoint.

- GPT-4.5 processes the audio natively and returns a synthesized audio response.

- Your backend receives the audio response (often as a base64 encoded string), decodes it, and streams it back to the FreJun API.

- FreJun plays the audio to the caller with ultra-low latency.
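The flow above can be sketched as two concurrent relay loops. This is a transport-agnostic illustration, not FreJun's or OpenAI's actual SDK: `asyncio.Queue` objects stand in for the two WebSocket connections, `echo_model` is a stand-in for the model session, and `None` is used here as an assumed end-of-stream marker. The one concrete detail taken from the steps is decoding base64 audio before sending it back to the caller.

```python
import asyncio
import base64


async def pump_caller_to_model(from_caller: asyncio.Queue,
                               to_model: asyncio.Queue) -> None:
    """Forward raw caller audio to the model session unchanged."""
    while True:
        chunk = await from_caller.get()
        await to_model.put(chunk)
        if chunk is None:  # end of the caller's stream
            return


async def pump_model_to_caller(from_model: asyncio.Queue,
                               to_caller: asyncio.Queue) -> None:
    """Decode base64 audio from the model and forward the raw bytes."""
    while True:
        encoded = await from_model.get()
        if encoded is None:  # end of the model's stream
            await to_caller.put(None)
            return
        await to_caller.put(base64.b64decode(encoded))


async def echo_model(to_model: asyncio.Queue, from_model: asyncio.Queue) -> None:
    """Stand-in for the model: echoes each chunk back as a base64 string."""
    while True:
        chunk = await to_model.get()
        if chunk is None:
            await from_model.put(None)
            return
        await from_model.put(base64.b64encode(chunk).decode("ascii"))


async def run_demo(chunks):
    from_caller, to_model, from_model, to_caller = (
        asyncio.Queue() for _ in range(4)
    )
    for chunk in chunks:
        from_caller.put_nowait(chunk)
    from_caller.put_nowait(None)
    # Both directions run concurrently, as they would on a live call.
    await asyncio.gather(
        pump_caller_to_model(from_caller, to_model),
        echo_model(to_model, from_model),
        pump_model_to_caller(from_model, to_caller),
    )
    played = []
    while True:
        item = to_caller.get_nowait()
        if item is None:
            return played
        played.append(item)


played_audio = asyncio.run(run_demo([b"\x01\x02", b"\x03\x04"]))
```

Running the two directions as separate tasks matters in practice: the caller can keep talking (or interrupt) while the model's audio is still being streamed back.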

With this architecture, you have a complete, enterprise-ready AI voice agent using OpenAI GPT-4.5.

Best Practices for a Flawless Implementation

- Handle Voice Input Gracefully: Use robust silence padding and voice activity detection to optimize responsiveness and create a more natural turn-taking experience.

- Design for Graceful Failure: No AI is perfect. Program clear fallback paths in your conversational logic and design a seamless handoff to a human agent when the bot gets stuck. FreJun’s API can facilitate this live call transfer.

- Ensure Security and Privacy: Manage all API keys and user data securely. Encrypt all communication and ensure your data handling practices comply with all relevant privacy regulations.

- Continuously Monitor and Iterate: Use call analytics and conversation logs to understand how users are interacting with your agent. This data is invaluable for refining its instructions, improving its tool usage, and enhancing the overall user experience.
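As an illustration of the first practice, here is a minimal energy-based voice activity detector with a "hangover" period, which is one simple way to decide that a caller has finished speaking. It is a sketch under stated assumptions: audio arrives as 16-bit signed PCM frames in the machine's native byte order, and the threshold and hangover values are arbitrary defaults that would need tuning. Production systems often use a trained VAD model rather than a fixed energy threshold.

```python
import array
import math


def rms(frame: bytes) -> float:
    """Root-mean-square level of a frame of 16-bit signed PCM samples."""
    samples = array.array("h")
    samples.frombytes(frame)
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def detect_end_of_turn(frames, threshold=500.0, hangover_frames=3):
    """Return the index of the frame at which the caller's turn is judged over.

    A turn ends once speech has been heard and is then followed by
    `hangover_frames` consecutive quiet frames. Returns None otherwise.
    """
    heard_speech = False
    quiet_run = 0
    for i, frame in enumerate(frames):
        if rms(frame) >= threshold:
            heard_speech = True
            quiet_run = 0
        elif heard_speech:
            quiet_run += 1
            if quiet_run >= hangover_frames:
                return i
    return None


def make_frame(amplitude: int, n_samples: int = 160) -> bytes:
    """Build a constant-amplitude test frame (160 samples = 20 ms at 8 kHz)."""
    return array.array("h", [amplitude] * n_samples).tobytes()


loud, quiet = make_frame(2000), make_frame(10)
frames = [quiet, loud, loud, quiet, quiet, quiet, quiet]
end_index = detect_end_of_turn(frames)
```

The hangover period is the knob that trades responsiveness against cutting callers off mid-pause: a shorter value makes the agent reply faster, a longer one tolerates natural hesitations.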

Final Thoughts

The power of AI voice agents using OpenAI GPT-4.5 is undeniable. It represents a paradigm shift in our ability to create intelligent, helpful, and truly conversational AI. But that intelligence is only as valuable as its accessibility. A brilliant AI that is trapped in a digital sandbox cannot solve real-world business problems at scale.

The strategic path forward is to combine the best AI brain with the best voice infrastructure. By leveraging a specialized platform like FreJun, you can offload the immense burden of telecom engineering and focus your valuable resources on what truly differentiates your business: the intelligence of your AI and the quality of the customer experience you deliver.

Build an agent that’s as smart as GPT-4.5, and let us give it a voice that can reach the world.


Frequently Asked Questions (FAQ)

FreJun does not replace the OpenAI API; it integrates with it. You use the OpenAI API to access the GPT-4.5 model (the “brain”). FreJun provides the separate, essential voice infrastructure (the “body”) that connects that brain to the telephone network.

FreJun is designed to stream the raw, unprocessed audio from the phone call directly to your backend. You can then forward this raw audio to the GPT-4.5 audio endpoint, allowing you to take full advantage of its real-time, end-to-end processing.

Function calling is managed by your backend. When GPT-4.5 determines that it needs to call a function, it will send a request to your backend. Your backend code will then execute the function (e.g., make a database query), send the result back to GPT-4.5, and then the model will use that result to formulate its final response.
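A minimal sketch of that backend-side handling is shown below. It assumes the model's request has already been parsed into a function name and a JSON string of arguments, which is how OpenAI function calling delivers them; the `TOOLS` registry, the `tool` decorator, and `lookup_order` are hypothetical names invented for this example.

```python
import json

# Registry of functions the model is allowed to call; contents are illustrative.
TOOLS = {}


def tool(fn):
    """Register a plain Python function under its own name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def lookup_order(order_id: str) -> dict:
    # Stand-in for a real database query.
    fake_db = {"A100": "shipped", "A200": "processing"}
    return {"order_id": order_id, "status": fake_db.get(order_id, "not found")}


def handle_function_call(name: str, arguments_json: str) -> str:
    """Execute a model-requested function and return its result as JSON."""
    if name not in TOOLS:
        return json.dumps({"error": f"unknown function: {name}"})
    try:
        args = json.loads(arguments_json)
        result = TOOLS[name](**args)
    except (json.JSONDecodeError, TypeError) as exc:
        # Malformed JSON or wrong argument names: report it back to the model.
        return json.dumps({"error": str(exc)})
    return json.dumps(result)


result_json = handle_function_call("lookup_order", '{"order_id": "A100"}')
```

Returning errors as JSON rather than raising lets the model see what went wrong and either retry with corrected arguments or explain the problem to the caller.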

You do not need to be a telephony expert. We abstract away all the complexity of telephony. If you can work with a standard backend API and a WebSocket, you have all the skills needed to build powerful AI voice agents using OpenAI GPT-4.5.

This architecture is highly scalable. FreJun’s infrastructure is built to handle massive call concurrency. By designing your backend to be stateless, you can use standard cloud auto-scaling to handle any amount of traffic, ensuring your service is both resilient and cost-effective.