The first generation of customer support automation taught us a valuable lesson: a bot that can only talk is not enough. Customers grew tired of chatbots that could answer basic FAQs but couldn’t perform a single meaningful action. The true promise of AI in customer service lies not in its ability to just converse, but in its ability to do. Businesses need autonomous agents that can understand a request and then interact with other systems to fulfill it: booking an appointment, querying a database, or processing a refund.

Table of contents

- The Production Wall: Why Your Voice AI Project Is Stuck in the Demo Phase

- The Solution: An Action-Oriented Brain with an Enterprise-Grade Voice

- How to Build a Voice Bot Using Mistral Medium 3?

- DIY Infrastructure vs. FreJun: A Head-to-Head Comparison

- Best Practices for Optimizing Your Mistral Medium 3 Voice Bot

- From Advanced Model to Tangible Business Asset

- Frequently Asked Questions (FAQs)

This is where newer models like Mistral Medium 3 are changing the picture. A frontier-class multimodal model, it excels at “function calling,” the key capability that allows an AI to move beyond conversation and into action. The challenge, however, is no longer just about having a smart AI; it’s about connecting that action-oriented AI to your customers over a live, real-time phone call.

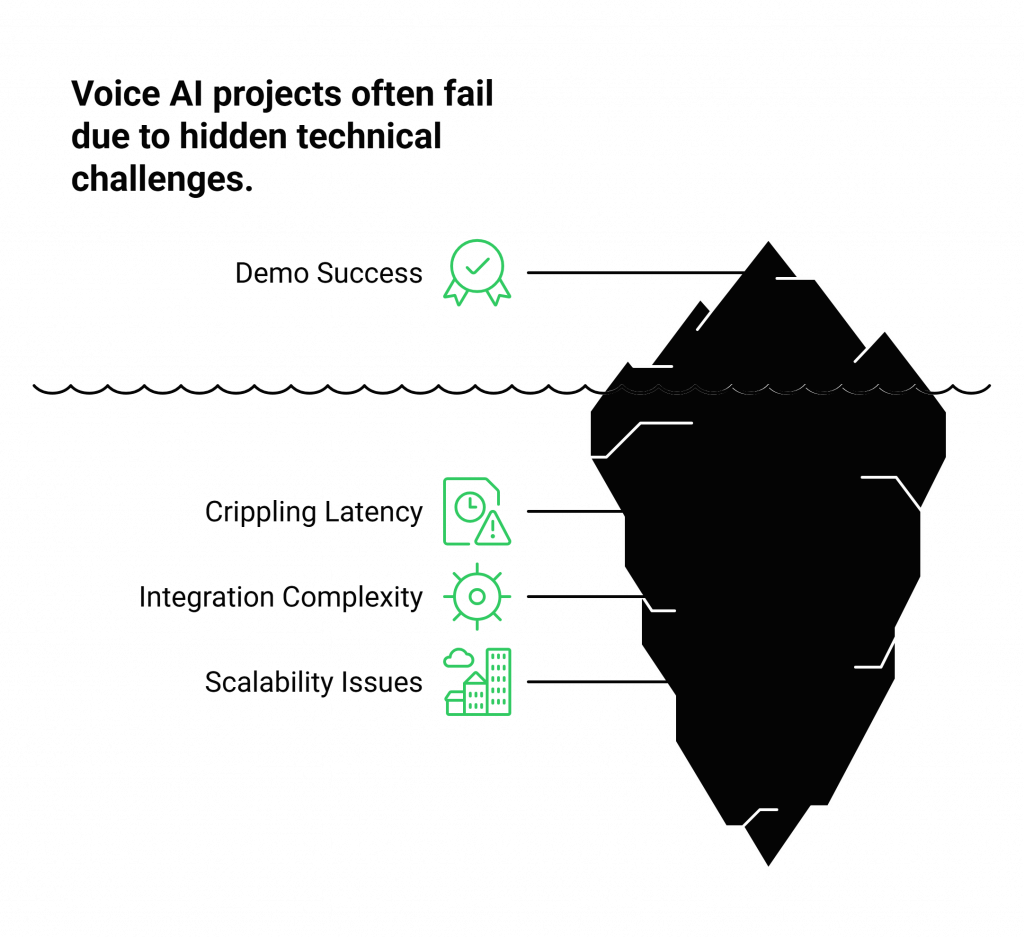

The Production Wall: Why Your Voice AI Project Is Stuck in the Demo Phase

The excitement around a powerful model like Mistral Medium 3 often inspires development teams to build impressive demos. The bot works perfectly in a controlled lab environment, taking input from a laptop microphone and demonstrating its ability to call external tools. But a massive chasm separates this local proof-of-concept from a scalable, production-grade system that can handle real customer calls. This is the production wall, and it’s where many voice AI projects stall.

When businesses try to deploy their AI over the phone, they run headfirst into three daunting technical challenges:

- Crippling Latency: The total delay between the caller finishing a sentence and hearing a reply (transcription, model reasoning, any function calls, and speech synthesis) is the number one killer of a natural conversation. High latency leads to awkward pauses and a fundamentally broken user experience.

- Integration Complexity: A complete voice solution requires stitching together multiple real-time services: Automatic Speech Recognition (ASR), the Mistral Medium 3 model, an external API for function calling, and a Text-to-Speech (TTS) engine. Orchestrating this pipeline seamlessly is a major engineering hurdle.

- Scalability and Reliability: A system that works for one call will collapse under the pressure of hundreds of concurrent calls. Ensuring high availability and crystal-clear audio requires a resilient, geographically distributed network, which is incredibly expensive and complex to build and maintain.
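Before optimizing latency, it helps to measure where it comes from. The following is a minimal, stdlib-only Python sketch of a per-turn timer that records how long each pipeline stage takes. The stage names used with it (for example `asr`, `llm`, `tool`, `tts`) are illustrative assumptions, not part of any particular SDK.

```python
import time
from contextlib import contextmanager


class TurnTimer:
    """Records how long each pipeline stage takes within one conversational turn."""

    def __init__(self):
        self.stages = {}  # stage name -> accumulated seconds

    @contextmanager
    def stage(self, name: str):
        start = time.perf_counter()
        try:
            yield
        finally:
            # Accumulate, because a turn with function calls may hit the LLM more than once.
            elapsed = time.perf_counter() - start
            self.stages[name] = self.stages.get(name, 0.0) + elapsed

    def total_ms(self) -> float:
        return 1000 * sum(self.stages.values())

    def report(self) -> str:
        parts = [f"{name}={secs * 1000:.0f}ms" for name, secs in self.stages.items()]
        return ", ".join(parts) + f" | total={self.total_ms():.0f}ms"
```

Wrapping each call in `with timer.stage("llm"):` and logging `timer.report()` once per turn shows whether the delay sits in transcription, model calls, tool execution, or synthesis.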

This infrastructure burden is a major reason promising voice AI projects stall: teams spend their valuable resources on “plumbing” instead of perfecting the AI’s core logic.

The Solution: An Action-Oriented Brain with an Enterprise-Grade Voice

To build a voice bot that can actually do things, you need to combine an action-oriented AI “brain” with a robust, low-latency “voice.” This is where the synergy between Mistral Medium 3 and FreJun’s voice infrastructure creates a powerful, production-ready solution.

- The Brain (Mistral Medium 3): As a frontier-class model with strong function-calling support, Mistral Medium 3 provides the intelligence to understand a customer’s request and to trigger the backend tasks needed to fulfill it.

- The Voice (FreJun): FreJun handles the complex voice infrastructure so you can focus on building your AI. Our platform is the critical transport layer that connects your Mistral Medium 3 application to your customers over any telephone line. We are architected for the speed and clarity that a real-time voice bot using Mistral Medium 3 demands.

By pairing Mistral’s intelligence with FreJun’s reliable infrastructure, you can bypass the biggest hurdles in voice bot development and move directly to creating a support experience that is genuinely helpful and automated.

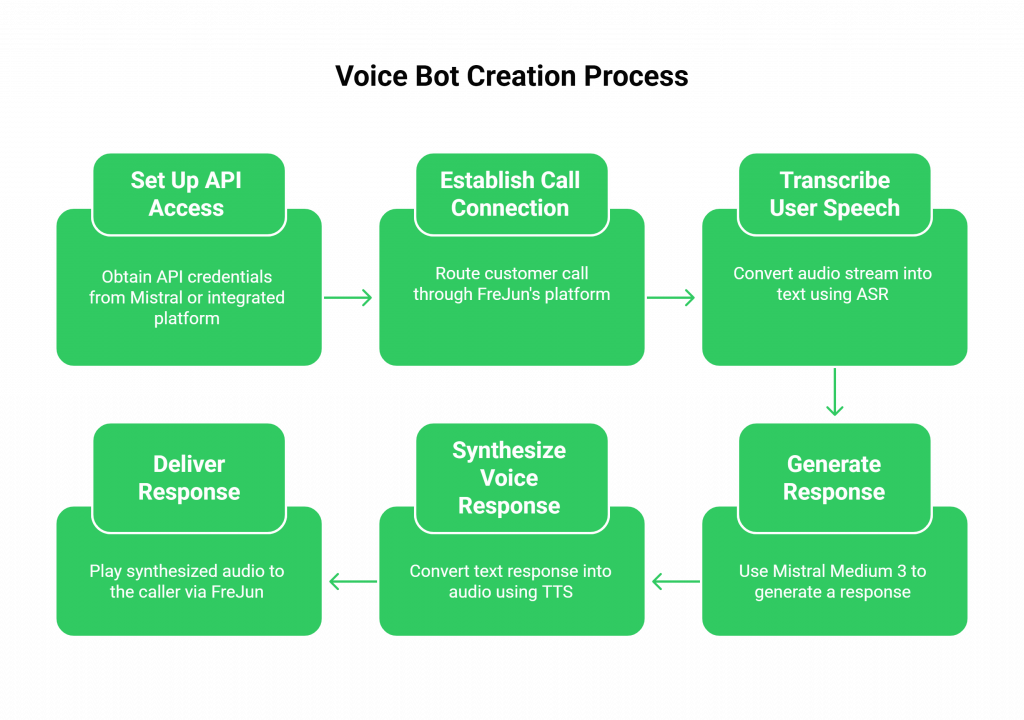

How to Build a Voice Bot Using Mistral Medium 3?

While many tutorials start with a text prompt, a real business application starts with a phone call. This guide outlines the production-ready pipeline for creating a voice bot using Mistral Medium 3.

Step 1: Set Up Your Mistral Medium 3 API Access

Before your bot can think, its brain needs to be connected.

- How it Works: Obtain an API key from Mistral’s platform or from a cloud provider that hosts the model. Your backend service uses these credentials to make authenticated API calls to the Mistral Medium 3 model endpoint.
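The following is a minimal sketch of an authenticated request to Mistral’s chat completions REST endpoint using only the Python standard library. The model alias `mistral-medium-latest` and the `MISTRAL_API_KEY` environment variable name are assumptions, so check Mistral’s model list and your account settings for the exact identifier. The official `mistralai` Python SDK is an alternative to raw HTTP.

```python
import json
import os
import urllib.request

# Mistral chat completions endpoint on Mistral's own platform.
MISTRAL_CHAT_URL = "https://api.mistral.ai/v1/chat/completions"
# Assumption: this alias resolves to Mistral Medium 3; pin a dated model ID in production.
MODEL_ID = "mistral-medium-latest"


def build_chat_request(messages, api_key=None, tools=None):
    """Build (but do not send) an authenticated chat completions request."""
    key = api_key or os.environ.get("MISTRAL_API_KEY")
    if not key:
        raise RuntimeError("Set MISTRAL_API_KEY or pass api_key explicitly")
    payload = {"model": MODEL_ID, "messages": messages}
    if tools:
        payload["tools"] = tools
        payload["tool_choice"] = "auto"  # let the model decide whether to call a tool
    return urllib.request.Request(
        MISTRAL_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {key}",
            "Content-Type": "application/json",
            "Accept": "application/json",
        },
        method="POST",
    )


def send(request, timeout=10.0):
    """Send the request and return the parsed JSON response."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Keeping request construction separate from `send` makes it easy to inspect or log the exact payload before it leaves your backend, which helps when debugging function-calling prompts.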

Step 2: Establish the Call Connection with FreJun

This is where the real-world interaction begins.

- How it Works: A customer dials your business phone number, which is routed through FreJun’s platform. Our API establishes the connection and immediately begins providing your application with a secure, low-latency stream of the caller’s voice.
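FreJun’s actual stream message format is not described in this article, so the following is a hypothetical sketch. It assumes each stream message is JSON with an `event` field (`start`, `media`, or `stop`), a `call_id`, and base64-encoded audio in `payload`. Treat every field name as a placeholder for the real schema in FreJun’s API reference. What the sketch does show is keeping per-call state keyed by call ID, which matters once many calls run concurrently.

```python
import base64
import json
from dataclasses import dataclass, field


@dataclass
class CallSession:
    """Per-call state kept by your application server."""
    call_id: str
    audio: bytearray = field(default_factory=bytearray)
    active: bool = True


sessions = {}  # call_id -> CallSession


def handle_stream_message(raw: str):
    """Route one message from the voice stream.

    HYPOTHETICAL event schema for illustration only; it is not FreJun's
    documented format.
    """
    msg = json.loads(raw)
    kind, call_id = msg["event"], msg["call_id"]
    if kind == "start":
        sessions[call_id] = CallSession(call_id)
    elif kind == "media":
        # Audio chunks are assumed to arrive base64-encoded.
        sessions[call_id].audio.extend(base64.b64decode(msg["payload"]))
    elif kind == "stop":
        sessions[call_id].active = False
    return sessions.get(call_id)
```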

Step 3: Transcribe User Speech with ASR

The raw audio stream from FreJun must be converted into text.

- How it Works: You stream the audio from FreJun to your chosen ASR service. The ASR transcribes the speech in real time and returns the text to your application server.
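Streaming ASR services generally accept audio in small, fixed-duration frames. The following sketch computes the frame size for mono 16-bit PCM and forwards frames to a recognizer, yielding only final transcripts. `StreamingASR` and its `feed` method are a hypothetical interface standing in for your ASR vendor’s streaming SDK. The 8 kHz and 20 ms figures are common telephony choices, not requirements.

```python
from typing import Iterable, Iterator, Protocol


def frame_bytes(sample_rate_hz: int, frame_ms: int, sample_width: int = 2) -> int:
    """Bytes per frame of mono PCM audio (sample_width bytes per sample)."""
    return sample_rate_hz * frame_ms // 1000 * sample_width


def frames(audio: bytes, size: int) -> Iterator[bytes]:
    """Split a buffer into fixed-size frames (the last frame may be shorter)."""
    for start in range(0, len(audio), size):
        yield audio[start:start + size]


class StreamingASR(Protocol):
    """HYPOTHETICAL interface; adapt it to your ASR vendor's streaming SDK."""

    def feed(self, frame: bytes) -> list[tuple[bool, str]]:
        """Push one frame; return any (is_final, text) results ready so far."""
        ...


def transcribe(asr: StreamingASR, audio_frames: Iterable[bytes]) -> Iterator[str]:
    """Forward frames as they arrive and yield only final transcripts."""
    for frame in audio_frames:
        for is_final, text in asr.feed(frame):
            if is_final:
                yield text
```

For 8 kHz telephone audio, `frame_bytes(8000, 20)` gives 320 bytes per 20 ms frame (160 samples at 2 bytes each). Partial results are skipped here for simplicity, though some pipelines use them to start work early.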

Step 4: Generate a Response (and Action) with Mistral Medium 3

The transcribed text is fed to your Mistral Medium 3 model.

- How it Works: Your application takes the transcribed text, appends it to the ongoing conversation history, and sends the full history to the Mistral Medium 3 API along with definitions of the tools the model may use. This is where function calling comes in. If the user says, “Book a demo for tomorrow at 10 AM,” the model identifies the intent and its parameters and returns a structured tool call instead of plain text. Your application executes that call against your internal scheduling API and sends the result back to the model, which then formulates a text response confirming the booking.
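The function-calling step can be sketched as follows. The sketch assumes Mistral’s chat API tool format: a JSON Schema `parameters` object per function, tool calls returned with a JSON-string `arguments` field, and results sent back as `role: "tool"` messages carrying the matching `tool_call_id`. `book_demo` is a hypothetical stand-in for your scheduling API, and the model call itself is omitted so the example runs offline.

```python
import json

# Tool definition in the JSON Schema style used by Mistral's chat API.
TOOLS = [{
    "type": "function",
    "function": {
        "name": "book_demo",
        "description": "Book a product demo for the caller at a given date and time.",
        "parameters": {
            "type": "object",
            "properties": {
                "date": {"type": "string", "description": "ISO date, e.g. 2025-06-03"},
                "time": {"type": "string", "description": "24-hour time, e.g. 10:00"},
            },
            "required": ["date", "time"],
        },
    },
}]


def book_demo(date: str, time: str) -> dict:
    """HYPOTHETICAL stand-in for your internal scheduling API."""
    return {"status": "confirmed", "date": date, "time": time}


REGISTRY = {"book_demo": book_demo}


def run_tool_calls(history: list, assistant_message: dict) -> bool:
    """Execute any tool calls in the model's reply and append results to history.

    Returns True if tools ran, meaning the model must be called again to phrase a reply.
    """
    history.append(assistant_message)
    calls = assistant_message.get("tool_calls") or []
    for call in calls:
        fn = call["function"]
        args = fn["arguments"]
        if isinstance(args, str):  # the REST API returns arguments as a JSON string
            args = json.loads(args)
        result = REGISTRY[fn["name"]](**args)
        history.append({
            "role": "tool",
            "name": fn["name"],
            "content": json.dumps(result),
            "tool_call_id": call["id"],
        })
    return bool(calls)
```

When `run_tool_calls` returns `True`, send the updated history back to the model so it can phrase the confirmation. Note that “tomorrow” can only be resolved to a date if the model knows today’s date, so including it in the system prompt is a sensible precaution.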

Step 5: Synthesize the Voice Response with TTS

The text response from Mistral Medium 3 must be converted back into audio.

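- How it Works: The generated text (e.g., “Great, I’ve booked your demo for tomorrow at 10 AM. You’ll receive a confirmation email shortly.”) is passed to your chosen TTS engine, such as ElevenLabs or Google Cloud Text-to-Speech. Using a streaming TTS service is critical here, because playback can begin as soon as the first audio chunk is ready rather than after the whole reply has been synthesized.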
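The same streaming idea applies between the model and TTS. Instead of waiting for the full reply, you can stream text from the model and hand each complete sentence to TTS as soon as it appears. The sentence splitter below is a simple sketch of that technique. Its regex-based boundary detection is an assumption and will mis-split some abbreviations.

```python
import re
from typing import Iterable, Iterator

# A sentence boundary: whitespace that follows ".", "!" or "?".
_SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def sentences_from_stream(deltas: Iterable[str]) -> Iterator[str]:
    """Yield complete sentences as soon as they appear in streamed LLM text."""
    buffer = ""
    for delta in deltas:
        buffer += delta
        parts = _SENTENCE_END.split(buffer)
        # Every part except the last is a finished sentence.
        for sentence in parts[:-1]:
            if sentence.strip():
                yield sentence.strip()
        buffer = parts[-1]
    # Flush whatever remains when the stream ends.
    if buffer.strip():
        yield buffer.strip()
```

Each yielded sentence can go straight to the TTS engine, so the caller hears the first sentence while the model is still generating the rest.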

Step 6: Deliver the Response Instantly via FreJun

The final, crucial step is playing the bot’s voice to the caller.

- How it Works: You pipe the synthesized audio stream from your TTS service directly to the FreJun API. Our platform plays this audio to the caller over the phone line with minimal delay, completing the conversational loop of your voice bot using Mistral Medium 3.
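Putting the steps together, one conversational turn can be sketched as a function that takes the transcript and three injected callables. `generate_reply`, `synthesize`, and `play` are hypothetical hooks for your Mistral call (yielding sentences), your TTS engine, and FreJun’s outbound audio API respectively. None of them is a real SDK function.

```python
from collections.abc import Callable, Iterable


def handle_turn(
    transcript: str,
    history: list[dict],
    generate_reply: Callable[[list[dict]], Iterable[str]],
    synthesize: Callable[[str], bytes],
    play: Callable[[bytes], None],
) -> str:
    """Run one conversational turn: text in, audio out, history updated."""
    history.append({"role": "user", "content": transcript})
    spoken = []
    for sentence in generate_reply(history):
        # Hand audio to the voice layer as soon as each sentence is synthesized.
        play(synthesize(sentence))
        spoken.append(sentence)
    reply = " ".join(spoken)
    history.append({"role": "assistant", "content": reply})
    return reply
```

Injecting the stages as callables keeps the loop independent of any particular ASR, TTS, or telephony vendor and makes each turn easy to test with stubs.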

DIY Infrastructure vs. FreJun: A Head-to-Head Comparison

When building a voice bot using Mistral Medium 3, you face a critical build-vs-buy decision for your voice infrastructure. The choice will define your project’s speed, cost, and ultimate success.

| Feature / Aspect | DIY Telephony Infrastructure | FreJun’s Voice Platform |
| --- | --- | --- |
| Primary Focus | Most of your resources are spent on complex telephony, network engineering, and latency optimization. | Your resources stay focused on building and refining the AI reasoning and function-calling logic. |
| Time to Market | Extremely slow (months or even years). Requires hiring a team with rare and expensive telecom expertise. | Extremely fast (days to weeks). Our developer-first APIs and SDKs abstract away the telephony complexity. |
| Latency | A constant and difficult battle to minimize the conversational delays that make bots feel robotic. | Engineered for low latency. Our entire stack is optimized for the demands of real-time voice AI. |
| Function Calling | You are responsible for managing the entire real-time state machine between the call, your LLM, and your external APIs. | We provide the seamless, low-latency transport layer that makes real-time function calling over a voice call practical. |
| Maintenance | You are responsible for managing carrier relationships, troubleshooting complex failures, and ensuring compliance. | We provide guaranteed uptime, enterprise-grade security, and dedicated integration support from our team of experts. |

Best Practices for Optimizing Your Mistral Medium 3 Voice Bot

Building the pipeline is the first step. To create a truly effective voice bot using Mistral Medium 3, follow these best practices:

- Master Prompt Engineering for Function Calling: Design your prompts and tool definitions carefully. Give each function a clear description of what it does and which parameters it needs, so the model knows when and how to use it.

- Implement Robust Context Management: A coherent, multi-turn conversation that involves multiple function calls depends entirely on maintaining accurate context. Ensure your application correctly stores and sends the full conversation history with every turn.

- Optimize for Latency: Use streaming for every component of your pipeline: FreJun for voice, your ASR, the Mistral Medium 3 API, and your TTS. This is the most critical factor in making a complex, function-calling bot feel responsive.

- Test Real-World Scenarios: Move beyond simple Q&A testing. Create complex test cases that require the bot to make multiple function calls, handle errors, and manage conversation state across several turns.
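For context management, a common approach is to send the system prompt plus the most recent messages on every turn. The sketch below trims by message count for simplicity. Real limits are measured in tokens, so treat the budget as an assumption. It also avoids sending a tool result whose originating assistant tool call was trimmed away.

```python
def trim_history(history: list[dict], max_messages: int) -> list[dict]:
    """Keep the system prompt plus the most recent messages, up to max_messages total.

    The kept window never starts on a "tool" message, so no tool result is sent
    without the assistant message that requested it.
    """
    system = [m for m in history[:1] if m["role"] == "system"]
    rest = history[len(system):]
    budget = max(max_messages - len(system), 0)
    window = rest[-budget:] if budget else []
    # Drop leading tool results whose originating assistant tool call was trimmed.
    while window and window[0]["role"] == "tool":
        window = window[1:]
    return system + window
```

Because the system prompt always survives trimming, tool descriptions and rules placed there stay in effect no matter how long the call runs.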

Pro Tip: When designing your voice bot using Mistral Medium 3, start with a small, well-defined set of tools for the function calling feature. Perfect the workflow for one or two tasks (e.g., checking an order and booking a return) before expanding to more complex operations. This iterative approach makes debugging much easier.
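A small tool set is also easier to guard. The following sketch, with hypothetical `check_order` and `book_return` tools, only runs functions on an explicit allowlist. Unknown tool names and malformed arguments become JSON error results the model can react to, rather than exceptions that end the call.

```python
import json
from typing import Callable, Dict


def check_order(order_id: str) -> dict:
    """HYPOTHETICAL order-lookup tool."""
    return {"order_id": order_id, "status": "shipped"}


def book_return(order_id: str) -> dict:
    """HYPOTHETICAL return-booking tool."""
    return {"order_id": order_id, "return": "scheduled"}


# Start with a deliberately small set of tools.
ALLOWED_TOOLS: Dict[str, Callable[..., dict]] = {
    "check_order": check_order,
    "book_return": book_return,
}


def execute_tool(name: str, raw_arguments: str) -> str:
    """Run one tool call and always return a JSON string the model can read.

    Errors become structured results instead of exceptions, so the bot can
    apologize or ask a follow-up question rather than dropping the call.
    """
    fn = ALLOWED_TOOLS.get(name)
    if fn is None:
        return json.dumps({"error": f"unknown tool '{name}'"})
    try:
        args = json.loads(raw_arguments)
        return json.dumps(fn(**args))
    except (json.JSONDecodeError, TypeError) as exc:
        # TypeError covers missing/extra parameters and non-object arguments.
        return json.dumps({"error": f"bad arguments for '{name}': {exc}"})
```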

From Advanced Model to Tangible Business Asset

The availability of models like Mistral Medium 3, with their powerful function-calling capabilities, represents a major step toward true automation. But a powerful AI is not, by itself, a business solution. It needs to be connected, reliable, and scalable. It needs a voice.

By building on FreJun’s infrastructure, you make a strategic decision to bypass the most significant risks and costs associated with voice AI development. You can focus your valuable resources on what you do best: creating an intelligent, action-oriented, and valuable customer experience with your custom voice bot using Mistral Medium 3. Let us handle the complexities of telephony, so you can build the future of your business communications with confidence. This is how you turn a powerful AI model into a tangible business asset.


Frequently Asked Questions (FAQs)

What is Mistral Medium 3?

Mistral Medium 3 is a frontier-class, multimodal AI model released by Mistral AI in May 2025. It features advanced “function calling,” which allows it to interact with external tools and APIs to perform tasks.

Does FreJun provide the AI model, ASR, or TTS?

No. FreJun is the specialized voice infrastructure layer. Our platform is model-agnostic, meaning you bring your own AI model (like Mistral Medium 3), Automatic Speech Recognition (ASR), and Text-to-Speech (TTS) services. This gives you complete control and flexibility.

What is function calling?

Function calling is the ability of an AI model to respond with a structured request to use an external tool or API (like your company’s scheduling system) instead of plain text. Your application executes that request and returns the result, and the model uses that information to continue its response. This is what allows a voice bot to move beyond just talking and start doing things for the customer.

How does function calling affect latency?

Function calling adds at least one extra round trip to the response process: the tool must run, and the model must be called again with its result. It’s therefore even more critical that the rest of the pipeline is as fast as possible. A low-latency platform like FreJun keeps the time spent on audio transport to a minimum, so your voice bot using Mistral Medium 3 responds as quickly as possible.