Customer support automation is entering a multimodal era where AI can understand not just words, but also images, sounds, and video in a single conversation. Meta’s Llama 4 Maverick embodies this shift, offering powerful, open-weight reasoning across diverse inputs. Yet delivering that intelligence over a live phone call demands more than a great model; it requires robust, real-time voice infrastructure. FreJun provides this backbone, enabling developers to connect Maverick’s capabilities seamlessly to global telephony for responsive, production-ready voice agents.

Table of contents

- The Next Frontier of Customer Support: The Multimodal Voice Agent

- What is Llama 4 Maverick? A Generational Leap in AI

- The Voice Challenge: Why Intelligence Isn’t Enough

- FreJun: The Voice Transport Layer for Your Multimodal AI

- Building a Voice Bot Using Llama 4 Maverick

- DIY Voice Infrastructure vs. FreJun: A Strategic Breakdown

- Best Practices for a Production-Ready Maverick Voice Bot

- Focus on Reasoning, Not on Ringtones

- Frequently Asked Questions (FAQs)

The Next Frontier of Customer Support: The Multimodal Voice Agent

Customer support automation is evolving at a breakneck pace. For years, the goal was to create AI that could understand and respond to text. Then came the challenge of voice, building bots that could hold a natural, real-time conversation. Today, we stand at the cusp of a new frontier: the multimodal agent. This is an AI that can not only listen and speak but can also see, read, and process a rich variety of data formats in a single, unified interaction.

The release of Meta’s Llama 4 Maverick, a flagship open-weight model, marks the arrival of this new era. It is an AI designed from the ground up to reason across text, images, audio, and even video. Imagine a customer support agent that a user can talk to, while also showing it a picture of a broken part or having it listen to a strange noise their appliance is making. This is the power Maverick brings to the table.

However, harnessing this incredible intelligence and delivering it through the most immediate and universal channel, a live phone call, introduces a critical engineering bottleneck: the voice infrastructure.

What is Llama 4 Maverick? A Generational Leap in AI

Llama 4 Maverick isn’t just an incremental update; it’s a new class of model. Developed by Meta, it represents the cutting edge of open-weight AI, making elite capabilities accessible to developers and businesses everywhere.

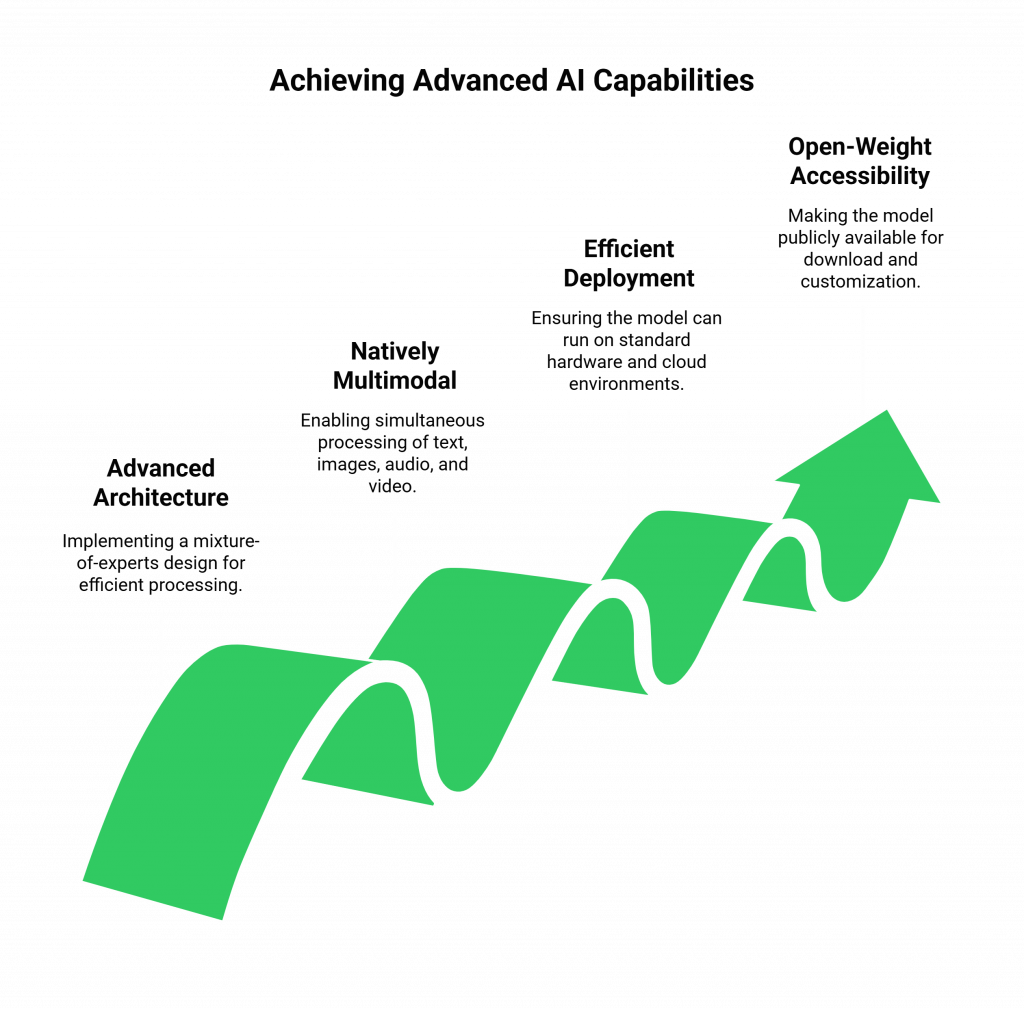

Here’s what sets it apart:

- Advanced Architecture: It employs a mixture-of-experts (MoE) design with 400 billion total parameters, activating an efficient 17 billion per query. This delivers immense power without the crippling computational cost of a dense model.

- Natively Multimodal: This is its defining feature. Maverick can process and reason across text, images, audio, and video inputs simultaneously. This unlocks entirely new use cases for customer support that were impossible with text-only models.

- Efficient Deployment: Despite its power, Maverick is engineered for efficiency. It can run on a single NVIDIA H100 GPU or within standard enterprise cloud environments, making it feasible for real-world deployment.

- Open-Weight and Accessible: As an open-weight model, it is publicly available for download and fine-tuning via platforms like Hugging Face, giving businesses unprecedented control and flexibility to build custom solutions.

Maverick provides the “brain” for a truly intelligent and context-aware voice bot using Llama 4 Maverick, one that can understand a customer’s problem in a far richer, more human way.

Also Read: Google Gemini 1.5 Pro Voice Bot Tutorial: Automating Calls

The Voice Challenge: Why Intelligence Isn’t Enough

A brilliant AI model is useless if it can’t communicate effectively. To build a voice bot, Maverick must be integrated into a real-time communication pipeline. This typically involves three core components:

- Automatic Speech Recognition (ASR): To convert the customer’s spoken words into text.

- The LLM (Llama 4 Maverick): To process the input, reason, and generate a response.

- Text-to-Speech (TTS): To convert the text response back into audible speech.

While Maverick handles the reasoning part, the real challenge is the voice transport layer. This is the complex, underlying infrastructure that connects the phone call to your AI. Building this yourself means tackling latency, jitter, scalability, and carrier integrations—a deep and resource-intensive engineering problem that is far removed from the core task of building an intelligent AI. A slow or unreliable connection will make even the smartest bot feel clumsy and frustrating to interact with.

FreJun: The Voice Transport Layer for Your Multimodal AI

This is the exact problem FreJun was built to solve. We are not an LLM provider. We provide the enterprise-grade, developer-first voice infrastructure that handles all the complex telephony, so you can focus on your AI. For a project involving Llama 4 Maverick, FreJun acts as the essential, low-latency bridge between the customer on the phone and your powerful multimodal agent.

Our platform is designed for exactly this kind of advanced application:

- Real-Time Media Streaming: We handle the bi-directional streaming of audio, ensuring your ASR gets a clear signal and the customer hears the TTS response instantly.

- Model-Agnostic Integration: FreJun works with any ASR, LLM, and TTS you choose. You bring your best-in-class AI stack, and we provide the communication backbone.

- Scalability and Reliability: Our geographically distributed infrastructure is built to handle thousands of concurrent calls, ensuring your voice bot using Llama 4 Maverick is always available and performs reliably under load.

Also Read: How to Build a AI Voice Agents Using OpenAI GPT-3.5 Turbo?

Building a Voice Bot Using Llama 4 Maverick

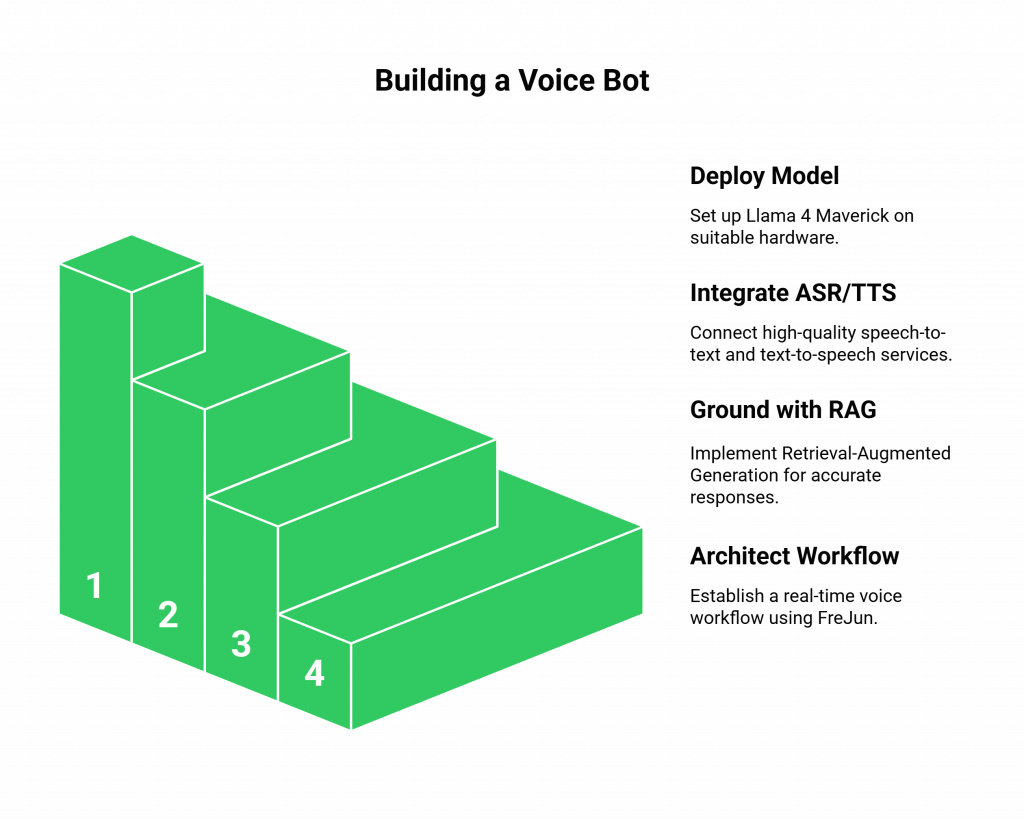

Let’s outline the architectural steps for creating a next-generation customer support agent.

Step 1: Deploy the Llama 4 Maverick Model

First, you need to get your model running and accessible via an API.

- Access the Model: Download the open-weight model from Meta or Hugging Face. For faster setup without managing hardware, you can use a platform like Chatbase.

- Set Up Infrastructure: For production use with low latency, you must run Maverick on suitable GPU hardware, such as an NVIDIA H100, either on-premise or in the cloud.

Step 2: Integrate ASR and TTS Services

These are the “ears” and “mouth” of your bot. Choose high-quality, low-latency services for both to ensure a fluid conversational experience.

Step 3: Ground Your Bot with a Knowledge Base (RAG)

To ensure your bot provides accurate, company-specific information, you must implement Retrieval-Augmented Generation (RAG).

- Create a vector database of your internal knowledge base (FAQs, product manuals, troubleshooting guides).

- When a customer asks a question, your application first retrieves the most relevant documents from this database.

- This context is then passed to Maverick along with the user’s query, ensuring the bot’s response is factual and helpful.

Step 4: Architect the Real-Time Voice Workflow with FreJun

This is where all the pieces come together.

- The Call Connects: A customer calls a phone number provided by FreJun. Our platform answers the call and establishes a connection to your application backend via a webhook.

- Audio is Streamed: FreJun streams the customer’s voice audio to your application in real-time.

- Speech is Transcribed: Your application forwards this audio stream to your ASR service, which returns a text transcription.

- Maverick Reasons: The transcribed text is sent to your Llama 4 Maverick API endpoint, along with any relevant context retrieved via RAG.

- Response is Generated: Maverick processes the input and generates a text-based response.

- Response is Vocalized: The text is sent to your TTS engine, which creates an audio file or stream.

- Audio is Delivered: Your application sends this audio back to the FreJun API, which plays it to the customer with minimal delay, completing the conversational turn.

Also Read: Virtual Phone Providers for Enterprise Operations in Norway

DIY Voice Infrastructure vs. FreJun: A Strategic Breakdown

| Feature | The DIY Infrastructure Approach | The FreJun Infrastructure Approach |

| Development Focus | Split between AI model development and complex, non-core telephony engineering. | 100% focused on refining your voice bot using Llama 4 Maverick and its business logic. |

| Time to Market | 6-12+ months to build a stable, secure, and scalable voice platform from scratch. | Days or weeks to connect your AI stack to a production-ready, global voice network via a simple API. |

| Latency Management | A constant and difficult engineering challenge requiring deep expertise in VoIP and real-time protocols. | Solved by design. FreJun’s entire platform is purpose-built and optimized for low-latency AI conversations. |

| Scalability & Reliability | Your responsibility. Requires significant ongoing investment in hardware, DevOps, and carrier management. | Fully managed for you. Our distributed infrastructure scales on demand and is engineered for high availability. |

| Specialized Expertise | Requires hiring a team of expensive and hard-to-find telecom engineers. | You get access to our team of voice experts for dedicated integration support and guidance. |

Also Read: Enterprise Virtual Phone Solutions for Professional B2B Growth in Austria

Best Practices for a Production-Ready Maverick Voice Bot

- Be Mindful of Benchmarks: As research has shown, the performance of publicly released models can differ from highly optimized experimental versions used for benchmarks. Always conduct your thorough testing to validate performance for your specific use case.

- Design for Human Handoff: No bot is perfect. Design a clear and seamless process for escalating a conversation to a human agent when the AI reaches its limits.

- Explore Future Architectures: While the ASR -> LLM -> TTS pipeline is the current standard, keep an eye on emerging end-to-end models like Voila or LLaMA-Omni, which promise even lower latency in the future.

- Prioritize Low-Latency Components: The perceived speed of your bot is the sum of its parts. Ensure your ASR, TTS, and especially your GPU inference server are all optimized for speed.

Focus on Reasoning, Not on Ringtones

Building a voice bot using Llama 4 Maverick is a strategic investment in the future of customer experience. The model provides the raw intelligence to solve customer problems in ways that were previously unimaginable. Your goal should be to harness that intelligence as quickly and effectively as possible.

Wasting months and millions on building your own voice infrastructure is a distraction from that goal. It’s an exercise in reinventing the wheel. By partnering with FreJun, you stand on the shoulders of experts in real-time communication, allowing you to focus your energy and resources on what truly matters: creating the smartest, most helpful, and most innovative customer support agent on the market.

Also Read: Virtual Number Implementation for B2B Operations with WhatsApp Business in Brazil

Frequently Asked Questions (FAQs)

Its key differentiator is being natively multimodal. This means a voice bot using Llama 4 Maverick can understand not just the text of a conversation but can also process images, audio clips, or other data formats provided by the customer for richer, more accurate support.

No. While it can understand audio inputs as a data type, it does not function as an end-to-end speech-to-speech model. You still need to integrate separate Automatic Speech Recognition (ASR) and Text-to-Speech (TTS) services to handle the live conversational interface.

FreJun provides the voice transport layer. We handle the complex telephony infrastructure that connects a live phone call to your backend AI application, managing the real-time streaming of audio in both directions.

For the low-latency inference required for a real-time voice conversation, you will need to run the model on powerful GPU infrastructure, such as a single NVIDIA H100 or an equivalent enterprise cloud setup.