For years, the process of building voice bots followed a predictable, turn-based rhythm. The user would speak a complete sentence. There would be a moment of silence. The bot would then “think” and deliver its complete response. This “stop-and-go” model was a direct limitation of the underlying technology.

The AI could not start listening until the user finished talking, and it could not start talking until its entire thought was complete.

But this is not how humans converse. We interrupt, we overlap, we give real-time feedback. Today, a profound architectural shift is underway, driven by the rise of real-time streaming AI, and it is completely reshaping the art and science of building voice bots.

The future of voice AI is not just about what the bot says; it is about how and when it says it. The move from a turn-based to a streaming architecture is a quantum leap in creating live conversational AI systems that feel less like a robotic transaction and more like a fluid, natural dialogue.

A new generation of AI models powers this evolution, along with a new kind of voice infrastructure that supports the intense, bidirectional demands of low-latency audio streaming.

Table of contents

The “Old World”: The Turn-Based (VAD) Architecture

To understand the revolution, we must first look at the old model, which is based on a concept called Voice Activity Detection (VAD).

The Inefficiency of the “Wait for Silence” Model

In the traditional VAD-based architecture, the workflow was a rigid, sequential process:

- User Speaks: The user speaks a complete sentence or phrase.

- VAD Detects Silence: The system waits for a pause of a certain duration, which it interprets as the end of the user’s turn.

- Process the Full Utterance: Only after detecting silence does the system take the entire audio clip and send it to the Speech-to-Text (STT) engine for transcription.

- LLM Thinks: The full transcription is sent to the Large Language Model (LLM).

- TTS Synthesizes: The LLM’s full text response is sent to the Text-to-Speech (TTS) engine, which synthesizes the entire audio response.

- Bot Responds: The complete audio file is then played back to the user.

The problem with this model is the cumulative latency. Each step has to wait for the previous one to be 100% complete. This creates a noticeable, multi-second delay between the user finishing their sentence and the bot starting its response. It is this delay that makes traditional voice bots feel slow and robotic.

Also Read: From Text Chatbots to Voice Agents: How a Voice Calling SDK Bridges the Gap

The “New World”: The Streaming Architecture Revolution

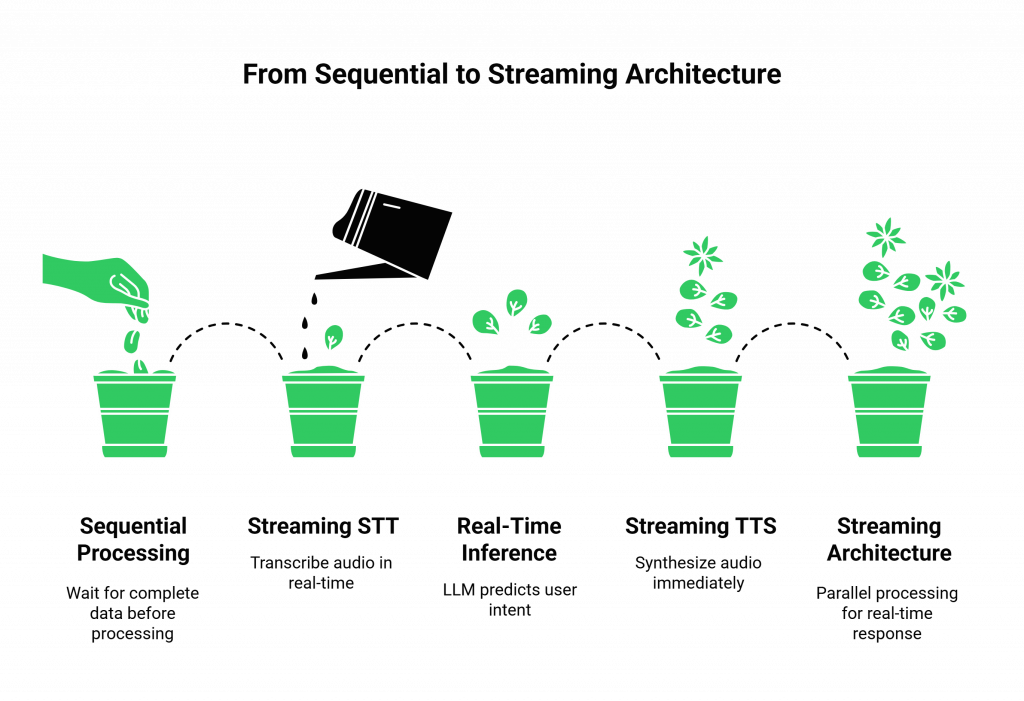

A real-time streaming architecture completely demolishes this sequential, “wait for it to be finished” model. It is a paradigm of parallel processing, where every part of the system is working simultaneously, in real-time. This is the heart of modern real-time AI voice processing.

The Power of a Continuous, Bidirectional Flow

In a streaming STT TTS architecture, the data is not a series of self-contained “clips”; it is a continuous, bidirectional river of audio packets.

- Streaming STT: The moment the user utters their first syllable, the audio stream begins to flow to the STT engine. The STT engine does not wait for the user to stop talking; it starts transcribing as the user is speaking. It can generate a real-time, “interim” transcript that is updated word by word.

- Real-Time Inference Optimization: The moment the STT has transcribed the first few words, that partial transcript can be sent to the LLM. The LLM can start to “think” about the user’s likely intent and begin formulating a response before the user has even finished their sentence. This real-time inference optimization is a key technique for reducing perceived latency.

- Streaming TTS: As soon as the LLM has generated the first few words of its response, that text can be sent to the TTS engine. The TTS engine does not need to wait for the full response; it can start synthesizing the audio for the first sentence immediately.

- First-Packet Latency: The synthesized audio can then start streaming back to the user. The critical metric is no longer the time to the end of the bot’s response, but the “time to first packet”, the delay until the user hears the very beginning of the response. By processing everything in parallel, this time can be reduced to a fraction of a second.

This table clearly illustrates the architectural shift.

| Characteristic | Turn-Based (VAD) Architecture | Real-Time Streaming Architecture |

| Data Flow | Sequential and chunk-based (full audio clips). | Parallel and continuous (a stream of audio packets). |

| STT Process | Starts after the user finishes speaking. | Starts as the user begins speaking. |

| LLM Process | Starts after the full transcript is ready. | Starts as the first few words are transcribed. |

| TTS Process | Starts after the full LLM response is ready. | Starts as the first part of the response is generated. |

| Key Latency Metric | Time to Last Byte (end of response). | Time to First Byte (start of response). |

| User Experience | Feels slow, with noticeable pauses. | Feels fast, fluid, and natural. |

Also Read: Security in Voice Calling SDKs: How to Protect Real-Time Audio Data

What is the Role of the Voice Infrastructure in a Streaming World?

This high-speed, parallel processing is only possible if the underlying voice infrastructure is designed for it. The process of building voice bots with streaming AI is deeply dependent on the capabilities of the voice platform.

The Need for True, Bidirectional Low-Latency Audio Streaming

The voice platform is no longer just a simple “pipe.” It must be a sophisticated, high-performance media server capable of handling a continuous, two-way flow of audio data with ultra-low latency. This is a far more demanding task than just connecting a call.

- The Uplink: It must be able to capture the audio from the phone call and stream it to your STT engine with minimal delay.

- The Downlink: It must be able to receive the synthesized audio stream from your TTS engine and play it back to the user instantly.

This principle sits at the core of the FreJun AI Teler engine. We architect our entire platform as a high-performance, low-latency audio streaming engine that provides the robust, real-time plumbing advanced live conversational AI systems require. Our developer-first APIs give you the granular, real-time control you need to manage these bidirectional streams.

Ready to start building voice bots that can think and talk at the speed of conversation? Sign up for FreJun AI and explore our real-time streaming capabilities.

The Future: Pre-emptive AI and Full-Duplex Conversation

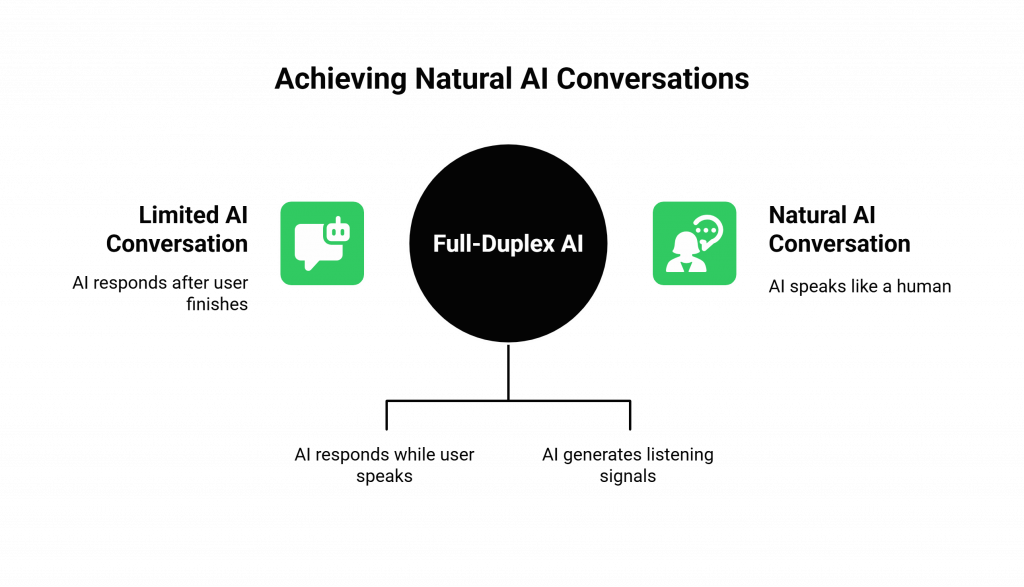

The streaming architecture is not the end of the road; it is the foundation for an even more advanced future. The next evolution in building voice bots is the move to “full-duplex” conversation, where the AI and the user can truly speak at the same time, just like humans do.

- Pre-emptive Response: As the AI listens to the user’s streaming transcript, it might predict the end of the user’s question before they even finish it. In a full-duplex system, it could start to respond while the user is still talking, creating an incredibly fast and natural interaction.

- Intelligent Backchanneling: The AI will also be able to generate “backchannel” responses—the “uh-huhs,” “okays,” and “I sees” that we use to signal that we are listening and engaged.

This level of conversational realism is the ultimate goal, and it is a goal that is only achievable with a true, real-time streaming architecture. The impact of this will be profound. A recent market analysis projects that the market for AI will contribute up to $15.7 trillion to the global economy by 2030, and these advanced, natural conversations will be a key driver of that value.

Also Read: 5 Common Mistakes Developers Make When Using Voice Calling SDKs

Conclusion

The evolution of building voice bots is a story of a relentless pursuit of speed. We are moving away from the slow, cumbersome, turn-based models of the past and entering a new era of fluid, fast, and natural-sounding live conversational AI systems. This revolution is powered by the architectural shift to real-time streaming AI, from the STT all the way through to the TTS.

But this powerful new generation of AI can only perform if it is built on a voice infrastructure that is designed for this new world. A platform that provides true, bidirectional low-latency audio streaming is no longer a “nice-to-have”; it is the essential, non-negotiable foundation for the future of voice.

Want to do a technical deep dive into our streaming architecture and see how you can use it for real-time inference optimization? Schedule a demo with our team at FreJun Teler.

Also Read: Top Automation Features Every Call Center Needs: IVR, Chatbots, Routing & More

Frequently Asked Questions (FAQs)

A turn-based bot waits for the user to finish speaking completely before it starts to process the audio. A real-time streaming bot starts processing the audio the moment the user begins to speak, allowing for a much faster and more natural conversation.

Streaming STT (Speech-to-Text) transcribes spoken audio into text in real time as the speaker talks. Streaming TTS (Text-to-Speech) begins generating and playing audio as soon as text arrives, which reduces the AI’s response time.

Real-time inference optimization lets the LLM start processing user intent early. It uses the first words from streaming STT instead of waiting for full sentences. This significantly reduces the AI’s perceived thinking time.

Low-latency audio streaming is the foundational plumbing of the system. The entire streaming AI architecture depends on fast audio packet exchange. Audio must move quickly between the user, platform, and AI models. Without this speed, parallel processing benefits disappear.

A full-duplex conversation is one where both parties can speak and be heard at the same time, just like a natural human conversation. This is the next frontier for live conversational AI systems and is only possible with a streaming architecture.

It does not always improve final answer accuracy. However, it improves interaction quality. Conversations feel more natural and fluid. Users are less likely to interrupt the bot. This leads to cleaner audio for STT. The overall outcome is better.

It involves a different, more event-driven programming model than a simple, turn-based bot. However, a modern voice platform like FreJun AI provides the APIs and tools to manage these real-time streams, abstracting away much of the low-level complexity.

FreJun AI provides the essential, high-performance voice infrastructure. Our Teler engine is design from the ground up to be a low-latency audio streaming platform. We provide the robust, real-time “plumbing” that connects your streaming AI models to the global telephone network.

Yes, but the system uses it in a more sophisticated way. Instead of acting as a simple start-and-stop signal, the VAD provides a continuous stream of information about whether the user is speaking, which helps the system manage turn-taking more intelligently.

The biggest advantage is the feeling. The conversation feels faster, more natural, and more engaging. The frustrating, awkward pauses of older voice bots are dramatically reduce. It make the AI feel more like a real conversational partner.