For developers at the forefront of AI, the conversation has moved beyond single, monolithic models. The new frontier is collaborative AI: building teams of specialized AI agents that can work together to solve complex problems. Frameworks like Microsoft’s AutoGen, orchestrated by tools like LangChain, make this “agent swarm” a reality. You can create a digital task force where one agent plans, another researches, and a third critiques the result.

But once you have assembled this brilliant, text-based team, how do you put them on the front lines of customer communication? How do you let a customer on a simple phone call interact with this powerful, collaborative intelligence? This is where a VoIP Calling API Integration for LangChain AutoGen Microsoft becomes the essential, game-changing technology. It is the bridge that connects your advanced AI ecosystem to the real world of voice, transforming a theoretical concept into a practical communication tool.

Table of contents

- Understanding the AI Power Trio: LangChain, AutoGen, and Microsoft

- The Critical Communication Barrier: From Text to Voice

- The Architectural Blueprint: How the Integration Works

- Why is FreJun AI the Essential Voice Layer for This Advanced Stack?

- Next-Generation Use Cases

- Conclusion

- Frequently Asked Questions (FAQ)

Understanding the AI Power Trio: LangChain, AutoGen, and Microsoft

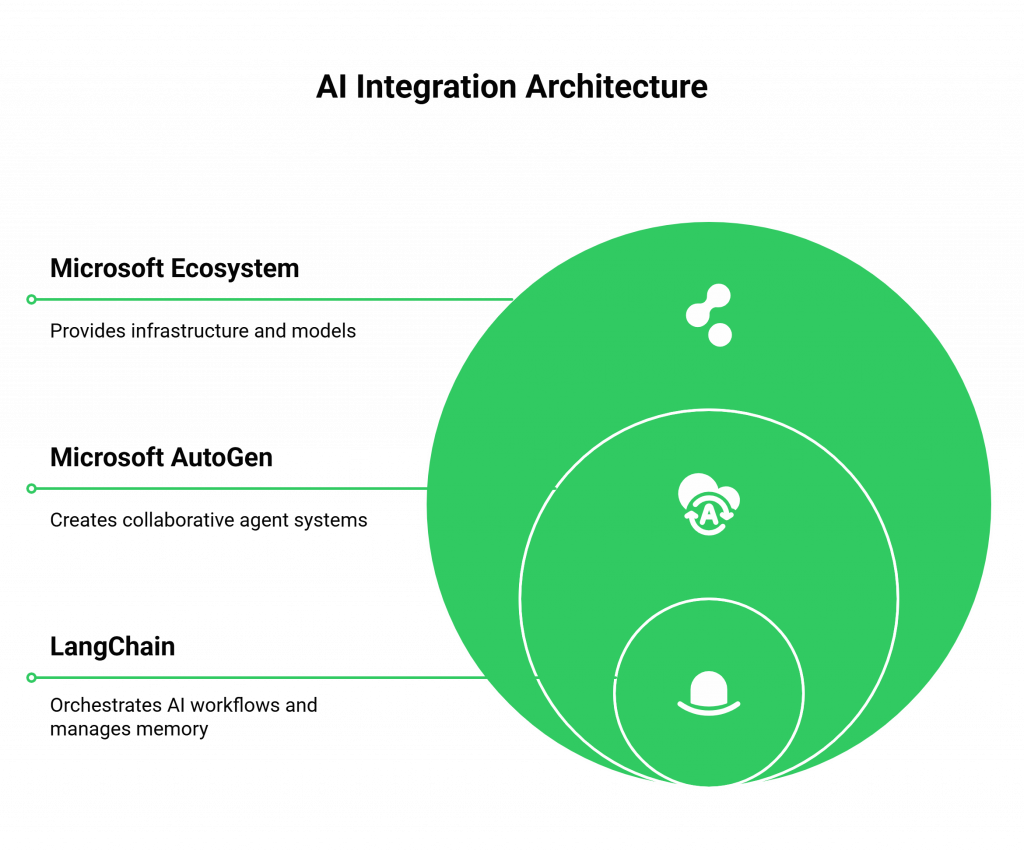

To grasp how the integration works, you first need to understand the roles of these three powerful components. Think of them as a highly organized team building a complex project.

- LangChain: The Conductor or Project Manager. LangChain is the framework that structures the entire AI workflow. It chains together calls to different models, manages memory so the AI can remember past interactions, and connects the AI to various tools (like APIs or databases). In our scenario, LangChain is the orchestrator that receives the user’s request and decides how to delegate the tasks.

- Microsoft AutoGen: The Team of Specialized Experts. AutoGen is a revolutionary framework for creating conversational applications using multiple, collaborating agents. Instead of one AI trying to do everything, you can define different agents with specific roles (e.g., a “Planner,” a “Coder,” a “Product Manager”). These agents can talk to each other to solve a problem, refining their approach until they reach an optimal solution. This is the “agent swarm.”

- The Microsoft Ecosystem: This entire stack is often powered by the robust infrastructure of Microsoft Azure, utilizing powerful models from Azure OpenAI Service. This provides the enterprise-grade computational power needed to run these complex, multi-agent interactions.

This trio allows you to build incredibly sophisticated, text-based problem-solving systems.
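The division of labor described above can be illustrated without any framework. The following is a minimal sketch in plain Python, not the actual AutoGen or LangChain API: `Agent`, `run_group_chat`, and the three role functions are hypothetical names, and each agent is a simple function standing in for a model call.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical stand-in for a model-backed agent: a name plus a function
# that reads the shared transcript and returns the agent's next message.
@dataclass
class Agent:
    name: str
    respond: Callable[[List[str]], str]

def planner(history: List[str]) -> str:
    return f"PLAN: answer the question '{history[0]}' in one sentence."

def writer(history: List[str]) -> str:
    return "DRAFT: Restart the router, then re-run the setup wizard."

def critic(history: List[str]) -> str:
    # Approve as soon as a draft exists; a real critic would check accuracy.
    has_draft = any(m.startswith("DRAFT:") for m in history)
    return "APPROVED" if has_draft else "REVISE: no draft yet."

def run_group_chat(question: str, agents: List[Agent], max_rounds: int = 3) -> List[str]:
    """Round-robin over the agents until the critic approves or rounds run out."""
    history = [question]
    for _ in range(max_rounds):
        for agent in agents:
            message = agent.respond(history)
            history.append(message)
            if message == "APPROVED":
                return history
    return history

team = [Agent("planner", planner), Agent("writer", writer), Agent("critic", critic)]
transcript = run_group_chat("My Wi-Fi setup fails. What should I do?", team)
final_answer = next(m for m in reversed(transcript) if m.startswith("DRAFT:"))
```

The shared `history` list plays the role of the group conversation: every agent sees what the others have said, and the loop stops once the critic signs off. In a real deployment each function would call a model instead of returning a fixed string.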


The Critical Communication Barrier: From Text to Voice

Connecting a multi-agent system like AutoGen to a live phone call is a challenge of a different magnitude. The real-time, unpredictable nature of a voice conversation introduces a host of problems that can derail even the smartest AI.

| Challenge | The DIY Telephony Approach | The VoIP API Integration Approach |
| --- | --- | --- |
| Real-Time Streaming | You must manually build and manage a stable, two-way audio stream. | A fully managed WebSocket connection handles all real-time audio transport. |
| Compounded Latency | Each step (STT, LangChain, AutoGen’s internal chatter, TTS) adds delay. A slow network makes it unusable. | An ultra-low latency network minimizes transport delay, preserving precious milliseconds for AI processing. |
| Reliability | A single dropped packet can disrupt the entire complex workflow. You are responsible for uptime. | Enterprise-grade infrastructure ensures the voice connection is rock-solid and always available. |
| Developer Focus | Your time is spent debugging SIP protocols instead of refining agent interactions. | You can focus 100% on the AI logic, which is where the real value lies. |

The complexity of a multi-agent AI system magnifies the need for a simple, reliable voice layer. A VoIP Calling API Integration for LangChain AutoGen Microsoft is designed to be exactly that.
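To see why compounded latency matters, it can help to add up a per-turn budget. The sketch below does that arithmetic in Python. Every figure is an illustrative assumption rather than a measurement of any service, and `turn_latency_ms` is a hypothetical helper.

```python
# Illustrative per-stage delays in milliseconds for one conversational turn.
# Every figure here is an assumption chosen for the example, not a benchmark.
STAGES_MS = {
    "stt": 300,
    "langchain_orchestration": 100,
    "autogen_agent_rounds": 1500,
    "tts": 250,
}

def turn_latency_ms(stages: dict, transport_ms: int) -> int:
    """Total delay the caller hears: processing stages plus audio transport.

    Transport is counted twice because the caller's audio travels to the
    application and the synthesized reply travels back to the caller.
    """
    return sum(stages.values()) + 2 * transport_ms

slow_network = turn_latency_ms(STAGES_MS, transport_ms=400)
fast_network = turn_latency_ms(STAGES_MS, transport_ms=50)
print(slow_network, fast_network)
```

With these assumed figures, one turn takes 2950 ms on the slow link and 2250 ms on the fast one. The AI stages dominate either way, which is why the transport layer should add as little as possible on top of them.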

The Architectural Blueprint: How the Integration Works

So, how does a spoken question from a customer trigger a collaborative session between multiple AI agents and result in a spoken answer? The process follows a sophisticated, high-speed architectural flow.

- The Call Connects (The Gateway): A customer calls a number managed by the VoIP API platform (like FreJun). The platform answers the call and immediately opens a secure WebSocket to your application server.

- Voice to Text (The Ears): As the customer speaks, their voice is captured and streamed as raw audio data through the WebSocket to your application. Your application forwards this stream to a real-time Speech-to-Text (STT) engine.

- Orchestration Begins (The Project Manager): The live transcript from the STT service is passed to your LangChain application. This acts as the entry point, receiving the user’s problem or query.

- The Agent Swarm Activates (The Expert Team): LangChain initiates the AutoGen workflow. It might pass the user’s query to a “User Proxy Agent,” which then presents the problem to a “Group Chat Manager.” This manager then facilitates a conversation between specialized agents. For example, a “Researcher Agent” might query a database, while a “Writer Agent” formulates the response, and a “Critic Agent” reviews it for accuracy. This all happens as a rapid, internal text-based conversation.

- The Final Answer is Synthesized: Once the agents have converged on a response, the AutoGen workflow returns a single, final text answer to LangChain.

- Text to Voice (The Mouthpiece): LangChain sends this final text response to a Text-to-Speech (TTS) service to convert it into high-quality, audible speech.

- The Spoken Response: This generated audio is streamed back through the VoIP API to the caller, completing the loop.

The entire complex workflow happens in a matter of seconds, enabled by a seamless VoIP Calling API Integration for LangChain AutoGen Microsoft.
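The seven steps listed above can be sketched as a single function. This is a simplified illustration under stated assumptions, not FreJun's, LangChain's, or AutoGen's actual API: `handle_call` and the three `fake_*` services are hypothetical names, the stubs avoid any network access, and the sketch processes one complete caller turn at a time instead of streaming partial transcripts.

```python
from typing import Callable, Iterable, Iterator

def handle_call(
    audio_frames: Iterable[bytes],
    transcribe: Callable[[bytes], str],
    answer: Callable[[str], str],
    synthesize: Callable[[str], bytes],
    frame_size: int = 320,
) -> Iterator[bytes]:
    """Run one caller turn: audio in -> text -> agents -> text -> audio out."""
    # Steps 1-2: collect the caller's audio as it arrives and transcribe it.
    caller_audio = b"".join(audio_frames)
    transcript = transcribe(caller_audio)
    # Steps 3-5: the orchestrator and agent team produce one final text answer.
    reply_text = answer(transcript)
    # Steps 6-7: synthesize speech and stream it back in fixed-size frames.
    reply_audio = synthesize(reply_text)
    for start in range(0, len(reply_audio), frame_size):
        yield reply_audio[start:start + frame_size]

# Stub services so the sketch runs on its own; real ones would call
# an STT engine, the agent workflow, and a TTS engine respectively.
def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")

def fake_agents(question: str) -> str:
    return f"You asked: {question}"

def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")

incoming = [b"where is ", b"my order?"]
outgoing = list(handle_call(incoming, fake_stt, fake_agents, fake_tts, frame_size=8))
reply = b"".join(outgoing).decode("utf-8")
```

Passing the three services in as functions keeps the voice transport independent of the AI logic: the STT engine, agent team, or TTS engine can be swapped without touching the call-handling loop.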


Why is FreJun AI the Essential Voice Layer for This Advanced Stack?

You are building one of the most advanced AI systems possible. The voice infrastructure that connects it to the world must be uncompromisingly reliable and fast. FreJun AI is designed to be that infrastructure. We are not an AI framework; we are the specialized voice transport layer that makes your framework accessible.

Our mission is to support your most ambitious projects: “We handle the complex voice infrastructure so you can focus on building your AI.”

Next-Generation Use Cases

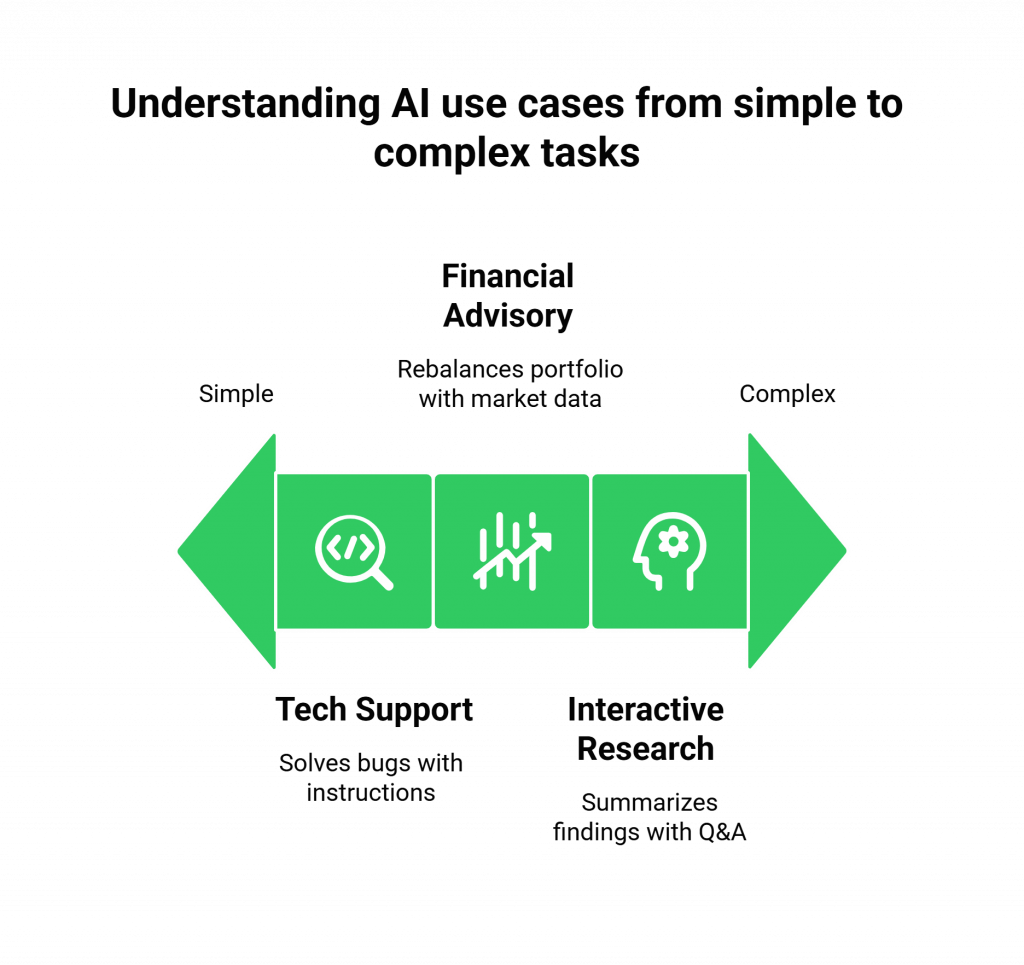

This integration unlocks possibilities that were pure science fiction just a few years ago.

- Live, Dynamic Tech Support: A user calls with a complex software bug. The AI triggers an agent to read the user’s logs, another agent to search a knowledge base for similar issues, and a third agent to write and deliver step-by-step troubleshooting instructions, all in a single, interactive phone call.

- Automated Financial Advisory: A client calls to ask about rebalancing their investment portfolio based on recent market news. The AI can trigger a “Data Analyst” agent to pull market data, a “Risk Analyst” agent to assess the current portfolio, and a “Financial Advisor” agent to synthesize a personalized recommendation and explain the reasoning verbally.

- On-Demand, Interactive Research: A user calls a research hotline and asks, “Summarize the latest findings on quantum computing for me.” The AI can deploy agents to search academic papers, synthesize the findings, and deliver a concise, spoken summary, complete with follow-up Q&A.

Conclusion

The evolution of AI has reached an exciting inflection point with collaborative, multi-agent systems. Frameworks like LangChain and Microsoft’s AutoGen are giving developers the tools to build AI that can reason, plan, and solve problems with unprecedented sophistication. However, this power remains locked in a text-based world without a reliable voice channel.

A robust VoIP Calling API Integration for LangChain AutoGen Microsoft is the key that unlocks this potential for real-world communication.

By partnering with a dedicated voice infrastructure provider like FreJun, you can offload the immense complexity of telecommunications and focus on building the next generation of intelligent, collaborative voice agents. You create the team of experts; we give them the phone line to talk to the world.


Frequently Asked Questions (FAQ)

What is Microsoft AutoGen?

AutoGen is an open-source framework from Microsoft that simplifies the creation of applications using multiple, collaborating AI agents. It allows you to define specialized agents that can “talk” to each other to accomplish complex tasks.

Why use a multi-agent system instead of a single model?

A multi-agent system allows for specialization and a “divide and conquer” approach. By assigning specific roles and encouraging collaboration and critique, these systems can often solve more complex, multi-step problems more reliably than a single model.

What role does LangChain play in this integration?

LangChain acts as the primary orchestrator. It provides the structure for the overall AI application, manages memory and tool usage, and initiates and manages the multi-agent workflow within the AutoGen framework.

How is latency managed in such a complex system?

Latency management is critical. It involves optimizing every step: using a low-latency voice API (FreJun), choosing fast STT and TTS services, and using powerful compute resources (like Azure) to ensure the AI’s internal “chatter” is as fast as possible.