In the exciting world of conversational AI, we often focus on the “brain” of the operation: the Large Language Model (LLM). We celebrate its intelligence, its ability to understand context, and its power to generate human-like text. But on its own, this brilliant brain is deaf and mute. In a voice bot, it perceives the world through a single, critical sense: its ability to “hear.”

And the quality of what it hears is the single most important, yet often overlooked, factor that determines its success or failure. When it comes to building voice bots, the quality of your audio stream is not just a technical detail; it is the foundation upon which the entire experience is built.

A voice bot is not a single piece of technology; it is a complex, real-time pipeline of data processing. At the very front of this pipeline is the raw, analog chaos of a human voice traveling over a phone line. If the audio that enters this pipeline is corrupted by noise, distortion, or packet loss, the failure will cascade through every subsequent step.

A garbled input will lead to a nonsensical transcription, which will lead to a confused LLM, which will result in an irrelevant and frustrating response for the user. This is the “garbage in, garbage out” problem, and it is the arch-nemesis of a successful voice bot.

What is the “Garbage In, Garbage Out” Problem in Voice AI?

The “garbage in, garbage out” (GIGO) principle is a concept as old as computing itself. It means that the quality of the output is determined by the quality of the input. In the context of voice AI, this principle is magnified. The “input” for a voice bot is not a clean, structured piece of text; it is a messy, unpredictable stream of audio.

The journey from a spoken word to an AI’s response involves a critical first step: Speech-to-Text (STT) transcription. The STT engine is the “ears” of your AI. Its job is to listen to the audio stream and convert it into a written transcript.

The accuracy of this transcription is paramount. If the audio is clean and clear, a modern STT engine can achieve accuracy rates of over 95%. However, if the audio is poor, that accuracy can plummet dramatically.

This is the core of the problem. If the STT engine mishears “I need to book a flight” as “I need a boot right,” your incredibly intelligent LLM, for all its power, is now working with a completely incorrect premise. It will provide a perfectly logical but utterly useless response. The customer is frustrated, the conversation is derailed, and the expensive AI you have built has failed.
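
To put a number on that kind of mishearing, STT quality is commonly measured as word error rate (WER): the word-level edit distance between what was said and what was transcribed, divided by the number of words actually spoken. The sketch below is a minimal, illustrative implementation applied to the example above; the function name `word_error_rate` is our own and does not come from any particular STT vendor's API.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # reference word was dropped (deletion)
                curr[j - 1] + 1,     # extra word was transcribed (insertion)
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


wer = word_error_rate("I need to book a flight", "I need a boot right")
print(f"WER: {wer:.2f}")  # WER: 0.67
```

Four of the six spoken words are lost or changed, so roughly two thirds of the sentence is wrong before the LLM ever sees it.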

How Does a High-Quality Voice Infrastructure Provide the Solution?

The key to solving the GIGO problem is to ensure that the audio stream is as clean and clear as possible before it ever reaches your STT engine. This is where the choice of your underlying voice platform becomes a critical architectural decision. When you are building voice bots, you are not just choosing an API; you are choosing the entire audio transport layer.

A modern, carrier-grade voice platform like FreJun AI is not just a passive pipe; it is an active, intelligent system that is obsessively engineered to protect and optimize the audio stream at every step of its journey. This is achieved through a combination of a superior network architecture, advanced audio processing, and intelligent codec selection.

The Foundational Role of a Low-Latency, Edge-Native Network

Good audio starts with a good connection.

- Minimizing Packet Loss and Jitter: The public internet is a chaotic place. Audio packets can get lost or arrive in an uneven, spiky pattern (a phenomenon known as “jitter”), both of which are devastating to audio quality. A high-quality voice platform has a globally distributed, private network backbone. It onboards your call at an “edge” location physically close to your user and then transports the audio over its own managed, optimized network, which is far more reliable than the public internet. This is the first and most important step in improving call clarity.

- Lowering Latency: While not strictly an “audio quality” metric, low latency is a critical component of the perceived quality of a conversation. A globally distributed network that handles calls at the edge is the most effective way to reduce the round-trip time and create a snappy, responsive experience.
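
To make packet loss and jitter concrete, here is a minimal sketch of how the two can be estimated from packet timestamps and sequence numbers. The jitter function follows the running-average formula described in RFC 3550 for RTP; the function names, the sample timings, and the simplified loss calculation (which ignores sequence-number wraparound) are illustrative assumptions, not any platform's actual implementation.

```python
def interarrival_jitter(send_times_ms, recv_times_ms):
    """Running jitter estimate in the style of RFC 3550, in milliseconds."""
    jitter = 0.0
    for i in range(1, len(send_times_ms)):
        # How much the spacing between two packets changed in transit.
        recv_gap = recv_times_ms[i] - recv_times_ms[i - 1]
        send_gap = send_times_ms[i] - send_times_ms[i - 1]
        d = recv_gap - send_gap
        # Smooth the estimate with a gain of 1/16, as RFC 3550 does.
        jitter += (abs(d) - jitter) / 16
    return jitter


def packet_loss_ratio(received_seq_numbers):
    """Fraction of packets missing between the lowest and highest sequence
    number seen. Simplification: ignores sequence-number wraparound."""
    if not received_seq_numbers:
        return 0.0
    expected = max(received_seq_numbers) - min(received_seq_numbers) + 1
    received = len(set(received_seq_numbers))
    return (expected - received) / expected


# Hypothetical call: one packet sent every 20 ms.
sent = [0, 20, 40, 60, 80]
steady_arrivals = [50, 70, 90, 110, 130]  # constant 50 ms delay
spiky_arrivals = [50, 75, 88, 115, 130]   # uneven delay

print("steady jitter:", interarrival_jitter(sent, steady_arrivals))
print("spiky jitter:", interarrival_jitter(sent, spiky_arrivals))
print("loss ratio:", packet_loss_ratio([1, 2, 4, 5, 8]))
```

A constant delay produces zero jitter no matter how long it is; only the variation in delay counts, which is why a steady managed route can sound better than a faster but erratic one.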

The Power of Intelligent Audio Preprocessing Pipelines

Before the audio is even sent to your STT engine, a sophisticated voice platform can perform a series of real-time enhancements. These audio preprocessing pipelines are designed to clean up the signal.

- Advanced Noise Reduction Techniques: The platform can use AI-powered algorithms to identify and suppress common sources of background noise, such as a barking dog, a passing siren, or the hum of an office.

- Echo Cancellation: It can remove the echo of the AI’s own voice from the audio stream, ensuring that the STT engine is only listening to the user.

- Automatic Gain Control (AGC): It can automatically normalize the volume of the caller’s voice, boosting quiet speakers and reducing loud ones to create a consistent, clear signal.
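
As an illustration of the simplest of these steps, the sketch below shows a bare-bones form of automatic gain control: each short frame of audio is scaled so that its loudness (RMS level) lands on a target value. The names `apply_agc`, `target_rms`, and `max_gain` are assumptions made for this example. A production AGC would also smooth the gain between frames and avoid boosting frames that contain only noise, and nothing here should be read as how any specific platform implements it.

```python
import math


def rms(samples):
    """Root-mean-square level of a frame of float samples."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def apply_agc(frame, target_rms=0.1, max_gain=10.0):
    """Scale one frame of samples (floats in -1.0..1.0) toward target_rms."""
    level = rms(frame)
    if level == 0.0:
        return list(frame)  # pure silence: nothing to normalize
    # Cap the gain so very quiet frames are not amplified without limit.
    gain = min(target_rms / level, max_gain)
    # Clip to the valid sample range in case boosted peaks exceed it.
    return [max(-1.0, min(1.0, s * gain)) for s in frame]


def tone(amplitude, n=160, freq_hz=440.0, sample_rate=8000):
    """Hypothetical test signal: a 20 ms sine tone at telephone sample rate."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate)
            for i in range(n)]


quiet_speaker = tone(0.05)
loud_speaker = tone(0.8)
print("quiet before/after:", rms(quiet_speaker), rms(apply_agc(quiet_speaker)))
print("loud before/after:", rms(loud_speaker), rms(apply_agc(loud_speaker)))
```

Both frames come out at the same level, which is the consistent signal the STT engine benefits from.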

The Importance of Codec Selection Best Practices

A codec (coder-decoder) is the algorithm that compresses and decompresses the audio. The choice of codec has a huge impact on both the quality of the sound and the amount of bandwidth it requires.

- Using High-Fidelity Codecs: A modern platform will support high-fidelity codecs like Opus, which can deliver crystal-clear, wideband audio. Using a high-quality codec is a key part of codec selection best practices as it provides the STT engine with a much richer and more detailed audio signal to work with.

- Dynamic Codec Negotiation: The platform can negotiate the best codec that both endpoints support and that current network conditions can sustain, balancing quality and performance on every call.
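
The core idea behind codec negotiation can be sketched in a few lines: keep a preference list ordered from highest to lowest fidelity and pick the first entry the other side also supports. The preference order and the `negotiate_codec` helper below are illustrative assumptions; the sample rates are the standard ones for each codec. Real negotiation happens through SDP offer/answer and may also weigh network conditions, which this sketch ignores.

```python
# Highest-fidelity first. Values are the audio sample rates in Hz:
# Opus supports up to 48 kHz, G.722 is 16 kHz wideband, and
# PCMU/PCMA (G.711) are 8 kHz narrowband telephone audio.
PREFERENCE = {
    "opus": 48000,
    "G722": 16000,
    "PCMU": 8000,
    "PCMA": 8000,
}


def negotiate_codec(offered, preference=PREFERENCE):
    """Return the most preferred codec that the remote side also offers,
    or None if there is no codec in common."""
    offered_lower = {name.lower() for name in offered}
    for codec in preference:  # dicts preserve insertion order
        if codec.lower() in offered_lower:
            return codec
    return None


print(negotiate_codec(["PCMU", "opus"]))  # opus
print(negotiate_codec(["PCMA", "PCMU"]))  # PCMU
print(negotiate_codec(["G729"]))          # None
```

When the only common codec is narrowband G.711, the STT engine receives 8 kHz audio regardless of how good the microphone was, which is why wideband support on both ends matters.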

This table summarizes the key audio quality challenges and how a modern voice platform solves them.

| Audio Quality Problem | Impact on Voice Bot | How a High-Quality Voice Platform Solves It |
| --- | --- | --- |
| Background Noise | Leads to inaccurate STT transcription. | Implements advanced, AI-powered noise reduction techniques. |
| Packet Loss & Jitter | Causes choppy, garbled audio with missing words. | Uses a private, managed network backbone and an adaptive jitter buffer. |
| Low-Fidelity Audio | Provides a “muffled” or “narrow” signal that is harder for STT to process. | Supports and prioritizes modern, high-fidelity codecs like Opus. |
| Echo | The STT gets confused by hearing the AI’s own voice. | Implements real-time acoustic echo cancellation (AEC). |

Ready to build your voice bot on a foundation of crystal-clear, reliable audio? Sign up for FreJun AI and explore our voice infrastructure.

What Are the Tangible Business Benefits of Investing in Audio Quality?

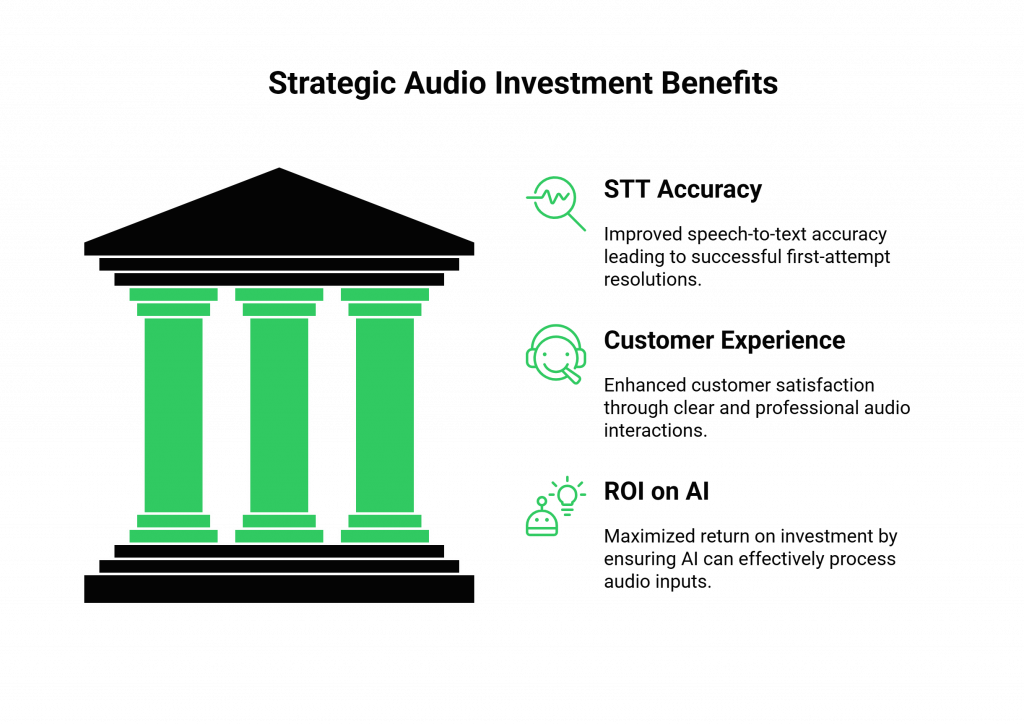

Focusing on audio quality is not just about technical perfection; it is a strategic decision that has a direct and measurable impact on the success of your voice AI investment.

Increased STT Accuracy and Higher Resolution Rates

This is the most direct benefit. A cleaner audio signal improves STT accuracy, which gives the LLM correct input more often. As a result, more conversations resolve the customer’s issue on the first attempt, without escalation to a human agent.

A Superior Customer Experience

A call that is clear, crisp, and easy to understand is a fundamentally better customer experience. It shows a level of professionalism and respect for the customer’s time. Conversely, a choppy, garbled call is a major source of frustration and can damage your brand’s reputation.

A Higher ROI on Your AI Investment

You have invested a significant amount of money in developing the “brain” of your AI. Poor audio quality is like putting that brilliant brain in a soundproof box. By investing in a high-quality voice infrastructure, you are ensuring that your AI can actually hear the world, which is the key to unlocking its full potential and achieving the highest possible return on your investment.

Conclusion

In the exciting process of building voice bots, it is easy to become hyper-focused on the intelligence of the LLM. But the most brilliant AI on the planet is useless if it is deaf. The quality of the audio that feeds your AI system is the single most important determinant of its success.

A low-quality, noisy audio stream inevitably creates a frustrating and ineffective user experience. When you choose a modern voice platform engineered for audio quality across its network architecture, audio preprocessing pipelines, and codec selection, you build your voice bot on a foundation of clarity.

This investment in a clean audio signal is the key to better STT accuracy and to delivering an AI-powered conversational experience that is not just functional, but truly exceptional.

Want a technical deep dive into our audio processing pipeline, or to see how our infrastructure can help you build a more accurate and reliable voice bot? Schedule a demo with our team at FreJun Teler.

Frequently Asked Questions (FAQs)

Why is audio quality so important for a voice bot?

Audio quality is critical because the Speech-to-Text (STT) engine, which is the “ears” of your voice bot, relies on a clean audio signal to accurately transcribe what the user is saying. Poor audio leads to poor transcription, which leads to poor AI responses.

What does “garbage in, garbage out” mean for voice AI?

It means that if you feed a voice bot a low-quality, “garbage” audio input (e.g., a noisy, choppy signal), you will get a low-quality, “garbage” output (an irrelevant or incorrect response), regardless of how intelligent your AI model is.

What are noise reduction techniques?

Noise reduction techniques are algorithms, often powered by AI, that can identify and suppress unwanted background sounds (like traffic, a fan, or other people talking) from the main audio stream, resulting in a clearer signal for the STT engine.

What are audio preprocessing pipelines?

Audio preprocessing pipelines apply a series of real-time enhancements to an audio stream before the platform sends it to the AI. These enhancements include noise reduction, echo cancellation, and automatic gain control to optimize the audio for transcription.

What are the codec selection best practices?

Codec selection best practices involve prioritizing modern, high-fidelity codecs like Opus, which provide wideband audio for a richer, more detailed sound, and ensuring your platform can dynamically negotiate the best codec based on network conditions.

How does a globally distributed network improve call clarity?

A globally distributed network improves call clarity by reducing packet loss and jitter. By onboarding the call at a location close to the user and transporting it over a private, managed network, it avoids much of the congestion and unpredictability of the public internet.