The customer support landscape is in the midst of a seismic shift. The traditional model of long hold times and overworked agents is being replaced by a new paradigm: instant, intelligent, and automated assistance. At the forefront of this revolution is the voice bot.

For a business, the promise is transformative: the ability to provide 24/7, scalable, and highly efficient support. For a developer, the journey of building voice bots is an exciting venture into the heart of modern AI and communication technology.

But for those new to this world, the prospect can be intimidating. Where do you even begin? How do you go from a simple idea (“let’s automate our password resets”) to a fully functional, production-grade AI that can have a natural conversation with a real customer? The process is more accessible than you might think.

It is a logical, step-by-step journey that starts with a clear plan and builds on powerful, developer-friendly tools. This voice bot setup guide provides a clear, beginner-friendly roadmap, from initial concept to a working prototype.

What is the Foundational Philosophy of a Good Voice Bot?

Before you write a single line of code, it is crucial to understand the core principle of a successful customer support voice bot: it is a specialist, not a generalist. The most common mistake beginners make is trying to build a bot that can “do everything.” This is a recipe for a frustrating and ineffective user experience.

The best voice bots are designed to do one or two things exceptionally well. Your goal is not to replace your entire human support team on day one. Your goal is to identify the most common, repetitive, and time-consuming inquiries that your human agents handle and build a bot that can automate those specific tasks flawlessly. A bot scoped this way can resolve those calls end to end without a human handoff, an outcome usually measured as “containment,” and that is the key to a successful customer support automation workflow.

A recent study on contact center automation found that automating just the top 5 most common inquiries can often deflect over 40% of inbound call volume.

What are the Foundational Components of a Voice Bot?

Every voice bot, from the simplest to the most complex, is built from the same three core technological pillars. Understanding these foundational components of voice bots is the first step in the technical journey.

- The “Ears” – Speech-to-Text (STT): This is the technology that listens to the raw audio of the caller’s voice and transcribes it into written text. This is the “input” to your AI’s brain.

- The “Brain” – A Natural Language Understanding (NLU) engine or a Large Language Model (LLM): This is where the intelligence lives. It takes the transcribed text, understands the user’s intent (what they want to do), and decides on the appropriate action or response.

- The “Mouth” – Text-to-Speech (TTS): This technology takes the AI’s text-based response and synthesizes it into a natural-sounding, human-like voice. This is the “output” that the caller hears.

As a developer building voice bots, your primary job is to orchestrate the flow of data between these three components and to connect the “brain” to your own business logic and systems.
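
To make that orchestration concrete, here is a minimal sketch of a single conversational turn in Python. The three functions `fake_stt`, `fake_brain`, and `fake_tts` are hypothetical stand-ins, not real provider APIs: the "audio" is plain text encoded as bytes so the example runs without any external service.

```python
from typing import Callable

# Hypothetical stand-ins for real STT, LLM, and TTS services.
def fake_stt(audio: bytes) -> str:
    """Pretend to transcribe audio; here the 'audio' is just UTF-8 text."""
    return audio.decode("utf-8")

def fake_brain(text: str) -> str:
    """Pick a reply with a trivial keyword check (a real bot would call an NLU or LLM)."""
    if "order" in text.lower():
        return "Could you please tell me your order number?"
    return "Sorry, I did not catch that."

def fake_tts(text: str) -> bytes:
    """Pretend to synthesize speech; returns the reply text as bytes."""
    return text.encode("utf-8")

def handle_turn(
    audio_in: bytes,
    stt: Callable[[bytes], str],
    brain: Callable[[str], str],
    tts: Callable[[str], bytes],
) -> bytes:
    """Run one turn: ears (STT) -> brain (NLU/LLM) -> mouth (TTS)."""
    transcript = stt(audio_in)
    reply_text = brain(transcript)
    return tts(reply_text)

reply_audio = handle_turn(b"I'd like to check on my order", fake_stt, fake_brain, fake_tts)
print(reply_audio.decode("utf-8"))
```

Because `handle_turn` only depends on three callables, each stand-in can later be swapped for a real service without touching the orchestration itself.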

What Are the Steps to Build an AI Voice Assistant?

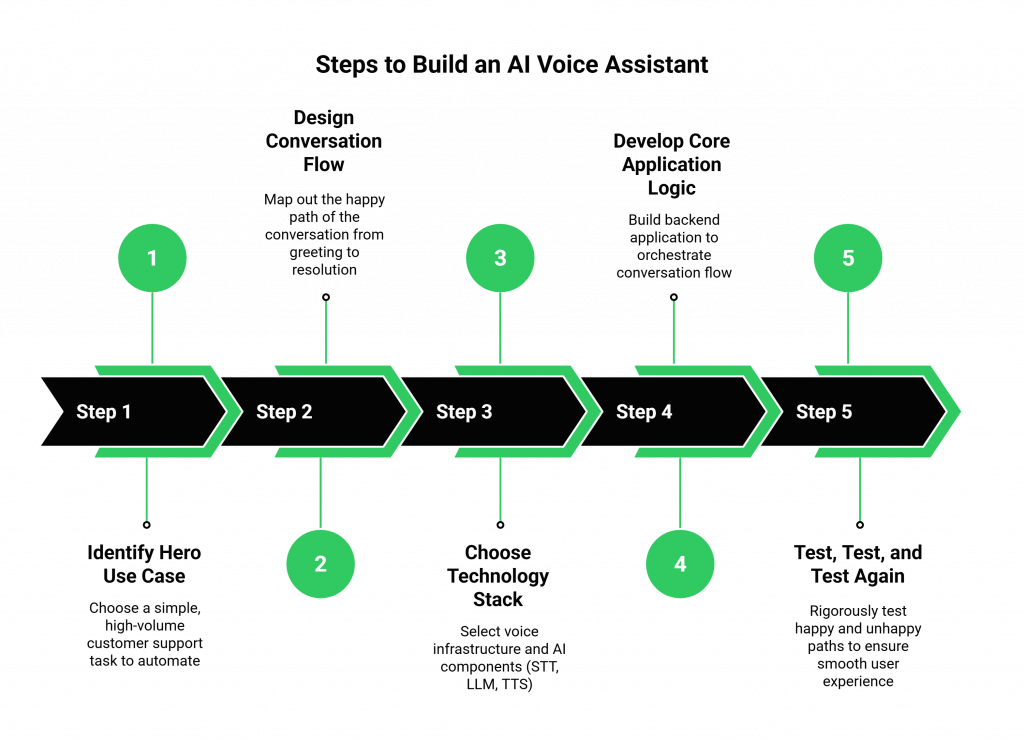

The journey of building voice bots can be broken down into a logical, multi-step process.

Step 1: Identify Your “Hero” Use Case

This is the strategic starting point. Do not boil the ocean. Work with your customer support team to identify the single most common and automatable reason that customers call.

- Is it to check the status of an order?

- Is it to reset a password?

- Is it to get your business’s hours and location?

Choose a simple, high-volume use case. This will be the “hero” of your first voice bot. Let’s use “order status lookup” as our example for the rest of this guide.
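
If your support team already tags calls with a reason, a few lines of Python can rank the candidates. This is only a sketch: the `call_reasons` list is made-up sample data, and the reason labels are hypothetical.

```python
from collections import Counter

# Hypothetical call log: one tagged reason per inbound call.
call_reasons = [
    "order_status", "password_reset", "order_status", "billing_question",
    "order_status", "store_hours", "password_reset", "order_status",
    "returns", "order_status",
]

def rank_use_cases(reasons: list[str]) -> list[tuple[str, float]]:
    """Return (reason, share_of_calls) pairs, most frequent first."""
    counts = Counter(reasons)
    total = len(reasons)
    return [(reason, count / total) for reason, count in counts.most_common()]

ranking = rank_use_cases(call_reasons)
hero, hero_share = ranking[0]
print(f"Hero use case: {hero} ({hero_share:.0%} of calls)")
```

Volume alone does not settle the choice. The top-ranked reason still needs to be simple enough to automate, which is a judgment call to make with your support team.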

Step 2: Design the Conversation Flow

This is where you act as a playwright. You need to map out the “happy path” of the conversation, from the initial greeting to the final resolution.

- Greeting: “Hello, thank you for calling. You’ve reached our automated assistant. How can I help you today?”

- Intent Recognition: The user says, “I’d like to check on my order.” Your NLU model will need to be trained, or your LLM prompted, to recognize this intent.

- Data Gathering: The bot needs the order number. “I can help with that. Could you please tell me your order number?”

- Action: The bot takes the order number and makes an API call to your e-commerce system to look up the status.

- Resolution: The bot receives the status and reports it back to the user. “I’ve found your order. It is currently out for delivery and is expected to arrive by 5 PM today.”
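
One way to pin down the flow above is a small state machine. The sketch below is an illustration rather than a production design: `FAKE_ORDERS` and `lookup_order_status` are hypothetical stand-ins for your e-commerce API, and intent recognition is reduced to a keyword check.

```python
import re
from enum import Enum, auto
from typing import Optional

class State(Enum):
    GREETING = auto()
    AWAITING_ORDER_NUMBER = auto()
    DONE = auto()

# Hypothetical order data standing in for a real e-commerce API.
FAKE_ORDERS = {"12345": "out for delivery"}

def lookup_order_status(order_number: str) -> Optional[str]:
    """Return the order status, or None if the order is unknown."""
    return FAKE_ORDERS.get(order_number)

def next_turn(state: State, user_text: str) -> tuple[State, str]:
    """Given the current state and the caller's words, return (new_state, bot_reply)."""
    if state is State.GREETING:
        if "order" in user_text.lower():
            return (State.AWAITING_ORDER_NUMBER,
                    "I can help with that. Could you please tell me your order number?")
        return State.GREETING, "Sorry, I can only help with order status. How can I help you today?"
    if state is State.AWAITING_ORDER_NUMBER:
        match = re.search(r"\d+", user_text)  # first run of digits in the utterance
        status = lookup_order_status(match.group()) if match else None
        if status is None:
            return State.AWAITING_ORDER_NUMBER, "I could not find that order. Could you repeat the number?"
        return State.DONE, f"I've found your order. It is currently {status}."
    return State.DONE, "Thank you for calling. Goodbye."

state, reply = next_turn(State.GREETING, "I'd like to check on my order")
print(reply)
state, reply = next_turn(state, "It's 12345")
print(reply)
```

Writing the flow this way makes every turn explicit, which also makes it easier to see where the conversation can go wrong.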

Step 3: Choose Your Technology Stack

This is where you select the tools for the job.

- The Voice Infrastructure: This is the most critical decision. You need a platform that will handle the immense complexity of the phone call itself. This is where a provider like FreJun AI comes in. Our platform provides the voice calling SDK and the powerful Teler engine that acts as the bridge to the phone network. It handles the real-time SIP media streaming and gives you the simple API tools to control the call.

- The AI Components (STT, LLM, TTS): Thanks to the model-agnostic nature of a platform like FreJun AI, you have the freedom to choose the best-in-class AI models from any provider (Google, OpenAI, Microsoft, etc.) that best fit your needs and budget.

Step 4: Develop the Core Application Logic (Your “AgentKit”)

This is where you write the code. Your backend application (your “AgentKit”) is the central orchestrator.

- Set up a Webhook Endpoint: You will configure a phone number on the FreJun AI platform to point to a URL on your application server. When a call comes in, we will send you a webhook.

- Orchestrate the Flow: Your application’s code will be responsible for receiving the events from our platform and sending back commands. When you receive the user’s transcribed speech, you will send it to your LLM. When your LLM gives you a response, you will use our API to send that response to our TTS engine and play it back to the user.
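
The shape of that orchestration can be sketched as a function that maps one incoming event to one outgoing command. Everything about the payloads here is an assumption made for illustration: the event names (`call.started`, `speech.transcribed`), the `transcript` field, and the `speak` command are hypothetical and are not FreJun AI's actual schema, so check the platform documentation for the real one. `ask_llm` is a placeholder for a call to your chosen LLM.

```python
import json

def ask_llm(transcript: str) -> str:
    """Placeholder for a call to your chosen LLM provider."""
    if "order" in transcript.lower():
        return "I can help with that. Could you please tell me your order number?"
    return "Sorry, could you rephrase that?"

def handle_event(event: dict) -> dict:
    """Map one incoming event to one command (all field names are hypothetical)."""
    event_type = event.get("type")
    if event_type == "call.started":
        return {"action": "speak",
                "text": "Hello, thank you for calling. How can I help you today?"}
    if event_type == "speech.transcribed":
        return {"action": "speak", "text": ask_llm(event.get("transcript", ""))}
    # Unknown or terminal events are acknowledged without further action.
    return {"action": "none"}

def handle_webhook_body(body: bytes) -> bytes:
    """Decode a JSON webhook body, handle it, and encode the JSON reply."""
    try:
        event = json.loads(body)
    except ValueError:  # covers malformed JSON and undecodable bytes
        return json.dumps({"action": "none", "error": "invalid JSON"}).encode("utf-8")
    if not isinstance(event, dict):
        return json.dumps({"action": "none", "error": "expected an object"}).encode("utf-8")
    return json.dumps(handle_event(event)).encode("utf-8")

print(handle_webhook_body(b'{"type": "speech.transcribed", "transcript": "check my order"}'))
```

Keeping `handle_event` free of any web framework code means the same logic can sit behind whichever HTTP server you prefer and can be unit tested without a live call.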

Ready to start this journey and bring your first voice bot to life? Sign up for FreJun AI and explore our developer-friendly tools.

Step 5: Test, Test, and Test Again

This is one of the most important steps in building an AI voice assistant. You need to test your bot rigorously before it ever talks to a real customer.

- Test the “Happy Path”: Make sure the primary conversation flow works perfectly.

- Test the “Unhappy Path”: What happens if the user gives a fake order number? What if they stay silent? What if they ask a question your bot does not understand? You must build in graceful error handling and a clear path to escalate to a human agent if the bot gets confused.
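
A simple way to guarantee the bot never loops forever is to count failed turns and escalate after a limit. The sketch below assumes a limit of two failed attempts and a short list of escalation keywords. Both are illustrative choices, and the function name and return values are hypothetical.

```python
MAX_FAILED_ATTEMPTS = 2  # assumed threshold; tune it for your own callers
ESCALATION_PHRASES = ("human", "agent", "representative")

def handle_unhappy_turn(user_text: str, failed_attempts: int) -> tuple[str, int]:
    """Decide what to do with a turn the bot could not resolve.

    Returns (action, updated_failed_attempts), where action is
    'reprompt' or 'escalate'. Silence arrives as an empty string.
    """
    text = user_text.strip().lower()
    # An explicit request for a person always wins.
    if any(phrase in text for phrase in ESCALATION_PHRASES):
        return "escalate", failed_attempts
    failed_attempts += 1
    if failed_attempts >= MAX_FAILED_ATTEMPTS:
        return "escalate", failed_attempts
    return "reprompt", failed_attempts

# Unhappy-path checks: silence, repeated confusion, and an explicit request.
assert handle_unhappy_turn("", 0) == ("reprompt", 1)
assert handle_unhappy_turn("what?", 1) == ("escalate", 2)
assert handle_unhappy_turn("I need to talk to a human", 0) == ("escalate", 0)
print("unhappy-path checks passed")
```

Checks like these are cheap to run on every code change, so regressions in error handling show up before a customer hears them.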

A recent study on customer experience found that 46% of customers will abandon a brand if its employees (including its digital ones) are not knowledgeable. A bot that gets stuck in a loop is a major source of this frustration.

This table provides a summary of the development journey.

| Step | Key Activity | Primary Goal |
| --- | --- | --- |
| 1. Identify Use Case | Analyze call center data. | Choose a simple, high-volume, and repetitive task to automate first. |
| 2. Design Conversation | Map out the conversational turns. | Create a clear, efficient, and natural-sounding “happy path” for the user. |
| 3. Choose Technology | Select a voice infrastructure provider and AI models. | Choose flexible, scalable, and developer-friendly tools. |
| 4. Develop Logic | Write the backend application code. | Orchestrate the flow of data between the voice platform and your AI brain. |
| 5. Test Rigorously | Test both “happy” and “unhappy” paths. | Ensure the bot is reliable, handles errors gracefully, and is ready for real users. |

Conclusion

The journey of building voice bots for customer support is an accessible and incredibly rewarding one for a modern developer. By starting with a clear, focused use case and by following a logical, step-by-step process, you can move from concept to reality with surprising speed.

The key is to build on a powerful, developer-first foundation. By leveraging a modern voice infrastructure platform like FreJun AI to handle the immense complexity of real-time telephony, you are freed up to focus on the most exciting part of the process: building the intelligence and the personality of your AI.

The era of the voice-first customer experience is here, and you now have the roadmap to start building it.

Want a personalized walkthrough of our platform and a live demonstration of how to build your first voice bot? Schedule a demo with our team at FreJun Teler.

Frequently Asked Questions (FAQs)

The very first and most important step for building voice bots for beginners is a strategic one: identify a single, simple, high-volume, and repetitive task to automate. Do not try to build a bot that can do everything at once.

The three foundational components of voice bots are a Speech-to-Text (STT) engine to listen, a Natural Language Understanding (NLU) engine or Large Language Model (LLM) to think, and a Text-to-Speech (TTS) engine to speak.

A customer support automation workflow is a designed process where a recurring customer inquiry is handled by an automated system, like a voice bot, from the initial contact to the final resolution, without needing a human agent.

The most common mistake is trying to make the bot a generalist that can answer any question. This usually results in a bot that is not very good at anything. The best practice is to design your bot to be a specialist that is an expert at a few specific tasks.

FreJun AI provides the foundational voice infrastructure. We handle the incredibly complex “telephony” part of the problem (connecting to the phone network, managing the real-time audio stream, and so on) and give you a simple API to control it all. This lets you focus on the “AI” part of the problem.

A webhook is an automated message that a platform (like FreJun AI) sends to your application when a specific event happens. For example, we send a webhook to your app when a call is answered or when a user has finished speaking, which is the trigger for your app to take its next action.

You do not need to be an AI expert to build a voice bot. While it helps to understand the concepts, you do not need to build your own AI models. You can use powerful, pre-trained STT, LLM, and TTS models from major providers (like Google, OpenAI, etc.) and integrate them into your application.

Your bot’s voice and personality are very important. The voice you choose (via TTS) and the words you write for its script will define the user’s experience. You should design a personality that is consistent with your brand, whether it is professional and formal or friendly and casual.

The “happy path” is the ideal, straightforward conversation where the user provides all the information correctly and the bot can easily resolve their request. Designing this path first is a core step, but you must also design for the “unhappy paths” where things go wrong.

A critical part of your design must be a clear and easy escalation path. If the bot does not understand a user’s request after one or two tries, or if the user simply says, “I need to talk to a human,” the bot should be able to seamlessly transfer the call to a live agent.