You have successfully built and deployed a voice bot in English. It is intelligent, efficient, and your customers love it. Now, it is time to take your business global. Suddenly, you are faced with a new and formidable challenge: how do you teach your brilliant AI to speak Spanish, German, Japanese, and a dozen other languages?

The process of localization for a voice bot is far more complex than simply translating a website. It is a deep and nuanced endeavor that touches every layer of your technology stack, from the AI’s “ears” to its “mouth,” and even its “personality.”

Building voice bots for a global audience requires a deliberate and sophisticated strategy that goes far beyond simple, word-for-word translation. A successful multilingual voice agent must not only understand and speak different languages, but it must also be sensitive to cultural nuances, regional accents, and local compliance requirements.

This guide will provide a comprehensive framework for how to approach localization, covering the key technical components, design considerations, and translation workflows for AI that are essential for creating a truly global conversational experience.

Table of contents

Why is Voice Bot Localization So Much Harder Than Text?

A text-based chatbot can often get by with a high-quality machine translation of its script. A voice bot cannot. The spoken word introduces layers of complexity that make a simple translation insufficient and, in many cases, a recipe for failure. The unique challenges of voice include:

- The Complexity of Speech Recognition: Accurately transcribing spoken language is incredibly difficult. It requires an AI model that is not only trained on a specific language but also on the vast diversity of accents, dialects, and colloquialisms within that language.

- The Nuance of Spoken Language: The way people speak is often very different from the way they write. A direct translation of a formal, written script can sound robotic and unnatural when spoken aloud.

- The Importance of a Persona: The “voice” of your brand is literal in a voice bot. The tone, pacing, and personality of the synthesized speech are a critical part of the customer experience and must be carefully chosen for each language and culture.

- The Web of Regional Regulations: Regional compliance for voice is a major consideration. Different countries have different laws about call recording, data storage, and what information can be collected over the phone.

Also Read: Why Latency Matters: Optimizing Real-Time Communication with Voice Calling SDKs

What is the Core Technology Stack for a Multilingual Voice Bot?

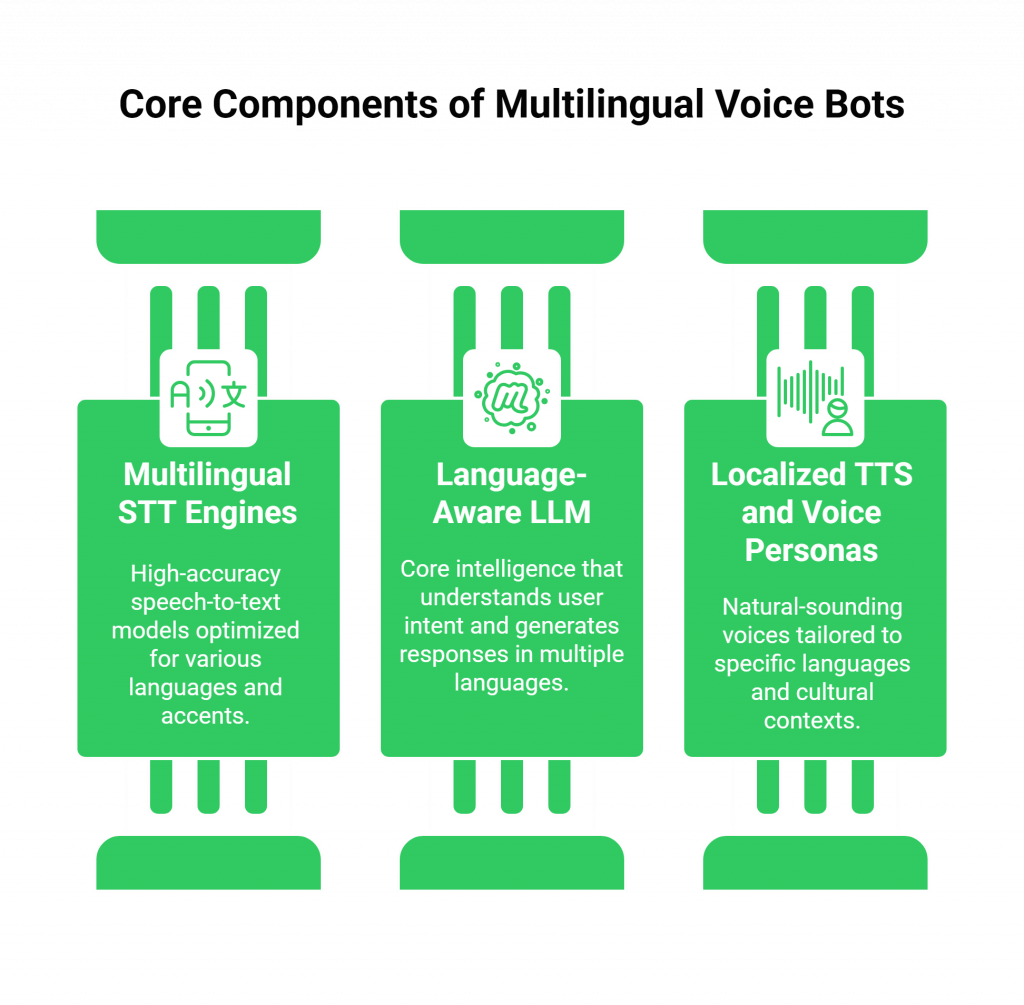

Building voice bots that can operate on a global scale requires a flexible, model-agnostic architecture. You need the ability to “plug in” the best-in-class AI models for each specific language you want to support. This is a core principle of the FreJun AI platform. Your multilingual technology stack will have three primary AI components:

The “Ears”: Multilingual STT (Speech-to-Text) Engines

This is your first and most critical choice. Your Speech-to-Text engine is responsible for transcribing the caller’s spoken words into text.

- The Requirement: You must use an STT provider that offers high-accuracy models specifically trained for each of your target languages.

- The Best Practice: Look for providers that offer accent-specific optimization. For example, a high-quality STT engine will have different models for Mexican Spanish versus Castilian Spanish, or for Australian English versus American English. This is crucial for achieving the accuracy needed for a successful conversation.

The “Brain”: A Language-Aware LLM (Large Language Model)

This is the core intelligence of your bot. The LLM must be able to understand the user’s intent and generate a response.

- The Requirement: Most modern, large-scale LLMs (like OpenAI’s GPT-4 or Google’s Gemini) are inherently multilingual and can process and respond in dozens of languages.

- The Best Practice: While the core LLM is multilingual, your “prompt engineering” and business logic must be designed with localization in mind. You may need to provide the LLM with specific, localized examples and context to ensure its responses are culturally appropriate.

The “Mouth”: Localized TTS (Text-to-Speech) and Voice Personas

This is the final and most experiential part of the stack. Your Text-to-Speech engine synthesizes the AI’s text response into audible speech.

- The Requirement: You must choose a TTS provider that offers a variety of high-quality, natural-sounding voices for each of your target languages.

- The Best Practice: This is where you develop your localized voice personas. The voice you choose for your German customers should feel German. The voice for your Japanese customers should feel Japanese. This involves selecting a voice with the right gender, age, tone, and accent to represent your brand appropriately in that specific market.

This table summarizes the technology stack for localization.

| Component | Function | Key Localization Consideration |

| STT Engine | Transcribes user’s speech to text. | Must have high-accuracy models for each language and support accent-specific optimization. |

| LLM | Understands intent and generates a text response. | Must be a powerful, multilingual model; your prompts may need to be localized. |

| TTS Engine | Synthesizes the AI’s text response into speech. | Must have a library of high-quality, natural voices to create localized voice personas. |

| Voice Platform | Connects the call and streams the audio. | Must be model-agnostic to allow you to plug in the best STT/LLM/TTS for each language. |

Ready to start building a voice bot that can speak to the world? Sign up for FreJun AI and explore our model-agnostic voice infrastructure.

Also Read: From Text Chatbots to Voice Agents: How a Voice Calling SDK Bridges the Gap

What Are the Key Steps in the Localization Workflow?

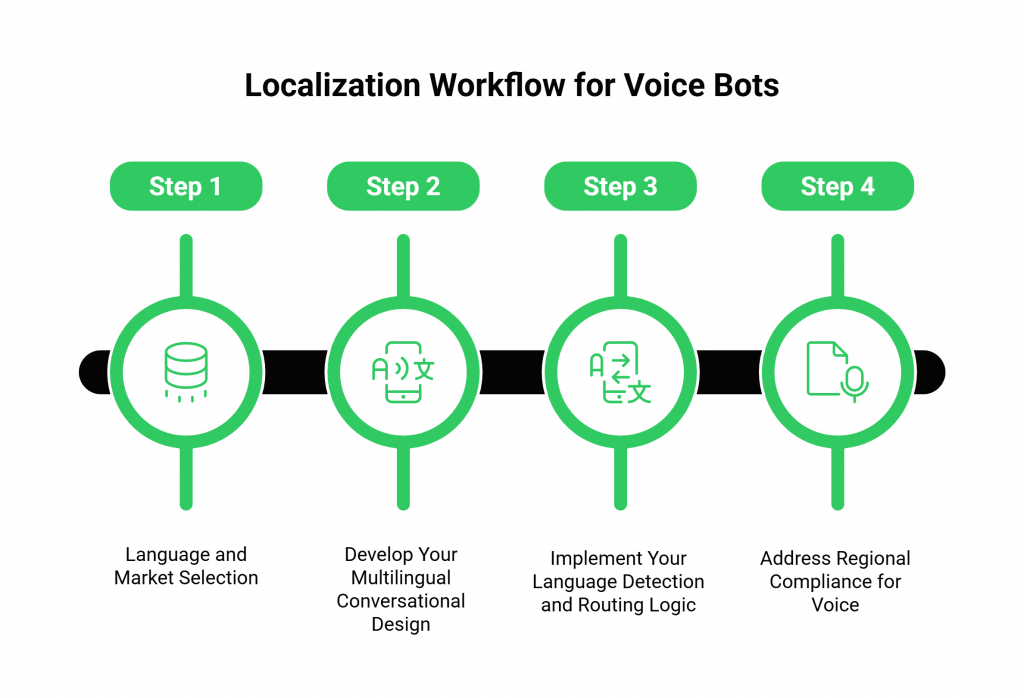

A successful localization project is a systematic process that combines technology, linguistics, and cultural expertise.

Step 1: Language and Market Selection

You cannot launch in every language at once. Start with a data-driven approach. Analyze your website traffic, your customer data, and your market expansion goals to prioritize the 2-3 most important languages to launch after your primary one.

Step 2: Develop Your Multilingual Conversational Design

This is the core of your translation workflows for AI. It is not just about translating your English script.

- Transcreation, Not Translation: The goal is “transcreation”, adapting the meaning, intent, and cultural nuance of your conversation, not just the literal words. For example, a joke that is funny in English might be confusing or even offensive in another culture.

- Engage Native Speakers: It is absolutely essential to work with native speakers of the target language to review and refine your conversational scripts. They will be able to catch the subtle cultural and linguistic errors that a machine translation would miss.

- Localize Key Data Points: Remember to localize things like dates, times, currencies, and units of measurement.

Step 3: Implement Your Language Detection and Routing Logic

Your application’s code needs to be able to handle multiple languages.

- Language Detection: Your voice bot should be able to ask the user which language they prefer at the beginning of the call (“For English, press one. Para español, oprima dos.”).

- Dynamic Model Routing: Based on the user’s choice, your application’s logic must then route the audio and text to the correct set of STT, LLM, and TTS models for that specific language. This is where a model-agnostic platform like FreJun AI is essential.

Step 4: Address Regional Compliance for Voice

Regional compliance for voice is a critical and often-overlooked step.

- Call Recording Consent: The laws regarding notifying a user that a call is being recorded vary dramatically by region. You must build logic to play the appropriate legal disclosure based on the caller’s country.

- Data Sovereignty: Some countries have laws that require customer data to be stored within that country’s borders. You may need to architect your application to accommodate these data residency requirements.

Also Read: Security in Voice Calling SDKs: How to Protect Real-Time Audio Data

Conclusion

Building voice bots for a global audience is a complex but incredibly rewarding endeavor. It is a journey that requires a deep appreciation for the nuances of language and culture, and a technology stack that is flexible, powerful, and built for a multilingual world.

By moving beyond simple translation and embracing a holistic localization strategy that includes multilingual STT engines, carefully crafted localized voice personas, and a systematic approach to translation workflows for AI, you can create an experience that truly resonates with customers in any market.

The key is to build on a model-agnostic foundation that gives you the freedom to choose the best-in-class tools for every language, allowing you to speak to the world, one conversation at a time.

Want to do a technical deep dive into how our model-agnostic platform can support your multilingual voice bot strategy? Schedule a demo with our team at FreJun Teler.

Also Read: Cloud Telephony Features That Boost Sales Team Output

Frequently Asked Questions (FAQs)

The first step is a strategic one: use your business data to decide which languages to prioritize. Do not try to launch in ten languages at once. Start with the one or two that will have the biggest impact on your customer base or your expansion goals.

Translation is the literal, word-for-word conversion of a text. Transcreation is the process of adapting the meaning, intent, and cultural nuance of a message for a specific locale. For a conversational experience, transcreation is essential for building voice bots that feel natural.

Multilingual STT engines are Speech-to-Text services that offer high-accuracy transcription models for many different languages. A key feature to look for is accent-specific optimization to ensure the best possible accuracy.

A localized voice persona is the unique voice and conversational style you choose for your AI in a specific region. It involves selecting a Text-to-Speech voice that has a culturally appropriate accent, gender, and tone for that market.

A sophisticated localization strategy will treat these as separate languages. You will need to use a different STT model that is tuned for that specific dialect and a different TTS voice that has the correct accent to create the best possible user experience.

The biggest challenge in translation workflows for AI is ensuring that the translated scripts are not just linguistically correct, but also conversationally natural and culturally appropriate. This almost always requires a final review by a native human speaker.

FreJun AI provides a model-agnostic voice infrastructure. This is critical for localization because it gives you the freedom to choose and “plug in” the absolute best STT and TTS providers for each specific language you need to support, rather than being locked into a single provider’s limited language options.

Regional compliance for voice refers to adhering to the specific laws and regulations of a country or region regarding voice communication. This includes things like call recording consent laws (e.g., one-party vs. two-party consent) and data privacy regulations like GDPR.

Testing requires engaging native speakers from the target region. They need to have real conversations with the bot to test for linguistic accuracy, conversational flow, and cultural appropriateness. You cannot effectively test a Spanish voice bot if your entire QA team only speaks English.

In most cases, the core business logic (e.g., how to look up a balance or book an appointment) will remain the same. However, the conversational logic and the “script” of the conversation must be localized to be effective.