In the world of technology, the only certainty is change. The programming language that is in vogue today could be a legacy skill tomorrow. The revolutionary AI model that is dominating the headlines this year will inevitably be surpassed by a newer, more powerful one next year.

For a developer or a CTO, this relentless pace of innovation creates a profound and constant challenge: how do you build an application today that will not be a technical fossil in three years? This is the challenge of “future-proofing.”

And in the rapidly evolving world of voice technology, the single most important decision you will make to future-proof your application is the choice of your foundational voice API for developers.

A common mistake is to view a voice API as a simple commodity, a “pipe” to the phone network. This is a dangerously short-sighted perspective. The architectural philosophy of your chosen voice platform will have a massive and long-lasting impact on your application’s ability to adapt, to scale, and to incorporate the next wave of innovation.

A future-proof voice API does more than solve today’s problems; it provides a strategic foundation explicitly designed to embrace tomorrow’s uncertainties. This guide explores the key architectural principles that define a modern voice architecture and explains how they enable a scalable AI voice platform built to last.


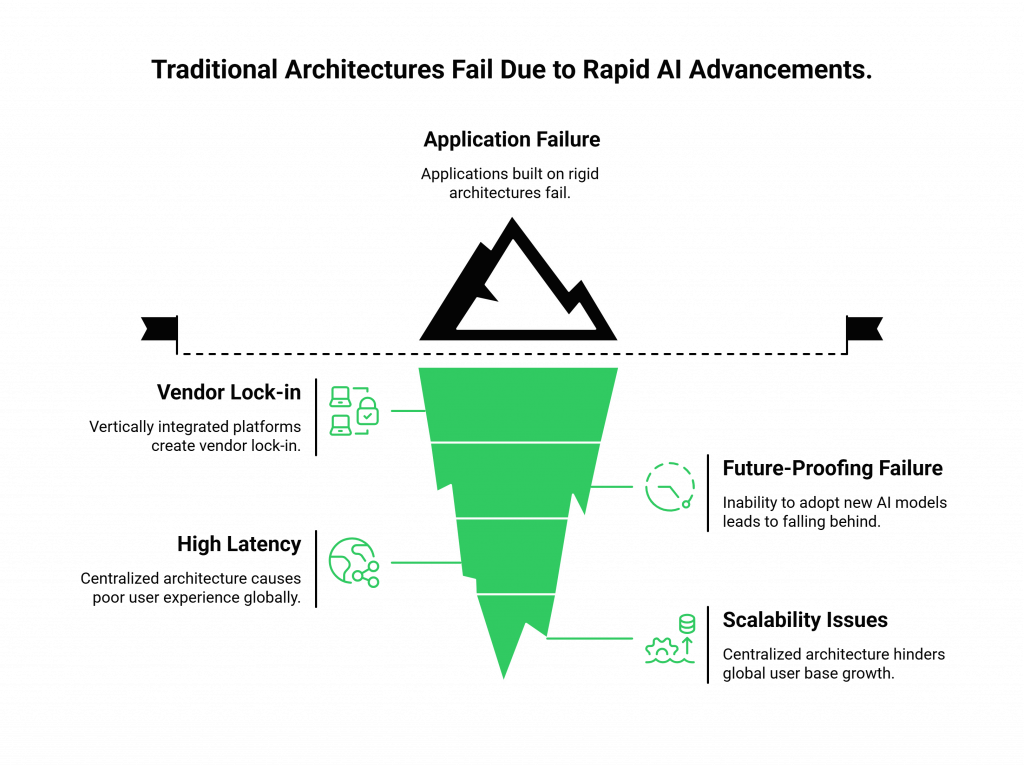

The “Pace of Change” Problem: Why Traditional Architectures Fail

The future of voice AI is arriving at a blistering pace. The capabilities of Speech-to-Text (STT), Large Language Models (LLMs), and Text-to-Speech (TTS) are advancing rapidly, with each new generation of models displacing the last. This creates a huge problem for any application that is built on a rigid, closed, or monolithic architecture.

The Trap of the Vertically Integrated, “Walled Garden”

Many first-generation voice platforms offered a vertically integrated, “all-in-one” solution. They provided the voice connectivity and their own, proprietary AI models (STT, TTS, etc.) in a single, closed package.

- The Problem: This model creates a powerful and dangerous form of vendor lock-in. Your application’s “intelligence” is completely tied to a single provider’s AI stack. You are at the mercy of their product roadmap, their innovation speed, and their pricing.

- The Future-Proofing Failure: When a new, breakthrough AI model is released by a different company, you are stuck. You cannot adopt it. Your application is instantly a generation behind the competition, and the cost of migrating your entire voice infrastructure to a new provider is enormous.


The Limitations of a Centralized, Non-Global Architecture

Many early cloud platforms were built on a centralized architecture, with a few massive data centers in one or two geographic regions.

- The Problem: This model is fundamentally at odds with the demands of a global, real-time application. A user in Asia connecting to a voice application hosted in North America will typically get a high-latency, poor-quality experience, because every audio packet has to cross an ocean and come back.

- The Future-Proofing Failure: As your application’s user base grows globally, this centralized architecture becomes an anchor that drags down your user experience and prevents you from providing a world-class service in new markets.

The Architectural Principles of a Future-Proof Voice API

A future-proof voice API uses modern, cloud-native architectural principles to address the twin challenges of change and scale.

Principle 1: A Radically Model-Agnostic and Decoupled Architecture

This is the single most important principle. It is the philosophy of separating the “voice” (the infrastructure) from the “brain” (the intelligence).

- The Architecture: A model-agnostic voice API for developers, like the one from FreJun AI, acts as a flexible, open “bridge.” Its core job is to provide a high-performance, low-latency connection to the global telephone network and to give you a raw, real-time stream of the call’s audio.

- The Future-Proof Benefit: You have complete freedom to take that audio stream and send it to any AI model, from any provider, at any time. This allows you to:

  - Build a “best-in-class” AI stack by mixing and matching the best STT, LLM, and TTS models from different vendors.

  - Instantly adopt new, breakthrough AI models as soon as they are released, without ever having to change your core voice infrastructure.

  - Avoid vendor lock-in and maintain a position of strategic flexibility.
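To make the separation of “voice” and “brain” concrete, here is a minimal sketch in Python. It assumes each AI stage sits behind a small interface so that any vendor can be swapped in. The class and method names (`SpeechToText`, `VoicePipeline`, `handle_audio`, and the stand-in providers) are hypothetical and are not part of any FreJun AI SDK; real providers would wrap a vendor's own client library.

```python
from dataclasses import dataclass
from typing import Protocol


class SpeechToText(Protocol):
    def transcribe(self, audio: bytes) -> str: ...


class LanguageModel(Protocol):
    def reply(self, prompt: str) -> str: ...


class TextToSpeech(Protocol):
    def synthesize(self, text: str) -> bytes: ...


@dataclass
class VoicePipeline:
    """Wires three independent providers behind one audio-handling method."""
    stt: SpeechToText
    llm: LanguageModel
    tts: TextToSpeech

    def handle_audio(self, audio: bytes) -> bytes:
        text = self.stt.transcribe(audio)    # audio in -> transcript
        answer = self.llm.reply(text)        # transcript -> response text
        return self.tts.synthesize(answer)   # response text -> audio out


# Stand-in providers for illustration only (hypothetical, no real vendor calls).
class EchoSTT:
    def transcribe(self, audio: bytes) -> str:
        return audio.decode("utf-8")


class UppercaseLLM:
    def reply(self, prompt: str) -> str:
        return prompt.upper()


class ReverseLLM:
    def reply(self, prompt: str) -> str:
        return prompt[::-1]


class BytesTTS:
    def synthesize(self, text: str) -> bytes:
        return text.encode("utf-8")


pipeline = VoicePipeline(stt=EchoSTT(), llm=UppercaseLLM(), tts=BytesTTS())
first = pipeline.handle_audio(b"hello")

# Swapping the "brain" changes one field; the voice layer is untouched.
pipeline.llm = ReverseLLM()
second = pipeline.handle_audio(b"hello")
```

The point of the design is that `VoicePipeline` depends only on the three interfaces, so adopting a newly released model means writing one small adapter class rather than migrating the telephony layer.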

Principle 2: A Globally Distributed, Edge-Native Network

This is the key to providing a high-performance experience, both today and in the future.

- The Architecture: A modern voice architecture uses a globally distributed edge network of interconnected servers located close to end users.

- The Future-Proof Benefit: This architecture tackles network latency for a global user base by shortening the physical distance that audio has to travel. As AI models get faster and faster, network latency accounts for a growing share of the delay in a real-time conversation. Because signal propagation is bounded by the speed of light, an edge-native architecture stays valuable no matter how fast the models become.
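A back-of-the-envelope calculation shows why distance matters. The sketch below assumes light travels through optical fiber at roughly 200,000 km/s (about two thirds of its speed in a vacuum) and ignores routing, queuing, and processing delays, so the results are lower bounds. The two path lengths are hypothetical examples, not measurements of any real network.

```python
# Approximate speed of light in optical fiber, about 2/3 of c (assumption).
FIBER_SPEED_KM_PER_S = 200_000


def min_round_trip_ms(path_km: float) -> float:
    """Lower bound on round-trip time for a one-way fiber path of path_km."""
    return 2 * path_km * 1000 / FIBER_SPEED_KM_PER_S


far = min_round_trip_ms(12_000)  # hypothetical intercontinental path
near = min_round_trip_ms(500)    # hypothetical nearby edge location

print(f"distant region: {far:.0f} ms, nearby edge: {near:.0f} ms")
```

Under these assumptions the distant path costs at least 120 ms per round trip before any AI processing starts, while the nearby edge location costs about 5 ms. No model speedup can recover that difference.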


Principle 3: A 100% API-First and Programmable Foundation

- The Architecture: The platform exposes every function, from buying phone numbers to controlling live call media, through clean, well-documented, robust APIs.

- The Future-Proof Benefit: This makes the entire voice infrastructure a piece of software. It can be version-controlled, automated, and integrated into a modern CI/CD pipeline. This allows your team to build, test, and deploy new voice features with the same speed and agility that they use for the rest of your application.
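As a rough illustration of treating voice infrastructure as software, the sketch below compares a version-controlled list of desired phone-number routes with the current live state and produces a plan of changes, much as an infrastructure-as-code tool would. The `NumberConfig` schema, the `plan_changes` function, and the phone numbers and URLs are all hypothetical; a real pipeline would read live state from, and apply the plan through, the provider's actual API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NumberConfig:
    """Desired routing for one phone number (hypothetical schema)."""
    number: str
    webhook_url: str


def plan_changes(desired: list[NumberConfig], live: dict[str, str]) -> list[str]:
    """Compare version-controlled config with live state and list needed actions."""
    actions = []
    wanted = {cfg.number: cfg.webhook_url for cfg in desired}
    for number, url in sorted(wanted.items()):
        if number not in live:
            actions.append(f"provision {number} -> {url}")
        elif live[number] != url:
            actions.append(f"update {number} -> {url}")
    # Numbers that exist live but are absent from the config get released.
    for number in sorted(live.keys() - wanted.keys()):
        actions.append(f"release {number}")
    return actions


# Desired state, as it might be checked into a repository.
desired = [
    NumberConfig("+15550100", "https://example.com/voice/support"),
    NumberConfig("+15550101", "https://example.com/voice/sales"),
]
# Live state, as it might be fetched from the platform (made-up values).
live = {
    "+15550100": "https://example.com/voice/old",
    "+15550199": "https://example.com/voice/legacy",
}

plan = plan_changes(desired, live)
for step in plan:
    print(step)
```

Because the plan is computed from plain data, it can be reviewed in a pull request and run in CI before anything touches production numbers.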

This table provides a clear summary of how these principles create a future-proof platform.

| Architectural Principle | How It Future-Proofs Your Application |
| --- | --- |
| Model-Agnostic & Decoupled | Gives you the freedom to constantly adopt the best, most advanced AI models as they are released, preventing your AI from becoming obsolete. |
| Globally Distributed & Edge-Native | Provides the low-latency foundation required for the increasingly real-time and interactive voice applications of the future. |
| 100% API-First & Programmable | Allows you to manage your voice infrastructure as code, enabling the speed, agility, and automation of a modern DevOps workflow. |

Ready to build your voice application on a foundation that is designed for the future? Sign up for FreJun AI and explore our future-ready voice platform.

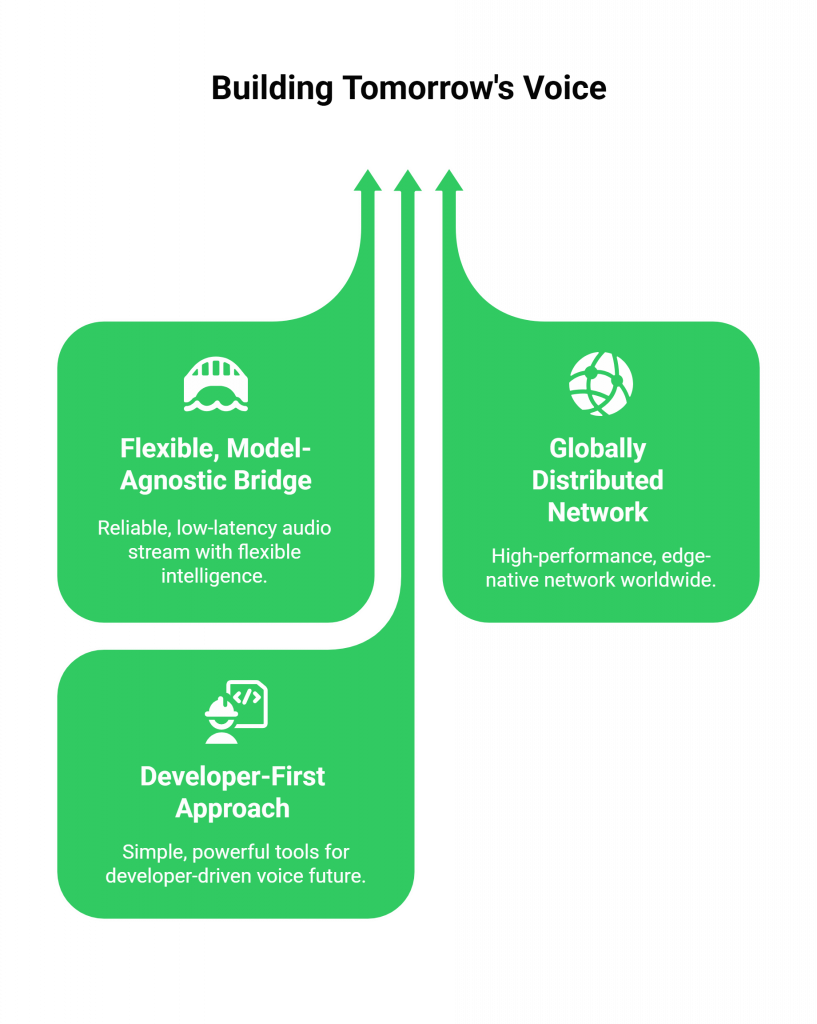

How FreJun AI Embodies This Future-Focused Vision

At FreJun AI, we are not just building a voice API for the applications of today; we are building the foundational scalable AI voice platform for the innovations of tomorrow. Our entire Teler engine and its associated APIs were architected from the ground up based on these three core, future-proofing principles.

- We are a flexible, model-agnostic bridge. Our core mission is to provide you with the most reliable, lowest-latency, and most powerful real-time audio stream on the planet. The “intelligence” you connect to that stream is entirely your choice.

- We operate a globally distributed, edge-native network that delivers high performance to users worldwide.

- We are a radically developer-first company. We believe that the future of voice will be built by developers, and we are obsessed with providing them with the simple, powerful, and elegant tools they need to build it. This is our core promise: “We handle the complex voice infrastructure so you can focus on building your AI.”


Conclusion

In the rapidly accelerating world of voice technology, the only losing move is to stand still. Choosing a voice API for developers that is built on a closed, monolithic, or centralized architecture is a decision to stand still. It is a decision that will leave your application vulnerable to the relentless pace of AI innovation.

A future-proof voice API embraces change by enabling flexible, low-latency, API-first platforms that support continuous innovation.

By choosing such an API, you build a scalable AI voice platform that is ready to adapt, to evolve, and to lead in the exciting and unpredictable future of voice.

Want to do a deep architectural dive into how our platform is designed to be a future-proof foundation for your voice applications? Schedule a demo with our team at FreJun Teler.


Frequently Asked Questions (FAQs)

A future-proof voice API uses a flexible, model-agnostic, globally distributed architecture to easily adopt emerging AI and communication technologies.

A model-agnostic architecture is important because it prevents vendor lock-in. It gives you the freedom to always use the best-in-class AI models from any provider, ensuring your application remains competitive.

A modern voice architecture is cloud-native and API-first. It is also globally distributed and edge-native. This design prioritizes low latency, scalability, and developer flexibility.

A scalable AI voice platform works by decoupling the voice infrastructure from the AI logic. The infrastructure is built on an elastic, cloud-native foundation that can scale automatically to handle any call volume.

The biggest risk of a closed, all-in-one voice platform is limiting your application to one vendor’s innovation pace. Your capabilities may stagnate over time, and you may miss breakthrough AI technologies from other providers that advance faster.

As AI models get faster, network latency becomes the biggest performance bottleneck. An edge-native network is the best way to minimize this latency, ensuring your platform is fast enough for future, real-time AI.

“API-first” means that the API is the core product. Every single function of the platform is designed to be controlled programmatically, which is essential for modern, automated development workflows.