For the past decade, the chatbot has been the undisputed king of automated customer service. Businesses have invested billions in building sophisticated, text-based bots that can answer questions, solve problems, and guide users through complex workflows. These bots, powered by ever-smarter AI and Large Language Models (LLMs), have become incredibly intelligent.

But for all their intelligence, they have been trapped in a world of silence, confined to a small chat window at the bottom of a screen. The next great leap in the evolution of automated interaction is to give these brilliant AI brains a voice. The technology that acts as the essential bridge to make this leap possible is the modern voice calling SDK.

The process of AI chatbot to voice conversion is far more complex than simply plugging a microphone and a speaker into your existing chatbot logic. A voice conversation is a fundamentally different medium than a text chat. It is a real-time, synchronous, and deeply nuanced interaction that requires a completely new layer of technology to manage.

A voice calling SDK provides this critical layer, handling the immense complexity of real-time audio and allowing developers to focus on what they do best: building the intelligence of the conversation.


Why is a Voice Conversation So Much Harder Than a Text Chat?

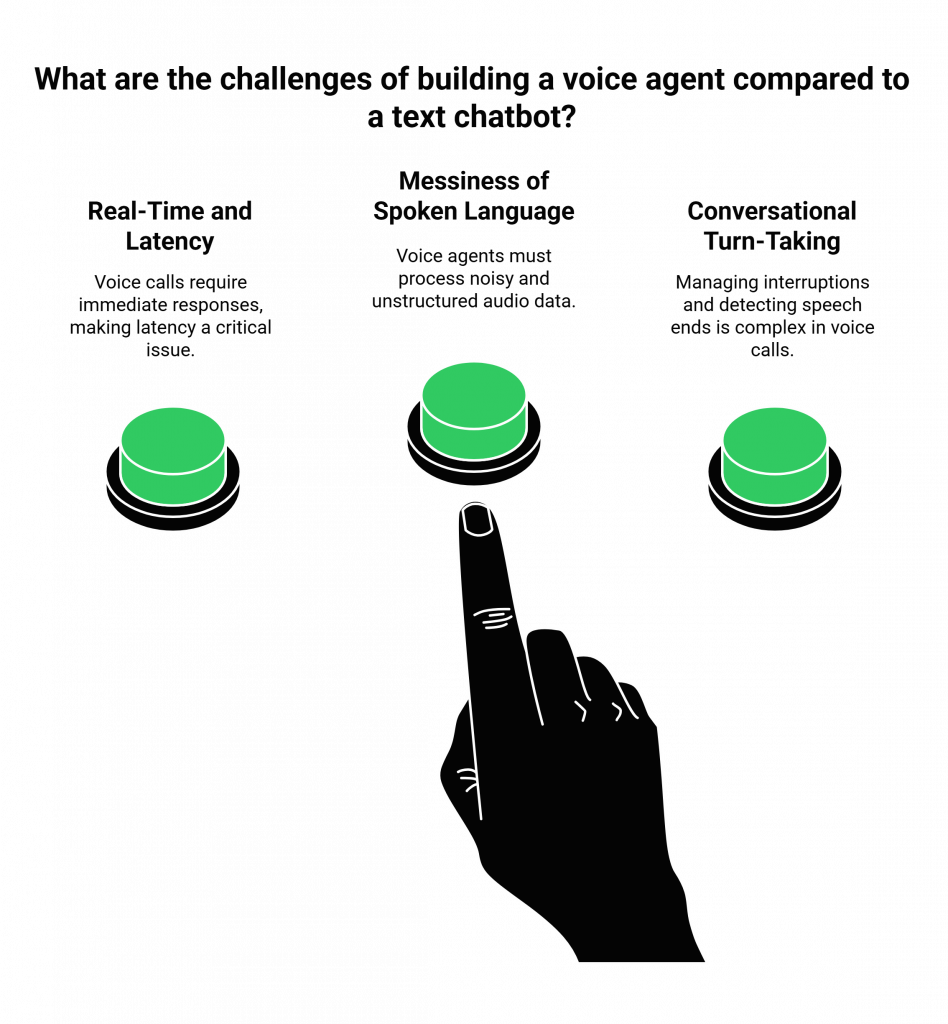

Before we can appreciate the role of the SDK, we must first understand the unique and formidable challenges of a real-time voice conversation. A developer who has mastered building a text chatbot will find that a voice agent introduces a whole new set of problems to solve.

The Tyranny of Real-Time and Latency

This is the single biggest difference.

- Text Chat: A text chat is asynchronous. It is perfectly acceptable for a user to type a message and wait a few seconds for the bot to respond. The user can even switch to another tab while they wait.

- Voice Call: A voice call is synchronous and brutally real-time. Any delay of more than a few hundred milliseconds between the user finishing a sentence and the AI starting to respond is perceived as an awkward, unnatural pause. This latency shatters the illusion of a real conversation and is a major source of user frustration. A recent study on customer patience found that the average person is only willing to wait on hold for about 45 seconds, and a slow, laggy AI can feel just as frustrating.
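To make the "few hundred milliseconds" target concrete, it helps to treat response delay as a budget that each stage of the voice pipeline spends in sequence. The sketch below is a minimal Python illustration. The stage names and millisecond values are hypothetical placeholders, not measurements of any particular STT, LLM, or TTS provider.

```python
from dataclasses import dataclass


@dataclass
class StageLatency:
    name: str
    ms: float  # hypothetical delay this stage adds, in milliseconds


def total_response_delay(stages: list[StageLatency]) -> float:
    """Silence the caller hears between finishing a sentence and hearing a reply."""
    return sum(stage.ms for stage in stages)


def within_budget(stages: list[StageLatency], budget_ms: float) -> bool:
    """True if the end-to-end delay fits inside the target budget."""
    return total_response_delay(stages) <= budget_ms


# Placeholder values for illustration only.
pipeline = [
    StageLatency("end-of-speech detection", 200),
    StageLatency("speech-to-text final transcript", 150),
    StageLatency("LLM time to first token", 250),
    StageLatency("text-to-speech time to first audio", 100),
    StageLatency("network round trips", 80),
]

if __name__ == "__main__":
    for stage in pipeline:
        print(f"{stage.name:40s} {stage.ms:6.0f} ms")
    print(f"{'total':40s} {total_response_delay(pipeline):6.0f} ms")
```

With these placeholder values the stages add up to 780 ms, which shows how quickly individually reasonable delays compound. Streaming each stage (for example, starting TTS on the first LLM tokens instead of the full reply) is one common way to pull the total down.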

The Messiness of Spoken Language

Text is a clean, structured input. The spoken word is not.

- Text Chat: The chatbot receives a perfectly formed string of text.

- Voice Call: A voice agent receives a chaotic stream of audio data. It has to deal with background noise, different accents, people who start and stop their sentences, and the use of filler words like “um” and “uh.” This requires a sophisticated pipeline of technology just to turn that messy audio into a clean piece of text.
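Most of that cleanup (noise, accents) happens inside the STT engine itself. One small, easy-to-show piece is normalizing the transcript before it reaches chatbot logic that was written for typed input. The sketch below assumes a plain-text transcript and a hypothetical filler-word list; it deliberately ignores harder cases such as false starts.

```python
# Hypothetical filler-word list; a production system would tune this per
# language and would usually rely on the STT engine's own options first.
FILLER_WORDS = {"um", "uh", "erm", "hmm"}


def clean_transcript(raw: str) -> str:
    """Remove standalone filler words from a raw STT transcript."""
    kept = []
    for token in raw.split():
        # Compare without surrounding punctuation, so "Um," matches "um".
        if token.strip(".,!?;:").lower() in FILLER_WORDS:
            continue
        kept.append(token)
    return " ".join(kept)


if __name__ == "__main__":
    print(clean_transcript("Um, I want to uh check my order"))
    # prints: I want to check my order
```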

The Nuances of Conversational Turn-Taking

In a chat, the “enter” key clearly signals the end of a turn. In a conversation, the signals are much more subtle.

- Text Chat: The bot can wait until it receives a complete message before it starts “thinking.”

- Voice Call: A voice agent needs to detect when a user has finished speaking, but it also needs to handle interruptions. A user might start to speak while the AI is still talking; supporting this is a feature called “barge-in.” Managing this natural back-and-forth is a complex, real-time challenge.
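The simplest form of end-of-speech detection is an energy threshold: once the caller has spoken, wait for a run of quiet audio frames before declaring the turn over. The Python sketch below shows that idea under stated assumptions: audio arrives as fixed-size frames of samples scaled to the range -1.0 to 1.0, and the threshold and silence length are hypothetical tuning parameters.

```python
import math
from typing import Optional, Sequence


def frame_rms(samples: Sequence[float]) -> float:
    """Root-mean-square energy of one audio frame (samples scaled to -1.0..1.0)."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def detect_end_of_speech(
    frame_energies: Sequence[float],
    threshold: float,
    min_silence_frames: int,
) -> Optional[int]:
    """Return the index of the frame at which the user's turn is judged over.

    A turn ends once speech has been heard and is then followed by
    `min_silence_frames` consecutive frames below `threshold`.
    Returns None if no end of speech has been found yet.
    """
    heard_speech = False
    silent_run = 0
    for i, energy in enumerate(frame_energies):
        if energy >= threshold:
            heard_speech = True
            silent_run = 0  # any speech resets the silence counter
        elif heard_speech:
            silent_run += 1
            if silent_run >= min_silence_frames:
                return i
    return None
```

With 20 ms frames, `min_silence_frames=25` means waiting for 500 ms of silence. Too short a window cuts callers off mid-pause; too long a window adds directly to the latency budget. Production systems often use trained voice-activity-detection models rather than a fixed energy threshold.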

What is a Voice Calling SDK and How Does It Act as the Bridge?

A voice calling SDK (Software Development Kit) is a powerful layer of abstraction. It is a set of software libraries and tools that takes the immense, underlying complexity of the global telephone network and real-time audio processing and presents it to a developer as a simple, programmable set of features. It is the essential toolkit for voice-enabling chatbots.

The SDK is the bridge that solves the hard problems of voice, allowing your existing chatbot’s “brain” to participate in a real-time call. It handles four critical functions that a text-based chatbot never has to deal with.

This table illustrates the core functions the SDK provides to bridge the gap:

| Challenge | Text Chatbot Solution | How the Voice Calling SDK Solves It |
| --- | --- | --- |
| Input | Receives a clean string of text. | Provides a real-time audio stream and enables TTS and STT integration. |
| Output | Sends a clean string of text. | Provides an API to “inject” a synthesized audio stream into the live call. |
| Latency | Not a critical issue. | Is built on a globally distributed, low-latency infrastructure to minimize delay. |
| Turn-Taking | Based on the “enter” key. | Provides real-time events for end-of-speech detection and barge-in. |
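In application code, the turn-taking row of the table usually surfaces as event callbacks. The sketch below is a generic, minimal event registry in Python. The event names (`"media"`, `"speech_ended"`, `"barge_in"`) and payload fields are hypothetical and do not correspond to any specific SDK's API.

```python
from collections import defaultdict
from typing import Callable, DefaultDict

Handler = Callable[[dict], None]


class CallEvents:
    """Tiny publish/subscribe registry for real-time call events."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, list[Handler]] = defaultdict(list)

    def on(self, event: str, handler: Handler) -> None:
        """Register a handler to run whenever `event` is emitted."""
        self._handlers[event].append(handler)

    def emit(self, event: str, payload: dict) -> int:
        """Run every handler registered for `event`; return how many ran."""
        handlers = self._handlers.get(event, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)


if __name__ == "__main__":
    events = CallEvents()
    events.on("media", lambda p: print(f"got {len(p['chunk'])} bytes of caller audio"))
    events.on("speech_ended", lambda p: print(f"caller finished: {p['transcript']!r}"))
    events.on("barge_in", lambda p: print("caller interrupted; stop playback"))

    events.emit("media", {"chunk": b"\x00" * 320})
    events.emit("speech_ended", {"transcript": "where is my order"})
    events.emit("barge_in", {})
```

Keeping the handlers small and non-blocking matters here, because every event arrives while the call is live.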

The Critical Role of TTS and STT Integration

A voice calling SDK is the enabler for your TTS and STT integration.

- The “Ears” (STT): The SDK’s real-time media streaming feature is what captures the live audio from the phone call and pipes it to your chosen Speech-to-Text (STT) engine.

- The “Mouth” (TTS): After your LLM has generated a text response, you use the SDK’s API to send the audio synthesized by your Text-to-Speech (TTS) engine back into the call for the user to hear.

The SDK acts as the sophisticated, high-speed “plumbing” between the phone call and your AI’s senses.
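Put together, one conversational turn is a chain of three hand-offs. The sketch below uses Python's `asyncio` with stub functions: `speech_to_text`, `generate_reply`, `text_to_speech`, and the `send_audio` callback are hypothetical placeholders for your STT engine, your LLM, your TTS engine, and whatever method your voice SDK exposes for injecting audio into the call.

```python
import asyncio
from typing import Awaitable, Callable


# Hypothetical stand-ins: replace each with calls to your real STT engine,
# LLM, and TTS engine. They only simulate the data flow here.
async def speech_to_text(audio: bytes) -> str:
    await asyncio.sleep(0)        # placeholder for a network call
    return audio.decode("utf-8")  # pretend the "audio" bytes are already text


async def generate_reply(transcript: str) -> str:
    await asyncio.sleep(0)
    return f"You said: {transcript}"


async def text_to_speech(text: str) -> bytes:
    await asyncio.sleep(0)
    return text.encode("utf-8")   # pretend the encoded text is synthesized audio


async def handle_turn(
    audio_in: bytes,
    send_audio: Callable[[bytes], Awaitable[None]],
) -> str:
    """Run one conversational turn: ears (STT) -> brain (LLM) -> mouth (TTS)."""
    transcript = await speech_to_text(audio_in)
    reply = await generate_reply(transcript)
    audio_out = await text_to_speech(reply)
    await send_audio(audio_out)   # hypothetical: inject audio into the live call
    return reply


if __name__ == "__main__":
    async def print_audio(chunk: bytes) -> None:
        print(f"sending {len(chunk)} bytes back into the call")

    print(asyncio.run(handle_turn(b"where is my order", print_audio)))
```

A real agent would stream each stage (partial transcripts, token-by-token LLM output, chunked TTS audio) instead of waiting for each to finish, but the order of the hand-offs stays the same.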

Ready to give your chatbot a voice and bring it into the world of real-time conversation? Sign up for FreJun AI and explore our powerful voice calling SDK.

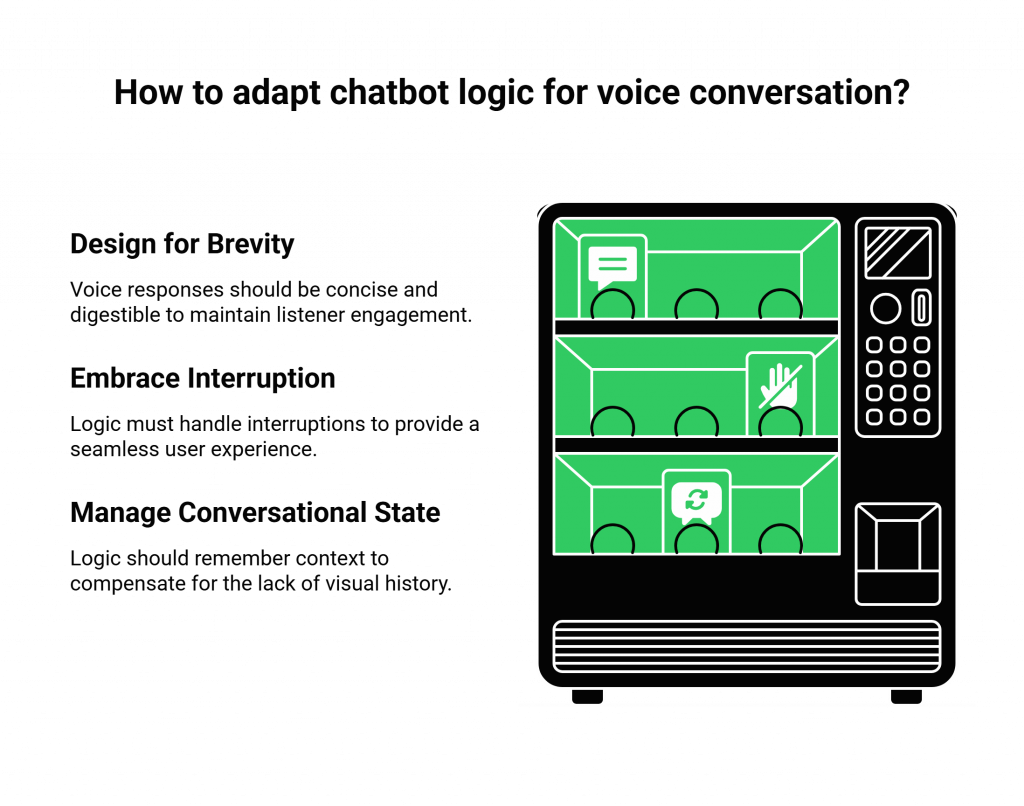

How Do You Adapt Your Chatbot’s Logic for a Voice Conversation?

The process of AI chatbot to voice conversion is not just a technical integration; it is also a shift in design philosophy. The logic that works well in a text chat needs to be adapted for the unique medium of voice.

Design for Brevity and Clarity

- Text Chat: A chatbot can send a long paragraph of text with multiple links. The user can read this at their own pace.

- Voice Agent: A voice agent must be much more concise. Long, rambling sentences are difficult for a listener to follow. The responses need to be broken down into shorter, more digestible chunks.
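One simple way to apply this is to split a generated reply at sentence boundaries and hand it to TTS in short pieces. The sketch below is a minimal Python version. The regular-expression sentence splitter and the 120-character default are illustrative assumptions, and a single sentence longer than the limit is kept whole rather than cut mid-sentence.

```python
import re


def chunk_for_speech(text: str, max_chars: int = 120) -> list[str]:
    """Group sentences into chunks of at most `max_chars` where possible.

    A single sentence longer than `max_chars` is kept whole rather than
    being cut mid-sentence.
    """
    # Naive sentence split: break after ., ! or ? followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    chunks: list[str] = []
    current = ""
    for sentence in sentences:
        candidate = f"{current} {sentence}".strip()
        if current and len(candidate) > max_chars:
            chunks.append(current)  # current chunk is full; start a new one
            current = sentence
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


if __name__ == "__main__":
    reply = "Your order shipped today. It should arrive Friday. Want the tracking number?"
    for piece in chunk_for_speech(reply, max_chars=60):
        print(piece)
```

Sending each chunk to TTS as soon as it is ready also gives the agent natural points to pause and listen for the caller.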

Embrace the Power of Interruption

- Text Chat: Interruption is not a concept.

- Voice Agent: You must design your logic to handle barge-in. If your AI is in the middle of a long explanation and the user says, “Okay, I get it,” your agent should be able to stop talking and immediately respond to the user’s new input. Handling interruptions this way is a key part of what makes LLM-powered voice agents feel natural.
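Barge-in handling often comes down to cancelling playback the moment the caller starts talking. The `asyncio` sketch below simulates this: `speak` stands in for streaming TTS audio into the call, and `user_started_speaking` is an `asyncio.Event` that a hypothetical voice-activity callback would set. A real integration would also need to tell the voice SDK to stop or clear any audio it has already queued.

```python
import asyncio


async def speak(chunks: list[str], spoken: list[str], delay: float) -> None:
    """Play reply chunks one at a time (stand-in for streaming TTS audio)."""
    for chunk in chunks:
        spoken.append(chunk)
        await asyncio.sleep(delay)  # simulated playback time for this chunk


async def run_with_barge_in(
    chunks: list[str],
    user_started_speaking: asyncio.Event,
    delay: float = 0.1,
) -> tuple[list[str], bool]:
    """Speak `chunks`, stopping early if the caller starts talking.

    Returns the chunks actually played and whether playback was interrupted.
    """
    spoken: list[str] = []
    playback = asyncio.create_task(speak(chunks, spoken, delay))
    barge_in = asyncio.create_task(user_started_speaking.wait())
    done, pending = await asyncio.wait(
        {playback, barge_in}, return_when=asyncio.FIRST_COMPLETED
    )
    for task in pending:
        task.cancel()  # stop whichever side did not finish first
    await asyncio.gather(*pending, return_exceptions=True)
    return spoken, playback not in done


async def demo() -> tuple[list[str], bool]:
    event = asyncio.Event()
    chunks = ["Part one.", "Part two.", "Part three.", "Part four."]

    async def caller_interrupts() -> None:
        await asyncio.sleep(0.15)  # caller says "Okay, I get it"
        event.set()

    interrupter = asyncio.create_task(caller_interrupts())
    result = await run_with_barge_in(chunks, event)
    await interrupter
    return result


if __name__ == "__main__":
    spoken, interrupted = asyncio.run(demo())
    print(spoken, interrupted)
```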

Manage the Conversational State with Precision

- Text Chat: The history of the chat is always visible on the screen.

- Voice Agent: The user is relying entirely on their memory. Your agent’s logic must be very good at managing the conversational state to remember the context of what was said earlier in the call, as there is no visual history to fall back on.
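A minimal way to do this is to keep a per-call state object with two parts: a rolling window of recent turns, and a set of durable facts (such as an order number) that must survive even after old turns are trimmed. The Python sketch below assumes this two-part design; the field names and the 10-turn window are illustrative, not a prescribed schema.

```python
from dataclasses import dataclass, field


@dataclass
class CallState:
    """Per-call memory for a voice agent (illustrative schema)."""
    max_turns: int = 10
    turns: list[tuple[str, str]] = field(default_factory=list)  # (speaker, text)
    facts: dict[str, str] = field(default_factory=dict)         # e.g. {"order_id": "A123"}

    def add_turn(self, speaker: str, text: str) -> None:
        self.turns.append((speaker, text))
        # Keep only the most recent turns so the LLM prompt stays short.
        if len(self.turns) > self.max_turns:
            self.turns = self.turns[-self.max_turns:]

    def remember(self, key: str, value: str) -> None:
        """Store a fact that must outlive the rolling turn window."""
        self.facts[key] = value

    def to_prompt_context(self) -> str:
        """Render facts and recent turns as text to prepend to the LLM prompt."""
        facts = "; ".join(f"{k}={v}" for k, v in self.facts.items()) or "none"
        history = "\n".join(f"{who}: {text}" for who, text in self.turns)
        return f"Known facts: {facts}\n{history}"
```

Separating facts from raw history means the agent can still say "your order A123" late in a long call, even after the turn where the caller said it has dropped out of the window.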

The future of voice AI is not just about making AI that can talk; it is about designing conversations that are a pleasure to participate in. A recent study on user experience found that 70% of customers say they have already used voice to conduct a search, a clear indicator that users are becoming increasingly comfortable with voice-first interfaces.

How Does FreJun AI Provide the Ideal SDK for This Transition?

At FreJun AI, our entire platform was architected with the demands of voice agents using LLMs in mind. Our voice calling SDK is more than just a tool for making calls; it is a comprehensive toolkit for building sophisticated, low-latency conversational AI.

- A Low-Latency, Edge-Native Foundation: Our Teler engine is a globally distributed network. This means the audio from your user’s call is processed at a location physically close to them, which is the single most important factor in creating a fast, responsive, and natural-sounding conversation.

- Powerful, Real-Time Media Streaming: Our SDK provides the robust, real-time media streaming capabilities that are the essential prerequisite for your TTS and STT integration.

- A Developer-First Experience: We handle the immense underlying complexity of global telecommunications, allowing your development team to focus on the part of the system they know best: the AI’s logic.
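The physics behind the point about processing audio close to the caller is easy to check. Light in optical fiber covers roughly 200 km per millisecond, so distance alone sets a floor on round-trip delay before any routing, queuing, or processing is added. The sketch below is a back-of-the-envelope calculation under that approximation; real network paths are longer than straight-line distance, so actual delays are higher.

```python
FIBER_KM_PER_MS = 200.0  # approx. speed of light in optical fiber (about 2/3 of c)


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip propagation delay over fiber.

    Ignores routing detours, queuing, and processing time, so real
    round trips are always longer.
    """
    return 2 * distance_km / FIBER_KM_PER_MS


if __name__ == "__main__":
    for km in (100, 1_000, 8_000):
        print(f"{km:>6} km -> at least {min_round_trip_ms(km):.0f} ms round trip")
```

A server 8,000 km away costs at least 80 ms per round trip, versus about 1 ms for one 100 km away. Because a single conversational turn can involve several network hops, that difference can add up quickly.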

Conclusion

The chatbot was a revolutionary step in the journey of automated customer interaction. But it was only the first step. The future belongs to the voice agent. The transition from a silent, text-based bot to a fully interactive, conversational voice agent is one of the most powerful upgrades a business can make to its customer experience.

But this transition is impossible without the right bridge. A modern, developer-first voice calling SDK is that bridge.

By providing the essential, high-performance “plumbing” for real-time audio, and the sophisticated tools for managing a live conversation, the SDK is the key technology that is finally allowing the brilliant minds of our AI to find their voice.

Want to see a live demonstration of how our SDK can be used to voice-enable an existing text-based AI? Schedule a demo with our team at FreJun Teler.

Frequently Asked Questions (FAQs)

What is a voice calling SDK?

A voice calling SDK (Software Development Kit) is a set of software libraries and tools that allows a developer to easily integrate voice calling features, like making and receiving phone calls and managing live conversations, directly into their own applications.

What is the main challenge in voice-enabling a chatbot?

The main challenge is handling the real-time, synchronous, and low-latency demands of a live phone call. A voice conversation is much less forgiving of delays than a text chat, which is why the underlying infrastructure is so important for voice-enabling chatbots.

What is TTS and STT integration?

TTS and STT integration is the core technical process of connecting your AI to a voice channel. Speech-to-Text (STT) is the “ears” that transcribe the user’s spoken words into text. Text-to-Speech (TTS) is the “mouth” that synthesizes your AI’s text response into audible speech.

What role does the SDK play for voice agents using LLMs?

For voice agents using LLMs, the SDK provides the critical, real-time data pipe. It streams the audio to the STT engine and then plays the audio from the TTS engine back to the user, acting as the high-speed nervous system for the AI.

What is the first step in AI chatbot to voice conversion?

The first step in the AI chatbot to voice conversion is to choose a developer-first voice platform and its voice calling SDK. This will be the foundational layer that connects your existing chatbot logic to the telephone network.

What is barge-in?

Barge-in is the ability for a user to interrupt the AI agent while it is speaking. It is a critical feature for a natural conversation, and a good voice calling SDK provides the real-time events needed to manage it.

Why is low latency so important for a voice agent?

Low latency is the key to a natural-sounding conversation. If there is a long pause between when a user speaks and the AI responds, the conversation feels robotic and frustrating.

Do I need to change my chatbot’s logic to make it work over voice?

Yes, some adaptation is required. The core intelligence can remain, but the conversational design needs to be adapted for voice. This usually means making the responses more concise and designing the logic to handle the real-time, turn-by-turn nature of a spoken conversation.

What does FreJun AI provide?

FreJun AI provides the foundational voice calling SDK and the underlying global, low-latency voice network (our Teler engine). We provide the powerful and easy-to-use “plumbing” that allows you to connect your AI “brain” to a real-time phone call.

Do I need to be a telecommunications expert to build a voice agent?

No. This is the key benefit. A modern voice calling SDK abstracts away the immense complexity of telecommunications. If you are a developer who can work with web APIs, you can build a sophisticated voice agent without needing to be an expert in the underlying protocols.