For the better part of a decade, the chatbot has been the undisputed face of automated customer interaction. We have all seen it: the little widget in the corner of a website, ready to answer simple questions and guide us to the right FAQ page. It was a revolutionary step, freeing businesses from the high cost of live chat for Tier-1 support.

But in the grand story of human-to-machine communication, the chatbot was just the opening chapter. Today, we are witnessing the next, far more profound evolution: the rise of the callbot. And the technology making this leap possible is the modern, developer-first voice API for business communications.

The transition from a text-based chatbot to a voice-based callbot is not merely a change in interface; it is a quantum leap in complexity, user expectation, and technical demand. A chatbot can take a few seconds to respond, and the user will barely notice.

A callbot, on the other hand, is a real-time AI phone agent. If it pauses for even a second too long, the illusion of a natural conversation is shattered, and the user’s frustration skyrockets.

This is why the underlying voice infrastructure, the API that connects the AI’s brain to the global telephone network, has become the single most critical component in the next generation of business communication.

What’s the Real Difference Between a Chatbot and a Callbot?

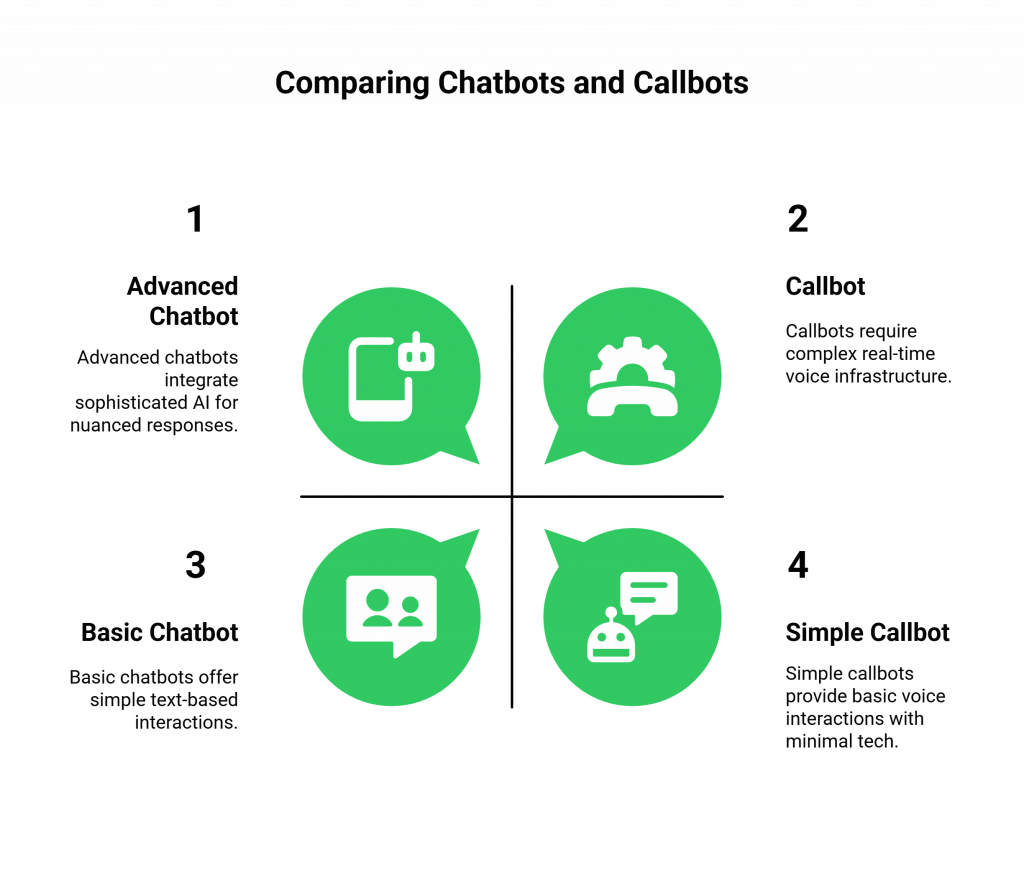

On the surface, the difference between a chatbot and a callbot seems simple: one types, and one speaks. But beneath this simple distinction lies a world of architectural and experiential differences. Think of it this way: both can draw on the same intelligent ‘brain’ (the LLM), but they rely on entirely different senses and operate in completely different environments.

The Fundamental Shift from Asynchronous to Synchronous

This is the most critical distinction.

- A Chatbot is Asynchronous: A text-based conversation is forgiving. A user types a message, and they do not expect an instantaneous response. If the chatbot takes two, three, or even five seconds to process the request and formulate an answer, the user experience is largely unaffected. They can look at another tab or sip their coffee while they wait.

- A Callbot is Synchronous: A voice conversation is a live, real-time event. The social contract of a phone call demands an immediate response. The moment one party stops speaking, the other is expected to begin. Any perceptible delay is interpreted as confusion, incompetence, or a technical failure. This places an immense burden on the entire technology stack to perform with ultra-low latency.
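How does a callbot know that one party has stopped speaking? A common approach is endpointing: treat the caller's turn as finished once a stretch of silence follows detected speech. The sketch below is a minimal illustration under stated assumptions, not production code. It assumes 16-bit little-endian PCM delivered in 20 ms frames, uses a simple RMS energy threshold in place of a trained voice-activity detector, and the threshold and silence-window values are illustrative rather than tuned.

```python
import math
import struct

FRAME_MS = 20            # assumed frame duration in milliseconds
SILENCE_RMS = 500.0      # illustrative energy threshold for 16-bit PCM
END_OF_TURN_MS = 400     # illustrative silence window that ends a turn


def frame_rms(frame: bytes) -> float:
    """Root-mean-square energy of one frame of 16-bit little-endian PCM."""
    count = len(frame) // 2
    if count == 0:
        return 0.0
    samples = struct.unpack(f"<{count}h", frame[: count * 2])
    return math.sqrt(sum(s * s for s in samples) / count)


class EndpointDetector:
    """Flags the end of a caller's turn after speech followed by enough silence."""

    def __init__(self, silence_rms: float = SILENCE_RMS,
                 end_of_turn_ms: int = END_OF_TURN_MS):
        self.silence_rms = silence_rms
        self.frames_needed = end_of_turn_ms // FRAME_MS
        self.heard_speech = False
        self.silent_frames = 0

    def push(self, frame: bytes) -> bool:
        """Feed one frame; return True exactly when the turn is judged complete."""
        if frame_rms(frame) >= self.silence_rms:
            # Speech detected: remember it and restart the silence count.
            self.heard_speech = True
            self.silent_frames = 0
            return False
        if not self.heard_speech:
            return False  # leading silence before the caller says anything
        self.silent_frames += 1
        if self.silent_frames >= self.frames_needed:
            # Reset so the same detector can be reused for the next turn.
            self.heard_speech = False
            self.silent_frames = 0
            return True
        return False
```

Every millisecond in the silence window adds directly to response latency, so this is a trade-off: a shorter window makes the bot feel snappier but risks cutting in while the caller pauses mid-sentence.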

The Leap in Technological Complexity

Moving from text to voice introduces several new, highly complex layers to the technology stack.

- Speech-to-Text (STT): The callbot needs “ears.” It requires a sophisticated STT engine to instantly and accurately transcribe the user’s spoken words into text that the AI’s brain can understand.

- Text-to-Speech (TTS): The callbot needs a “mouth.” It needs a TTS engine that can synthesize the AI’s text response into a natural, human-sounding voice.

- Real-Time Voice Infrastructure: This is the most complex new layer. The callbot needs a “nervous system.” It requires a specialized voice API and infrastructure that can handle the real-time, bidirectional streaming of audio with minimal delay.

The Dimension of Emotional Nuance

Voice is an incredibly rich medium. It carries not just words, but also tone, pitch, pace, and emotion. A sophisticated callbot must be able to understand these nuances (e.g., detecting frustration in a user’s voice) and respond with an appropriate tone. Text is, by comparison, a much flatter and less expressive medium. This table provides a clear, at-a-glance summary of the key differences:

| Characteristic | Chatbot (Text-Based) | Callbot (Voice-Based) |
| --- | --- | --- |
| Communication Modality | Asynchronous (text, delayed responses are acceptable). | Synchronous (voice, requires real-time, immediate responses). |
| Core Technology Stack | NLP/LLM, Messaging Platform APIs. | STT, NLP/LLM, TTS, Real-Time Voice API. |
| Latency Tolerance | High (several seconds of delay are often unnoticeable). | Extremely Low (delays of >800ms feel unnatural and break the conversation). |
| Emotional Bandwidth | Low (relies on word choice and emojis). | High (carries tone, pace, pitch, and emotional cues). |
| Primary Challenge | Understanding user intent from text. | Understanding intent and managing a low-latency, real-time conversational flow. |

The Engine of Evolution: Why the Voice API is the Critical Component

While the Large Language Model (LLM) provides the intelligence, it is the voice API that makes a callbot possible. The best voice API for business communications is not just a simple tool to make a call; it is a sophisticated, low-latency infrastructure designed for the unique demands of an AI-driven conversation.

The Unyielding Demand for Ultra-Low Latency

As we have established, latency is the arch-nemesis of a good callbot. A great voice API is architected from the ground up to minimize this delay at every possible point.

- Edge-Native Infrastructure: A provider like FreJun AI uses a globally distributed network of servers (Points of Presence). When a call is made, it is handled at a server physically close to the end user. This dramatically reduces the network travel time for the audio data, a major source of latency and one that the infrastructure layer can directly control.

- Optimized Media Processing: The API and underlying infrastructure are tuned for high-speed, real-time forking and streaming of media, so that the platform adds as little delay as possible when sending audio to and from the AI. The importance of this speed is hard to overstate. A recent survey found that 33% of customers are most frustrated by having to wait on hold, and a slow AI agent is the new form of hold music.
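To see why physical proximity matters, consider the floor that physics puts on network delay. Light in optical fiber travels at roughly two-thirds of its speed in a vacuum, about 200 km per millisecond, so every 1,000 km between caller and server adds at least 10 ms to a round trip before any routing or processing. The sketch below picks the nearest edge location by great-circle (haversine) distance and estimates that minimum round-trip time. The PoP names and coordinates are hypothetical examples, not FreJun AI's actual footprint, and real fiber routes are longer than great-circle paths, so treat the results as lower bounds.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_KM = 6371.0
FIBER_KM_PER_MS = 200.0  # light in optical fiber: roughly 2/3 of c


@dataclass(frozen=True)
class PoP:
    name: str
    lat: float
    lon: float


# Hypothetical edge locations for illustration only.
POPS = [
    PoP("mumbai", 19.08, 72.88),
    PoP("frankfurt", 50.11, 8.68),
    PoP("virginia", 39.04, -77.49),
    PoP("singapore", 1.35, 103.82),
]


def great_circle_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Haversine distance between two points on a spherical Earth."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def min_rtt_ms(distance_km: float) -> float:
    """Lower bound on round-trip propagation delay over fiber."""
    return 2 * distance_km / FIBER_KM_PER_MS


def nearest_pop(lat: float, lon: float) -> tuple[PoP, float]:
    """Return the PoP with the shortest great-circle distance, and that distance."""
    best = min(POPS, key=lambda p: great_circle_km(lat, lon, p.lat, p.lon))
    return best, great_circle_km(lat, lon, best.lat, best.lon)
```

For a caller in Delhi (28.61, 77.21), for example, `nearest_pop` selects the Mumbai entry, roughly 1,150 km away, while the Virginia entry is roughly 12,000 km away, which implies a floor of about 120 ms of round-trip propagation before a single word is transcribed.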

The Necessity of Programmable, Real-Time Media Access

An AI needs to “hear.” This means the voice API must provide a developer-friendly way to get a live, real-time stream of the call’s audio. This is a core feature of the FreJun AI platform.

Our Real-Time Media API allows a developer to programmatically “tap into” the live call and stream the audio directly to their AI application (their “AgentKit”), which is the essential first step in any AI conversation.
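On the receiving side, your application has to turn whatever the media stream delivers into fixed-size audio frames your STT engine can consume. The sketch below assumes, purely for illustration, that audio arrives as JSON text messages with a hypothetical `event` field and a base64-encoded `payload` field, encoded as 8 kHz, 8-bit μ-law (a common telephony format, in which 20 ms of audio is 160 bytes). These field names and the encoding are assumptions, not FreJun AI's documented message format; check the platform's API reference for the real schema.

```python
import base64
import json

# Assumption: 8 kHz, 8-bit mu-law audio, so one 20 ms frame is 160 bytes.
FRAME_BYTES = 160


class MediaFramer:
    """Turns hypothetical JSON media messages into fixed-size audio frames."""

    def __init__(self, frame_bytes: int = FRAME_BYTES):
        self.frame_bytes = frame_bytes
        self._buffer = bytearray()

    def handle_message(self, raw: str) -> list[bytes]:
        """Parse one message and return any complete frames now available."""
        msg = json.loads(raw)
        if msg.get("event") != "media":  # hypothetical event name
            return []
        # Hypothetical field holding base64-encoded audio bytes.
        self._buffer.extend(base64.b64decode(msg["payload"]))
        frames = []
        while len(self._buffer) >= self.frame_bytes:
            frames.append(bytes(self._buffer[: self.frame_bytes]))
            del self._buffer[: self.frame_bytes]
        return frames
```

Because network messages rarely line up with frame boundaries, the framer keeps leftover bytes in a buffer and only emits complete frames.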

The Role of LLMs: The Shared Brain Behind the Bots

The beauty of this evolution is that the core intelligence can remain the same. The same powerful LLM that you trained to be an expert text-based chatbot can be repurposed to be the brain of your new, voice-based callbot. This is the essence of modern LLM integration with voice APIs.

The LLM is the “what” of the conversation: it decides what to say. The voice API is the “how”: it handles the mechanics of saying it and hearing the response. This decoupling is incredibly powerful.

It means you can choose the absolute best LLM for your business needs (from providers like OpenAI, Google, Anthropic, etc.) and pair it with the best voice API for business communications. This is a core tenet of the FreJun AI philosophy.

FreJun is model-agnostic. We believe our job is to provide the most reliable, lowest-latency “nervous system” (our Teler engine), giving you complete freedom to choose your own “brain” (your AgentKit). This is a critical advantage in the fast-moving AI landscape.
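In code, this kind of decoupling usually comes down to programming against a small interface rather than a specific vendor SDK. The sketch below is a hypothetical illustration: `LLMBackend`, `Conversation`, and the two stand-in backends are invented names, and a real backend would wrap whichever provider's SDK you choose. The `Conversation` only ever sees text, so it does not care whether that text came from a chat widget or from an STT engine on a phone call.

```python
from typing import Protocol


class LLMBackend(Protocol):
    """Anything that can turn a conversation history into the next reply."""

    def reply(self, history: list[dict[str, str]]) -> str: ...


class EchoBackend:
    """Stand-in backend for testing; a real one would call a provider's SDK."""

    def reply(self, history: list[dict[str, str]]) -> str:
        return "You said: " + history[-1]["content"]


class UppercaseBackend:
    """A second stand-in, showing that backends are interchangeable."""

    def reply(self, history: list[dict[str, str]]) -> str:
        return history[-1]["content"].upper()


class Conversation:
    """Channel-agnostic dialogue state: the same object can serve chat or voice."""

    def __init__(self, brain: LLMBackend):
        self.brain = brain
        self.history: list[dict[str, str]] = []

    def turn(self, user_text: str) -> str:
        """Record the user's text, ask the backend for a reply, record that too."""
        self.history.append({"role": "user", "content": user_text})
        answer = self.brain.reply(self.history)
        self.history.append({"role": "assistant", "content": answer})
        return answer
```

Swapping providers then means writing one new class with a `reply` method; the telephony side and the `Conversation` logic stay untouched.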

The market for generative AI is growing at a breathtaking pace, with one forecast predicting it will reach $1.3 trillion in the next 10 years. Being able to easily integrate the latest and greatest models is a key competitive advantage.

Ready to give your intelligent LLM a powerful, low-latency voice? Sign up for FreJun AI.

Building a Real-Time AI Phone Agent: The Architectural Blueprint

So, how does it all come together? The workflow of a single conversational turn in a callbot is a high-speed dance orchestrated by the voice API.

- The Call Arrives: The call hits the voice API provider’s infrastructure (e.g., FreJun AI’s Teler engine) at an edge location.

- The Audio is Streamed: Using the Real-Time Media API, the live audio from the caller is instantly streamed to your AI application.

- The STT “Hears”: Your Speech-to-Text engine transcribes this audio into text.

- The LLM “Thinks”: The transcribed text is sent to your LLM, which processes the intent and generates a text-based response.

- The TTS “Speaks”: The LLM’s text response is sent to your Text-to-Speech engine, which synthesizes it into a new audio stream.

- The Response is Delivered: Your application sends this new audio back to the voice API with a command to play it to the caller.

This entire round trip must happen in a fraction of a second. It is a process that is completely dependent on the speed, reliability, and programmability of the underlying voice API.
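A single turn of the workflow above can be sketched as a function that runs the three AI stages in order and checks the total against a latency budget. In this minimal version, `stt`, `llm`, and `tts` are placeholder callables you would replace with real engine clients, the 800 ms default comes from the comparison table earlier in this article, and network transit to and from the voice API is not measured.

```python
import time
from typing import Callable

TURN_BUDGET_MS = 800.0  # delays beyond this feel unnatural, per the table above


def run_turn(
    caller_audio: bytes,
    stt: Callable[[bytes], str],
    llm: Callable[[str], str],
    tts: Callable[[str], bytes],
    budget_ms: float = TURN_BUDGET_MS,
) -> tuple[bytes, dict[str, float], bool]:
    """Run STT -> LLM -> TTS once, timing each stage in milliseconds."""
    timings: dict[str, float] = {}

    start = time.perf_counter()
    text = stt(caller_audio)              # the STT "hears"
    timings["stt"] = (time.perf_counter() - start) * 1000

    start = time.perf_counter()
    reply_text = llm(text)                # the LLM "thinks"
    timings["llm"] = (time.perf_counter() - start) * 1000

    start = time.perf_counter()
    reply_audio = tts(reply_text)         # the TTS "speaks"
    timings["tts"] = (time.perf_counter() - start) * 1000

    total = sum(timings.values())
    timings["total"] = total
    # The caller is responsible for sending reply_audio back to the voice API.
    return reply_audio, timings, total <= budget_ms
```

This sequential version is the easiest to reason about, but it is also the slowest arrangement, because each stage waits for the previous one to finish completely. Production callbots commonly overlap the stages by streaming, for example starting TTS on the LLM's first sentence while the rest of the reply is still being generated.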

Conclusion

The shift from chatbots to callbots is far more than just a new feature; it is a fundamental evolution in how businesses and customers interact. It marks the transition from static, asynchronous communication to dynamic, real-time, and emotionally resonant conversation. While the ever-advancing power of LLMs provides the intelligence for these interactions, it is the modern, developer-first voice API that provides the voice.

The best voice API for business communications is built for real-time AI phone agents, where latency is the enemy and seamless, instant conversation is the goal. This is the new frontier of customer experience, and the right voice infrastructure is the key to leading it.

Want to dive deeper into the technical architecture of building a low-latency callbot? Schedule a demo for FreJun Teler!

Frequently Asked Questions (FAQs)

What is the main difference between a chatbot and a callbot?

The main difference between a chatbot and a callbot is the communication modality and the real-time demands. A chatbot communicates via text and can have delays in its responses (asynchronous). A callbot communicates via voice and must respond instantly to feel like a natural, real-time conversation (synchronous).

Can the same LLM power both a chatbot and a callbot?

Yes. This is a key benefit. The core “brain” or intelligence can be the same. The primary difference is the technology stack surrounding it. A callbot requires additional components like a Speech-to-Text engine, a Text-to-Speech engine, and a real-time voice API.

What is a voice API?

A voice API is a set of programming tools that allows developers to build and integrate voice communication features (like making and receiving phone calls) directly into their own applications. The best voice API for business communications is one that is designed for high reliability, scalability, and low latency.

Why is low latency so important for a callbot?

Low latency is the key to a natural conversation. If there is a long pause after a user speaks, the AI agent feels slow and robotic, which leads to a frustrating user experience. Minimizing this delay is the primary technical challenge for callbots.

How does LLM integration with voice APIs work?

The LLM integration with voice APIs happens in a loop. The voice API captures the user’s spoken audio and provides it to a Speech-to-Text engine. The system sends the resulting text to the LLM, forwards the LLM’s response to a Text-to-Speech engine, and returns the generated audio to the voice API to play to the user.

Do I need telecom expertise to build a callbot?

No. A modern, developer-first voice API platform like FreJun AI is designed to abstract away the low-level telecom complexity. If you are a software developer who is comfortable with web APIs, you have the skills you need to build a callbot.

Can a callbot handle complex conversations?

Yes. The conversational ability of the callbot is determined by the power of the LLM you choose to use. With a sophisticated LLM, a callbot can handle very complex, multi-turn conversations, answer nuanced questions, and even perform tasks.

What role does FreJun AI play in a callbot?

FreJun AI is not the LLM. We provide the foundational voice infrastructure, the “nervous system.” Our Teler engine is the powerful, low-latency voice API platform that handles all the complex, real-time audio streaming, allowing you to connect any LLM of your choice to the global telephone network.

Is a callbot worth the investment?

While development requires an initial investment, the operational cost of an AI-handled call is a fraction of the cost of a human-handled call. By automating high-volume inquiries, a callbot can provide a significant return on investment.