The way businesses handle customer conversations is changing rapidly. Traditional phone systems are being replaced by cloud telephony solutions, which make voice channels programmable, scalable, and easy to integrate with modern software.

At the same time, AI voice agents are moving beyond static IVR menus, enabling natural, real-time conversations that can understand intent, access data, and respond instantly. When these two technologies work together, they unlock a new model of communication: reliable telephony infrastructure combined with intelligent dialogue management.

This blog explores exactly how cloud telephony works with AI voice agents, the technical architecture behind it, why latency matters, and the most impactful business use cases today.

What Is Cloud Telephony and Why Does It Matter?

Cloud telephony is the migration of voice infrastructure from hardware-based PBX systems into software-defined, cloud-hosted platforms. In traditional setups, businesses relied on on-premise PBX boxes, dedicated ISDN or PRI lines, and a fixed number of trunks to handle calls. Scaling meant installing new hardware and negotiating with telecom operators – a process that was both expensive and slow.

According to The Business Research Company, the cloud telephony market jumped from USD 31.9B in 2024 to USD 37.5B in 2025, growing at 17.6%, underlining how businesses are rapidly deploying cloud telephony solutions.

With cloud telephony solutions, the telephony core is hosted on distributed servers connected to global carriers. Numbers can be provisioned instantly across multiple geographies, calls can be routed dynamically through SIP trunks, and voice packets are handled as RTP streams across IP networks. This shift matters because it makes voice programmable: what was once a static telecom service is now an API-driven infrastructure layer.

For businesses exploring AI, this programmability is essential. A telephony layer that can expose raw audio streams, deliver call events over webhooks, and scale elastically is the foundation that allows AI systems to process and respond to live conversations. Without cloud telephony, voice agents would still be confined to IVR menus and rigid DTMF flows.

What Are AI Voice Agents?

An AI voice agent is not a chatbot with a voice add-on. It is a system engineered to handle the entire conversational loop over a live phone call. At the most basic level, this loop consists of:

- Capturing the caller’s speech through a microphone or phone line.

- Transcribing that speech into text using a streaming speech-to-text (STT) engine.

- Feeding the transcription into a language model that can interpret intent, maintain conversational state, and decide what action to take next.

- Optionally querying knowledge bases or external APIs – a process often described as retrieval-augmented generation (RAG) or tool calling.

- Synthesizing a natural voice response using text-to-speech (TTS).

- Streaming that audio back to the caller over the same phone channel.

Unlike legacy IVR bots, which followed fixed menu trees, voice agents can adapt to unstructured input. A caller does not need to “press 1 for sales” or repeat a phrase verbatim; they can speak naturally, and the agent interprets the intent in context. More importantly, the agent can remember details across turns, handle interruptions mid-sentence, and interact with backend systems to perform real tasks such as scheduling or verifying identity.

This makes AI voice agents qualitatively different from their predecessors. They are not just answering calls; they are conducting conversations.
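The loop described above can be sketched in a few lines. This is a minimal illustration rather than a production design: `CallSession`, `handle_turn`, and the three `fake_*` engines are hypothetical names, and the stand-in engines simply pass text through so the example runs without any external service.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

History = List[Tuple[str, str]]  # (speaker, text) pairs


@dataclass
class CallSession:
    """Conversation state kept for the lifetime of one phone call."""
    call_id: str
    history: History = field(default_factory=list)


def handle_turn(
    session: CallSession,
    caller_audio: bytes,
    transcribe: Callable[[bytes], str],
    respond: Callable[[History, str], str],
    synthesize: Callable[[str], bytes],
) -> bytes:
    """Run one pass of the loop: audio in -> text -> reply text -> audio out."""
    text = transcribe(caller_audio)            # speech-to-text
    reply = respond(session.history, text)     # language model picks the reply
    session.history.append(("caller", text))   # remember both sides of the turn
    session.history.append(("agent", reply))
    return synthesize(reply)                   # text-to-speech


# Stand-in engines (assumptions for illustration only).
def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_llm(history: History, text: str) -> str:
    return f"(turn {len(history) // 2 + 1}) You said: {text}"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


session = CallSession(call_id="demo-call")
first = handle_turn(session, b"I need to reschedule", fake_stt, fake_llm, fake_tts)
second = handle_turn(session, b"Tomorrow at ten", fake_stt, fake_llm, fake_tts)
```

Because the history travels with the session, the second turn can see what was said in the first, which is the property that separates an agent from a fixed menu tree.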

How Do Cloud Telephony and AI Voice Agents Work Together?

To see how these two layers interact, it is useful to trace the path of a single call.

When a customer dials a business number, the call first enters the public switched telephone network (PSTN) or a VoIP network. If the business is using a cloud telephony provider, that number is mapped to a virtual endpoint in the cloud. The provider establishes a SIP session and begins streaming audio packets (RTP) between the caller and the cloud service.

At this point, the telephony layer exposes the live audio stream to the AI stack. Platforms like Teler act as the bridge: they capture the real-time voice input and forward it to the developer’s chosen STT service. The STT engine produces transcripts incrementally, so the text is available almost as soon as the words are spoken.

The transcript is then passed into the dialogue manager – often a large language model augmented with retrieval mechanisms. The model interprets what the caller wants, queries any necessary data sources, and generates a text response. That response is sent to a TTS service, which converts it back into audio.

Finally, the audio is streamed back through the telephony layer and delivered to the caller. If the caller interrupts, the streaming stops, the new speech is captured, and the cycle continues. This loop happens continuously in real time, enabling conversations that feel human rather than machine-driven.
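The interruption behaviour described above is often called barge-in. One way to sketch it, assuming an asyncio-based backend, is to race audio playback against a "caller spoke" signal and cancel whichever loses. The function names and the list standing in for the media stream are illustrative assumptions, not part of any provider's API.

```python
import asyncio
from typing import List, Tuple


async def play_response(chunks: List[bytes], sent: List[bytes],
                        chunk_seconds: float = 0.02) -> None:
    """Send audio chunks one at a time, pacing them like a live stream."""
    for chunk in chunks:
        sent.append(chunk)                 # stand-in for writing to the call's media stream
        await asyncio.sleep(chunk_seconds)


async def speak_with_barge_in(chunks: List[bytes], sent: List[bytes],
                              caller_spoke: asyncio.Event) -> bool:
    """Play a response but stop as soon as caller speech is detected.

    Returns True if playback was cut short by the caller.
    """
    playback = asyncio.create_task(play_response(chunks, sent))
    interrupt = asyncio.create_task(caller_spoke.wait())
    done, pending = await asyncio.wait(
        {playback, interrupt}, return_when=asyncio.FIRST_COMPLETED
    )
    for task in pending:                   # cancel whichever task lost the race
        task.cancel()
    await asyncio.gather(*pending, return_exceptions=True)
    return interrupt in done and playback not in done


async def demo() -> Tuple[bool, int]:
    sent: List[bytes] = []
    caller_spoke = asyncio.Event()
    chunks = [b"audio"] * 50               # about one second of paced audio

    async def caller() -> None:
        await asyncio.sleep(0.05)          # caller starts talking shortly after playback begins
        caller_spoke.set()

    interrupted, _ = await asyncio.gather(
        speak_with_barge_in(chunks, sent, caller_spoke), caller()
    )
    return interrupted, len(sent)


interrupted, chunks_sent = asyncio.run(demo())
```

In a real deployment the event would be set by voice activity detection on the inbound stream; here a timer plays that role.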

What Does Technical Architecture Look Like?

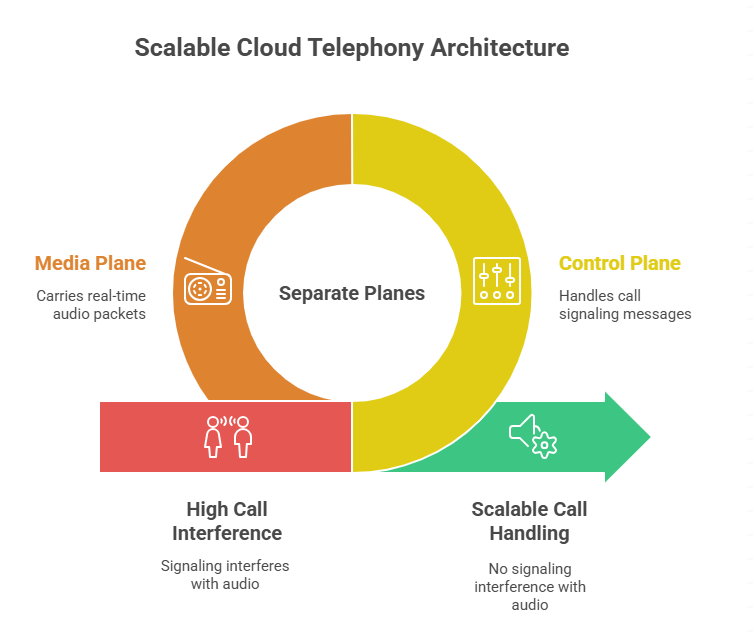

The architecture of a cloud telephony + AI voice agent system can be thought of as two parallel planes: the control plane and the media plane.

- The control plane handles signaling: when the call starts, when it rings, when it is answered, when a DTMF key is pressed, or when it is hung up. This is typically exchanged as SIP messages or via webhooks from the cloud telephony API.

- The media plane carries the audio itself, transported as RTP packets. This is the layer where low latency, jitter buffers, and codec handling are critical.
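To make the media plane concrete, here is a small sketch that decodes the 12-byte fixed header every RTP packet starts with (as defined in RFC 3550). The `RtpHeader` class and `parse_rtp_header` function are illustrative names; header extensions and CSRC lists are ignored for brevity.

```python
import struct
from dataclasses import dataclass


@dataclass
class RtpHeader:
    version: int
    payload_type: int
    marker: bool
    sequence_number: int
    timestamp: int
    ssrc: int


def parse_rtp_header(packet: bytes) -> RtpHeader:
    """Decode the fixed RTP header (first 12 bytes, network byte order)."""
    if len(packet) < 12:
        raise ValueError("RTP packet shorter than the 12-byte fixed header")
    first, second, seq, ts, ssrc = struct.unpack("!BBHII", packet[:12])
    return RtpHeader(
        version=first >> 6,              # top two bits of the first byte
        payload_type=second & 0x7F,      # low seven bits of the second byte
        marker=bool(second & 0x80),      # top bit of the second byte
        sequence_number=seq,             # used to detect loss and reordering
        timestamp=ts,                    # used by jitter buffers to schedule playout
        ssrc=ssrc,                       # identifies the stream's source
    )


# A synthetic packet: version 2, payload type 0 (G.711 mu-law),
# followed by 160 bytes of payload (20 ms of 8 kHz audio).
sample = struct.pack("!BBHII", 0x80, 0x00, 1000, 160, 0x1234) + b"\x00" * 160
header = parse_rtp_header(sample)
```

The sequence number and timestamp are the two fields a jitter buffer relies on, which is why they matter so much for perceived quality.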

For inbound calls, the sequence is:

- Caller dials a DID number.

- The call is routed through SIP trunks to the cloud telephony solution.

- Media streams are forked – one path to the STT engine, one path optionally to storage or analytics.

- AI logic produces responses, which are synthesized via TTS and streamed back to the caller.

For outbound calls, the process is similar but initiated from the application. The developer triggers an API request to the telephony provider, which originates a call to the target number. Once answered, the same media streaming loop is established. In both directions the media path is sensitive to network quality: packet loss above roughly 5% already causes noticeable audio drops and distortion in VoIP streams.

Separating control and media planes allows systems to scale. You can handle thousands of concurrent calls without the signaling traffic interfering with the real-time audio path. It also allows developers to focus on conversational state management without worrying about SIP minutiae.

Why Is Latency So Critical in AI Voice Calls?

Human conversation is extremely sensitive to delay. A pause of even half a second can feel unnatural, and anything beyond a second can break the flow entirely. This makes latency management the single most important technical challenge in AI-powered telephony.

Consider the budget for each component:

- Speech-to-Text (STT): Modern streaming engines can return partial transcriptions in 100–200 milliseconds.

- Language Model Processing: Depending on model size and context length, this may take 150–300 milliseconds. Smaller models or cached context can reduce this further.

- Text-to-Speech (TTS): Neural synthesis engines typically deliver the first chunk of audio in 150–250 milliseconds.

- Network Transport: Jitter buffers and cross-region hops can add another 50–150 milliseconds.

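Run strictly one after another, these stages add up to roughly 450 to 900 milliseconds. The realistic target, however, is 400 to 600 milliseconds from the end of a caller’s utterance to the beginning of the AI’s spoken response, which is only reachable when the stages overlap through streaming. Anything slower and the system begins to feel robotic.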
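As a quick check of the budget, the stage ranges listed above can be summed as if every stage ran back to back. This is a deliberately simplified sketch: the dictionary keys are illustrative labels, and real pipelines overlap stages rather than running them sequentially.

```python
# Per-stage latency ranges in milliseconds, taken from the list above.
BUDGET_MS = {
    "stt_partial": (100, 200),
    "llm": (150, 300),
    "tts_first_chunk": (150, 250),
    "network": (50, 150),
}
TARGET_MS = (400, 600)  # desired end-of-utterance to start-of-response window


def sequential_total(budget):
    """Sum the best-case and worst-case figures as if stages never overlapped."""
    low = sum(lo for lo, _ in budget.values())
    high = sum(hi for _, hi in budget.values())
    return low, high


low, high = sequential_total(BUDGET_MS)
print(f"sequential total: {low}-{high} ms")
print(f"best case within target: {low <= TARGET_MS[1]}")
print(f"worst case within target: {high <= TARGET_MS[1]}")
```

Run back to back, the stages total 450 to 900 ms, so only the best case lands under 600 ms. Streaming each stage into the next is what closes the gap.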

To stay within this budget, developers employ techniques like incremental STT (streaming text before the utterance ends), chunked TTS playback (start speaking while later words are still rendering), and barge-in handling (allowing the caller to interrupt without waiting). Placing telephony and AI servers geographically close also reduces cross-region latency.
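Chunked TTS playback depends on handing text to the synthesizer before the language model has finished. A minimal sketch of that idea, assuming the model emits text in arbitrary pieces, is a generator that releases each sentence as soon as it is complete. The splitting rule here is intentionally naive (it would also split on abbreviations such as "e.g."), and `sentence_chunks` is a hypothetical helper name.

```python
import re
from typing import Iterable, Iterator

# A sentence ends at ., ! or ? followed by whitespace.
SENTENCE_END = re.compile(r"([.!?])\s")


def sentence_chunks(pieces: Iterable[str]) -> Iterator[str]:
    """Yield complete sentences as soon as they appear in a stream of text pieces."""
    buffer = ""
    for piece in pieces:
        buffer += piece
        while True:
            match = SENTENCE_END.search(buffer)
            if not match:
                break
            end = match.end(1)                 # index just past the punctuation mark
            yield buffer[:end].strip()         # ready to send to TTS immediately
            buffer = buffer[end:].lstrip()
    if buffer.strip():                         # flush whatever is left at end of stream
        yield buffer.strip()


llm_stream = ["Your appoint", "ment is confirmed. ", "Is there any", "thing else? ", "Goodbye"]
chunks = list(sentence_chunks(llm_stream))
```

The first sentence can start playing while the model is still producing the second, so time to first audio depends on the first sentence rather than the whole reply.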

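In one state-of-the-art pipeline, the reported latencies are 0.05 s for ASR, 0.28 s for TTS, and 0.67 s for LLM generation, with a reported total of about 0.94 s per response. Even a well-optimized stack spends most of its time in the LLM stage, which underscores why low-latency design is vital.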

Latency is not just a performance metric; it defines whether the system feels usable at all.

Where Does Teler Fit Into This Workflow?

Implementing all of the above from scratch would require provisioning SIP trunks, configuring SBCs, handling RTP packet loss, managing jitter buffers, and ensuring global reliability – before even integrating AI. This is where Teler provides value.

Teler acts as the global voice infrastructure layer. It manages number provisioning, SIP routing, and carrier interconnects across geographies. More importantly, it provides low-latency media streaming APIs that allow developers to connect any STT, TTS, and LLM stack without worrying about the telephony plumbing underneath.

By abstracting the complexities of SIP and RTP, Teler lets businesses focus on the intelligence layer: prompt design, dialogue management, retrieval systems, and tool integration. It is model-agnostic, meaning you can use Whisper for STT today and switch to Deepgram tomorrow without rewriting telephony code. You can use ElevenLabs for TTS in one deployment and Amazon Polly in another.

Teler ensures that regardless of the AI vendors chosen, the transport layer remains stable, reliable, and optimized for speed. For product teams, this means faster go-to-market. For developers, it eliminates the need to debug SIP traces or manage carrier failover.

What Are the Practical Use Cases?

Cloud telephony solutions integrated with AI agents are already delivering value across industries. In healthcare, AI agents can answer after-hours calls, schedule appointments, and route emergencies to on-call staff. In retail and e-commerce, they can confirm orders, handle returns, and provide shipping updates automatically. Professional services firms use them for intake calls, gathering structured data before passing cases to human advisors.

Outbound campaigns are another strong use case. Instead of blasting prerecorded messages, AI agents can conduct personalized conversations: reminding patients of appointments, following up with leads, or conducting satisfaction surveys. Because they are powered by real-time AI, these calls feel interactive rather than one-way.

Inbound reception is equally transformative. Instead of static IVRs, businesses can deploy agents that understand natural speech, authenticate customers, and handle common queries without human intervention. Calls that require escalation can still be transferred instantly, with context carried over.

These applications demonstrate how cloud telephony and AI voice agents work hand in hand: one provides the reliable, programmable communication channel, and the other provides the conversational intelligence.

| Industry / Use Case | Practical Applications |
| --- | --- |
| Healthcare | After-hours call handling, appointment scheduling, emergency routing to on-call staff. |
| Retail & E-commerce | Order confirmations, return processing, automatic shipping updates. |
| Professional Services | Intake calls where structured data is gathered before passing cases to human advisors. |
| Outbound Campaigns | Personalized conversations for appointment reminders, lead follow-ups, satisfaction surveys. |
| Inbound Reception | Natural speech recognition, caller authentication, resolution of common queries, and seamless escalation with context transfer. |
| Overall Value | Cloud telephony ensures reliable communication channels, while AI agents add conversational intelligence for efficiency and personalization. |

How Secure and Reliable Is Cloud Telephony with AI?

Security and reliability are non-negotiable when handling voice calls, especially when conversations involve personal or financial information. Cloud telephony solutions embed protection mechanisms at multiple layers to ensure integrity and compliance.

On the transport level, SIP signaling is typically secured with TLS, while media streams use SRTP (Secure Real-Time Transport Protocol). This prevents interception of either call control messages or the voice itself. At the data storage level, any call recordings or transcripts are encrypted at rest using industry-standard algorithms such as AES-256. Access to recordings is controlled through role-based permissions, and audit logs track who accessed what and when.
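The access-control side of this can be illustrated with a small sketch of role-based permissions paired with an audit trail. The role names, permission strings, and the `access` helper are all assumptions made up for the example; a real deployment would back them with an identity provider and durable log storage.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Set

# Hypothetical roles and the permissions each one carries.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "supervisor": {"recording:read", "transcript:read"},
    "agent": {"transcript:read"},
    "analyst": set(),
}


@dataclass
class AuditLog:
    entries: List[dict] = field(default_factory=list)

    def record(self, user: str, role: str, permission: str,
               resource: str, allowed: bool) -> None:
        """Append who tried to access what, when, and whether it was allowed."""
        self.entries.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "permission": permission,
            "resource": resource,
            "allowed": allowed,
        })


def access(user: str, role: str, permission: str,
           resource: str, log: AuditLog) -> bool:
    """Check a permission and log the attempt, whether it succeeds or not."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    log.record(user, role, permission, resource, allowed)
    return allowed


log = AuditLog()
supervisor_ok = access("dana", "supervisor", "recording:read", "call-42", log)
agent_ok = access("lee", "agent", "recording:read", "call-42", log)
```

Denied attempts are logged alongside granted ones, since an audit trail that only records successes cannot answer who tried to reach a recording.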

Compliance frameworks also come into play. For businesses in healthcare, HIPAA governs how voice data containing patient information is stored and shared. For businesses in Europe, GDPR requires a lawful basis, such as explicit consent, for recording and processing calls. Many cloud telephony providers, including platforms like Teler, provide features such as automated recording prompts, consent banners, and regional data residency options to align with these regulations.

Reliability is handled through geographically distributed infrastructure. Calls are routed through multiple points of presence, ensuring that if one region experiences an outage, another takes over seamlessly. Carrier interconnects are redundant, and failover policies can reroute calls within seconds. For enterprises, service-level agreements guarantee uptime and provide clear remedies if thresholds are not met.
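At its simplest, the failover behaviour described above reduces to choosing the first healthy region from an ordered preference list. The sketch below assumes health status arrives from some external check; the region names and `pick_region` are hypothetical.

```python
from typing import Dict, List, Optional


def pick_region(preferred: List[str], healthy: Dict[str, bool]) -> Optional[str]:
    """Return the first region in preference order that is currently healthy."""
    for region in preferred:
        if healthy.get(region, False):   # unknown regions are treated as unhealthy
            return region
    return None                          # no region available: caller must handle this


preference = ["eu-west", "eu-central", "us-east"]
normal = pick_region(preference, {"eu-west": True, "eu-central": True, "us-east": True})
during_outage = pick_region(preference, {"eu-west": False, "eu-central": True, "us-east": True})
```

Production systems layer health probing, hysteresis, and draining of in-flight calls on top of this selection step.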

The end result is a voice infrastructure that is both secure and resilient enough to support mission-critical AI applications.

How Do You Integrate Cloud Telephony With Your AI Stack?

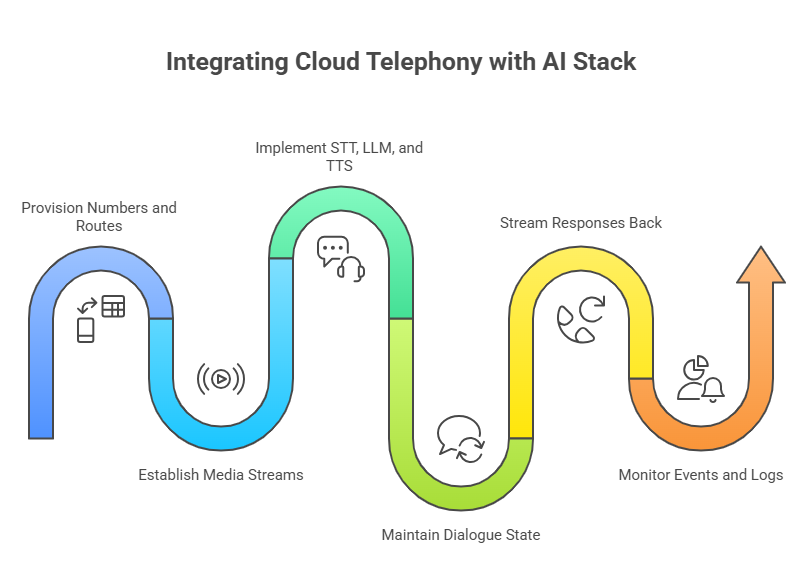

For engineering teams, the integration process can be broken into clear steps. The telephony layer provides APIs and SDKs, and the AI stack supplies the intelligence.

- Provision Numbers and Routes: Using the telephony provider’s dashboard or API, developers can acquire DID numbers in the required geographies. Routing rules define which calls go to AI agents and which are escalated to humans.

- Establish Media Streams: Once a call is active, the media stream is forked and sent over secure channels to the STT engine. The provider’s API exposes these streams in formats that are easy to integrate into existing pipelines.

- Implement STT, LLM, and TTS: The developer plugs in the chosen STT service for transcription, a language model for dialogue management, and a TTS service for responses. These can be swapped out as needed since the telephony layer remains agnostic.

- Maintain Dialogue State: The application backend holds session memory. This ensures that context from earlier in the call is retained and that tool calls (such as querying a CRM) are tied to the right session.

- Stream Responses Back: Once the TTS generates audio, the telephony layer streams it back to the caller with minimal buffering. Interruptions are handled by pausing playback and processing the new input.

- Monitor Events and Logs: Webhooks provide call lifecycle updates: ringing, answered, transferred, or terminated. Developers can use these to build dashboards and automate escalations.
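The dialogue-state and event-monitoring steps above can be sketched together: call lifecycle events create, update, and retire a per-call session that holds conversation memory. The event type names come from the list above, but the payload shape (`call_id`, `type`) and the `SessionStore` class are assumptions; actual webhook payloads vary by provider.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Session:
    call_id: str
    status: str = "ringing"
    turns: List[str] = field(default_factory=list)   # conversation memory for this call


class SessionStore:
    """Keeps one session per active call, driven by lifecycle events."""

    def __init__(self) -> None:
        self._sessions: Dict[str, Session] = {}
        self.completed: List[Session] = []

    def handle_event(self, event: dict) -> None:
        call_id, kind = event["call_id"], event["type"]
        if kind == "ringing":
            self._sessions[call_id] = Session(call_id)
        elif kind in ("answered", "transferred"):
            self._sessions[call_id].status = kind
        elif kind == "terminated":
            session = self._sessions.pop(call_id)    # free memory once the call ends
            session.status = kind
            self.completed.append(session)
        else:
            raise ValueError(f"unknown event type: {kind}")

    def add_turn(self, call_id: str, text: str) -> None:
        """Attach a transcript line to the right call's session."""
        self._sessions[call_id].turns.append(text)

    def active_calls(self) -> List[str]:
        return sorted(self._sessions)


store = SessionStore()
store.handle_event({"call_id": "c1", "type": "ringing"})
store.handle_event({"call_id": "c2", "type": "ringing"})
store.handle_event({"call_id": "c1", "type": "answered"})
store.add_turn("c1", "caller: I want to check my order")
store.handle_event({"call_id": "c1", "type": "terminated"})
```

Keying everything by call identifier is what keeps tool calls and transcripts from leaking between concurrent calls.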

This modular design allows teams to build a proof of concept in days and scale it into a production-grade deployment later. With Teler, the complexity of SIP routing and carrier interconnects is hidden, leaving developers free to focus on AI logic and integrations.

What Metrics Should You Track for AI Voice Agents?

Measuring the effectiveness of an AI voice deployment requires metrics from both telephony and AI layers.

- Latency per Turn – the time between a caller finishing speech and the agent beginning its response. This is the most direct measure of conversational fluidity.

- Mean Opinion Score (MOS) – a perceptual audio-quality score, typically on a 1-to-5 scale, indicating how clear the call sounds to the human ear.

- Intent Confidence – how certain the AI is that it correctly understood what the caller asked. Low confidence can trigger escalation to a human agent.

- First-Call Resolution (FCR) – the percentage of issues solved without requiring follow-up.

- Escalation Rate – how often the AI needed to hand off to a human.

- Call Abandonment – the number of callers who hung up before resolution.

- Operational Costs – minutes consumed, STT/TTS processing usage, and LLM token costs.

By tracking these metrics, businesses can iterate on both infrastructure and AI logic. For example, high latency per turn may suggest switching to a faster STT model, while a low MOS may indicate packet loss on certain carrier routes.
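Several of these metrics can be computed directly from per-call records. The sketch below assumes a simple record shape (`CallRecord` and its fields are hypothetical) and uses made-up sample values purely to show the arithmetic.

```python
import math
from dataclasses import dataclass
from statistics import median
from typing import Dict, List


@dataclass
class CallRecord:
    turn_latencies_ms: List[int]   # end of caller speech -> start of agent speech
    resolved: bool                 # solved on this call with no follow-up needed
    escalated: bool                # handed off to a human
    abandoned: bool                # caller hung up before resolution


def summarize(calls: List[CallRecord]) -> Dict[str, float]:
    latencies = sorted(ms for call in calls for ms in call.turn_latencies_ms)
    p95_index = max(0, math.ceil(0.95 * len(latencies)) - 1)   # nearest-rank percentile
    total = len(calls)
    return {
        "median_latency_ms": median(latencies),
        "p95_latency_ms": latencies[p95_index],
        "fcr_rate": sum(call.resolved for call in calls) / total,
        "escalation_rate": sum(call.escalated for call in calls) / total,
        "abandonment_rate": sum(call.abandoned for call in calls) / total,
    }


# Illustrative sample data, not real measurements.
calls = [
    CallRecord([450, 520, 610], resolved=True, escalated=False, abandoned=False),
    CallRecord([700, 900], resolved=False, escalated=True, abandoned=False),
    CallRecord([480], resolved=False, escalated=False, abandoned=True),
    CallRecord([500, 550], resolved=True, escalated=False, abandoned=False),
]
summary = summarize(calls)
```

Tracking a tail percentile alongside the median matters because a few slow turns can spoil a call even when the typical turn is fast.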

How Do Cloud Telephony and AI Impact Costs and ROI?

Cost structures in AI-powered telephony are different from traditional call centers. Instead of staffing expenses, the main drivers are infrastructure usage and AI services.

- Minutes: Each inbound or outbound call incurs per-minute charges.

- Concurrent Channels: Pricing may scale with the number of simultaneous calls.

- STT/TTS Usage: These are billed based on duration of audio processed.

- LLM Tokens: Costs scale with the size of prompts and responses.

- Storage: Recordings and transcripts occupy storage billed monthly.

The ROI comes from reducing the need for human operators in tier-one queries, enabling 24/7 coverage, and improving customer satisfaction. For outbound use cases, AI voice agents can handle thousands of calls simultaneously, something no human team could match economically.

By tuning models for shorter responses, caching context, and managing retry policies, businesses can reduce token usage and call duration, which directly lowers costs.
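A rough per-call cost model makes these levers visible. Everything numeric below is a placeholder chosen for easy arithmetic, not a real price from any vendor, and the `Rates` fields are assumed names. Storage and concurrent-channel fees are left out for brevity.

```python
from dataclasses import dataclass


@dataclass
class Rates:
    telephony_per_min: float
    stt_per_min: float
    tts_per_min: float
    llm_per_1k_tokens: float


def call_cost(rates: Rates, call_minutes: float,
              agent_speech_minutes: float, llm_tokens: int) -> float:
    """Estimate the variable cost of one call from its usage figures."""
    return (
        call_minutes * rates.telephony_per_min          # carrier minutes
        + call_minutes * rates.stt_per_min              # STT hears the whole call
        + agent_speech_minutes * rates.tts_per_min      # TTS only for agent speech
        + llm_tokens / 1000 * rates.llm_per_1k_tokens   # prompt plus response tokens
    )


# Placeholder rates (assumptions, not vendor pricing).
rates = Rates(telephony_per_min=0.01, stt_per_min=0.006,
              tts_per_min=0.015, llm_per_1k_tokens=0.002)

baseline = call_cost(rates, call_minutes=4, agent_speech_minutes=1.5, llm_tokens=3000)
# Shorter responses: less agent speech, fewer tokens, and a shorter call overall.
tuned = call_cost(rates, call_minutes=3.5, agent_speech_minutes=1.0, llm_tokens=2000)
```

With these placeholder numbers the tuned call comes out cheaper on every line item, which is the effect the paragraph above describes.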

What Are Common Integration Challenges?

Despite the maturity of cloud telephony, integrating with AI voice agents presents unique challenges:

- Accents and Noisy Lines: STT accuracy can drop on accented speech, noisy connections, and domain-specific vocabulary the model was not tuned for. Developers often build custom acoustic and language models to compensate.

- Tool Latency: External APIs like CRMs may introduce unpredictable delays. Caching and asynchronous design help mitigate this.

- Escalation Logic: Deciding when to hand over to a human requires careful thresholds – too early wastes automation, too late frustrates callers.

- Compliance Complexity: Outbound calls in particular must obey Do-Not-Call registries, quiet hours, and consent rules that differ by country.

These challenges are solvable but require deliberate design choices.
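As one example of such a design choice, the tool-latency problem can be handled by putting a deadline on each external lookup and falling back to a cached value when it is missed. This sketch assumes an asyncio backend; `lookup_with_timeout` and the two fake CRM functions are hypothetical.

```python
import asyncio
from typing import Awaitable, Callable, Dict, Optional, Tuple

_cache: Dict[str, str] = {}   # last known good value per key


async def lookup_with_timeout(
    key: str,
    fetch: Callable[[str], Awaitable[str]],
    timeout_s: float,
) -> Optional[str]:
    """Fetch fresh data, but fall back to the cache if the tool is too slow."""
    try:
        value = await asyncio.wait_for(fetch(key), timeout=timeout_s)
    except asyncio.TimeoutError:
        return _cache.get(key)        # may be None if nothing was cached yet
    _cache[key] = value               # refresh the cache on every success
    return value


# Stand-ins for a CRM that is sometimes fast and sometimes slow.
async def fast_crm(key: str) -> str:
    await asyncio.sleep(0.01)
    return f"record for {key}"


async def slow_crm(key: str) -> str:
    await asyncio.sleep(1.0)
    return f"late record for {key}"


async def demo() -> Tuple[Optional[str], Optional[str], Optional[str]]:
    fresh = await lookup_with_timeout("cust-1", fast_crm, timeout_s=0.2)
    stale = await lookup_with_timeout("cust-1", slow_crm, timeout_s=0.05)
    missing = await lookup_with_timeout("cust-2", slow_crm, timeout_s=0.05)
    return fresh, stale, missing


fresh, stale, missing = asyncio.run(demo())
```

When the lookup returns nothing, the agent can say it is still checking or offer a callback instead of leaving the caller in silence.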

How Do You Get Started Quickly With Teler?

One of the advantages of platforms purpose-built for AI voice is the ability to move fast. Teler provides developers with SDKs, APIs, and sample applications to launch prototypes quickly. A typical first project might be:

- Provision a number.

- Configure an inbound route to stream audio to a simple backend.

- Connect that backend to an STT service.

- Run the transcript through a lightweight model for response generation.

- Synthesize the text with TTS and send it back over the call.

From this starting point, teams can add complexity: integrating RAG pipelines, connecting CRM tools, adding barge-in logic, or scaling to outbound campaigns. Because the telephony side remains stable, changes on the AI side do not require reworking the call routing.

This modularity is why businesses can move from proof-of-concept to production in weeks instead of months.

Final Takeaways

Cloud telephony provides the communication backbone; AI voice agents deliver intelligence and automation. Together, they enable programmable, low-latency, and scalable conversations that outperform legacy IVRs. The workflow – SIP signaling, RTP media streaming, STT, LLM orchestration, and TTS – is intricate, but essential for real-time interactions. Instead of building and maintaining telephony infrastructure, teams should focus on AI logic, integrations, and customer experience. Teler abstracts the telephony layer, offering global reliability, streaming APIs, and model-agnostic integration.

Explore Teler to connect your LLM, STT, and TTS stack into production-ready voice agents with minimal latency and maximum scalability.

FAQs

1: What is cloud telephony and how does it work?

Cloud telephony replaces hardware PBX, delivering calls via internet APIs, enabling businesses to scale, integrate AI, and manage communication efficiently.

2: How do cloud and AI work together in voice agents?

Cloud telephony handles call transport, while AI voice agents interpret speech, generate responses, and enable natural real-time automated conversations.

3: How do AI voice agents work in practice?

AI voice agents use STT, LLMs, and TTS to understand callers, access data, perform tasks, and respond in natural speech.

4: What’s the difference between VoIP and cloud telephony?

VoIP enables internet calls, while cloud telephony provides programmable, scalable infrastructure with APIs, global numbers, integrations, and AI capabilities.