The world of customer communication is in the midst of a seismic shift. The era of the frustrating phone menu and the long, silent queue is finally giving way to a new paradigm of instant, intelligent, and conversational service. At the heart of this revolution is the AI voicebot, a technology that has rapidly evolved from a futuristic concept into a mission-critical tool for businesses of all sizes.

But as the hype around voice AI reaches a fever pitch, a new and complex challenge has emerged for business leaders and technical architects: choosing the right platform. The market is now a crowded and often confusing landscape of “all-in-one” builders, specialized AI models, and foundational infrastructure providers.

Making the right choice is a high-stakes decision that will define the quality of your customer experience, the flexibility of your technology stack, and the long-term cost of your operations.

This comparison guide is for you. We will dissect the current state of the voice AI software market, explore the key market trends that are shaping the future, and provide a clear framework for evaluating the different architectural approaches to your deployment.

What is the Core Philosophical Divide in the Voice AI Market?

To make an informed decision, you must first understand that the AI voicebot market is not a monolith. It is fundamentally split into two distinct philosophical and architectural approaches: the “All-in-One Platform” and the “Infrastructure-First” approach.

What is the “All-in-One Platform” Approach?

An all-in-one platform is a vertically integrated, bundled solution. It provides all the necessary components for building a voicebot in a single package: the telephony, the Speech-to-Text (STT), the AI “brain” (LLM), and the Text-to-Speech (TTS). These platforms are often designed with a user-friendly, low-code interface to accelerate development.

- The Analogy: Think of it as buying a pre-built, high-end computer from a major brand like Apple. You get a beautifully designed, perfectly integrated product that works flawlessly out of the box. It’s fast, convenient, and comes with a single point of contact for support.

- Top Players: This space includes enterprise-focused platforms like Google Cloud Dialogflow, Amazon Lex, and Cognigy.AI.

- The Trade-Off: The convenience of this approach comes at the cost of control and flexibility. You are locked into the manufacturer’s choice of components (their proprietary AI models), and your ability to customize or upgrade individual parts is limited.

What is the “Infrastructure-First” Approach?

The infrastructure-first approach is a more flexible, modular, and developer-centric model. In this philosophy, the provider focuses on perfecting one thing: the foundational voice infrastructure.

A platform like FreJun AI is a leader in this space. FreJun AI (FreJun Teler) provides the voice infrastructure so that you can focus on building your AI.

To extend the computer analogy, this is like building a custom PC. FreJun AI provides the state-of-the-art motherboard, the power supply, and the case, which together form the rock-solid, high-performance foundation. You, the developer, then choose the best-of-breed components on the market: the fastest processor (your LLM from OpenAI, Google, or Anthropic), the most accurate microphone (your STT), and the most immersive speakers (your TTS).
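The modular idea can be sketched in a few lines of Python. This is a minimal illustration, not FreJun AI's actual API: the `VoicePipeline` class, the three interfaces, and the stand-in components (`EchoSTT`, `CannedLLM`, `ShoutingLLM`, `BytesTTS`) are all hypothetical names invented for this example. In a real deployment each stand-in would wrap a vendor SDK.

```python
from dataclasses import dataclass
from typing import Protocol


class SpeechToText(Protocol):
    def transcribe(self, audio: bytes) -> str: ...


class LanguageModel(Protocol):
    def reply(self, transcript: str) -> str: ...


class TextToSpeech(Protocol):
    def synthesize(self, text: str) -> bytes: ...


@dataclass
class VoicePipeline:
    """One conversational turn: caller audio in, bot audio out."""
    stt: SpeechToText
    llm: LanguageModel
    tts: TextToSpeech

    def handle_turn(self, audio: bytes) -> bytes:
        transcript = self.stt.transcribe(audio)
        answer = self.llm.reply(transcript)
        return self.tts.synthesize(answer)


# Hypothetical stand-ins so the sketch runs without any vendor SDK.
class EchoSTT:
    def transcribe(self, audio: bytes) -> str:
        return audio.decode("utf-8")


class CannedLLM:
    def reply(self, transcript: str) -> str:
        return f"You said: {transcript}"


class ShoutingLLM:
    def reply(self, transcript: str) -> str:
        return transcript.upper()


class BytesTTS:
    def synthesize(self, text: str) -> bytes:
        return text.encode("utf-8")


pipeline = VoicePipeline(stt=EchoSTT(), llm=CannedLLM(), tts=BytesTTS())
first = pipeline.handle_turn(b"hello")

# Swapping the "processor" touches one field; STT and TTS stay as they are.
pipeline.llm = ShoutingLLM()
second = pipeline.handle_turn(b"hello")
```

Because the pipeline depends only on the three small interfaces, replacing one component does not require changes to the other two.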

What Are the Key Market Trends Shaping AI Voicebot Platforms in 2025?

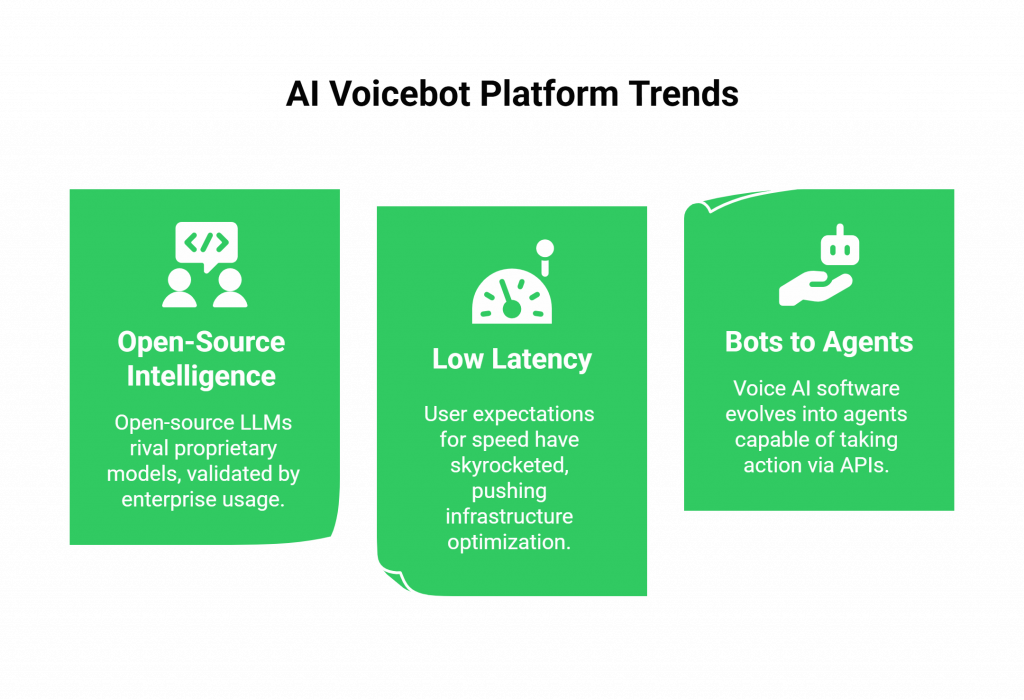

The market trends in conversational AI are moving at a blistering pace. Understanding these trends is crucial for making a future-proof decision.

- The Rise of Open-Source Intelligence: The performance of open-source LLMs (like Llama 3 and Gemma 2) is now rivaling that of the top proprietary models. A 2024 survey by Andreessen Horowitz found that a majority of enterprises are already using open-source models. This trend is a massive validation of the infrastructure-first, model-agnostic approach, as it gives developers the freedom to leverage these powerful and cost-effective open models.

- The Obsession with Low Latency: As bots become more human-like, user expectations for speed have skyrocketed. A sub-500-millisecond response time is the new benchmark for a natural-sounding conversation. This is pushing infrastructure providers to obsessively engineer their networks for the lowest possible latency.

- The Shift from “Bots” to “Agents”: The next generation of voice AI software is not just a Q&A machine; it’s an “agent” that can take action. The ability to call “tools” that connect to external APIs (to book an appointment, process a refund, or update a CRM) is now a must-have feature.
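To make the “agent” trend concrete, here is a minimal sketch of tool dispatch. It assumes the LLM emits a tool call as a JSON object with `name` and `arguments` keys, which is a common pattern but varies by provider. The registry, the `dispatch` function, and both handlers are hypothetical, and the handlers return canned data instead of calling a real scheduling or payments API.

```python
import json
from typing import Callable, Dict

ToolFn = Callable[..., dict]
TOOLS: Dict[str, ToolFn] = {}


def tool(name: str) -> Callable[[ToolFn], ToolFn]:
    """Register a function under the name the LLM will use to call it."""
    def register(fn: ToolFn) -> ToolFn:
        TOOLS[name] = fn
        return fn
    return register


@tool("book_appointment")
def book_appointment(date: str, time: str) -> dict:
    # A real handler would call a scheduling API here.
    return {"status": "booked", "date": date, "time": time}


@tool("process_refund")
def process_refund(order_id: str) -> dict:
    # A real handler would call a payments API here.
    return {"status": "refund_started", "order_id": order_id}


def dispatch(tool_call_json: str) -> dict:
    """Parse an assumed {"name": ..., "arguments": {...}} payload and run it."""
    call = json.loads(tool_call_json)
    name = call.get("name")
    handler = TOOLS.get(name)
    if handler is None:
        return {"status": "error", "reason": f"unknown tool: {name}"}
    return handler(**call.get("arguments", {}))


result = dispatch(
    '{"name": "book_appointment", '
    '"arguments": {"date": "2025-03-14", "time": "10:30"}}'
)
unknown = dispatch('{"name": "cancel_flight", "arguments": {}}')
```

The result of `dispatch` would normally be passed back to the LLM so that it can phrase a spoken confirmation for the caller.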

How Should You Compare the Different Deployment Architectures?

Your deployment choice is a long-term architectural commitment. Here’s a head-to-head comparison guide based on the criteria that matter most to an enterprise.

| Feature | All-in-One Platform (e.g., Dialogflow, Lex) | Infrastructure-First Platform (e.g., FreJun AI) |
| --- | --- | --- |
| AI Model Choice | Locked-In: You must use the platform’s proprietary STT, LLM, and TTS models. | Model-Agnostic Freedom: Complete freedom to choose the “best-of-breed” models from any provider (OpenAI, Google, Anthropic, Open Source, etc.). |
| Customization | Limited: You are constrained by the features and logic exposed by the platform’s UI and limited API. | Unlimited: You have full, granular programmatic control to build any custom logic, workflow, or persona. |
| Performance | Good: Generally good performance, but you have no control to optimize individual components for lower latency. | Optimizable: You can achieve the lowest possible latency by choosing the fastest AI models and building a highly optimized, streaming-first application. |
| Time-to-Market | Faster (for simple bots): The low-code interface allows for very rapid prototyping and deployment of simple use cases. | Slower (initially): Requires an initial investment in backend development to orchestrate the AI components. |
| Long-Term Cost | Higher at Scale: The per-minute or per-conversation pricing model can become very expensive for high-volume applications. | Lower at Scale: The ability to choose more cost-effective AI models and optimize your infrastructure leads to a much lower Total Cost of Ownership (TCO). |
| Developer Control | Low: The platform abstracts away most of the underlying control, which is a “black box.” | High: The developer has full control over the “glass box” architecture, enabling deep debugging and optimization. |

Ready to build a voice AI without the limitations of a locked-in platform? Sign up for FreJun AI and explore our API.

What is the Verdict for 2025?

The AI voicebot platform you choose should be a direct reflection of your business’s strategic goals and technical capabilities.

- Choose an All-in-One Platform If: Your primary goal is speed-to-market for a relatively simple use case, you have limited in-house development resources, and you are comfortable with the long-term trade-offs of vendor lock-in.

- Choose an Infrastructure-First Platform like FreJun AI If: You are building a strategic, mission-critical application, you want the freedom to use the most powerful and innovative AI models on the market, you demand the lowest possible latency, and you have a development team that wants to build a truly custom and differentiated experience.

The growing demand for a superior customer experience is a powerful argument for the flexibility of the infrastructure-first approach. A recent Salesforce report found that 80% of customers now say the experience a company provides is as important as its products. A custom, low-latency voice AI is one way to meet that expectation.

Conclusion

The landscape of voice AI software is more exciting and more accessible than ever before. The rise of powerful AI models and flexible infrastructure platforms has democratized the ability to build a world-class AI voicebot. The “best” platform is not a one-size-fits-all answer. It requires a careful evaluation of the trade-offs between the convenience of an all-in-one solution and the power of an infrastructure-first approach.

For the forward-thinking enterprise of 2025, the ability to adapt, customize, and innovate is the key to a lasting competitive advantage. A flexible, model-agnostic architecture is not just a technical choice; it’s a business strategy for the future.

Want to discuss how a model-agnostic infrastructure can future-proof your voice AI strategy? Schedule a demo for FreJun Teler!

Frequently Asked Questions (FAQs)

An AI voicebot is a conversational AI that uses a voice interface to communicate with users. It leverages technologies like Speech-to-Text, a Large Language Model, and Text-to-Speech to have natural, human-like conversations.

An all-in-one platform provides a bundled solution that includes the telephony and all the AI models. An infrastructure platform like FreJun AI specializes in providing the core voice and telephony layer, giving you the freedom to choose and integrate your own AI models.

“Model-agnostic” means the platform is not tied to a specific AI provider. It allows you, the developer, to choose your own “best-of-breed” STT, LLM, and TTS models from any company, preventing vendor lock-in.

Low latency is critical because humans are very sensitive to delays in a conversation. A long pause makes the AI feel slow and robotic. Ultra-low latency is essential for creating a fast, responsive, and natural-sounding conversational experience.
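One way to reason about latency is to treat the sub-500-millisecond benchmark cited in this article as a budget and add up each stage of a turn. The sketch below does that arithmetic. The stage names and every timing are hypothetical values chosen for illustration, not measurements of any provider.

```python
from typing import Dict, Tuple

TARGET_MS = 500  # benchmark cited in this article

# Hypothetical per-stage timings in milliseconds (illustrative only).
HYPOTHETICAL_STAGES_MS: Dict[str, int] = {
    "network_in": 40,
    "stt_final_transcript": 120,
    "llm_first_token": 180,
    "tts_first_audio": 90,
    "network_out": 40,
}


def budget_report(
    stage_ms: Dict[str, int], target_ms: int = TARGET_MS
) -> Tuple[int, int, str]:
    """Return (total latency, headroom against the target, slowest stage)."""
    total = sum(stage_ms.values())
    slowest = max(stage_ms, key=lambda name: stage_ms[name])
    return total, target_ms - total, slowest


total_ms, headroom_ms, slowest_stage = budget_report(HYPOTHETICAL_STAGES_MS)
print(f"total={total_ms} ms, headroom={headroom_ms} ms, slowest={slowest_stage}")
# prints: total=470 ms, headroom=30 ms, slowest=llm_first_token
```

With these assumed numbers the LLM's time to first token dominates the budget, which is why streaming responses and model choice tend to matter most when tuning for speed.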

The biggest market trend is the rise of powerful, open-source LLMs. This is shifting the power away from closed, proprietary ecosystems and giving developers more choice, control, and cost-effective options for their AI “brain.”

Building on an infrastructure-first platform requires an initial investment in backend development to orchestrate the different AI model APIs. However, for a skilled developer, a well-documented voice API can make this process surprisingly straightforward.

The choice of LLM depends on your use case. For complex reasoning and tool use, you might choose a large model like GPT-4o or Claude 3. For more straightforward conversations where speed is paramount, you might choose a smaller, faster model. A model-agnostic platform allows you to test several and choose the best one.

Yes, you can change AI models later, and this is a major advantage of a model-agnostic platform. If a new, more powerful LLM is released next year, you can swap it into your application without having to change your underlying voice infrastructure. This is a key part of a future-proof deployment strategy.

FreJun AI is a leading example of the infrastructure-first approach. We provide the high-performance, secure, and reliable voice layer that is the ideal foundation for businesses looking to build their own custom, “best-of-breed” voice AI software.

TCO is a financial estimate that includes not just the direct, upfront costs of a platform (like per-minute fees), but also the indirect, long-term costs, such as the cost of development, maintenance, and the potential business cost of being locked into less-effective technology.
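The TCO definition above can be turned into a small calculation. The sketch below is a simplified model under stated assumptions: the `CostModel` class is hypothetical, it covers only three cost components, and every number is made up for illustration. None of the figures reflect real pricing from any vendor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CostModel:
    """All amounts share one currency unit; every value here is hypothetical."""
    per_minute_fee: float       # direct usage cost per call minute
    upfront_dev_cost: float     # one-time build and integration cost
    monthly_maintenance: float  # ongoing engineering and operations cost

    def tco(self, minutes_per_month: int, months: int) -> float:
        usage = self.per_minute_fee * minutes_per_month * months
        upkeep = self.monthly_maintenance * months
        return self.upfront_dev_cost + upkeep + usage


# Made-up inputs; substitute real quotes before drawing any conclusion.
all_in_one = CostModel(
    per_minute_fee=0.10, upfront_dev_cost=5_000, monthly_maintenance=500
)
infra_first = CostModel(
    per_minute_fee=0.04, upfront_dev_cost=40_000, monthly_maintenance=2_000
)

for minutes in (10_000, 200_000):
    a = all_in_one.tco(minutes, months=24)
    b = infra_first.tco(minutes, months=24)
    cheaper = "all-in-one" if a < b else "infrastructure-first"
    print(f"{minutes:>7} min/month: all-in-one={a:,.0f} "
          f"infra-first={b:,.0f} cheaper={cheaper}")
```

Under these assumed inputs the all-in-one option is cheaper at low volume and the infrastructure-first option is cheaper at high volume. Where the crossover falls depends entirely on the fees and development costs you plug in.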