For the past few years, the world of automated voice has been in a state of rapid, foundational change. We have moved from the rigid, frustrating “press-one” menus of the IVR to the first generation of truly conversational voice bot solutions. This leap, powered by the rise of Large Language Models (LLMs), has been profound.

But it is just the beginning. The future of voice automation is not just an incremental improvement on what we have today; it is a story of a deeper, more seamless, and more intelligent integration of voice into the very fabric of our digital lives.

As we look towards the horizon, a clear set of powerful voicebot trends 2026 are emerging, and they are poised to once again redefine what is possible in human-to-machine communication.

These trends are not isolated innovations. They are a convergence of advancements in AI, in network architecture, and in our own expectations as users. They point towards a future where the line between a human agent and an AI agent becomes increasingly blurred, and where voice is no longer a separate channel, but a native, fluid, and ever-present interface to the digital world.

For any business, developer, or strategist looking to stay ahead of the curve, understanding these trends is not just a matter of curiosity; it is a matter of competitive survival.

Table of contents

- Trend 1: The Rise of the Hyper-Personalized, Proactive Voice Bot Solutions

- Trend 2: The Shift to Truly “Speech-to-Speech” and Low-Latency Interactions

- Trend 3: The Multi-Modal, Channel-Less Conversation

- The Role of the Voice Infrastructure in Voice Bot Solutions

- Conclusion

- Frequently Asked Questions (FAQs)

Trend 1: The Rise of the Hyper-Personalized, Proactive Voice Bot Solutions

The first generation of intelligent voicebots was largely reactive. They were very good at answering the questions that a customer asked. The next generation will be defined by its ability to be proactive, to anticipate a customer’s needs, and to initiate a conversation before the customer even realizes they have a problem.

From Reactive Answering to Proactive Assistance

- The Trend: AI voice automation is moving from an inbound, “pull” model to an outbound, “push” model. Deep integration between the voice bot solution and real-time business data streams powers this capability, including CRM, e-commerce, and logistics systems.

- What It Looks Like in 2026

- The Proactive Delivery Agent: Your e-commerce platform’s data shows that a customer’s high-value package is scheduled for delivery, but the driver’s GPS indicates a major traffic delay. Instead of waiting for the customer to call and ask, “Where is my order?”, the system automatically triggers a call from a voicebot. It says, “Hi, Sarah. This is a proactive alert about your delivery. I see your driver is facing an unexpected delay, and your new estimated delivery time is 3:30 PM. Does that still work for you, or would you like me to reschedule for tomorrow?”

- The Predictive Churn Intervention: Your customer success platform’s AI flags a high-value B2B customer as being at “high risk” of churn based on their declining product usage. This triggers a call from a specialized, high-empathy voicebot to check in, offer assistance, and proactively schedule a meeting with their human account manager.

Also Read: Why Use a Voice Recognition SDK for High Volume Audio Processing

Trend 2: The Shift to Truly “Speech-to-Speech” and Low-Latency Interactions

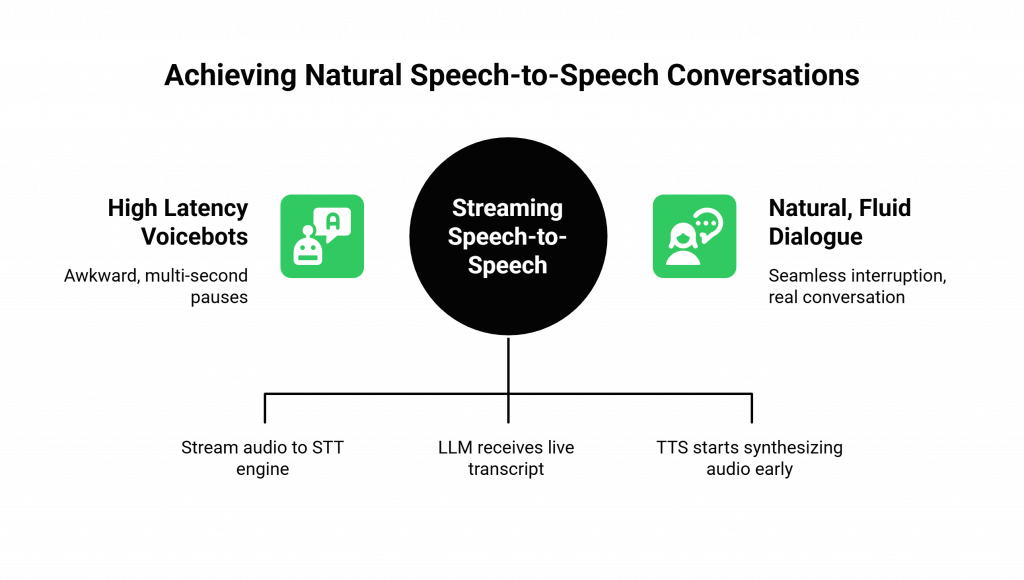

The future of voice automation is a story of a relentless war against latency. The awkward, multi-second pause of a first-generation voicebot is the single biggest barrier to a natural conversation.

From a “Walkie-Talkie” to a Fluid Dialogue

- The Trend: The architecture of voice AI is moving from a slow, sequential, “turn-by-turn” model to a high-speed, parallel, and fully streaming “speech-to-speech” model.

- What It Looks Like in 2026:

- Streaming Everything: The moment a user starts speaking, the audio is being streamed to the STT engine, which is transcribing in real-time. The LLM is receiving this live transcript and is formulating its response as the user is still talking. The TTS engine is then receiving the first part of the LLM’s response and is starting to synthesize the audio and stream it back to the user before the LLM has even finished “thinking.”

- Natural Interruption: This streaming architecture will make “barge-in” (the ability to interrupt the AI) a standard, seamless feature. The AI will be able to stop speaking the instant the user starts, creating a much more natural conversational rhythm.

- Why It Matters: This obsession with low latency is the key to escaping the “uncanny valley.” It is what will make talking to an AI feel less like operating a machine and more like having a real conversation.

Trend 3: The Multi-Modal, Channel-Less Conversation

One of the most powerful voicebot trends 2026 is the idea that “voice” is not just a phone call. It is a feature, an interface that can be embedded anywhere.

- The Trend: The silos between the phone call, the web chat, the mobile app, and even the in-car assistant are breaking down. The future is a single, unified conversation that can move seamlessly between these modalities.

- What It Looks Like in 2026:

- The “Handoff” from Chat to Voice: A user is chatting with an AI on a website. The issue becomes complex. The AI says, “This might be easier to resolve over the phone. Click this button, and I’ll call your number on file and we can continue this conversation right where we left off.”

- The “Show Me” Escalation: A user is on a voice call with an AI, trying to describe a problem with a physical product. The AI says, “I’m having a little trouble picturing that. I’ve just sent a secure link to your phone. If you tap it, it will open your camera, and you can show me the issue.”

Ready to build on a platform that is architected for these future trends? Sign up for FreJun AI, and explore our powerful, future-ready voice infrastructure.

This table provides a summary of these key future trends.

| The Trend | The Core Technology Driving It | The Impact on the User Experience |

| Hyper-Personalized & Proactive | Deep integration of the voice platform with real-time business data (CRM, etc.). | The AI feels omniscient and helpful, anticipating needs and solving problems before they happen. |

| Truly “Speech-to-Speech” & Low-Latency | Globally distributed, edge-native voice infrastructure and fully streaming AI pipelines. | The conversation feels instantaneous, natural, and fluid, with no awkward, robotic pauses. |

| Multi-Modal & Channel-Less | A unified, API-first communication platform that can manage voice, video, and text in a single session. | The user can move seamlessly between different modes of communication without ever losing context. |

Also Read: Modern Voice Recognition SDK Supporting Multilingual Apps

The Role of the Voice Infrastructure in Voice Bot Solutions

It is tempting to think that these trends are all about the AI models. But the most brilliant AI in the world is useless without a powerful “nervous system” to connect it to the world. The underlying voice platform is the non-negotiable foundation.

A platform that is built for the future of voice automation must be:

- Radically Developer-First and API-Driven: The future is built on code. The platform must be fully programmable, allowing developers to create these complex, integrated, and multi-modal workflows.

- Globally Distributed and Edge-Native: The war against latency can only be won at the edge. The platform must have a global network of servers to process audio as close to the user as possible.

- Completely Model-Agnostic: The world of AI is too big and is moving too fast for any single company to own. A future-ready voice api, like the one from FreJun AI, must be a flexible bridge, allowing a business to always integrate the best-in-class AI models from any provider on the market. Around 70% of AI-adopting companies are using a mix of third-party and proprietary AI models.

This is our core architectural philosophy at FreJun AI. We handle the complex voice infrastructure so you can focus on building the intelligent, future-ready AI.

Also Read: How Does a Voice Recognition SDK Improve AI Driven Interactions

Conclusion

The future of voice automation is a story of a deeper and more seamless integration into our lives. We are moving beyond the simple, transactional voice bot solutions of today and into a new era of proactive, intelligent, and truly conversational partners.

The voicebot trends 2026 point to a world where our interactions with AI are not just more efficient, but more natural, more helpful, and more human than ever before.

For businesses and developers building this future, the strategic imperative is clear: choose a foundational voice platform built for today’s challenges and architected for tomorrow’s transformative possibilities.

Want to discuss your long-term voice roadmap and see how our platform supports the future of AI? Schedule a demo with our team at FreJun Teler.

Also Read: CRM Call Center Security: Protecting Customer Data & Call Logs

Frequently Asked Questions (FAQs)

The most significant trend is the shift from reactive to proactive, hyper-personalized engagement, where the voicebot initiates contact based on real-time data and anticipated customer needs.

The future of voice automation is about creating a seamless, omnichannel experience where a single AI can maintain a continuous, context-aware conversation across voice, chat, and other channels.

A “speech-to-speech” model is an architectural approach that uses a fully streaming pipeline to dramatically reduce the latency between a user speaking and the AI responding, creating a more natural conversation.

They will become proactive through deep integration with a business’s real-time data streams (like a CRM), allowing them to automatically trigger a call based on a business event.

A multi-modal conversation is one that can seamlessly move between different modes of communication, such as escalating from a voice call to a video call to “show” a problem.

It is important because the world of AI is moving very fast. A model-agnostic platform gives you the freedom to always use the best-in-class AI models from any provider.

They will handle interruptions seamlessly. A fully streaming architecture allows the AI to detect the instant a user starts speaking and to immediately stop its own response.