Customer experience is no longer tied to a single channel. Instead, users move between voice calls, chat, apps, and emails while expecting the conversation to continue without friction. Because of this shift, businesses are now rethinking how they design customer interactions.

At the center of this change are voice bots that are not limited to call handling but are deeply connected to the broader omnichannel experience. When designed correctly, voice bots can become a layer that unifies conversations across channels rather than fragmenting them.

This article explains how voice bots improve customer experience across channels, from a technical and product perspective, with a clear focus on real implementation patterns.

Why Is Customer Experience Shifting Toward Voice Across Channels?

For years, chat interfaces dominated digital customer support. However, voice has re-emerged as a critical channel. This is not because chat failed, but because chat alone cannot solve every customer problem.

Research shows that omnichannel customer support reaches a CSAT of 67%, compared with just 28% for disconnected service models, which underscores why unified voice and digital experiences are critical for modern CX strategies.

Several factors are driving this shift:

- Customers want faster resolution, not longer text exchanges

- Complex issues are easier to explain through speech

- Voice feels more natural during urgent or emotional situations

- Mobile and hands-free use cases are increasing

At the same time, customers do not think in terms of channels. Instead, they expect a single continuous experience. For example, a user may start with chat, move to a call, and then expect follow-up messages to reflect the same context.

Because of this, improving CX with AI calls is no longer about replacing agents. Rather, it is about connecting voice with the rest of the customer journey.

What Do We Really Mean By Building Voice Bots Today?

Traditionally, voice bots were tightly linked to IVR systems. However, modern voice bots are fundamentally different.

Today, building voice bots means creating real-time conversational systems powered by AI. These systems are designed to listen, understand, reason, and respond in natural language.

A modern voice bot is not a single model. Instead, it is a pipeline of components, each with a clear role.

Core Components Of A Modern Voice Bot

- Speech-To-Text (STT): Converts live audio into text with low delay

- Language Understanding Using LLMs: Interprets intent, context, and meaning beyond keywords

- Conversation State Management: Tracks what has already happened in the conversation

- Retrieval-Augmented Generation (RAG): Fetches correct data from internal systems or knowledge bases

- Tool Calling: Performs actions such as ticket creation, order lookup, or scheduling

- Text-To-Speech (TTS): Converts responses back into natural, human-like audio

Because all these components work together, voice bots today behave more like AI agents than scripted call flows.
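As a rough illustration of how these components chain together within a single conversational turn, the sketch below wires a stand-in for each stage into one pipeline. Every name here (`VoiceBotPipeline`, `fake_stt`, the keyword-based intent check, the `KNOWLEDGE` dictionary) is a hypothetical placeholder invented for this example, not a real STT, LLM, or TTS API. In a real system each stub would wrap a vendor SDK and the stages would stream rather than run once per turn.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ConversationState:
    """Conversation state management: what has already been said."""
    turns: list = field(default_factory=list)  # (user_text, bot_reply) pairs


@dataclass
class VoiceBotPipeline:
    stt: Callable[[bytes], str]                          # Speech-To-Text
    understand: Callable[[str, ConversationState], str]  # LLM-style intent detection
    retrieve: Callable[[str], dict]                      # RAG lookup
    act: Callable[[str, dict], str]                      # tool calling
    tts: Callable[[str], bytes]                          # Text-To-Speech
    state: ConversationState = field(default_factory=ConversationState)

    def handle_turn(self, audio: bytes) -> bytes:
        text = self.stt(audio)
        intent = self.understand(text, self.state)
        facts = self.retrieve(intent)
        reply = self.act(intent, facts)
        self.state.turns.append((text, reply))
        return self.tts(reply)


# Hypothetical stubs: "audio" is just UTF-8 text so the example runs offline.
def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_understand(text: str, state: ConversationState) -> str:
    return "order_status" if "order" in text.lower() else "unknown"


KNOWLEDGE = {"order_status": {"status": "shipped"}}  # illustrative data only


def fake_retrieve(intent: str) -> dict:
    return KNOWLEDGE.get(intent, {})


def fake_act(intent: str, facts: dict) -> str:
    if intent == "order_status":
        return f"Your order has {facts['status']}."
    return "Could you tell me a bit more?"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


bot = VoiceBotPipeline(fake_stt, fake_understand, fake_retrieve, fake_act, fake_tts)
reply_audio = bot.handle_turn(b"Where is my order?")
```

Keeping each stage behind a plain callable is one possible design choice; it makes the order of the stages explicit and lets any one of them be replaced independently.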

How Do Voice Bots Fit Into An Omnichannel Customer Experience?

Voice bots become truly valuable when they are designed as part of omnichannel voice automation, not as standalone call handlers.

In an omnichannel setup:

- Voice, chat, and messaging share the same customer context

- Conversations can move across channels without restarting

- Customer intent is preserved regardless of entry point

For example:

- A customer chats with support, then switches to a call

- The voice bot already knows the issue, history, and intent

- The call continues instead of starting from zero

This approach enables unified customer journeys with voice AI, where voice acts as one interface among many, not an isolated system.

As a result, voice bots stop being a cost center and start becoming a CX accelerator.

How Do Voice Bots Improve Customer Experience Compared To Traditional Call Systems?

Traditional call systems focus on routing. Voice bots focus on understanding.

This difference directly impacts customer experience.

Key Improvements Enabled By Voice Bots

- No rigid menus: Customers speak naturally instead of pressing keys

- Faster issue resolution: AI understands intent early and responds immediately

- Higher first-contact resolution: Bots can resolve an issue or route it correctly without multiple transfers

- Consistent responses: Customers receive the same accurate information every time

- Reduced wait times: Voice bots can answer many calls in parallel, so callers queue less than in human-only setups

Because of this, improving CX with AI calls is less about automation volume and more about conversation quality.

What Is The Technical Architecture Behind A Modern Voice Bot?

To understand why voice bots improve CX, it is important to understand how they actually work.

Below is a simplified but accurate architecture flow.

Voice Bot Architecture Flow

- Live Audio Capture: The system captures incoming audio in real time

- Streaming Speech-To-Text: Audio is converted into text continuously, not in batches

- Intent And Context Processing: The LLM evaluates:

  - What the user wants

  - What has already been discussed

  - What action is required

- Knowledge Retrieval (RAG): Relevant data is fetched from internal systems

- Tool Execution: APIs or backend services are triggered if needed

- Response Generation: The AI prepares a response in text form

- Text-To-Speech Streaming: Audio is generated and streamed back as it is produced, with minimal delay

Because this pipeline runs continuously, latency and reliability become critical. Even a small delay can break conversational flow and harm CX.
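One common way to keep that delay small is to start speech synthesis before the LLM has finished its full response. The sketch below shows the idea under simple assumptions: the token list stands in for a streaming LLM, and `sentences_from_tokens` is a hypothetical helper that emits each sentence as soon as it is complete, so a TTS engine could begin speaking the first sentence while later ones are still being generated.

```python
from typing import Iterable, Iterator

SENTENCE_ENDINGS = ".!?"


def sentences_from_tokens(tokens: Iterable[str]) -> Iterator[str]:
    """Yield complete sentences as soon as they appear in a token stream."""
    buffer = ""
    for token in tokens:
        buffer += token
        while True:
            # Index of the first sentence-ending character, or -1 if none yet.
            cut = next(
                (i for i, ch in enumerate(buffer) if ch in SENTENCE_ENDINGS), -1
            )
            if cut == -1:
                break
            sentence, buffer = buffer[: cut + 1].strip(), buffer[cut + 1 :]
            if sentence:
                yield sentence
    # Flush whatever is left when the stream ends without punctuation.
    if buffer.strip():
        yield buffer.strip()


# Simulated LLM token stream (illustrative text only).
llm_tokens = ["Your order ", "shipped. It ", "arrives", " Friday."]
spoken = list(sentences_from_tokens(llm_tokens))
```

This naive splitter would also break on decimals or abbreviations such as "3.5" or "Dr.", so a production system would need a more careful boundary rule.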

Why Is Real-Time Media Streaming Critical For Voice Bot Experience?

Voice conversations are sensitive to delays. Unlike chat, silence in a call feels awkward and unnatural.

For this reason, voice bots must operate on real-time media streaming, not request-response cycles.

Why Real-Time Streaming Matters

- Reduces gaps between user speech and AI response

- Enables interruptions and corrections (barge-in)

- Preserves conversational rhythm

- Feels closer to human dialogue

Without real-time streaming:

- Responses feel robotic

- Customers interrupt or hang up

- CX scores drop quickly

Therefore, voice bot success depends not only on AI models, but also on the underlying voice infrastructure.
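To make barge-in more concrete, here is a minimal sketch using Python's `asyncio`. It assumes a hypothetical setup in which bot audio is sent in small chunks and a separate task flags when the caller starts speaking. The functions `play_response` and `caller_interrupts` are illustrative names, and the simulated caller replaces what would be real voice activity detection on the inbound audio stream.

```python
import asyncio


async def play_response(chunks, played, barge_in):
    """Send TTS chunks to the caller, stopping once barge-in is flagged."""
    for chunk in chunks:
        if barge_in.is_set():
            return False  # caller interrupted; stop speaking
        played.append(chunk)
        await asyncio.sleep(0)  # yield control, as real playback would between chunks
    return True


async def caller_interrupts(after_chunks, played, barge_in):
    """Simulated caller who starts talking after `after_chunks` chunks were played."""
    while len(played) < after_chunks:
        await asyncio.sleep(0)
    barge_in.set()


async def demo():
    barge_in = asyncio.Event()
    played = []
    chunks = ["Your order", "shipped on", "Monday and", "should arrive", "by Friday."]
    finished, _ = await asyncio.gather(
        play_response(chunks, played, barge_in),
        caller_interrupts(2, played, barge_in),
    )
    return finished, played


finished, played = asyncio.run(demo())
```

Because playback checks the flag before every chunk, the bot stops within one chunk of the interruption. That is only possible when audio is streamed in small pieces rather than delivered as one complete response.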

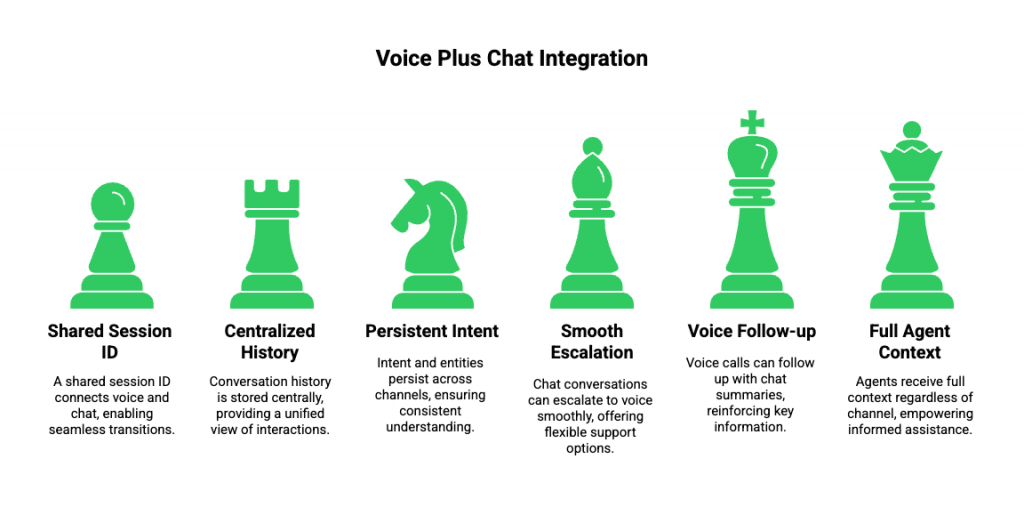

How Do Voice Bots Enable Voice Plus Chat Integration?

One major advantage of modern voice bots is their ability to share context with chat systems.

This is where voice plus chat integration becomes a key CX differentiator.

How Context Sharing Works

- A shared session ID connects voice and chat

- Conversation history is stored centrally

- Intent and entities persist across channels

As a result:

- Chat conversations can escalate to voice smoothly

- Voice calls can follow up with chat summaries

- Agents receive full context regardless of channel

This type of multichannel conversational design removes friction and builds trust with users.
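The context-sharing idea can be sketched with a small in-memory store keyed by session ID. This is an assumption-heavy illustration: `ContextStore`, `SessionContext`, and the `sess-42` identifier are hypothetical, and a production system would typically back this with a database or cache shared by the chat and voice services.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SessionContext:
    session_id: str
    intent: Optional[str] = None
    entities: dict = field(default_factory=dict)
    history: list = field(default_factory=list)  # (channel, speaker, text)


class ContextStore:
    """In-memory stand-in for a central conversation store."""

    def __init__(self) -> None:
        self._sessions: dict = {}

    def get(self, session_id: str) -> SessionContext:
        return self._sessions.setdefault(session_id, SessionContext(session_id))

    def record(self, session_id: str, channel: str, speaker: str, text: str) -> None:
        self.get(session_id).history.append((channel, speaker, text))


store = ContextStore()

# The chat channel captures the issue, intent, and entities.
store.record("sess-42", "chat", "customer", "My router keeps rebooting.")
chat_ctx = store.get("sess-42")
chat_ctx.intent = "router_fault"
chat_ctx.entities["device"] = "router"

# Later, the voice channel loads the same session instead of starting from zero.
voice_ctx = store.get("sess-42")
greeting = f"I see you contacted us about your {voice_ctx.entities['device']}."
store.record("sess-42", "voice", "bot", greeting)
```

The key design point is that neither channel owns the context. Both read and write the same record, so intent and entities survive a switch in either direction.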

What Are The Biggest Challenges In Building Voice Bots At Scale?

While the benefits are clear, building voice bots is not trivial.

Teams often face these challenges:

- Latency control across STT, LLM, and TTS

- Handling noisy audio and accents

- Maintaining long conversation context

- Scaling concurrent real-time sessions

- Integrating with existing business systems

Because of these challenges, many early voice bot projects fail to deliver strong CX results.

However, when these issues are addressed correctly, voice bots become a stable and scalable CX layer across channels.
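As one small example of addressing the concurrency challenge, the sketch below caps the number of simultaneous sessions with an `asyncio.Semaphore` so that a burst of calls queues instead of overloading downstream STT, LLM, or TTS services. The function `run_sessions` and the numbers used are assumptions for illustration; the `asyncio.sleep(0)` call is a placeholder for the real per-call processing loop.

```python
import asyncio


async def run_sessions(total_calls: int, max_concurrent: int):
    """Run `total_calls` simulated sessions, never more than `max_concurrent` at once."""
    limiter = asyncio.Semaphore(max_concurrent)
    active = 0
    peak = 0
    completed = 0

    async def handle_call(call_id: int) -> None:
        nonlocal active, peak, completed
        async with limiter:
            active += 1
            peak = max(peak, active)
            await asyncio.sleep(0)  # placeholder for the real STT -> LLM -> TTS loop
            active -= 1
            completed += 1

    await asyncio.gather(*(handle_call(i) for i in range(total_calls)))
    return peak, completed


peak, completed = asyncio.run(run_sessions(total_calls=10, max_concurrent=3))
```

Tracking the peak alongside the completed count is a simple way to confirm in a load test that the cap is respected while every call is still served.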

Why Do Voice Bots Fail Without The Right Infrastructure?

At first glance, building a voice bot may seem straightforward. After all, many teams already use LLMs, STT, and TTS. However, voice introduces constraints that text-based systems do not.

The most common failure points are not model-related. Instead, they are infrastructure-related.

Key Infrastructure Gaps That Impact CX

- High latency between components: Delays between STT, LLM, and TTS break conversational flow

- Unstable audio streaming: Dropped frames and jitter lead to incomplete responses

- Poor session state handling: Context gets lost during long or multi-turn calls

- Limited concurrency support: Systems fail under real-world call volumes

Because of these issues, many voice bots sound intelligent in demos but fail in real customer interactions. As a result, CX suffers even when AI quality is high.

This is where the difference between telephony-first platforms and AI-native voice systems becomes clear.
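To make one of these gaps concrete, consider unstable audio streaming. Network frames can arrive out of order or not at all, and a small jitter buffer is a common way to smooth this out. The sketch below is a simplified illustration, not how any particular platform implements it: `JitterBuffer` and its `max_pending` parameter are hypothetical, frames are identified by a sequence number, and a missing frame is skipped once too many later frames are waiting behind it.

```python
class JitterBuffer:
    """Reorders audio frames by sequence number and skips frames that never arrive."""

    def __init__(self, max_pending: int = 2) -> None:
        self.max_pending = max_pending
        self._pending: dict = {}  # seq -> frame, waiting for earlier frames
        self._next_seq = 0        # next sequence number to release

    def push(self, seq: int, frame: bytes) -> list:
        """Accept one frame and return any frames now ready for playback, in order."""
        if seq < self._next_seq or seq in self._pending:
            return []  # too late or duplicate: drop it
        self._pending[seq] = frame
        if len(self._pending) > self.max_pending:
            # Waited long enough: treat the gap as lost and move on.
            self._next_seq = min(self._pending)
        ready = []
        while self._next_seq in self._pending:
            ready.append(self._pending.pop(self._next_seq))
            self._next_seq += 1
        return ready


buf = JitterBuffer(max_pending=2)
arrivals = [(0, b"a"), (2, b"c"), (1, b"b"), (4, b"e"), (5, b"f"), (6, b"g"), (3, b"d")]
out = []
for seq, frame in arrivals:
    out.extend(buf.push(seq, frame))
```

In this run, frame 1 arrives late but in time to be reordered, while frame 3 arrives after the buffer has given up on it and is dropped. The trade-off is the usual one: a deeper buffer tolerates more jitter but adds delay before audio is played.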

How Does Infrastructure Shape Omnichannel Voice Automation?

Omnichannel voice automation depends on one core requirement: reliable, real-time transport of voice and context.

Unlike chat, voice requires:

- Continuous audio streaming

- Tight latency guarantees

- Bidirectional communication

- Immediate interruption handling

If the infrastructure cannot support this, even the best AI models cannot deliver a good experience.

What Omnichannel Voice Automation Needs

- Persistent session connections

- Real-time media pipelines

- API-level control over call flows

- Seamless handoff to other channels

When these elements are present, voice bots stop acting as isolated tools. Instead, they become connectors between channels, enabling unified customer journeys with voice AI.

How Does FreJun Teler Enable AI-Native Voice Bots?

FreJun Teler is built specifically to address the infrastructure gaps that limit voice bot performance.

Rather than focusing on call routing or traditional telephony features, Teler functions as a global voice infrastructure layer for AI agents and LLMs.

What FreJun Teler Provides At A Technical Level

- Real-Time Bidirectional Audio Streaming: Designed for low-latency, continuous voice interactions

- Model-Agnostic Integration: Works with any LLM, STT, or TTS stack without lock-in

- Stable Transport Layer: Maintains session continuity for long and complex conversations

- Developer-Controlled Architecture: Your application owns dialogue logic, context, and tools

- Cloud Telephony And VoIP Compatibility: Operates across PSTN, SIP, and cloud networks

In simple terms, you build the intelligence, and Teler ensures that voice moves smoothly between the user and your AI.

Because of this separation, engineering teams can focus on conversational design and CX, rather than managing voice complexity.

How Can Teams Implement Voice Bots Using Teler And Any LLM Stack?

Once the infrastructure layer is in place, implementation becomes more predictable and scalable.

Below is a high-level implementation flow that many engineering teams follow.

Reference Implementation Flow

- Inbound Or Outbound Call Starts: Audio is captured in real time

- Audio Is Streamed To STT: Speech is converted into text continuously

- Text Is Sent To LLM: The LLM processes intent, context, and next steps

- RAG Retrieves Business Knowledge: Internal data is injected when required

- Tool Calls Execute Actions: CRM updates, ticket creation, or scheduling

- Response Is Converted To Speech: TTS generates natural audio

- Audio Is Streamed Back To Caller: The loop continues with minimal delay

Because the voice layer is abstracted, teams can swap models, upgrade AI logic, or extend functionality without touching telephony logic.

This flexibility is critical for long-term CX improvements.
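The model-swapping point can be sketched with a small interface. In the example below, the call loop depends only on a `LanguageModel` protocol, so changing models does not change the loop. All names here (`LanguageModel`, `EchoModel`, `CannedModel`, `run_call`) are hypothetical and do not represent Teler's API or any vendor SDK; the transcripts stand in for the output of a streaming STT stage.

```python
from typing import Iterable, Iterator, Protocol


class LanguageModel(Protocol):
    """Anything with a `reply` method can drive the conversation."""

    def reply(self, user_text: str) -> str: ...


class EchoModel:
    def reply(self, user_text: str) -> str:
        return f"You said: {user_text}"


class CannedModel:
    def reply(self, user_text: str) -> str:
        return "I can help with that."


def run_call(transcripts: Iterable[str], llm: LanguageModel) -> Iterator[str]:
    """Call loop that knows nothing about which model sits behind `llm`."""
    for user_text in transcripts:
        yield llm.reply(user_text)


# Same loop, two different models.
first = list(run_call(["hi"], EchoModel()))
second = list(run_call(["hi"], CannedModel()))
```

The same pattern would apply to STT and TTS: define a narrow interface per stage and keep the voice transport unaware of what implements it.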

How Do Voice Bots Improve CX Across Real Business Use Cases?

The technical benefits of voice bots become most clear when applied to real customer scenarios.

Intelligent Inbound Support

- Customers explain issues naturally

- Voice bots understand intent without menus

- Common issues are resolved instantly

- Complex cases are routed with full context

CX impact: Faster resolution and lower frustration.

Proactive Outbound Engagement

- Appointment reminders

- Payment follow-ups

- Feedback collection

Instead of scripted calls, voice bots conduct natural conversations.

CX impact: Higher engagement and better response rates.

Voice And Chat Continuity

- Chat escalates to voice when needed

- Voice calls trigger chat follow-ups

- Context flows across channels

CX impact: Customers do not repeat themselves.

Multilingual Customer Interactions

- LLM-driven understanding

- Language-specific TTS output

CX impact: Global support without large agent teams.

How Should Founders And Engineering Leads Evaluate Voice Bot Solutions?

Before investing in voice bots, teams should evaluate systems beyond surface features.

Key Questions To Ask

- Does the system support real-time streaming end to end?

- Can we bring our own LLM and AI stack?

- How is conversational context maintained?

- Can voice integrate with chat and CRM?

- Will this scale under production load?

Founders and product leaders who focus on these questions tend to build systems that last, rather than short-lived pilots.

How Will Voice Bots Shape The Future Of Customer Experience?

Voice bots are no longer experimental. They are becoming a core interface for customer interaction.

Looking ahead:

- Voice will act as the fastest path to resolution

- AI agents will handle the first layer of CX

- Human agents will focus on edge cases

- Channels will merge around shared context

In this future, customer experience is not defined by channels. Instead, it is defined by continuity, speed, and clarity.

Building voice bots with the right architecture makes this future achievable today.

Final Thought

Voice bots are no longer limited to handling calls or reducing support load. When built using modern AI architectures, they become a unifying layer that connects voice, chat, and digital experiences into a single customer journey. By combining real-time speech processing, LLM-driven reasoning, and shared conversational context, businesses can deliver faster, more consistent, and more natural interactions across channels. However, achieving this requires more than choosing the right AI models. It depends on reliable voice infrastructure designed for low latency, scalability, and developer control.

FreJun Teler enables teams to build production-grade voice bots by acting as the real-time voice interface for any LLM or AI agent.

FAQs

1. What is a voice bot in simple terms?

A voice bot is an AI-powered system that understands speech, reasons using language models, and responds naturally over calls.

2. How are modern voice bots different from IVRs?

Modern voice bots understand natural language, manage context, and perform actions instead of following fixed menu trees.

3. Can voice bots work with existing chat systems?

Yes, voice bots can share context with chat systems to create seamless voice plus chat experiences.

4. Do voice bots require a specific LLM?

No, modern architectures support any LLM, allowing teams to choose models that fit their needs.

5. How do voice bots improve customer experience?

They reduce wait times, avoid repeated explanations, and deliver faster, more consistent responses across channels.

6. What role does real-time streaming play in voice bots?

Real-time streaming ensures low latency, natural conversations, and the ability to handle interruptions smoothly.

7. Can voice bots scale for high call volumes?

Yes, with the right infrastructure, voice bots can handle thousands of concurrent conversations reliably.

8. Are voice bots secure for enterprise use?

When designed correctly, they support encryption, access controls, and secure integrations with enterprise systems.

9. Can voice bots trigger backend actions?

Yes, voice bots can call tools and APIs to update CRMs, create tickets, or schedule tasks.

10. How long does it take to implement a voice bot?

With proper infrastructure, teams can deploy functional voice bots in days rather than months.