Voice is becoming the default interface for modern applications. However, as products expand globally, supporting voice across languages, accents, and regions introduces new technical challenges. A modern voice recognition SDK must do more than convert speech to text. It must support multilingual conversations, real-time processing, and seamless integration with AI systems. This is especially critical for teams building voice agents that rely on LLMs, speech models, and live telephony.

In this blog, we explore how modern voice recognition SDKs support multilingual apps, what technical components are required, and why infrastructure plays a central role in scaling reliable, global voice experiences.

Why Do Modern Apps Need A Multilingual Voice Recognition SDK?

Voice has moved from being an optional feature to becoming a primary interface for digital products. Today, users expect to speak naturally with applications, just as they would with another person. However, meeting this expectation becomes much harder when products expand across regions, languages, and cultures.

According to market research, the global speech and voice recognition industry is expected to more than double, from around $9.66 billion in 2025 to $23.11 billion by 2030, a compound annual growth rate (CAGR) of about 19.1%. This highlights the rapid adoption of voice interfaces globally.

As businesses scale globally, voice systems must handle:

- Multiple languages

- Regional accents

- Dialect variations

- Mixed-language conversations

Because of this, a basic voice interface is no longer enough. Instead, modern products require a voice recognition SDK that is designed for multilingual and cross-region usage.

Moreover, global voice apps are no longer limited to call centers. They are now used in:

- AI customer support

- Virtual assistants

- Voice-enabled SaaS platforms

- Healthcare and fintech applications

- Logistics and operations tools

Therefore, the real challenge is not adding voice, but adding reliable multilingual voice recognition that works consistently across regions.

What Is A Modern Voice Recognition SDK, And How Is It Different From Traditional STT APIs?

At first glance, many teams assume that a voice recognition SDK is simply a speech-to-text (STT) API. However, this assumption often leads to design failures later in production.

Traditional STT APIs Focus On Transcription Only

Legacy STT systems were built primarily for:

- Post-call transcription

- Single-language input

- Batch audio processing

As a result, they struggle with real-time voice applications.

A Modern Voice Recognition SDK Is Built For Conversations

In contrast, a modern voice recognition SDK supports live, interactive experiences. It acts as part of a larger conversational pipeline rather than a standalone service.

Key differences include:

| Capability | Traditional STT APIs | Modern Voice Recognition SDK |
|---|---|---|
| Audio processing | Batch-based | Real-time streaming |
| Language handling | Single or fixed | Multilingual & dynamic |
| Latency | High | Low and predictable |
| Context awareness | None | Session-aware |
| AI integration | Limited | AI-native |

Because of these differences, a modern SDK becomes the foundation for language recognition AI in real-world products.

How Does Language Recognition AI Work In Multilingual Voice Applications?

Language recognition AI is responsible for identifying which language is being spoken, even before full transcription occurs. This step is critical in multilingual environments.

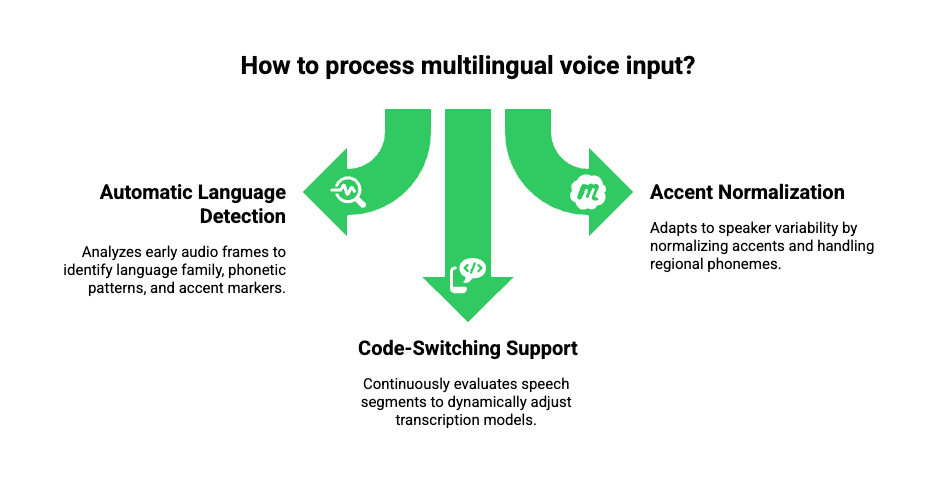

Automatic Language Detection

Instead of forcing users to select a language, modern systems analyze early audio frames to detect:

- Language family

- Phonetic patterns

- Accent markers

This allows the system to route audio to the STT model best suited to that language.
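A minimal sketch of early-window detection is below, assuming a hypothetical per-frame classifier that outputs a probability for each candidate language. It averages those scores over the first few frames, commits only when one language is clearly ahead, and otherwise falls back to a general multilingual model. The function names, model names, frame count, and threshold are illustrative assumptions, not any SDK's API.

```python
from collections import defaultdict
from typing import Dict, Iterable, Optional, Tuple

def detect_language(
    frame_scores: Iterable[Dict[str, float]],
    min_frames: int = 5,
    threshold: float = 0.7,
) -> Tuple[Optional[str], float]:
    """Return (language, confidence) once early frames agree, else (None, best confidence).

    Each item in frame_scores maps language codes to probabilities for one audio
    frame (assumed output of a hypothetical language-ID classifier).
    """
    totals: Dict[str, float] = defaultdict(float)
    count = 0
    best_conf = 0.0
    for scores in frame_scores:
        count += 1
        for lang, p in scores.items():
            totals[lang] += p
        if not totals:
            continue  # no scores yet for any language
        best_lang, total = max(totals.items(), key=lambda kv: kv[1])
        best_conf = total / count  # running average over frames seen so far
        # Require a minimum window so one noisy frame cannot decide the language
        if count >= min_frames and best_conf >= threshold:
            return best_lang, best_conf
    return None, best_conf

# Hypothetical routing table from language code to STT model name
STT_MODELS = {"en": "stt-en", "es": "stt-es", "hi": "stt-hi"}

def route(frame_scores: Iterable[Dict[str, float]], default: str = "stt-multilingual") -> str:
    lang, _ = detect_language(frame_scores)
    return STT_MODELS.get(lang, default) if lang else default
```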

Handling Accents And Dialects

Even within the same language, pronunciation can vary widely. Therefore, language recognition AI must:

- Normalize accents

- Handle regional phonemes

- Adapt to speaker variability

For example, English spoken in India, the US, and the UK differs significantly in pronunciation. Without accent normalization, transcription accuracy can drop sharply.
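Accent handling itself happens inside the speech models, but the surrounding application often has to pick the right regional model. The sketch below assumes BCP 47-style locale tags in canonical case (such as `en-IN`) and a hypothetical catalogue of deployed models; it tries the most specific tag first and falls back to broader ones.

```python
from typing import Dict, Iterator

# Hypothetical catalogue of locale-specific STT models a deployment has available
AVAILABLE_MODELS: Dict[str, str] = {
    "en-US": "stt-en-us",
    "en-GB": "stt-en-gb",
    "en": "stt-en-generic",
    "hi-IN": "stt-hi-in",
}

def candidate_locales(locale: str) -> Iterator[str]:
    """Yield progressively broader tags, e.g. 'en-IN' -> 'en-IN', 'en'."""
    parts = locale.split("-")
    for i in range(len(parts), 0, -1):
        yield "-".join(parts[:i])

def select_model(locale: str, default: str = "stt-multilingual") -> str:
    # Prefer the most region-specific model, then the base language, then a default
    for tag in candidate_locales(locale):
        if tag in AVAILABLE_MODELS:
            return AVAILABLE_MODELS[tag]
    return default
```

With this catalogue, an Indian English caller (`en-IN`) falls back to the generic English model until a dedicated `en-IN` model is added.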

Supporting Code-Switching

In many regions, users switch languages mid-sentence. This is common in:

- Hinglish

- Spanglish

- Arabic-English mixes

Modern language recognition AI continuously evaluates speech segments. As a result, it can dynamically adjust transcription models without breaking conversational flow.
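One simple way to adjust models per segment without flapping on a single borrowed word is hysteresis: only switch after several consecutive segments agree. This is a sketch under assumptions; the per-segment language labels are assumed to come from a hypothetical detector, `LanguageTracker` and the `patience` value are illustrative, and some systems instead use a single model trained on code-switched speech.

```python
class LanguageTracker:
    """Tracks the active language across speech segments with hysteresis.

    A switch happens only after `patience` consecutive segments agree on a new
    language, so one borrowed English word in a Hindi sentence does not flip models.
    """

    def __init__(self, initial: str, patience: int = 2) -> None:
        self.active = initial
        self.patience = patience
        self._candidate = None
        self._streak = 0

    def update(self, segment_language: str) -> str:
        if segment_language == self.active:
            # Back in the active language: discard any pending switch
            self._candidate, self._streak = None, 0
        elif segment_language == self._candidate:
            self._streak += 1
        else:
            self._candidate, self._streak = segment_language, 1
        if self._streak >= self.patience:
            self.active = self._candidate
            self._candidate, self._streak = None, 0
        return self.active
```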

What Are The Core Building Blocks Of A Multilingual Voice Agent?

To understand how multilingual voice apps work end to end, it helps to break them into core components.

Voice Agent = LLM + STT + TTS + RAG + Tool Calling

Each part plays a specific role, and more importantly, they must work together in real time.
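To make the five-part formula concrete, here is a minimal Python sketch of how the components might be wired for a single turn. `STT`, `LLM`, `TTS`, `Retriever`, `ToolCall`, and `VoiceAgent` are hypothetical interfaces defined only for illustration; any real provider would sit behind them via an adapter.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Protocol, Union


@dataclass
class ToolCall:
    name: str
    args: Dict[str, str]


class STT(Protocol):
    def transcribe(self, audio: bytes) -> str: ...


class LLM(Protocol):
    def respond(self, text: str, context: List[str]) -> Union[str, ToolCall]: ...


class TTS(Protocol):
    def synthesize(self, text: str) -> bytes: ...


class Retriever(Protocol):  # the RAG layer
    def search(self, query: str) -> List[str]: ...


@dataclass
class VoiceAgent:
    stt: STT
    llm: LLM
    tts: TTS
    rag: Retriever
    tools: Dict[str, Callable[..., str]] = field(default_factory=dict)
    max_tool_steps: int = 3

    def handle_turn(self, audio: bytes) -> bytes:
        text = self.stt.transcribe(audio)           # STT: speech -> text
        context = self.rag.search(text)             # RAG: fetch supporting knowledge
        reply = self.llm.respond(text, context)     # LLM: answer or request a tool
        for _ in range(self.max_tool_steps):
            if not isinstance(reply, ToolCall):
                break
            tool = self.tools.get(reply.name)       # Tool calling: run the action
            result = tool(**reply.args) if tool else f"unknown tool {reply.name}"
            reply = self.llm.respond(f"[{reply.name} returned: {result}]", context)
        if isinstance(reply, ToolCall):             # give up after too many tool rounds
            reply = "Sorry, I could not complete that request."
        return self.tts.synthesize(reply)           # TTS: text -> speech
```

Keeping each component behind a small interface is what lets teams swap one provider without touching the others.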

Why This Architecture Matters

If even one component introduces latency or errors, the entire conversation feels unnatural. Therefore, modern voice recognition SDKs are designed to support all five layers smoothly.

How Does Multilingual Speech-To-Text Work In Real-Time Voice Apps?

Speech-to-text is the entry point of every voice interaction. In multilingual systems, this layer is significantly more complex.

Real-Time Audio Streaming

Instead of waiting for the call to end, audio is processed in small chunks:

- Each chunk is streamed immediately

- Partial transcripts are generated

- Final transcripts are refined continuously

This approach reduces latency and keeps conversations natural.
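The chunked flow above can be sketched as two pieces: splitting raw audio into fixed-duration frames, and folding partial and final results into a running transcript. The sketch assumes 16 kHz, 16-bit mono PCM and 20 ms frames (a common real-time choice), and a hypothetical `TranscriptEvent` shape; real STT SDKs define their own event formats.

```python
from dataclasses import dataclass
from typing import Iterator, List

SAMPLE_RATE = 16_000   # Hz (assumed)
SAMPLE_WIDTH = 2       # bytes per sample: 16-bit PCM, mono (assumed)
CHUNK_MS = 20          # frame duration in milliseconds

def chunk_audio(pcm: bytes, chunk_ms: int = CHUNK_MS) -> Iterator[bytes]:
    """Split raw PCM into fixed-duration chunks that can be streamed immediately."""
    chunk_bytes = SAMPLE_RATE * SAMPLE_WIDTH * chunk_ms // 1000  # 640 bytes at 20 ms
    for start in range(0, len(pcm), chunk_bytes):
        yield pcm[start:start + chunk_bytes]

@dataclass
class TranscriptEvent:
    text: str
    is_final: bool

class TranscriptAssembler:
    """Keeps finalized text plus the latest partial hypothesis."""

    def __init__(self) -> None:
        self.finals: List[str] = []
        self.partial = ""

    def on_event(self, event: TranscriptEvent) -> str:
        if event.is_final:
            self.finals.append(event.text)  # committed text, not revised again
            self.partial = ""
        else:
            self.partial = event.text       # each partial replaces the previous one
        return " ".join(self.finals + ([self.partial] if self.partial else []))
```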

Language-Aware Transcription

A multilingual STT SDK must:

- Detect language early

- Switch models when needed

- Maintain accuracy across accents

Because users may change languages mid-call, transcription models must adapt without restarting the session.
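A sketch of switching models inside one live session, assuming each model is exposed as a plain callable that turns an audio chunk into text (a stand-in for a real STT client). The point is that only the model reference changes; the session and its transcript history are never torn down. `TranscriptionSession` is a hypothetical name.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# A transcriber is any callable that turns one audio chunk into text
Transcriber = Callable[[bytes], str]

@dataclass
class TranscriptionSession:
    models: Dict[str, Transcriber]
    language: str
    transcript: List[str] = field(default_factory=list)

    def set_language(self, language: str) -> None:
        # Swap only the model reference; the session and transcript stay intact
        if language in self.models:
            self.language = language

    def feed(self, chunk: bytes) -> str:
        text = self.models[self.language](chunk)
        self.transcript.append(f"[{self.language}] {text}")
        return text
```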

Confidence Scoring And Error Handling

To maintain reliability, modern STT systems include:

- Word-level confidence scores

- Noise suppression

- Fallback strategies for unclear speech

As a result, downstream AI systems receive cleaner and more reliable input.
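Here is a minimal sketch of how word-level confidence scores might drive both cleanup and fallback. It assumes the STT engine reports a 0.0 to 1.0 confidence per word; the thresholds and reprompt text are illustrative, and noise suppression (which normally runs on the audio before STT) is not shown.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass
class Word:
    text: str
    confidence: float  # 0.0 to 1.0, as reported by the STT engine (assumed)

def clean_transcript(words: List[Word], word_min: float = 0.5,
                     utterance_min: float = 0.7) -> Optional[str]:
    """Return usable text, or None when the whole utterance is too uncertain."""
    if not words:
        return None
    avg = sum(w.confidence for w in words) / len(words)
    if avg < utterance_min:
        return None  # do not pass a guess downstream
    # Flag individual low-confidence words instead of silently trusting them
    return " ".join(w.text if w.confidence >= word_min else f"<{w.text}?>" for w in words)

REPROMPT = "Sorry, I didn't catch that. Could you repeat it?"

def route_utterance(words: List[Word]) -> Tuple[str, str]:
    text = clean_transcript(words)
    if text is None:
        return ("say", REPROMPT)  # fallback: ask the caller to repeat
    return ("llm", text)          # confident enough to forward to the LLM
```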

How Do LLMs Handle Multilingual Conversations In Voice-Based Systems?

Once speech is converted into text, large language models take over. However, multilingual voice interactions introduce new challenges for LLMs.

Multilingual Understanding Without Translation Loss

Some systems translate the transcript into English before the LLM processes it. While this approach seems simple, it often loses intent and context.

In contrast, modern systems use:

- Multilingual LLMs

- Language-specific prompts

- Context preservation across turns

This ensures that meaning remains intact, even when users switch languages.
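A minimal sketch of language-specific prompting without a translation step: the system prompt is chosen by detected language, and the caller's words and earlier turns are passed through unchanged. It assumes the role/content chat message format used by many LLM APIs; the prompts and `build_messages` are hypothetical, and no specific provider call is shown.

```python
from typing import Dict, List

# Hypothetical per-language system prompts; real deployments would localize these
SYSTEM_PROMPTS: Dict[str, str] = {
    "en": "You are a helpful support agent. Reply in English.",
    "es": "Eres un agente de soporte. Responde en español.",
    "hi": "आप एक सहायता एजेंट हैं। कृपया हिंदी में उत्तर दें।",
}

def build_messages(history: List[Dict[str, str]], user_text: str,
                   language: str) -> List[Dict[str, str]]:
    """Build a chat-style message list without translating the user's words."""
    system = SYSTEM_PROMPTS.get(language, SYSTEM_PROMPTS["en"])
    return (
        [{"role": "system", "content": system}]
        + history                                    # prior turns, kept verbatim
        + [{"role": "user", "content": user_text}]   # original language preserved
    )
```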

Maintaining Conversational Context

Voice conversations are stateful. Therefore, LLMs must:

- Track session memory

- Understand prior turns

- Maintain intent continuity

Without proper context handling, conversations feel disjointed and repetitive.
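Session memory can be as simple as a bounded window of recent turns plus a few durable facts. The sketch below is one possible shape, not a prescribed design; `SessionMemory`, the turn limit, and the "Known facts" summary line are illustrative assumptions.

```python
from collections import deque
from typing import Deque, Dict, List, Tuple

class SessionMemory:
    """Keeps the most recent conversation turns for one call session."""

    def __init__(self, max_turns: int = 10) -> None:
        # Each turn is a (user, agent) pair; the oldest turns drop off automatically
        self.turns: Deque[Tuple[str, str]] = deque(maxlen=max_turns)
        self.slots: Dict[str, str] = {}  # durable facts, e.g. order ID or intent

    def add_turn(self, user: str, agent: str) -> None:
        self.turns.append((user, agent))

    def remember(self, key: str, value: str) -> None:
        self.slots[key] = value

    def as_messages(self) -> List[Dict[str, str]]:
        messages: List[Dict[str, str]] = []
        if self.slots:
            facts = "; ".join(f"{k}={v}" for k, v in self.slots.items())
            messages.append({"role": "system", "content": f"Known facts: {facts}"})
        for user, agent in self.turns:
            messages.append({"role": "user", "content": user})
            messages.append({"role": "assistant", "content": agent})
        return messages
```

Keeping durable facts separate from the turn window means an order ID mentioned early in a long call survives even after the turn that contained it has been dropped.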

Why Is Text-To-Speech Critical For Natural Multilingual Voice Experiences?

Speech recognition is only half of the conversation. The response must sound natural, clear, and regionally appropriate.

Key Requirements For Multilingual TTS

A strong TTS system must support:

- Correct pronunciation per language

- Consistent voice identity

- Natural pacing and pauses

Moreover, in live calls, TTS must start streaming audio as soon as possible instead of waiting for the full response.
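One common way to get TTS speaking sooner is to send the LLM's output to TTS sentence by sentence instead of waiting for the whole reply. This sketch assumes a stream of text tokens and uses a simple punctuation rule (including the Devanagari danda `।`); it will mis-split abbreviations such as "Dr." and does not handle scripts written without spaces, so treat it as a heuristic.

```python
import re
from typing import Iterable, Iterator

# Split on whitespace that follows sentence-ending punctuation
_SENTENCE_END = re.compile(r"(?<=[.!?।])\s+")

def sentences_for_tts(token_stream: Iterable[str]) -> Iterator[str]:
    """Group streamed LLM tokens into sentences so TTS can start early."""
    buffer = ""
    for token in token_stream:
        buffer += token
        parts = _SENTENCE_END.split(buffer)
        # All but the last part are complete sentences; the last may still grow
        for sentence in parts[:-1]:
            if sentence.strip():
                yield sentence.strip()
        buffer = parts[-1]
    if buffer.strip():
        yield buffer.strip()  # flush whatever remains when the stream ends
```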

Interrupt Handling And Natural Flow

In real conversations, users interrupt or respond early. Therefore, modern TTS systems support:

- Barge-in detection

- Audio interruption

- Smooth playback control

Without these features, voice interactions feel robotic.
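Barge-in detection can be sketched with a crude energy-based voice activity check: if the caller's audio stays loud for a few consecutive frames while TTS is playing, playback is cut. This assumes 16-bit little-endian PCM on a little-endian host, and the threshold and frame count are untuned placeholders; production systems typically use a trained VAD model and echo cancellation instead of raw energy.

```python
import array
import math

def rms(frame: bytes) -> float:
    """Root-mean-square energy of a 16-bit mono PCM frame (native byte order)."""
    samples = array.array("h", frame)  # 'h' = signed 16-bit integers
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))

class BargeInDetector:
    """Signals when TTS playback should stop because the caller is talking."""

    def __init__(self, threshold: float = 500.0, min_frames: int = 3) -> None:
        self.threshold = threshold    # energy treated as speech (placeholder value)
        self.min_frames = min_frames  # consecutive loud frames needed to interrupt
        self._loud = 0
        self.playing = False

    def on_inbound_frame(self, frame: bytes) -> bool:
        """Return True if playback should be cut now."""
        if not self.playing:
            return False
        self._loud = self._loud + 1 if rms(frame) >= self.threshold else 0
        if self._loud >= self.min_frames:
            self.playing = False  # stop TTS and hand the turn back to the caller
            self._loud = 0
            return True
        return False
```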

How Do RAG And Tool Calling Improve Multilingual Voice Accuracy?

While recognition and response are important, real value comes from action.

Retrieval-Augmented Generation (RAG)

RAG allows voice agents to:

- Fetch relevant documents

- Use multilingual embeddings

- Answer accurately across languages

For example, a user may ask a question in Spanish while the knowledge base is in English. With multilingual embeddings, RAG can retrieve the relevant English content so the agent can still answer in Spanish.
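The retrieval step can be sketched as cosine similarity over embeddings. The vectors here are hand-made placeholders standing in for the output of a multilingual embedding model, which is trained to place sentences with similar meaning near each other regardless of language; that property is what lets a Spanish query match an English passage.

```python
import math
from typing import List, Sequence, Tuple

def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def retrieve(query_vec: Sequence[float],
             index: List[Tuple[str, List[float]]], k: int = 2) -> List[str]:
    """Return the k passages whose embeddings are most similar to the query."""
    ranked = sorted(index, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]

# Placeholder embeddings for English passages (a real system would compute these)
INDEX = [
    ("Refunds take 5 business days.", [0.9, 0.1, 0.0]),
    ("Shipping is free over $50.", [0.1, 0.9, 0.0]),
    ("Support hours are 9 to 5.", [0.0, 0.1, 0.9]),
]
# Placeholder embedding for the Spanish query "¿Cuándo llega mi reembolso?"
QUERY = [0.8, 0.2, 0.1]
```

Here `retrieve(QUERY, INDEX, k=1)` returns the refund passage, which the LLM can then use to answer in Spanish.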

Tool Calling For Real-World Actions

Voice agents often need to trigger actions such as:

- Updating CRM records

- Scheduling appointments

- Creating support tickets

Therefore, modern voice systems integrate tool calling directly into the conversation flow.
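A common pattern for tool calling is a registry of functions that the LLM can request by name with JSON arguments. The sketch assumes a `{"name": ..., "arguments": {...}}` call shape similar to what many LLM APIs emit; `create_ticket` and `schedule_appointment` are hypothetical stand-ins for real CRM or calendar integrations.

```python
import json
from typing import Any, Callable, Dict

TOOLS: Dict[str, Callable[..., Any]] = {}

def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so the agent can call it by name."""
    TOOLS[fn.__name__] = fn
    return fn

@tool
def create_ticket(summary: str, priority: str = "normal") -> Dict[str, str]:
    # Hypothetical stand-in for a helpdesk API call
    return {"ticket_id": "T-1001", "summary": summary, "priority": priority}

@tool
def schedule_appointment(date: str, time: str) -> Dict[str, str]:
    # Hypothetical stand-in for a calendar API call
    return {"status": "booked", "date": date, "time": time}

def dispatch(tool_call_json: str) -> str:
    """Execute a tool call like {"name": ..., "arguments": {...}} and return JSON."""
    call = json.loads(tool_call_json)
    fn = TOOLS.get(call.get("name"))
    if fn is None:
        return json.dumps({"error": f"unknown tool {call.get('name')!r}"})
    try:
        result = fn(**call.get("arguments", {}))
    except TypeError as exc:  # wrong or missing arguments from the model
        return json.dumps({"error": str(exc)})
    return json.dumps(result)
```

Returning errors as JSON rather than raising lets the LLM see what went wrong and ask the caller for the missing detail.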

What Are The Biggest Challenges In Building Cross-Region Voice Applications?

Even with advanced AI models, many global voice apps fail in production.

Common Challenges Include:

- High latency across regions

- Audio packet loss

- Inconsistent transcription quality

- Session drops during long calls

- Poor scaling across telecom networks

Because of these issues, cross-region voice applications require more than just AI models. They require a strong voice infrastructure layer, which we will explore next.


Why Are Calling APIs Alone Not Enough For AI Voice Applications?

At this stage, it is important to separate two concepts that are often confused: calling platforms and voice infrastructure platforms. While both deal with phone calls, their roles are very different.

Calling APIs are designed to:

- Initiate calls

- Receive calls

- Route calls

- Record calls

- Generate call logs

However, modern AI voice applications require much more than call connectivity.

Where Calling APIs Fall Short

Calling platforms usually lack:

- Real-time bidirectional audio streaming

- Tight synchronization between STT, LLM, and TTS

- Support for multilingual conversational context

- Low-latency audio transport across regions

As a result, teams often struggle when they attempt to build AI-driven voice agents on top of basic calling APIs.

What Modern Voice Systems Actually Need

To support multilingual apps, a voice recognition system must:

- Stream audio in real time

- Preserve conversational state

- Support language switching

- Maintain consistent latency across regions

Therefore, calling APIs alone cannot support global voice apps that rely on real-time AI interactions.

What Role Does Voice Infrastructure Play In Multilingual AI Systems?

Once the limitations of calling APIs become clear, the importance of voice infrastructure becomes obvious.

Voice infrastructure sits between:

- Telecom networks (PSTN, SIP, VoIP)

- AI systems (LLMs, STT, TTS)

- Business applications (CRMs, databases, internal tools)

Why Infrastructure Matters

Without a reliable infrastructure layer:

- Audio packets may arrive late or out of order

- STT accuracy drops due to jitter

- TTS playback becomes delayed

- Conversational flow breaks

In contrast, a well-designed voice infrastructure:

- Maintains stable audio streams

- Keeps latency predictable

- Preserves session continuity

- Supports multilingual voice at scale

Because of this, infrastructure becomes the backbone of cross-region voice applications.
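The late and out-of-order packets listed above are usually handled with a jitter buffer. Below is a simplified, count-based sketch that reorders packets by sequence number and drops packets that arrive after their slot has passed. It is an illustration only: real buffers are time-based, adapt their depth to measured jitter, and handle 16-bit RTP sequence-number wraparound, none of which is shown here.

```python
import heapq
from typing import List, Optional, Tuple

class JitterBuffer:
    """Reorders packets by sequence number before playout (simplified)."""

    def __init__(self, depth: int = 3) -> None:
        self.depth = depth  # packets held back as a cushion against reordering
        self._heap: List[Tuple[int, bytes]] = []
        self._next_seq: Optional[int] = None

    def push(self, seq: int, payload: bytes) -> None:
        if self._next_seq is not None and seq < self._next_seq:
            return  # arrived too late: its playout slot has already passed
        heapq.heappush(self._heap, (seq, payload))

    def pop_ready(self) -> List[Tuple[int, bytes]]:
        """Release packets in sequence order while more than `depth` are waiting."""
        out = []
        while len(self._heap) > self.depth:
            seq, payload = heapq.heappop(self._heap)
            out.append((seq, payload))  # any skipped numbers are treated as lost
            self._next_seq = seq + 1
        return out
```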

How Does FreJun Teler Support Modern Multilingual Voice Recognition At Scale?

FreJun Teler is designed specifically to solve the infrastructure challenges discussed above. Importantly, it does not replace AI models. Instead, it connects them reliably to real-world voice conversations.

What FreJun Teler Is (And Is Not)

FreJun Teler is:

- A global voice infrastructure platform

- A real-time voice interface for AI agents

- A transport layer for multilingual voice conversations

FreJun Teler is not:

- An LLM

- A speech-to-text provider

- A text-to-speech engine

This distinction matters because it gives teams full freedom over their AI stack.

Technical Capabilities That Matter

FreJun Teler enables:

- Real-time, low-latency audio streaming

- Bidirectional voice communication

- Stable conversational sessions

- Multilingual voice handling across regions

Moreover, it works seamlessly across:

- PSTN networks

- VoIP systems

- Cloud telephony

- SIP-based infrastructure

As a result, teams can build language recognition AI systems without worrying about telecom complexity.

How Can Teams Implement Teler With Any LLM, STT, And TTS Stack?

One of the biggest advantages of FreJun Teler is its model-agnostic architecture. This allows teams to integrate it without changing existing AI decisions.

Flexible Architecture By Design

With Teler, teams can:

- Choose any LLM (open-source or commercial)

- Select any multilingual STT SDK

- Use any TTS engine that fits their needs

- Plug in RAG pipelines and tool calling logic

Because Teler handles the voice layer, AI components remain independent.

Typical Implementation Flow

A common setup looks like this:

- User speaks on a phone call

- Teler streams audio in real time

- STT converts speech to text

- LLM interprets intent and context

- RAG fetches relevant knowledge, and the LLM generates a response

- TTS converts the response into speech

- Teler streams audio back to the user

This flow keeps latency low while preserving full conversational context.
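The flow above can be sketched as concurrent stages connected by queues, so each stage hands off work as soon as it has something instead of waiting for the previous stage to finish everything. The stage bodies are placeholders for real STT, LLM (with RAG), and TTS calls; the queue wiring is the part being illustrated, and it is not a description of Teler's internals.

```python
import asyncio
from typing import List

# Placeholder stages: a real system would call STT, LLM (with RAG) and TTS services.
async def stt_stage(audio_in: asyncio.Queue, text_out: asyncio.Queue) -> None:
    while (utterance := await audio_in.get()) is not None:
        await text_out.put(f"text({utterance})")  # stand-in for transcription
    await text_out.put(None)                       # forward the end-of-stream marker

async def llm_stage(text_in: asyncio.Queue, reply_out: asyncio.Queue) -> None:
    while (text := await text_in.get()) is not None:
        await reply_out.put(f"reply({text})")      # stand-in for RAG + LLM call
    await reply_out.put(None)

async def tts_stage(reply_in: asyncio.Queue, played: List[str]) -> None:
    while (reply := await reply_in.get()) is not None:
        played.append(f"audio({reply})")          # stand-in for streaming audio back

async def run_pipeline(utterances: List[str]) -> List[str]:
    audio_q: asyncio.Queue = asyncio.Queue()
    text_q: asyncio.Queue = asyncio.Queue()
    reply_q: asyncio.Queue = asyncio.Queue()
    played: List[str] = []
    # The three stages run as concurrent tasks and hand off one item at a time
    tasks = [
        asyncio.create_task(stt_stage(audio_q, text_q)),
        asyncio.create_task(llm_stage(text_q, reply_q)),
        asyncio.create_task(tts_stage(reply_q, played)),
    ]
    for utterance in utterances:
        await audio_q.put(utterance)
    await audio_q.put(None)  # signal the end of the call
    await asyncio.gather(*tasks)
    return played
```

In a live call the utterances arrive over time, so the reply to one utterance can be spoken while the next is still being transcribed.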

What Should Founders And Engineering Leads Look For In A Voice Recognition SDK?

Choosing the right voice recognition SDK is a long-term decision. Therefore, evaluation should go beyond short demos.

Key Evaluation Criteria

Founders and engineering leaders should ask:

- Does it support multilingual and mixed-language input?

- Can it handle real-time streaming reliably?

- Is it flexible across AI models?

- Does it scale across regions?

- Does it preserve conversational context?

Infrastructure As A Differentiator

While many platforms compete on features, infrastructure quality determines:

- User experience

- Reliability

- Global scalability

- Long-term maintenance cost

Thus, infrastructure-first platforms often perform better in production.

How Do Cross-Region Voice Apps Scale Without Losing Quality?

Scaling voice applications globally introduces challenges that are not visible during early testing.

Common Scaling Issues

As usage grows, teams face:

- Increased latency in distant regions

- Inconsistent audio quality

- Telecom routing failures

- Higher error rates in STT

How Infrastructure Solves These Problems

A strong infrastructure layer:

- Routes audio through geographically distributed nodes

- Maintains session stability

- Reduces jitter and packet loss

- Keeps latency within acceptable bounds

Because of this, global voice apps remain reliable even under high load.
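Routing to geographically distributed nodes often starts with picking the healthy region with the lowest measured round-trip time. This is a minimal sketch under assumptions: region names are hypothetical, RTT probes are assumed to exist already, and the 150 ms budget is illustrative; it does not describe how any particular platform routes media.

```python
from typing import Dict, Optional, Tuple

def pick_media_region(rtt_ms: Dict[str, Optional[float]],
                      max_rtt_ms: float = 150.0) -> Tuple[str, bool]:
    """Choose the media node with the lowest measured round-trip time.

    rtt_ms maps region names to recent RTT probes (None = probe failed).
    Returns (region, within_budget) so callers can alert when even the best
    region exceeds the latency budget.
    """
    healthy = {region: rtt for region, rtt in rtt_ms.items() if rtt is not None}
    if not healthy:
        raise RuntimeError("no healthy media region available")
    region = min(healthy, key=healthy.get)
    return region, healthy[region] <= max_rtt_ms
```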

What Is The Future Of Multilingual Voice Recognition In AI Products?

Voice is becoming the most natural interface for AI systems. As adoption grows, expectations will rise.

Key Trends Shaping The Future

- Voice-first AI agents replacing static IVRs

- Multilingual support becoming the default

- Real-time conversations replacing asynchronous interactions

- Infrastructure-driven differentiation

Additionally, users will expect:

- Faster responses

- Better language understanding

- More natural voice output

To meet these expectations, teams must invest in both AI intelligence and voice infrastructure.

How Can Teams Start Building Multilingual Voice Agents Faster?

Building multilingual voice agents does not require reinventing the entire stack. Instead, it requires the right separation of concerns.

Practical Next Steps

Teams should:

- Keep AI logic modular

- Use flexible LLM and STT choices

- Invest in reliable voice infrastructure early

- Test across languages and regions from day one

By doing so, products can scale smoothly without constant rework.

Final Thoughts

Building multilingual voice applications requires more than selecting a speech-to-text API or an LLM. Modern voice systems must support real-time audio streaming, language recognition AI, conversational context, and consistent performance across regions. When these components are not tightly aligned, voice experiences quickly break at scale. This is where voice infrastructure becomes critical.

FreJun Teler provides the real-time voice layer that connects AI systems to global phone networks without locking teams into specific models or vendors. By handling low-latency media streaming and cross-region reliability, Teler allows engineering teams to focus on AI logic, not telecom complexity. If you’re building multilingual voice agents or global voice apps, Teler helps you move from prototype to production with confidence.

Schedule a demo to see how FreJun Teler powers real-time, multilingual voice agents at scale.

FAQs

1. What is a voice recognition SDK used for?

A voice recognition SDK converts live speech into structured data, enabling applications to understand and respond to spoken user input.

2. Why do multilingual apps need specialized voice SDKs?

Languages, accents, and dialects vary widely, so multilingual apps need dynamic language detection and adaptive speech recognition models.

3. Can one voice recognition SDK support multiple languages?

Yes, modern SDKs support multilingual speech recognition through shared models and real-time language detection.

4. How does language recognition AI detect languages automatically?

It analyzes phonetic patterns and acoustic signals early in speech to identify the spoken language accurately.

5. What causes poor voice quality in global voice apps?

High latency, packet loss, unstable audio streaming, and lack of regional infrastructure often degrade voice quality.

6. Is speech-to-text enough to build voice agents?

No, voice agents require STT, LLMs, TTS, context management, and tool calling to function effectively.

7. How important is real-time processing for voice applications?

Real-time processing is critical to maintain natural conversations and avoid delays that disrupt user experience.

8. What role does infrastructure play in multilingual voice systems?

Infrastructure ensures stable audio streaming, low latency, and consistent performance across regions and telecom networks.

9. Can voice agents handle language switching mid-call?

Yes, modern systems support code-switching by dynamically adjusting recognition and language models.

10. How does FreJun Teler help with multilingual voice applications?

FreJun Teler provides the real-time voice infrastructure that reliably connects AI systems to global voice networks.