Bulk calling has evolved far beyond dialing thousands of numbers with the same recorded message. Today, faster engagement depends on how quickly a system can listen, understand, and respond in real time. As customer expectations rise, delays, static scripts, and rigid IVRs reduce trust and completion rates. This is where next-gen voice APIs reshape bulk calling into a dynamic, conversational system. By combining low-latency communication, real-time media streaming, and AI-driven logic, modern voice architectures enable instant outreach that feels natural and responsive.

This blog explores how faster engagement is achieved technically, and how teams can design bulk calling systems built for scale, speed, and intelligence.

Why Is Faster Engagement Becoming Critical For Bulk Calling Today?

Customer attention windows are shrinking rapidly. As a result, businesses that rely on delayed or static outreach are seeing lower pickup rates and weaker conversions. Bulk calling is no longer about how many calls you place. Instead, it is about how fast and how meaningfully you engage once the call connects.

According to industry forecasts, by 2025, over 70% of customer service interactions are expected to include real-time voice AI, reinforcing that fast response and instant engagement are becoming baseline expectations.

Traditionally, bulk calling tools focused on scale. However, modern engagement depends on response speed, clarity, and interaction quality. If a call feels slow, scripted, or disconnected, users drop off within seconds. Therefore, faster engagement has become a core technical requirement, not just a product feature.

Several shifts are driving this change:

- Users now expect instant responses, even on voice calls

- AI-driven interactions are becoming common across chat and voice

- Businesses are moving from broadcast messaging to instant outreach tech

- Low-latency communication directly impacts trust and completion rates

Because of these factors, voice systems must respond in real time. Otherwise, even high-volume calling campaigns lose effectiveness.

In short: faster engagement is not about dialing faster. It is about reducing the time between user speech and system response.

What Are Traditional Bulk Calling Tools Optimized For And Where Do They Fall Short?

Most bulk calling platforms were built for a different era. Their primary goal was to deliver messages at scale. As a result, their architecture reflects broadcast thinking rather than conversation design.

What Traditional Bulk Calling Tools Do Well

- High-volume outbound dialing

- Playing pre-recorded audio messages

- Simple IVR flows using keypad input

- Campaign scheduling and retry logic

These capabilities are useful. However, they are optimized for one-way communication.

Where The Technical Gaps Appear

In contrast, modern engagement requires interaction. This is where traditional tools fall short:

- No real-time audio streaming: Audio is played, not streamed dynamically.

- Static call flows: Logic is defined before the call starts and cannot adapt.

- No natural language understanding: Speech input is often ignored or reduced to keypad input.

- High response latency: Delays occur between user action and system reaction.

Because of these limitations, traditional bulk calling tools struggle to support conversational use cases. Therefore, even when calls connect, engagement remains low.

As a result, businesses begin to look for more flexible voice APIs that support real-time interaction.

What Does Next-Gen Voice API Mean For Bulk Calling Use Cases?

The term “next-gen voice API” is often used loosely. However, for bulk calling, it has a very specific technical meaning.

A next-gen voice API is not just an API that places calls. Instead, it acts as a real-time communication layer between users and applications.

Key Characteristics Of A Next-Gen Voice API

- Real-time, bidirectional audio streaming

- Low-latency communication across the call lifecycle

- Support for dynamic, stateful conversations

- Ability to integrate with AI systems in real time

In contrast to legacy voice APIs, which rely on request-response patterns, next-gen systems operate continuously during the call.

Why This Matters For Bulk Calling

Bulk calling traditionally meant “many calls, same message.” However, next-gen systems enable:

- Many calls with unique, dynamic conversations

- Instant adaptation based on user speech

- Real-time decisions during the call

This shift is why terms like “voice API for bulk calling” and “fast calling API 2026” are gaining relevance: they reflect a move toward speed, flexibility, and intelligence.

How Do AI Voice Agents Actually Work Under The Hood?

To understand faster engagement, it is important to understand how modern voice agents are built. Voice agents are not single tools. Instead, they are systems composed of multiple components working together.

Core Components Of An AI Voice Agent

| Component | Role In The System |
| --- | --- |
| Speech To Text (STT) | Converts live audio into text |
| Large Language Model (LLM) | Understands intent and generates responses |
| Retrieval (RAG) | Adds business context and accurate data |
| Tool Calling | Triggers actions like CRM updates or scheduling |
| Text To Speech (TTS) | Converts responses back into audio |

Each component must operate in real time. Otherwise, delays compound quickly.
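To make "delays compound" concrete, here is a minimal Python sketch of a per-turn latency budget. The stage names follow the component table, but the millisecond values are placeholders chosen for illustration, not measurements of any particular engine. In a strictly sequential loop, each stage waits for the one before it, so the delays add up.

```python
from dataclasses import dataclass

@dataclass
class Stage:
    name: str
    latency_ms: float  # time this stage adds to one conversational turn

def turn_latency(stages: list[Stage]) -> tuple[float, str]:
    """Return the total sequential latency and the name of the slowest stage."""
    total = sum(s.latency_ms for s in stages)  # sequential stages add, they do not overlap
    slowest = max(stages, key=lambda s: s.latency_ms)
    return total, slowest.name

# Placeholder numbers for illustration only.
pipeline = [
    Stage("stt", 300),
    Stage("retrieval", 120),
    Stage("llm", 450),
    Stage("tts", 200),
    Stage("network", 80),
]
total, slowest = turn_latency(pipeline)
print(f"total={total:.0f} ms, slowest={slowest}")
```

With these assumed values, the caller hears nothing for over a second, even though no single stage takes longer than half a second.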

Why Orchestration Matters

Even if each component is fast individually, poor coordination increases latency. Therefore, voice agents require:

- Streaming input, not batch processing

- Immediate handoff between components

- Stable connections throughout the call

In practice, a voice agent's response time is roughly the sum of every step in the loop, and the slowest step usually dominates it. This is why infrastructure decisions directly impact engagement speed.

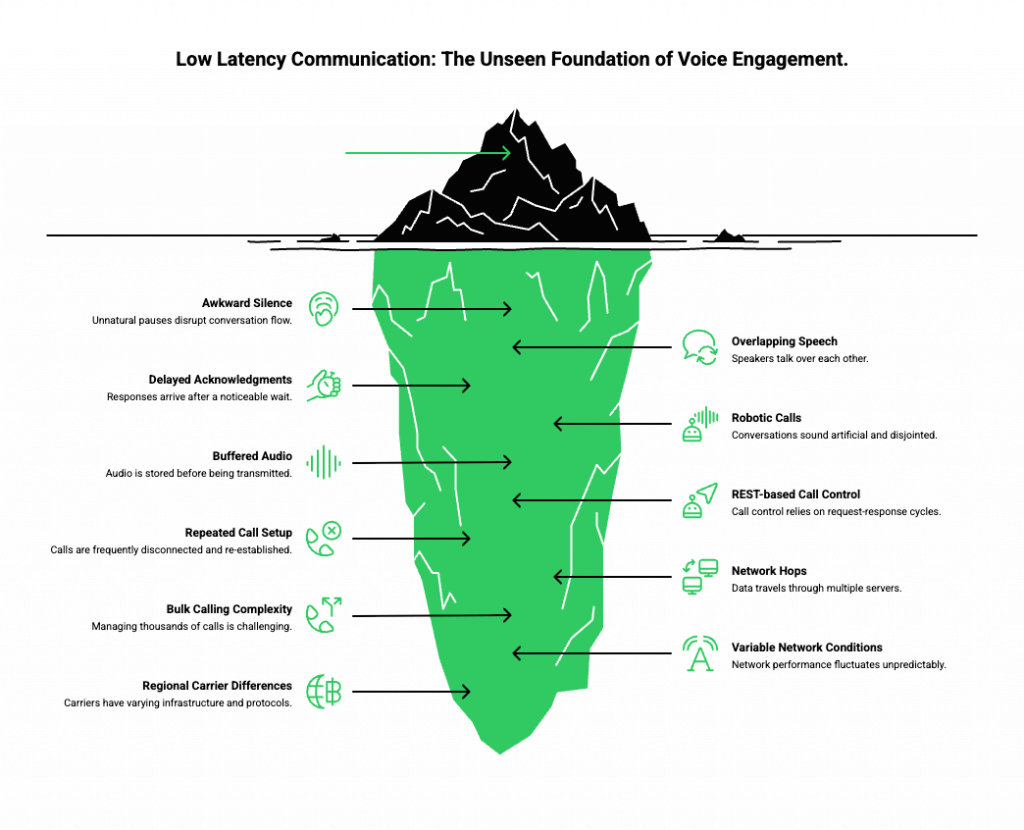
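The orchestration requirements above (streaming input, immediate handoff between components) can be sketched with asyncio queues: each stage forwards every chunk downstream as soon as it has transformed it, instead of waiting for the full utterance. The three transforms are toy stand-ins (decoding, uppercasing, tagging), assumed purely for illustration in place of real STT, LLM, and TTS calls.

```python
import asyncio
from typing import Callable

END = None  # sentinel marking the end of a stream

async def stage(transform: Callable, inbox: asyncio.Queue, outbox: asyncio.Queue) -> None:
    """Forward each chunk downstream as soon as it is transformed."""
    while True:
        chunk = await inbox.get()
        if chunk is END:
            await outbox.put(END)  # propagate end-of-stream to the next stage
            return
        await outbox.put(transform(chunk))

async def run_pipeline(audio_chunks: list[bytes]) -> list[str]:
    q_audio, q_text, q_reply, q_out = (asyncio.Queue() for _ in range(4))
    # Toy stand-ins: decode plays "STT", str.upper plays "LLM", a tag plays "TTS".
    tasks = [
        asyncio.create_task(stage(lambda c: c.decode(), q_audio, q_text)),
        asyncio.create_task(stage(str.upper, q_text, q_reply)),
        asyncio.create_task(stage(lambda r: f"<audio:{r}>", q_reply, q_out)),
    ]
    for chunk in audio_chunks:
        await q_audio.put(chunk)  # feed caller audio chunk by chunk
    await q_audio.put(END)

    results = []
    while (item := await q_out.get()) is not END:
        results.append(item)
    await asyncio.gather(*tasks)
    return results

print(asyncio.run(run_pipeline([b"hi ", b"there"])))
```

A real LLM stage would need more context than a single chunk, but the handoff pattern between stages stays the same.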

Why Is Low Latency Communication The Backbone Of Faster Voice Engagement?

Latency is the silent engagement killer. While users may tolerate slight delays in chat, voice conversations are different. Even a short pause can feel unnatural.

What Latency Feels Like To Users

- Awkward silence after speaking

- Overlapping speech

- Delayed acknowledgments

- Calls that feel robotic or broken

As a result, users disengage quickly.

Common Technical Causes Of Latency

- Buffered audio instead of live streaming

- REST-based call control instead of persistent connections

- Repeated call setup and teardown during interactions

- Network hops without optimization

Because of these issues, low-latency communication must be designed into the system from the start.
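The gap between buffered audio and live streaming comes down to simple arithmetic. This sketch assumes 8 kHz, 16-bit mono PCM (a common telephony format) and 20 ms frames; both are illustrative assumptions, not a statement of any platform's defaults. With streaming, STT can start after one frame; with buffering, it waits for the whole utterance.

```python
SAMPLE_RATE_HZ = 8_000   # assumed telephony sample rate
BYTES_PER_SAMPLE = 2     # assumed 16-bit linear PCM, mono

def frame_bytes(frame_ms: int) -> int:
    """Number of bytes in one audio frame of the given duration."""
    return SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * frame_ms // 1000

def split_frames(pcm: bytes, frame_ms: int = 20) -> list[bytes]:
    """Split a PCM buffer into fixed-size frames for streaming."""
    size = frame_bytes(frame_ms)
    return [pcm[i:i + size] for i in range(0, len(pcm), size)]

def wait_before_processing_ms(utterance_ms: int, streaming: bool, frame_ms: int = 20) -> int:
    """How long downstream STT waits before it receives any audio."""
    return frame_ms if streaming else utterance_ms

utterance = bytes(frame_bytes(2_000))  # 2 seconds of silent test audio
frames = split_frames(utterance)
print(len(frames), len(frames[0]))  # 100 frames of 320 bytes
print(wait_before_processing_ms(2_000, streaming=False),
      wait_before_processing_ms(2_000, streaming=True))
```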

Why This Is Hard At Scale

Bulk calling adds complexity:

- Thousands of concurrent calls

- Variable network conditions

- Different regions and carriers

Therefore, achieving low latency at scale requires real-time media infrastructure, not just faster servers.
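At bulk volumes, a campaign runner usually caps how many calls are live at once so downstream STT, LLM, and TTS capacity is not exceeded. A minimal asyncio sketch, assuming a hypothetical `place_call` coroutine that stands in for your provider's outbound-call request plus the conversation that follows; the phone numbers are placeholders.

```python
import asyncio

async def place_call(number: str, active: dict) -> str:
    """Hypothetical stand-in for an outbound call and its conversation."""
    active["now"] += 1
    active["peak"] = max(active["peak"], active["now"])
    await asyncio.sleep(0.01)  # simulate the call being in progress
    active["now"] -= 1
    return f"done:{number}"

async def run_campaign(numbers: list[str], max_concurrent: int) -> tuple[list[str], int]:
    """Dial every number while keeping at most max_concurrent calls active."""
    limit = asyncio.Semaphore(max_concurrent)
    active = {"now": 0, "peak": 0}

    async def dial(number: str) -> str:
        async with limit:  # waits here while the cap is reached
            return await place_call(number, active)

    results = await asyncio.gather(*(dial(n) for n in numbers))
    return list(results), active["peak"]

numbers = [f"+1555000{i:04d}" for i in range(50)]  # placeholder numbers
results, peak = asyncio.run(run_campaign(numbers, max_concurrent=10))
print(len(results), peak)
```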

How Can You Architect Bulk Calling With Real-Time AI Conversations?

At this point, the architectural difference becomes clear. Modern bulk calling systems resemble streaming platforms, not messaging systems.

High-Level Architecture Flow

- Outbound call is initiated

- Audio is streamed live from the call

- STT processes speech continuously

- LLM generates responses incrementally

- TTS converts responses into audio

- Audio is streamed back instantly

- Conversation state is maintained throughout

Because each step happens in real time, the system can respond naturally.
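The last step in the flow, maintaining conversation state throughout the call, is what lets each response build on earlier turns. A minimal sketch, assuming a hypothetical `generate_reply` function in place of a real LLM call; the point is that the full history travels with every turn.

```python
from dataclasses import dataclass, field

@dataclass
class CallState:
    call_id: str
    history: list[dict] = field(default_factory=list)  # chat-style turns

    def add(self, role: str, text: str) -> None:
        self.history.append({"role": role, "content": text})

def generate_reply(history: list[dict]) -> str:
    """Hypothetical LLM stand-in: reacts to the latest user turn."""
    user_turns = [h["content"] for h in history if h["role"] == "user"]
    return f"Turn {len(user_turns)}: you said '{user_turns[-1]}'"

def handle_turn(state: CallState, transcript: str) -> str:
    state.add("user", transcript)
    reply = generate_reply(state.history)  # the model sees the whole conversation
    state.add("assistant", reply)
    return reply

state = CallState(call_id="demo-call")
handle_turn(state, "Hi, is this about my order?")
print(handle_turn(state, "Can you move the delivery to Friday?"))
print(len(state.history))
```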

How This Differs From Legacy IVR

- No fixed menus

- No pre-recorded paths

- No waiting for full input before responding

Instead, conversations evolve naturally, just like human calls.

As a result, engagement increases because users feel heard and understood immediately.
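Conversations without fixed menus also have to handle interruptions. A common pattern, often called barge-in, stops agent playback as soon as the caller starts speaking. This asyncio sketch simulates it with a timer in place of a real voice-activity detector; the frame labels and timings are illustrative assumptions.

```python
import asyncio

async def speak(frames: list[str], played: list[str]) -> None:
    """Play agent audio frame by frame (strings stand in for audio chunks)."""
    for frame in frames:
        played.append(frame)
        await asyncio.sleep(0.02)  # roughly one 20 ms frame per step

async def converse_with_barge_in(frames: list[str], user_speaks_after: float) -> list[str]:
    played: list[str] = []
    playback = asyncio.create_task(speak(frames, played))
    await asyncio.sleep(user_speaks_after)  # simulated: voice activity detected here
    if not playback.done():
        playback.cancel()  # stop talking as soon as the caller starts speaking
        try:
            await playback
        except asyncio.CancelledError:
            pass  # expected: playback was interrupted on purpose
    return played

played = asyncio.run(converse_with_barge_in([f"f{i}" for i in range(50)], 0.1))
print(f"played {len(played)} of 50 frames before yielding the turn")
```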

Where Does FreJun Teler Fit In A Modern Voice Agent Architecture?

By now, the architectural direction is clear. Faster engagement requires real-time audio streaming, AI-driven dialogue, and low-latency communication at scale. However, building this stack from scratch is complex. This is exactly where FreJun Teler fits in.

FreJun Teler acts as the voice infrastructure and transport layer between phone networks and your AI systems. Instead of managing telephony, streaming, and reliability internally, teams use Teler to handle these concerns while retaining full control over intelligence.

What FreJun Teler Is Responsible For

- Real-time bidirectional audio streaming

- Call control across PSTN, VoIP, and cloud telephony

- Maintaining stable connections throughout conversations

- Optimizing latency for live speech exchange

- Ensuring reliability at high call volumes

What FreJun Teler Does Not Control

- Which LLM you use

- Which STT or TTS engine you choose

- How your conversation logic is written

- How RAG or tool calling is implemented

Because of this separation, Teler integrates cleanly into existing AI pipelines. As a result, engineering teams avoid vendor lock-in while still gaining production-grade voice infrastructure.

In short: Teler powers the voice layer, while your application controls the intelligence.
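One way to keep that separation explicit in code is to make the agent depend only on a small transport interface. The `VoiceTransport` protocol below is hypothetical and is not FreJun Teler's actual SDK; an in-memory fake stands in for the live connection so the intelligence layer can be tested without a phone line.

```python
from typing import Callable, Optional, Protocol

class VoiceTransport(Protocol):
    """Hypothetical interface for the voice layer: it moves audio, nothing else."""
    def receive_audio(self) -> Optional[bytes]: ...
    def send_audio(self, chunk: bytes) -> None: ...

class FakeTransport:
    """In-memory transport for tests: replays scripted caller audio."""
    def __init__(self, incoming: list[bytes]):
        self.incoming = list(incoming)
        self.sent: list[bytes] = []

    def receive_audio(self) -> Optional[bytes]:
        return self.incoming.pop(0) if self.incoming else None

    def send_audio(self, chunk: bytes) -> None:
        self.sent.append(chunk)

def run_agent(transport: VoiceTransport, respond: Callable[[bytes], bytes]) -> None:
    """Intelligence layer: any STT, LLM, and TTS chain can sit inside `respond`."""
    while (chunk := transport.receive_audio()) is not None:
        transport.send_audio(respond(chunk))

fake = FakeTransport([b"hello", b"bye"])
run_agent(fake, respond=lambda audio: b"echo:" + audio)
print(fake.sent)
```

Swapping models then means changing `respond`, while swapping the voice layer means providing another object with the same two methods.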

How Does FreJun Teler Enable Faster Engagement At Scale?

Faster engagement is not achieved by one feature. Instead, it comes from removing delays at every stage of the call lifecycle. FreJun Teler is designed specifically for this purpose.

Real-Time Media Streaming By Design

Traditional voice APIs rely on command-based controls such as “play audio” or “collect input.” In contrast, Teler uses continuous media streaming.

This approach enables:

- Immediate speech capture as users talk

- Faster STT processing with partial transcripts

- Incremental AI responses instead of full-message waits

- Natural turn-taking during conversations

As a result, the system responds faster, even during complex calls.
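Partial transcripts only help if the application can tell when the caller has finished a turn. A simple, widely used heuristic is silence-based endpointing: treat the turn as complete when the STT engine marks a result final, or when no new partial has arrived for a threshold period. The event shape and the 700 ms threshold are assumptions for illustration; real STT engines define their own event formats and endpointing settings.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class TranscriptEvent:
    text: str
    is_final: bool
    at_ms: int  # arrival time relative to call start

def detect_end_of_turn(events: list[TranscriptEvent], now_ms: int,
                       silence_threshold_ms: int = 700) -> Optional[str]:
    """Return the caller's utterance once the turn looks complete, else None."""
    if not events:
        return None
    last = events[-1]
    if last.is_final:
        return last.text  # the STT engine has already closed the segment
    if now_ms - last.at_ms >= silence_threshold_ms:
        return last.text  # no new words for a while: treat the partial as the turn
    return None  # caller may still be speaking; keep listening

events = [
    TranscriptEvent("I'd like to", False, 1_000),
    TranscriptEvent("I'd like to reschedule", False, 1_400),
]
print(detect_end_of_turn(events, now_ms=1_600))  # None: only 200 ms of silence
print(detect_end_of_turn(events, now_ms=2_200))  # prints: I'd like to reschedule
```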

Latency Reduction Across The Entire Loop

Latency often comes from accumulated delays. Therefore, Teler optimizes:

- Audio buffering strategies

- Network routing across regions

- Connection persistence throughout the call

- Streaming synchronization between input and output

Because these optimizations are built into the platform, teams do not need to engineer them independently.

This is a key reason why Teler supports low-latency communication even under heavy load.
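To illustrate what an audio buffering strategy involves, here is a minimal jitter buffer: it holds a few packets, reorders them by sequence number, and releases them in order, skipping a lost packet once too many later ones have piled up. This is a generic technique shown for illustration, not a description of how Teler buffers audio internally; the depth of 3 packets is an arbitrary assumption.

```python
import heapq

class JitterBuffer:
    """Reorders audio packets by sequence number before playback."""

    def __init__(self, depth: int = 3):
        self.depth = depth  # packets to hold before giving up on a missing one
        self.heap: list[tuple[int, bytes]] = []
        self.next_seq = 0   # next sequence number expected for playback

    def push(self, seq: int, payload: bytes) -> list[bytes]:
        """Add a packet; return any packets now ready to play, in order."""
        if seq < self.next_seq:
            return []  # arrived too late: its slot has already been played
        heapq.heappush(self.heap, (seq, payload))
        ready = []
        while self.heap and (self.heap[0][0] == self.next_seq or len(self.heap) > self.depth):
            seq_out, data = heapq.heappop(self.heap)
            ready.append(data)
            self.next_seq = seq_out + 1  # moves past any packet that never arrived
        return ready

buf = JitterBuffer(depth=3)
played = []
for seq, payload in [(0, b"a"), (2, b"c"), (1, b"b"), (3, b"d")]:
    played.extend(buf.push(seq, payload))
print(played)
```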

How Does Teler Compare To Traditional Bulk Calling Platforms?

At a surface level, both systems place outbound calls. However, the similarities end there. The architectural differences directly impact engagement quality.

Comparison At A System Level

| Capability | Traditional Bulk Calling Tools | Teler-Based Voice Systems |
| --- | --- | --- |
| Audio Handling | Pre-recorded playback | Live, bidirectional streaming |
| Interaction Model | Static IVR | Dynamic conversation |
| AI Integration | Limited or none | Full LLM + STT + TTS support |
| Latency Control | Minimal | Designed for low latency |
| Personalization | Before the call | During the call |
| Scalability | Volume-focused | Engagement-focused |

Because of these differences, Teler-based systems support instant outreach tech that adapts in real time.

How Can Teams Implement Teler With Any LLM And STT TTS Stack?

One of the most important design goals behind Teler is flexibility. Teams rarely use the same AI stack. Therefore, the platform is built to support any combination of models and services.

Typical Integration Flow

- Your system initiates an outbound call through Teler

- Teler streams live audio from the call

- Audio is sent to your chosen STT engine

- Transcripts are processed by your LLM

- RAG adds business context if needed

- The LLM generates a response

- TTS converts text into audio

- Teler streams the response back instantly

Because this loop remains open, the conversation feels continuous.
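The integration flow maps onto a single per-turn function in which each step is a pluggable callable. Everything here is a hypothetical stand-in: `stt`, `llm`, and `tts` represent whichever engines you choose, the knowledge snippets are sample data, and `retrieve` is a toy keyword-overlap lookup used in place of a real RAG retriever. Teler's part of the loop (streaming audio in and out) is represented only by the function's input and return value.

```python
from typing import Callable

KNOWLEDGE = [  # sample business data for illustration
    "Clinic hours are 9am to 5pm, Monday to Friday.",
    "Appointments can be rescheduled up to 24 hours in advance.",
]

def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Toy retriever: rank documents by words shared with the query."""
    words = set(query.lower().split())
    ranked = sorted(docs, key=lambda d: len(words & set(d.lower().split())), reverse=True)
    return ranked[:k]

def process_turn(audio_in: bytes,
                 stt: Callable[[bytes], str],
                 llm: Callable[[str, list[str]], str],
                 tts: Callable[[str], bytes]) -> bytes:
    transcript = stt(audio_in)                 # your chosen STT engine
    context = retrieve(transcript, KNOWLEDGE)  # RAG step adds business context
    reply = llm(transcript, context)           # your chosen LLM
    return tts(reply)                          # audio to stream back to the caller

audio_out = process_turn(
    b"can i reschedule my appointment",
    stt=lambda audio: audio.decode(),
    llm=lambda text, ctx: f"Sure. {ctx[0]}",
    tts=lambda text: text.encode(),
)
print(audio_out.decode())
```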

Benefits For Engineering Teams

- No need to re-architect AI pipelines

- Easy experimentation with different models

- Independent scaling of voice and intelligence layers

- Clear separation of concerns

As a result, teams can focus on improving conversation quality instead of managing telephony complexity.

What Are The Key Use Cases Where Faster Voice Engagement Delivers Results?

Not every bulk calling use case requires AI. However, for high-value interactions, faster engagement creates measurable impact.

Intelligent Lead Qualification

- AI adapts questions based on responses

- Leads are qualified during the call

- CRM updates happen in real time
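Adaptive qualification can be sketched as slot filling: the agent asks only for information it does not yet have, skips questions the caller already answered, and writes to the CRM as soon as every slot is filled. The slot names, the qualification rule, and the `update_crm` callback are hypothetical; in a real agent, an LLM would extract slot values from the transcript.

```python
from typing import Callable, Optional

QUESTIONS = {
    "budget": "What budget range are you working with?",
    "timeline": "When are you hoping to get started?",
    "decision_maker": "Will you be making the final decision?",
}

def next_question(slots: dict) -> Optional[str]:
    """Ask only about slots that are still unknown, in a fixed priority order."""
    for slot, question in QUESTIONS.items():
        if slot not in slots:
            return question
    return None  # everything collected

def is_qualified(slots: dict) -> bool:
    """Example rule (an assumption): enough budget, near-term timeline, decision maker."""
    return (slots.get("budget", 0) >= 5_000
            and slots.get("timeline") in {"this month", "this quarter"}
            and slots.get("decision_maker") is True)

def on_slots_updated(slots: dict, update_crm: Callable[[dict], None]) -> Optional[str]:
    """Return the next question, or write to the CRM once all slots are filled."""
    question = next_question(slots)
    if question is None:
        update_crm({**slots, "qualified": is_qualified(slots)})  # written during the call
    return question

crm_records = []
# The caller gave budget and timeline in one answer, so those questions are skipped.
q1 = on_slots_updated({"budget": 8_000, "timeline": "this quarter"}, crm_records.append)
q2 = on_slots_updated({"budget": 8_000, "timeline": "this quarter", "decision_maker": True},
                      crm_records.append)
print(q1, q2, crm_records)
```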

Conversational Appointment Management

- Natural language scheduling

- Immediate confirmation or rescheduling

- Reduced no-show rates

Proactive Customer Outreach

- Context-aware reminders and updates

- Personalized messaging during the call

- Better completion rates than robocalls

Because these use cases rely on interaction, they benefit directly from fast calling APIs and real-time voice systems.

What Should Founders And Engineering Leads Evaluate Before Choosing A Voice API?

Choosing a voice API is a long-term decision. Therefore, it should be evaluated as infrastructure, not tooling.

Key Evaluation Criteria

- Does the API support real-time streaming or only playback?

- How is latency handled under load?

- Can you bring your own AI models freely?

- Are SDKs mature and well-documented?

- How does the platform handle failures and retries?

- Is the system designed for future AI evolution?

Because AI capabilities evolve quickly, infrastructure flexibility becomes critical.

This is why many teams now prioritize voice APIs for bulk calling that are model-agnostic and infrastructure-first.
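On the failures-and-retries criterion: whatever the platform provides, campaign code often retries unanswered or failed calls with growing delays so it does not repeatedly hit the same number or overload a carrier. Below is a minimal sketch of exponential backoff with full jitter; the base delay, cap, and attempt limit are illustrative assumptions, and `attempt_call` is a hypothetical stand-in for your provider's call request.

```python
import random
from typing import Callable, Optional

def backoff_delay(attempt: int, base_s: float = 30.0, cap_s: float = 900.0,
                  rng: Optional[random.Random] = None) -> float:
    """Full jitter: a random delay in [0, min(cap, base * 2**attempt)] seconds."""
    rng = rng or random.Random()
    return rng.uniform(0, min(cap_s, base_s * 2 ** attempt))

def dial_with_retries(attempt_call: Callable[[], bool], max_attempts: int = 4,
                      sleep: Callable[[float], None] = lambda s: None,
                      rng: Optional[random.Random] = None) -> tuple[bool, list[float]]:
    """Try a call up to max_attempts times, waiting a backoff delay after each failure."""
    delays = []
    for attempt in range(max_attempts):
        if attempt_call():
            return True, delays
        if attempt < max_attempts - 1:
            delay = backoff_delay(attempt, rng=rng)
            delays.append(delay)
            # Default sleep is a no-op so the example runs instantly;
            # pass time.sleep or schedule a retry job instead.
            sleep(delay)
    return False, delays

outcomes = iter([False, False, True])  # simulated: answered on the third attempt
ok, waits = dial_with_retries(lambda: next(outcomes), rng=random.Random(7))
print(ok, [round(w, 1) for w in waits])
```

Full jitter spreads retries out over time, which helps avoid thousands of failed calls from one campaign being retried at the same moment.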

Is Next-Gen Voice API The Future Of Bulk Calling In 2026 And Beyond?

Looking ahead, the trend is clear. Bulk calling is moving away from scripted broadcasts and toward real-time engagement systems.

Several forces are driving this shift:

- AI models are becoming faster and more affordable

- Users expect natural conversations, even from machines

- Businesses demand better engagement metrics, not just call counts

- Infrastructure now supports global, low-latency streaming

As a result, the idea of a fast calling API in 2026 will likely imply conversational intelligence by default.

Platforms that focus only on dialing will struggle. Meanwhile, systems built around real-time voice interaction will define the next phase of outreach.

Final Thoughts

Faster engagement in bulk calling is no longer achieved through volume alone. Instead, it comes from real-time voice infrastructure that enables instant, conversational interactions at scale. As this blog outlined, next-gen voice APIs remove latency, support AI-driven dialogue, and transform static outreach into meaningful engagement. For founders, product leaders, and engineering teams, the key shift is architectural: moving from playback-based calling to streaming-based voice systems.

FreJun Teler supports this transition by providing a global, low-latency voice layer designed specifically for AI-powered bulk calling workflows. It allows teams to integrate any LLM, STT, or TTS stack while maintaining reliability and speed.

Schedule a demo to explore how Teler can power your next-gen voice engagement strategy.

FAQs

1. What Is A Voice API For Bulk Calling?

A voice API enables programmatic outbound and inbound calls with real-time audio streaming, automation, and AI-driven interaction at scale.

2. How Is A Next-Gen Voice API Different From Traditional Bulk Calling Tools?

Next-gen APIs support live audio streaming and AI conversations, while traditional tools rely on static recordings and fixed IVR flows.

3. Why Does Low Latency Matter In Bulk Voice Engagement?

Low latency ensures natural conversation flow, faster responses, and higher user trust, reducing call drop-offs and improving engagement quality.

4. Can Voice APIs Work With Any LLM Or AI Model?

Yes, as long as the voice infrastructure is model-agnostic. Teler, for example, lets teams integrate any LLM, STT, or TTS system.

5. Is Bulk Calling With AI Scalable For High Volumes?

When built on streaming-based infrastructure, AI-driven bulk calling scales reliably across thousands of concurrent calls.

6. What Industries Benefit Most From AI-Powered Bulk Calling?

Sales, support, healthcare, logistics, fintech, and appointment-driven businesses benefit most from conversational bulk calling systems.

7. How Does Real-Time Streaming Improve Engagement Speed?

Streaming removes buffering delays, enabling instant speech recognition, faster AI responses, and natural turn-taking during calls.

8. Are AI Voice Agents Secure For Enterprise Use?

Yes, when deployed on enterprise-grade voice infrastructure with encrypted transport and controlled AI integration.

9. Can Bulk Calling Be Personalized In Real Time?

Yes, AI voice agents personalize conversations dynamically based on user input, context, and backend data during the call.

10. What Should Teams Evaluate Before Choosing A Voice API?

Latency performance, streaming support, AI flexibility, SDK maturity, reliability, and long-term architectural compatibility.