Voice systems look simple until they are pushed to real scale. As call volumes grow into the millions, traditional assumptions around APIs, latency, and reliability begin to fail. For founders, product managers, and engineering leads, bulk calling is no longer just about placing calls; it is about sustaining real-time conversations under heavy load, without breaking user experience.

This blog explores what it truly takes to build a voice API for bulk calling that operates reliably at scale. From telephony fundamentals to AI-driven voice systems, we break down the architecture, challenges, and decisions that matter when voice becomes mission-critical.

Why Is Bulk Voice Calling Still Hard At a Million-Scale?

Bulk voice calling looks simple on the surface. You trigger calls, play audio, and connect users. However, once volume increases from thousands to millions, the system behavior changes completely.

In practice, voice systems fail under scale not because of logic, but because of infrastructure limits. As call volume grows, even small inefficiencies multiply rapidly. Therefore, what works at 10,000 calls often breaks at 1 million.

Some common issues that appear at scale include:

- Call setup delays during peak bursts

- Dropped calls due to carrier throttling

- Audio jitter and degraded voice quality

- Media streams failing under heavy load

- Unpredictable latency across regions

Because of this, founders and engineering leaders often underestimate how different heavy load voice systems behave compared to other APIs.

More importantly, voice traffic is real-time. Unlike emails or notifications, voice cannot be queued or retried without user impact. As a result, every failure is immediately visible to the end user.

This is why building a voice API for bulk calling requires a fundamentally different mindset than building other scalable systems.

What Exactly Is A Voice API For Bulk Calling?

At its core, a Voice API allows applications to programmatically place and receive phone calls. However, when bulk calling enters the picture, the definition expands significantly.

A modern million scale calling API typically handles:

- Call initiation and termination

- Telephony signaling (SIP or similar protocols)

- Media transport for live audio

- Call state tracking

- Event callbacks for call progress

While REST APIs are often used to trigger calls, the real complexity lives elsewhere. Specifically, media transport and call concurrency define whether a system can scale.

It is important to separate two concepts:

| Concept | What It Means |

| Bulk Calling | Large number of calls triggered |

| Million-Scale Calling | High concurrency, sustained throughput, low failure |

Many platforms support bulk calling. However, far fewer can handle millions of concurrent or near-concurrent calls without quality loss.

Telecom APIs – including voice – are forecast to grow from $32B in 2024 to nearly $88B by 2030, reflecting broad enterprise adoption of programmable voice and telecom services.

Where to place: When explaining why enterprise apps use APIs for voice.

Therefore, when evaluating a voice API for bulk calling, scale must be treated as a first-class design goal, not an afterthought.

What Happens When You Try To Scale Voice Calls To Millions?

Scaling voice systems exposes limits that are invisible at smaller volumes. Initially, calls may succeed. Then, under load, performance degrades suddenly.

This happens because voice systems rely on multiple layers working together:

- Signaling layer – handles call setup and teardown

- Media layer – streams live audio

- Carrier layer – routes calls across telecom networks

- Application layer – manages call logic

At million scale, stress appears across all four layers at once.

Key Bottlenecks That Appear Under Heavy Load

- Calls Per Second (CPS) limits during outbound bursts

- Carrier-imposed concurrency caps

- RTP media stream saturation

- CPU spikes from audio encoding/decoding

- Network jitter affecting real-time audio

Because voice is continuous, systems cannot pause or recover gracefully. As a result, small timing issues turn into dropped calls quickly.

In contrast to typical APIs, retries are not helpful here. Once a user hears silence or delay, trust is lost.

This is why heavy-load voice systems require strict latency control and predictable performance, not just raw throughput.

Why Are Traditional Calling APIs Not Enough For Modern Use Cases?

Traditional calling APIs were designed during a different era of voice usage. Their main goal was reliability for static flows.

They work well for:

- IVR menus

- Pre-recorded outbound campaigns

- Basic call routing

- Call recording and tracking

However, modern use cases demand more.

Today, businesses want:

- Dynamic conversations

- Real-time decision making

- Context-aware responses

- Personalized call flows

- AI-driven automation

Traditional APIs struggle here because they are event-based, not stream-based. They react to call events but do not handle continuous real-time processing well.

As a result, when intelligence is added on top, teams start to experience:

- Delays between user speech and response

- Complex state management

- Fragile integrations

- Scaling problems when logic becomes dynamic

Therefore, while traditional APIs support calling, they do not support modern, intelligent, million-scale voice systems.

What Does A Modern Voice System Need To Handle Heavy Load Seamlessly?

To scale voice reliably, architecture must be designed specifically for real-time workloads. Simply adding more servers is not enough.

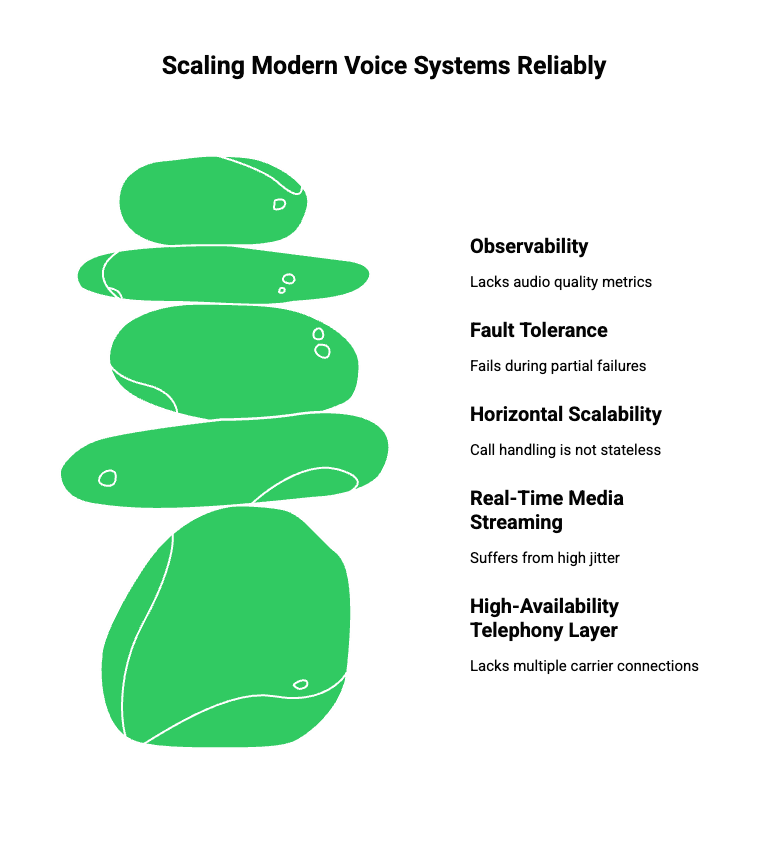

A modern voice system must support the following pillars:

High-Availability Telephony Layer

- Multiple carrier connections

- Automatic failover

- Region-aware routing

Real-Time Media Streaming

- Continuous audio streams

- Low jitter tolerance

- Bidirectional communication

Horizontal Scalability

- Stateless call handling where possible

- Independent scaling of media and logic

- Isolation between call sessions

Fault Tolerance

- Graceful degradation during partial failures

- Retry strategies at carrier level

- Fast session recovery

Observability

- Call success rates

- Latency per stage

- Audio quality metrics

Without these foundations, systems cannot claim best scalability 2026 readiness.

How Do Voice APIs Change When AI And LLMs Are Introduced?

When AI enters the system, voice architecture changes fundamentally.

Voice agents are not simple bots. Technically, they are composed of multiple systems working together in real time.

A typical voice agent includes:

- Speech-to-Text (STT) for live audio

- A Large Language Model (LLM) for dialogue logic

- Retrieval systems (RAG) for context

- Tool execution for actions

- Text-to-Speech (TTS) for responses

Because of this, voice becomes a continuous processing pipeline, not a sequence of events.

Moreover, latency becomes critical. Every delay feels unnatural. Therefore, batch processing or delayed callbacks are no longer acceptable.

This is where many systems break. They were designed for call control, not conversational flow.

What Does The Real-Time Voice AI Architecture Look Like At Scale?

To understand scale, it helps to visualize the real-time loop.

End-To-End Voice Flow

- Caller speaks

- Audio is streamed instantly

- STT produces partial transcripts

- LLM processes intent and context

- Tools or data sources are queried

- Response text is generated

- TTS streams audio back

- Caller hears the response

This loop repeats continuously during the call.

Because each step depends on the previous one, any delay compounds across the system. Therefore, systems must be designed to stream data as early as possible.

Why Streaming Matters

- Reduces perceived latency

- Allows early response generation

- Improves conversational flow

- Handles interruptions naturally

At scale, streaming is not optional. It is mandatory.

How Do You Prevent Latency And Audio Issues At Scale?

Finally, preventing failures requires discipline at every layer.

Key strategies include:

- Using lightweight audio codecs

- Avoiding blocking calls in the pipeline

- Separating media processing from logic

- Deploying region-local media nodes

- Monitoring jitter and packet loss continuously

Additionally, systems must assume failure will happen. Therefore, fallback logic must be built in from day one.

Where Does FreJun Teler Fit In A Million-Scale Voice Architecture?

After understanding the challenges of bulk calling and real-time voice AI, the next logical question is where infrastructure responsibility should sit.

In a modern system, voice transport and AI logic must be clearly separated. Otherwise, scale becomes unmanageable.

This is where FreJun Teler fits in.

FreJun Teler acts as the voice infrastructure layer that sits between telecom networks and your AI stack. Instead of controlling logic or models, it focuses entirely on what is hardest to scale: real-time voice delivery under heavy load.

In simple terms:

- Teler handles telephony connectivity and media streaming

- Your system handles LLMs, STT, TTS, RAG, and tool calling

Because of this separation, teams can scale voice traffic independently from AI compute. As a result, systems remain stable even when call volume spikes suddenly.

How Does Teler Support Million-Scale Bulk Calling

Handling millions of calls is not about raw throughput alone. Instead, it is about predictable behavior under stress.

Teler is designed to manage:

- High call concurrency

- Burst traffic patterns

- Long-running voice sessions

- Real-time bidirectional audio

Importantly, Teler treats each call as a real-time streaming session, not a series of discrete events. Therefore, audio flows continuously without waiting for state transitions.

Key Capabilities That Enable Scale

- Carrier-grade call routing

- Distributed media handling across regions

- Stateless session management where possible

- Fast call setup with minimal signaling overhead

Because of this, Teler can support million scale calling APIs without coupling scale to application logic.

How Does Teler Work With Any LLM, STT, Or TTS?

One of the biggest concerns for engineering leaders is vendor lock-in. Teler avoids this by design.

Teler does not impose:

- A specific LLM

- A specific STT engine

- A specific TTS provider

Instead, it provides a clean voice transport layer that streams audio in and out.

Typical Integration Flow

- Teler streams live call audio

- Audio is sent to your chosen STT engine

- Partial transcripts are processed by your LLM

- The LLM calls tools or retrieves context

- Response text is sent to your TTS engine

- Generated audio is streamed back via Teler

Because all communication is streaming-based, latency remains low even at scale.

This design gives teams freedom while maintaining performance.

What Does A Real Implementation Look Like For Engineering Teams?

From an implementation perspective, building with Teler follows a predictable structure.

Step-by-Step High-Level Flow

- Configure inbound or outbound call routing in Teler

- Establish real-time media streams

- Forward audio chunks to STT

- Maintain conversation state in your backend

- Send generated audio back to Teler

- Monitor call quality and performance

Although the flow looks complex, responsibilities are clearly divided. As a result, teams can work independently on voice, AI, and business logic.

Where Teams Often Go Wrong

- Mixing AI logic with telephony control

- Blocking media streams while waiting for LLM output

- Treating voice as a request-response system

Avoiding these mistakes early saves months of refactoring later.

How Does Teler Handle Heavy Load Without Dropping Calls?

Under heavy load, stability matters more than features.

Teler is built to maintain call quality even when systems are stressed. It does this through:

- Distributed media nodes to reduce network distance

- Automatic load balancing across carriers

- Isolation between concurrent call sessions

- Back-pressure handling for downstream systems

Because of this, spikes in AI latency do not automatically cause call drops. Instead, voice streams continue while logic catches up.

This is critical for heavy load voice systems, where call continuity is non-negotiable.

How Does This Compare To Traditional Calling Platforms?

Traditional platforms excel at call control. However, they struggle when conversations become dynamic.

The difference becomes clear when comparing capabilities:

| Capability | Traditional Voice APIs | Teler-Based Architecture |

| Bulk calling | Yes | Yes |

| Million-scale concurrency | Limited | Designed for it |

| Real-time audio streaming | Partial | Native |

| AI-driven conversations | Add-on | Core use case |

| Model flexibility | Restricted | Fully open |

| Latency control | Best-effort | Streaming-first |

Because of this, Teler is better suited for voice systems built for the next decade, not legacy telephony use cases.

How Do You Measure Success In Million-Scale Voice Systems?

Once deployed, measurement becomes critical.

Founders and product leaders should focus on metrics that reflect real user experience.

Core Metrics To Track

- Call connection success rate

- End-to-end latency

- Audio jitter and packet loss

- AI response time

- Conversation completion rate

Equally important, systems must expose these metrics in real time. Without visibility, failures are discovered too late.

Therefore, observability should be treated as a feature, not an afterthought.

Why Is This Architecture Better For Scalability In 2026 And Beyond?

Looking ahead, voice systems will only become more demanding.

Future requirements include:

- More natural conversations

- Multilingual real-time processing

- Deeper personalization

- Integration with enterprise systems

This means systems must remain flexible.

By separating voice infrastructure from AI logic, teams can evolve models, tools, and workflows without touching the telephony layer.

This approach supports best scalability 2026 readiness while reducing long-term risk.

What Should Founders And Product Leaders Evaluate Before Choosing A Voice API?

Before committing to any platform, decision-makers should ask:

- Can this handle real-time streaming at scale?

- Does it support millions of concurrent sessions?

- Can we bring our own AI stack?

- How does it behave under burst traffic?

- Is observability built in?

Choosing the wrong foundation makes future innovation expensive. Therefore, infrastructure decisions should be made with long-term scale in mind.

Final Thoughts

Building a voice API for bulk calling at million scale requires more than high throughput. It demands real-time media streaming, predictable latency, and infrastructure that stays stable under heavy load. As voice systems evolve toward AI-driven conversations, the separation between telephony and intelligence becomes essential.

Teams must design architectures where voice transport scales independently, while LLMs, STT, TTS, and tools evolve freely. FreJun Teler enables this approach by acting as a dedicated voice infrastructure layer built for real-time, high-concurrency environments. If you are planning to deploy AI voice agents at scale, Teler helps you focus on intelligence while it handles voice complexity reliably.

Schedule a demo to see how Teler supports million-scale voice systems.

FAQs –

1. What is a voice API for bulk calling?

A voice API for bulk calling enables applications to programmatically place and manage large volumes of concurrent phone calls reliably.

2. Why do voice systems fail at million scale?

Failures occur due to carrier limits, media latency, poor concurrency handling, and lack of real-time streaming architecture.

3. Is bulk calling the same as scalable calling?

No. Bulk calling triggers many calls, while scalable calling maintains quality and stability under sustained heavy load.

4. Can traditional calling APIs support AI voice agents?

They support basic calls but struggle with real-time streaming and dynamic conversational logic required for AI agents.

5. What makes voice different from other APIs?

Voice is real-time, continuous, and latency-sensitive, making retries and delays immediately visible to users.

6. How important is latency in voice AI systems?

Latency directly affects conversation quality; delays over milliseconds can make interactions feel unnatural.

7. What role does STT and TTS play in voice agents?

STT converts live speech to text, while TTS generates natural audio responses in real time.

8. Can voice systems scale independently from AI models?

Yes, when voice infrastructure is decoupled from AI logic, each layer can scale independently.

9. What should engineering teams monitor in voice systems?

Call success rates, latency, jitter, packet loss, and AI response time are critical metrics.

10. When should teams invest in scalable voice infrastructure?

Early – before traffic spikes – because retrofitting scale into voice systems is complex and risky.