Voice-based communication is no longer limited to call centers or IVRs. Today, businesses are using Voice APIs combined with AI and multilingual automation to deliver real-time, human-like conversations at global scale. As customer bases expand across regions, language becomes a critical factor in engagement, trust, and conversion. This is where modern voice infrastructure, paired with LLMs, STT, and TTS systems, plays a defining role.

In this blog, we explore how voice APIs enable multilingual conversations, the technical architecture behind them, and the measurable business benefits they unlock. Whether you are a founder, product manager, or engineering lead, this guide aims to help you design scalable, language-aware voice systems with confidence.

Why Do Businesses Need A Voice API Today?

Modern businesses are experiencing a shift in how customers want to communicate. Instead of text-only channels, many users expect real-time spoken interactions. As a result, companies are adopting voice APIs to power conversational systems that operate at scale.

Industry research suggests that around 80% of businesses plan to adopt AI-driven voice technology for customer service by 2026, underscoring how strategic voice automation has become.


A voice API acts as a programmable interface that lets developers add voice calling capabilities to applications without managing telephony infrastructure. In practice, businesses can embed calling features, automate responses, and stream real-time audio. For large enterprises and fast-moving startups alike, this technology opens new avenues for customer engagement.

Key benefits of using a voice API for businesses include:

- Scalability: Add or remove call capacity programmatically.

- Automation: Trigger calls based on events or workflows.

- Multichannel Integration: Tie voice into web, mobile, and backend systems.

- Real-Time Control: Manage calls with code rather than manual tools.

Furthermore, voice APIs enable multilingual voice automation by serving as the backbone for real-time audio capture and delivery. Whether a call originates in Mumbai or Madrid, businesses can build global communication systems without worrying about underlying networks.

What Makes Multilingual Voice Automation Critical For Global Businesses?

As companies expand globally, supporting only one language is no longer sufficient. Customers expect help in their native language, and failing to provide this can lead to dissatisfaction and churn. Consequently, multilingual customer support has become a strategic priority.

However, enabling true multilingual interaction is not trivial. Voice systems must handle:

- Speech recognition across many languages

- Natural-sounding speech synthesis in multiple languages and voices

- Low-latency responses for real-time conversations

- Contextual understanding regardless of language

This is where language-aware AI and multilingual voice automation intersect. Instead of static recordings or limited rule-based menus, advanced systems convert spoken language into text, interpret its meaning, generate a response, and convert that response back into speech.

For example, in customer support:

- A user calls in Spanish.

- The system processes the audio and understands intent.

- The response is generated in fluent Spanish.

- The customer hears a natural reply in real time.

Beyond support, multilingual voice automation can be used for:

- Outbound notification calls

- Appointment reminders

- Surveys and feedback gathering

- Global calling API–based campaigns

Therefore, the ability to automatically process and respond in multiple languages increases reach, boosts customer satisfaction, and reduces operational costs.

How Do Voice APIs Work With AI And LLMs To Enable Multilingual Conversations?

To understand the full technical picture, it helps to break down how voice interactions flow from user speech to intelligent response. A robust voice system involves the following key components:

- Voice Capture

- Speech-to-Text (STT) Processing

- Large Language Model (LLM) or AI Logic

- Retrieval-Augmented Generation (RAG)

- Text-to-Speech (TTS) Output

- Real-Time Streaming and Latency Control

The table below summarizes the typical flow:

| Step | Component | Purpose |
| --- | --- | --- |
| 1 | Voice Input | Capture audio from a caller |
| 2 | STT Engine | Convert speech to text |
| 3 | AI/LLM | Interpret text and decide response |
| 4 | RAG/Database | Pull supporting data if needed |
| 5 | TTS Engine | Convert text reply back to audio |
| 6 | Voice Output | Stream reply back to the caller |
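As a rough illustration, one conversational turn through this pipeline can be sketched in Python. This is a minimal sketch under stated assumptions: the four stage functions are hypothetical placeholders for whichever STT, retrieval, LLM, and TTS services you connect, and steps 1 and 6 (capturing and returning audio) are assumed to be handled by the voice API's transport layer. Retrieval usually runs before the LLM writes its reply, so steps 3 and 4 interleave in practice.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Transcript:
    text: str
    language: str  # language tag reported by the STT engine, e.g. "es-ES"


@dataclass
class Pipeline:
    # Hypothetical pluggable stages; replace each with a real client.
    transcribe: Callable[[bytes], Transcript]             # step 2: STT
    retrieve: Callable[[str], List[str]]                  # step 4: RAG
    generate_reply: Callable[[str, str, List[str]], str]  # step 3: LLM
    synthesize: Callable[[str, str], bytes]               # step 5: TTS

    def handle_turn(self, audio_in: bytes) -> bytes:
        """Run one caller turn: audio in (step 1), audio out (step 6)."""
        transcript = self.transcribe(audio_in)
        context = self.retrieve(transcript.text)
        # The detected language travels with the turn so the reply matches it.
        reply = self.generate_reply(transcript.text, transcript.language, context)
        return self.synthesize(reply, transcript.language)


# Fake stages so the sketch runs end to end without external services.
pipeline = Pipeline(
    transcribe=lambda audio: Transcript(audio.decode("utf-8"), "es-ES"),
    retrieve=lambda text: ["Horario: 9:00-18:00"],
    generate_reply=lambda text, lang, ctx: f"[{lang}] {ctx[0]}",
    synthesize=lambda text, lang: text.encode("utf-8"),
)
print(pipeline.handle_turn("¿Cuál es el horario?".encode("utf-8")))
# b'[es-ES] Horario: 9:00-18:00'
```

Passing the stages in as functions keeps the pipeline model-agnostic: switching STT or TTS providers only changes the function you pass in.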

Voice Input And Capture

The process begins when a user speaks. The system must capture audio streams reliably. A global calling API simplifies this by providing a stable transport layer that accepts incoming voice streams and returns audio with minimal buffering delay.
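Real-time transports typically move audio in small, fixed-duration frames rather than whole utterances. The sketch below assumes 16-bit linear PCM, mono, at 8 kHz (a common telephony rate) and 20 ms frames; your voice API's actual codec and frame size may differ, so treat these values as assumptions.

```python
def frame_size_bytes(sample_rate_hz: int, frame_ms: int,
                     bytes_per_sample: int = 2, channels: int = 1) -> int:
    """Bytes in one frame of raw PCM audio."""
    return sample_rate_hz * frame_ms // 1000 * bytes_per_sample * channels


def split_frames(pcm: bytes, frame_bytes: int) -> list:
    """Split a PCM buffer into fixed-size frames, zero-padding the last one.

    Zero bytes are silence for linear PCM only; a mu-law stream would need
    a different padding value.
    """
    frames = []
    for start in range(0, len(pcm), frame_bytes):
        frame = pcm[start:start + frame_bytes]
        if len(frame) < frame_bytes:
            frame += b"\x00" * (frame_bytes - len(frame))
        frames.append(frame)
    return frames


size = frame_size_bytes(8000, 20)           # 160 samples * 2 bytes = 320
frames = split_frames(b"\x01" * 1000, size)
print(size, len(frames), len(frames[-1]))   # 320 4 320
```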

Speech-to-Text (STT) Processing

Once audio is captured, the next step is to convert it to text. An STT engine must be:

- Accurate across languages

- Robust to accents and noise

- Fast enough to prevent lag

Because many languages have subtle phonetic differences, high-quality STT is essential for correct interpretation.

AI Processing And LLM Interpretation

After converting speech to text, the system needs to understand what the user said. This is where an LLM or custom AI logic comes in. The AI performs:

- Intent recognition

- Context tracking

- Dialogue state management

Here, language-aware AI delivers two benefits:

- It accurately interprets user intent regardless of language

- It maintains context across multi-turn conversations
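A minimal sketch of the dialogue state an AI layer might keep per call, assuming a chat-style LLM interface that accepts a list of role/content messages (a common convention, not a specific vendor's API). The intent and slot names are hypothetical. Storing the detected language in the state is what lets every later turn answer in the caller's language.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class DialogueState:
    language: str                        # caller language detected at call start
    intent: Optional[str] = None         # most recent recognized intent
    slots: Dict[str, str] = field(default_factory=dict)  # collected details
    history: List[Tuple[str, str]] = field(default_factory=list)  # (role, text)
    max_turns: int = 6                   # how many recent turns to keep

    def add_turn(self, role: str, text: str) -> None:
        self.history.append((role, text))
        # Keep only the most recent turns so the LLM prompt stays bounded.
        if len(self.history) > self.max_turns:
            self.history = self.history[-self.max_turns:]

    def to_messages(self) -> List[Dict[str, str]]:
        """Build a chat-style message list for the LLM call."""
        system = (f"Reply in the caller's language ({self.language}). "
                  f"Known details: {self.slots}")
        return [{"role": "system", "content": system}] + [
            {"role": role, "content": text} for role, text in self.history
        ]


state = DialogueState(language="es")
state.intent = "reschedule_appointment"   # hypothetical intent label
state.slots["date"] = "2024-06-03"
for i in range(8):
    state.add_turn("user" if i % 2 == 0 else "assistant", f"turno {i}")
print(len(state.history), state.history[0])  # 6 ('user', 'turno 2')
print(len(state.to_messages()))              # 7
```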

Retrieval-Augmented Generation (RAG)

In real-world applications, AI doesn’t just generate generic responses. It must pull relevant data from knowledge sources such as:

- FAQs

- CRM records

- Product databases

- Support tickets

This step is often implemented using RAG, which improves accuracy and relevance of responses.
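To make the retrieval step concrete, here is a deliberately simple sketch that ranks FAQ entries by word overlap with the caller's question and places the best matches in the prompt. It is a stand-in, not how production RAG is usually built: real systems typically use vector embeddings, and a multilingual embedding model is what lets a Spanish question match an English document. This lexical version only matches within the same language, which the example makes visible.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    # \w is Unicode-aware in Python 3, so accented words like "días" stay intact.
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return up to k documents sharing the most words with the query."""
    q = tokenize(query)
    scored = [(len(q & tokenize(doc)), doc) for doc in documents]
    scored = [item for item in scored if item[0] > 0]   # drop non-matches
    scored.sort(key=lambda item: item[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_prompt(query: str, passages: List[str]) -> str:
    context = "\n".join(f"- {p}" for p in passages) or "- (no matching entries)"
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


faq = [
    "Refunds are processed within 5 business days.",
    "Our support line is open 9am to 6pm.",
    "Los reembolsos se procesan en 5 días hábiles.",
]
print(retrieve("How long do refunds take?", faq))
# ['Refunds are processed within 5 business days.']
print(retrieve("¿En cuántos días se procesan los reembolsos?", faq))
# ['Los reembolsos se procesan en 5 días hábiles.']
```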

Text-to-Speech (TTS) Output

Once the system decides on a reply, it needs to convert text back into audio. High-quality TTS engines produce replies that are:

- Natural-sounding

- Emotionally appropriate

- Correct for the target language

This ensures users hear responses that feel conversational rather than robotic.
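Many TTS engines accept SSML (Speech Synthesis Markup Language, a W3C standard), which lets you declare the language of the text explicitly instead of relying on an engine default. A minimal sketch follows; which SSML elements and attributes each engine honors varies, so check your provider's documentation before relying on `prosody` or `xml:lang`.

```python
from xml.sax.saxutils import escape


def to_ssml(text: str, lang: str, rate: str = "medium") -> str:
    """Wrap reply text in an SSML document tagged with its language.

    `lang` and `rate` are assumed to be trusted values such as "es-MX";
    only the reply text is escaped, because it may contain &, < or >.
    """
    return (
        '<speak version="1.1" xmlns="http://www.w3.org/2001/10/synthesis" '
        f'xml:lang="{lang}">'
        f'<prosody rate="{rate}">{escape(text)}</prosody>'
        "</speak>"
    )


print(to_ssml("Su cita es el lunes & el martes.", "es-MX"))
```

Escaping matters because LLM output can contain characters such as `&` that would otherwise make the SSML invalid.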

Real-Time Streaming And Latency Management

To keep conversations natural, every part of this pipeline must operate with minimal delay. Real-time voice systems, and real-time voice translation in particular, therefore require:

- Low-latency transport of audio

- Efficient parallel processing

- Fast inference from STT, LLM, and TTS engines

Without these, users will experience awkward pauses that disrupt conversational flow.
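One common way to cut perceived delay is to stop waiting for the LLM's full reply: as text streams in, hand each completed sentence to TTS immediately, so the caller hears the first sentence while the rest is still being generated. The sketch below assumes the LLM exposes its output as an iterable of text chunks; the sentence splitter is intentionally naive (for example, it would split "3.5" at the decimal point).

```python
import re
from typing import Iterable, Iterator

# Sentence-ending punctuation, including full-width forms used in CJK text.
SENTENCE_END = re.compile(r"([.!?。！？])\s*")


def sentences_from_tokens(tokens: Iterable[str]) -> Iterator[str]:
    """Yield complete sentences as soon as a token stream produces them."""
    buffer = ""
    for token in tokens:
        buffer += token
        # Emit every complete sentence currently in the buffer.
        while True:
            match = SENTENCE_END.search(buffer)
            if not match:
                break
            sentence = buffer[:match.end()].strip()
            buffer = buffer[match.end():]
            if sentence:
                yield sentence
    if buffer.strip():
        yield buffer.strip()   # flush trailing text without final punctuation


stream = ["Your order", " ships today", ". It arrives", " Friday! Anything", " else?"]
for sentence in sentences_from_tokens(stream):
    print(sentence)
# Your order ships today.
# It arrives Friday!
# Anything else?
```

Each yielded sentence would be sent to the TTS engine as soon as it appears, so generation and speech overlap instead of running back to back.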

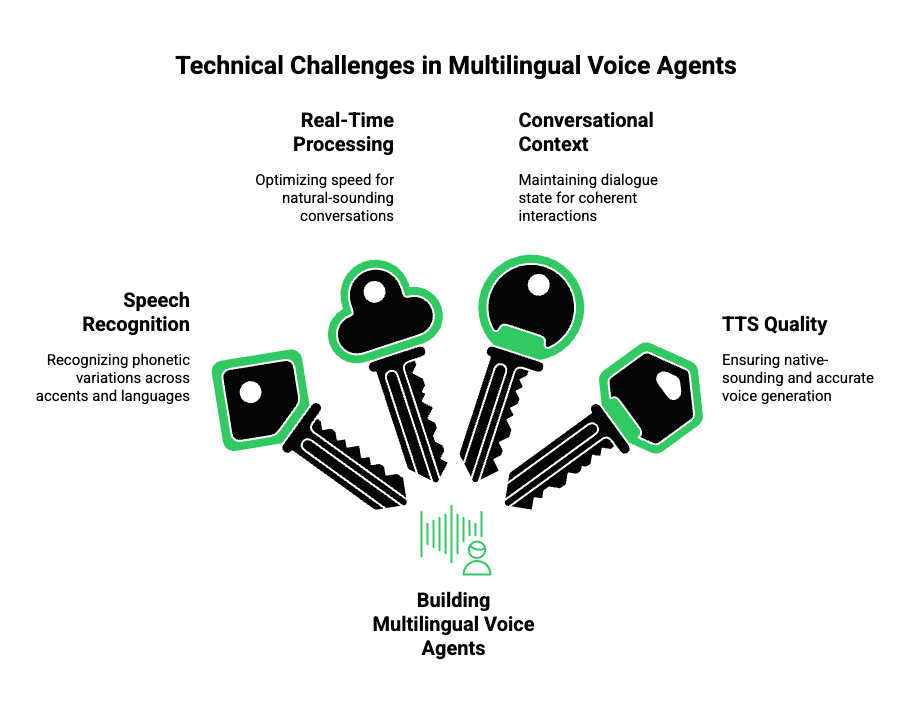

What Are The Technical Challenges In Building Multilingual Voice Agents?

Building systems capable of multilingual interactions is complex, and several challenges must be addressed:

1. Speech Recognition Across Accents And Languages

STT engines must recognize subtle phonetic variations. For example, Spanish spoken in Argentina differs from that in Mexico. Similarly, dialects in Arabic present unique challenges.

To handle this:

- Train or fine-tune STT models on diverse datasets

- Use language identification modules for initial detection

- Employ confidence scoring to detect misrecognitions
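The last two points can be combined into a small gating step: accept the language-identification result only when it is confident and supported, and ask the caller to confirm when the transcript's word-level confidence is low. The score format (a mapping from language tag to probability), the supported-language set, and both thresholds are assumptions; tune them against your own recordings.

```python
from typing import Dict, List, Optional

SUPPORTED_LANGUAGES = {"en", "es", "ar", "hi"}  # hypothetical deployment


def choose_language(scores: Dict[str, float], threshold: float = 0.7,
                    default: Optional[str] = None) -> Optional[str]:
    """Return the detected tag, or `default` (None means: ask the caller)."""
    if not scores:
        return default
    tag, score = max(scores.items(), key=lambda kv: kv[1])
    base = tag.split("-")[0].lower()          # "es-AR" -> "es"
    if score >= threshold and base in SUPPORTED_LANGUAGES:
        # Keep the full tag so a regional STT model (e.g. es-AR) can be chosen.
        return tag
    return default


def needs_confirmation(word_confidences: List[float], min_avg: float = 0.8) -> bool:
    """Flag a likely misrecognition when average word confidence is low."""
    if not word_confidences:
        return True
    return sum(word_confidences) / len(word_confidences) < min_avg


print(choose_language({"es-AR": 0.92, "pt-BR": 0.05}))  # es-AR
print(choose_language({"es": 0.55, "pt": 0.40}))        # None
print(needs_confirmation([0.95, 0.90, 0.50]))           # True
```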

2. Low-Latency Real-Time Processing

Natural-sounding conversation depends on speed. Technical teams must optimize:

- Audio packet transport

- Parallel processing between STT, AI, and TTS

- Buffer management

This is especially important for real-time voice translation, where even short delays are noticeable.
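Buffer management is largely about refusing to let queues grow: once audio backs up, every later word arrives late. A minimal sketch of a bounded playout buffer follows, assuming 20 ms frames; when the consumer falls behind, it drops the oldest frames so delay stays capped. Dropping audio causes audible glitches, so this trade-off suits playback under overload, not audio you still need to transcribe.

```python
from collections import deque
from typing import Optional


class BoundedAudioBuffer:
    """Caps queued audio: when full, the oldest frame is evicted on push."""

    def __init__(self, max_frames: int):
        self._frames = deque(maxlen=max_frames)
        self.dropped = 0

    def push(self, frame: bytes) -> None:
        if len(self._frames) == self._frames.maxlen:
            self.dropped += 1      # deque(maxlen=...) evicts the oldest on append
        self._frames.append(frame)

    def pop(self) -> Optional[bytes]:
        return self._frames.popleft() if self._frames else None

    def buffered_ms(self, frame_ms: int = 20) -> int:
        return len(self._frames) * frame_ms


buf = BoundedAudioBuffer(max_frames=10)   # 10 x 20 ms = 200 ms cap
for i in range(15):
    buf.push(bytes([i]))
print(buf.dropped, buf.buffered_ms())     # 5 200
```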

3. Consistent Conversational Context

Multilingual conversations still require tracking what the user said earlier in the session. Therefore:

- Dialogue state must persist across turns

- Context must transfer seamlessly between processing stages

- Memory mechanisms or session management logic must be robust

Without stable context, answers appear disjointed or unrelated.
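A minimal in-memory sketch of per-call session persistence, keyed by a call identifier and expiring after a period of inactivity. In production this state would usually live in a shared store (for example Redis or a database) so any worker handling the call can read it; the class and its parameters here are hypothetical. The clock is injectable so expiry can be tested without waiting.

```python
import time
from typing import Any, Callable, Dict, Tuple


class SessionStore:
    """Per-call conversation state that expires after `ttl_seconds` idle."""

    def __init__(self, ttl_seconds: float = 1800.0,
                 clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._sessions: Dict[str, Tuple[float, Dict[str, Any]]] = {}

    def get(self, call_id: str) -> Dict[str, Any]:
        """Return the live session for call_id, starting fresh if absent or expired."""
        now = self._clock()
        entry = self._sessions.get(call_id)
        if entry is None or now - entry[0] > self._ttl:
            state: Dict[str, Any] = {"language": None, "history": []}
        else:
            state = entry[1]
        self._sessions[call_id] = (now, state)  # refresh last-access time
        return state


fake_now = [0.0]
store = SessionStore(ttl_seconds=60, clock=lambda: fake_now[0])
store.get("call-42")["history"].append(("user", "Hola"))
fake_now[0] = 30.0
print(store.get("call-42")["history"])   # [('user', 'Hola')]
fake_now[0] = 100.0                      # 70 s idle, past the 60 s TTL
print(store.get("call-42")["history"])   # []
```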

4. TTS Quality And Natural Response Generation

Not all TTS engines are equal. For multilingual support:

- Voices must sound native rather than synthetic

- Pronunciation must be accurate

- Tone must match expected conversational style

This often requires choosing specialized TTS models per language.
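Choosing a TTS voice per language can be as simple as a lookup table with a fallback chain: try the full regional tag, then the base language, then a default. This follows the spirit of the "lookup" matching scheme for language tags described in RFC 4647. The voice IDs are hypothetical; real identifiers come from your TTS provider.

```python
from typing import Dict, List, Optional

# Hypothetical voice IDs keyed by lowercase language tag.
VOICES: Dict[str, str] = {
    "es-mx": "voice-es-mx-1",
    "es": "voice-es-es-1",
    "en": "voice-en-us-1",
    "ar": "voice-ar-msa-1",
}


def fallback_chain(tag: str) -> List[str]:
    """'es-AR' -> ['es-ar', 'es']: progressively truncate the language tag."""
    parts = tag.replace("_", "-").lower().split("-")
    return ["-".join(parts[:i]) for i in range(len(parts), 0, -1)]


def select_voice(tag: str, default_tag: str = "en") -> Optional[str]:
    for candidate in fallback_chain(tag) + [default_tag]:
        if candidate in VOICES:
            return VOICES[candidate]
    return None


print(select_voice("es-MX"))  # voice-es-mx-1
print(select_voice("es-AR"))  # voice-es-es-1 (falls back to base Spanish)
print(select_voice("fr-FR"))  # voice-en-us-1 (no French voice configured)
```

Silently answering a French caller in English is rarely what you want; a real deployment might route that call to a human instead, which is easy to do by passing a `default_tag` with no configured voice and handling `None`.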

How Can Businesses Use Multilingual Voice APIs In Real-World Scenarios?

Once technical blocks are in place, businesses can unlock powerful use cases. Below are common applications that benefit from multilingual voice automation:

Inbound Calls

Companies can deploy multilingual interactive voice response (IVR) systems that:

- Detect caller language automatically

- Route users to appropriate support

- Provide self-service options

This can significantly reduce wait times and improve caller satisfaction.

Outbound Campaigns

With a global calling API, businesses can automate high-volume outbound calls such as:

- Appointment reminders

- Lead qualification

- Survey collection

- Billing notifications

When paired with TTS in native languages, these calls feel personalized rather than scripted.
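For outbound campaigns across time zones, one practical guard is to place a call only inside the recipient's local calling window. A minimal sketch using Python's standard `zoneinfo` module (Python 3.9+, which needs the system time zone database or the `tzdata` package); the 09:00 to 20:00 window is an assumption, since permitted calling hours vary by country and by type of call.

```python
from datetime import datetime, time, timezone
from zoneinfo import ZoneInfo


def within_calling_hours(now_utc: datetime, recipient_tz: str,
                         start: time = time(9, 0),
                         end: time = time(20, 0)) -> bool:
    """True if the recipient's local clock is inside [start, end)."""
    local = now_utc.astimezone(ZoneInfo(recipient_tz))
    return start <= local.time() < end


now = datetime(2024, 1, 15, 14, 0, tzinfo=timezone.utc)
for tz in ["Europe/Madrid", "America/Mexico_City", "Asia/Kolkata"]:
    print(tz, within_calling_hours(now, tz))
# Europe/Madrid True          (15:00 local)
# America/Mexico_City False   (08:00 local)
# Asia/Kolkata True           (19:30 local)
```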

Customer Support Automation

Support teams can benefit from AI-driven voice systems that:

- Understand natural queries

- Provide accurate answers drawn from company knowledge

- Escalate to human agents when needed

This enables 24/7 support without overloading human agents.
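The escalation rule in the list above can be made explicit. A minimal sketch, assuming the pipeline exposes a retrieval score between 0 and 1 and counts consecutive unresolved turns; the phrase lists and thresholds are hypothetical and would need per-language tuning, since plain substring matching is crude.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical phrases that signal a request for a person, per language.
HUMAN_REQUEST_PHRASES: Dict[str, List[str]] = {
    "en": ["human", "agent", "representative"],
    "es": ["humano", "agente", "persona"],
}


@dataclass
class TurnSignals:
    language: str           # base language of the current call, e.g. "es"
    transcript: str         # what the caller just said
    retrieval_score: float  # best knowledge-base match, assumed 0..1
    failed_turns: int       # consecutive turns the agent could not resolve


def should_escalate(s: TurnSignals, min_score: float = 0.5,
                    max_failures: int = 2) -> bool:
    """Hand the call to a human on request, repeated failure, or weak grounding."""
    text = s.transcript.lower()
    if any(p in text for p in HUMAN_REQUEST_PHRASES.get(s.language, [])):
        return True
    if s.failed_turns >= max_failures:
        return True
    return s.retrieval_score < min_score


print(should_escalate(TurnSignals("es", "Quiero hablar con una persona", 0.9, 0)))  # True
print(should_escalate(TurnSignals("en", "Where is my order?", 0.8, 0)))             # False
```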

How Are Traditional Voice Platforms Different From AI-Powered Voice APIs?

Traditional telephony providers focus on call connection and routing. They offer:

- Basic voice call setup

- Call recording

- DTMF menus

However, they lack deep AI integration. With traditional systems:

- Speech understanding is limited

- Multilingual automation is often manual

- Real-time context and language handling are weak

By contrast, modern voice APIs are designed with AI in mind from the ground up. They support:

- Real-time audio streaming

- Easy integration with STT and TTS

- Context-aware language processing

- Tool calling and data retrieval

This makes them far more capable for multilingual customer support and automated conversational tasks.

Introducing FreJun Teler As A Multilingual Voice API Solution

FreJun Teler is a purpose-built voice infrastructure platform that enables businesses to power human-like multilingual voice interactions at scale. Teler handles the complex transport layer so teams can focus on building business logic and language models.

Key technical advantages of Teler include:

- Ultra-Low-Latency Real-Time Media Streaming for clear audio

- Model-Agnostic Integration, so teams can choose any LLM, STT, or TTS

- Context Stability across long conversations

- Developer-First SDKs for rapid implementation

- Support for Global Calling API, making international reach seamless

With these capabilities, engineering teams can connect their AI logic to live calls and deliver voice experiences that feel natural, responsive, and multilingual.

What Are The Business Benefits Of Implementing Multilingual Voice Agents?

Implementing multilingual voice automation offers businesses a range of measurable benefits. Beyond just handling calls, AI-powered voice agents improve efficiency, engagement, and revenue potential.

1. Enhanced Customer Experience

- Native-Language Interaction: Customers communicate in their preferred language, reducing friction.

- Consistency Across Channels: Same voice experience across inbound and outbound calls.

- Reduced Wait Times: Automated AI agents can handle multiple calls simultaneously.

2. Operational Efficiency

- Scalable Automation: Systems can handle peak call volumes without adding human agents.

- Improved First-Call Resolution: AI retrieves relevant data using RAG or tool integrations.

- Integration With CRM/Backend Systems: Automated updates reduce manual work and errors.

3. Revenue Growth

- Personalized Outreach: Voice agents tailor campaigns in the user’s language.

- Global Market Expansion: Companies can reach regions with diverse languages.

- Better Conversion Rates: Engaging customers in their own language builds trust, which tends to improve conversion.

4. Cost Reduction

- Fewer Human Agents Needed: AI handles routine inquiries.

- Reduced Training Overhead: Less need to hire and train multilingual call center staff for routine inquiries.

- Optimized Call Flows: Efficient routing reduces dropped calls and repeated contacts.

5. Actionable Insights

- Analytics & Reporting: Track call durations, sentiment, and engagement.

- Performance Monitoring: Identify bottlenecks in conversations.

- Language-Specific Metrics: Assess which languages drive better outcomes.

How Can Companies Implement Multilingual Voice Automation Successfully?

While the potential is significant, implementing multilingual voice agents requires careful planning. Below are technical and strategic best practices.

1. Choosing the Right AI Models

- Select LLMs or AI agents that support multilingual understanding.

- Pair with STT/TTS engines optimized per language.

- Ensure models can integrate seamlessly with your voice API.

2. Managing Conversational Context

- Maintain session-level state for multi-turn conversations.

- Implement RAG or tool calling to fetch real-time data.

- Use context-aware pipelines to reduce repetitive explanations to users.

3. Handling Latency

- Optimize audio streaming pipelines.

- Use low-latency real-time media transport, especially for long conversations.

- Prioritize parallel processing where possible to reduce bottlenecks.

4. Testing Across Languages

- Validate STT and TTS performance in multiple dialects and accents.

- Ensure responses maintain natural tone and intonation.

- Continuously measure speech recognition accuracy and TTS quality.
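Word error rate (WER) is the standard way to measure STT accuracy: the minimum number of word substitutions, deletions, and insertions needed to turn the engine's transcript into a reference transcript, divided by the number of reference words. A minimal sketch follows, run per language against a small test set. The transcripts are illustrative; in practice the hypotheses come from running your STT engine on recorded test calls, and the 15% threshold is an assumption. For languages written without spaces between words, such as Chinese or Japanese, character error rate is usually reported instead.

```python
from typing import Dict, List, Tuple


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref, hyp = reference.lower().split(), hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    prev = list(range(len(hyp) + 1))        # distances for an empty reference
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(prev[j] + 1,                # deletion
                          curr[j - 1] + 1,            # insertion
                          prev[j - 1] + (r != h))     # substitution or match
        prev = curr
    return prev[-1] / len(ref)


# (reference, STT hypothesis) pairs per language; illustrative data only.
TEST_SET: Dict[str, List[Tuple[str, str]]] = {
    "es": [("quiero cambiar mi cita", "quiero cambiar mi cita")],
    "en": [("i want to change my appointment", "i want to change my apartment")],
}
MAX_WER = 0.15  # assumed acceptance threshold

for lang, pairs in TEST_SET.items():
    avg = sum(word_error_rate(r, h) for r, h in pairs) / len(pairs)
    print(lang, round(avg, 3), "ok" if avg <= MAX_WER else "FAIL")
# es 0.0 ok
# en 0.167 FAIL
```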

5. Security and Compliance

- Ensure end-to-end encryption of audio and text data.

- Manage data privacy for customer interactions across regions.

- Comply with applicable regional and industry regulations (e.g., GDPR in the EU, HIPAA for US healthcare data).
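Encryption protects audio and transcripts in transit and at rest, but transcripts are also copied into logs, analytics, and LLM prompts. A common complementary measure is to redact obvious personal data before a transcript is stored. A minimal regex-based sketch follows; the patterns are illustrative assumptions that will miss many formats (and occasionally over-match), so production systems usually rely on dedicated, locale-aware PII detection.

```python
import re

# Order matters: card numbers are checked before the broader phone pattern.
PII_PATTERNS = [
    ("CARD", re.compile(r"\b\d(?:[ -]?\d){12,15}\b")),          # 13-16 digits
    ("EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")),
    ("PHONE", re.compile(r"\+?\d[\d ()-]{7,}\d")),
]


def redact(transcript: str) -> str:
    """Replace matched PII with a bracketed label before logging."""
    for label, pattern in PII_PATTERNS:
        transcript = pattern.sub(f"[{label}]", transcript)
    return transcript


print(redact("Mi tarjeta es 4111 1111 1111 1111 y mi correo ana@example.com"))
# Mi tarjeta es [CARD] y mi correo [EMAIL]
print(redact("Call me back at +34 612 345 678, please."))
# Call me back at [PHONE], please.
```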

What Are Some Real-World Use Cases For Multilingual Voice APIs?

Businesses can leverage multilingual voice APIs across various verticals. Here are practical examples.

1. Intelligent Inbound Support

- AI-powered receptionists that detect the caller's language.

- Multilingual IVRs that handle queries without human intervention.

- Seamless escalation to human agents when necessary.

2. Outbound Communication

- Lead qualification and appointment reminders in the customer’s native language.

- Surveys, billing notifications, and personalized campaigns.

- Automated multi-region outreach through a global calling API.

3. Enterprise Applications

- Banking: Multilingual fraud alerts, balance notifications, and loan application support.

- Healthcare: Appointment reminders, telemedicine guidance, and lab result explanations.

- E-Commerce: Order confirmations, delivery notifications, and upselling campaigns.

Why Do Traditional Telephony Platforms Fall Short?

While platforms like Vonage and Twilio offer reliable voice connectivity, they are limited when it comes to AI-powered multilingual interactions.

| Feature | Traditional Telephony | AI-Powered Voice API |
| --- | --- | --- |
| Speech Understanding | Minimal | Advanced, context-aware |
| Multilingual Support | Limited | Full multilingual voice automation |
| Real-Time Translation | Rare | Supported via real-time STT/TTS |
| AI Integration | Manual | Native integration with LLMs and RAG |
| Context Management | Basic call logs | Persistent session context |

This comparison illustrates why companies focused on scaling multilingual voice interactions require AI-integrated APIs instead of pure telephony solutions.

How FreJun Teler Enables Global Multilingual Voice Experiences

Teler differentiates itself by providing a developer-first platform designed for complex voice and AI interactions.

Technical Highlights

- Model-Agnostic Integration: Connect any LLM, AI agent, STT, or TTS engine.

- Global Calling API: Reach customers worldwide without managing telephony infrastructure.

- Real-Time Media Streaming: Ensures low-latency, natural-sounding conversations.

- Context Persistence: Maintains conversational history across multiple turns and languages.

- SDK Support: Fast implementation for web, mobile, or backend systems.

How It Works in Practice

- User speaks into a call.

- Teler streams audio in real time to the connected STT engine.

- LLM interprets the intent, optionally fetching additional data via RAG.

- TTS engine generates a natural voice response.

- Teler streams the synthesized response back to the caller with low latency.

By managing the infrastructure layer, Teler allows engineering teams to focus on AI logic, knowledge integration, and business-specific workflows.

What Are The Best Practices For Optimizing Multilingual Voice Automation?

To maximize performance and impact:

- Select the Right Combination of LLM + STT + TTS: Ensure each component supports target languages and accents.

- Prioritize Low Latency: Real-time streaming is crucial for smooth interactions.

- Test Across Scenarios: Include long conversations, multiple languages, and edge cases.

- Implement Context Management: Track dialogue history, user preferences, and conversation state.

- Monitor Performance Metrics: Measure recognition accuracy, response latency, and call success rates.

- Maintain Compliance: Protect audio and data privacy, especially for sensitive industries.

How Can Businesses Measure Success With Multilingual Voice Agents?

Key metrics to track:

- First-Call Resolution Rate: Are user queries solved without escalation?

- Customer Satisfaction: Collect feedback post-interaction.

- Call Duration: Ensure natural pacing without unnecessary delays.

- Conversion Rate for Outbound Campaigns: Measure effectiveness of multilingual outreach.

- Accuracy of STT/TTS Across Languages: Track recognition and voice quality.

- Latency Metrics: Track the delay from the moment the caller stops speaking to the start of the reply, and keep it below your target threshold.

By continuously measuring these, companies can optimize their AI pipelines and ensure high-quality multilingual interactions.
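For response latency, percentiles are usually more informative than averages, because callers notice the occasional slow turn that an average hides. A minimal sketch that computes p50 and p95 per language with the nearest-rank method; the samples and the 1,000 ms target are illustrative assumptions, and latency here means the time from the caller finishing speaking to the first audio of the reply.

```python
import math
from typing import Dict, List


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile, 0 < pct <= 100."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Per-turn response latency in milliseconds, grouped by language (illustrative).
latencies_ms: Dict[str, List[float]] = {
    "en": [620, 710, 680, 900, 950],
    "es": [700, 760, 820, 790, 2100],
}
TARGET_P95_MS = 1000  # assumed target

for lang, samples in latencies_ms.items():
    p50, p95 = percentile(samples, 50), percentile(samples, 95)
    status = "ok" if p95 <= TARGET_P95_MS else "over target"
    print(f"{lang}: p50={p50} ms, p95={p95} ms ({status})")
# en: p50=710 ms, p95=950 ms (ok)
# es: p50=790 ms, p95=2100 ms (over target)
```

With only a handful of turns, p95 is simply the slowest turn; the numbers become meaningful once each language has enough calls.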

Conclusion

Multilingual voice automation is no longer a future capability; it is a present-day business requirement. By combining Voice APIs with LLMs, STT, TTS, and contextual AI, companies can deliver real-time, natural conversations across languages without building telephony infrastructure from scratch. The result is faster support, wider market reach, improved customer experience, and lower operational cost.

FreJun Teler enables this by acting as the global voice infrastructure layer for AI-driven conversations. It allows teams to integrate any LLM or AI agent with real-time voice, maintain low latency, and support multilingual customer interactions at scale.

Schedule a demo with FreJun Teler to see how you can build production-ready multilingual voice agents faster and more reliably.

FAQs

- What is a voice API used for in businesses?

Voice APIs enable businesses to programmatically handle calls, automate conversations, and integrate AI-driven voice workflows at scale.

- How does multilingual voice automation work?

It combines speech recognition, AI language understanding, and speech synthesis to process and respond in multiple languages.

- Can voice APIs work with any LLM?

Yes, if the voice API is model-agnostic, it can integrate with any LLM or AI agent.

- Is real-time voice translation reliable for customer calls?

With low-latency streaming and accurate STT/TTS, real-time voice translation is reliable for many business scenarios.

- What industries benefit most from multilingual voice APIs?

Customer support, fintech, healthcare, logistics, e-commerce, and SaaS benefit heavily from multilingual voice automation.

- How does latency affect voice AI conversations?

High latency breaks conversational flow; low-latency streaming is critical for natural, human-like voice interactions.

- Do multilingual voice agents replace human agents completely?

No. They automate routine conversations and escalate complex issues to human agents when necessary.

- Is building multilingual voice agents expensive?

Using APIs reduces infrastructure cost, making multilingual voice automation more affordable and scalable.

- How is conversational context maintained across calls?

Context is managed through session state, memory handling, and optional RAG or backend integrations.

- Why choose a global calling API instead of local telephony providers?

Global calling APIs simplify international reach, compliance, and scalability without managing multiple carriers.