Voice automation is moving fast, and engineering teams now need infrastructure that can keep up with real-time expectations. Traditional telephony was never designed for millisecond-level audio streaming, yet modern AI systems require exactly that precision to deliver natural, interruption-friendly conversations. Before diving into how FreJun Teler achieves instant voice responses, it’s important to understand what actually happens between a live microphone and an AI-generated reply.

This blog walks you through that journey – how media streaming works, why low latency matters, and how Teler’s architecture supports any LLM, TTS, or STT engine to create reliable, real-time voice automation.

Why Are Instant Voice Responses Critical for Modern AI Applications?

In today’s fast-moving digital world, users expect answers – and they expect them fast. When a customer calls a support line, any delay feels awkward. When an AI voice agent takes more than a second to respond, it breaks the natural flow. That is why low-latency voice AI matters so much: it improves user experience, increases engagement, and drives business outcomes. ITU-T Recommendation G.114 advises keeping one-way (mouth-to-ear) delay below 150 ms for natural conversation; as delay rises from 150 ms toward 400 ms, users increasingly notice awkwardness and reduced conversational quality.

Moreover, in sectors like finance, healthcare, or logistics, instant voice replies can reduce friction, cut costs, and scale operations 24/7. By reducing response time, companies can lower their customer effort score, improve satisfaction, and even automate use cases such as reminders, qualification calls, or conversational IVR. All of this becomes possible only when the voice infrastructure supports real-time media streaming reliably and with ultra-low delay.

What Exactly Is Media Streaming in Real-Time Voice AI?

To understand how FreJun Teler powers instant voice responses, first we must define media streaming in the context of voice applications.

- Media streaming refers to the continuous transport of live audio frames between a live call leg and an application, often over WebSockets or other streaming protocols.

- Unlike batch-based upload (e.g., uploading a full WAV after the call), streaming delivers raw audio in real time, enabling immediate processing.

- In voice AI, we often use bidirectional streaming: audio comes from the caller to the application (inbound), and synthesized speech (from TTS) is sent back to the call (outbound). Twilio supports this model via their Media Streams product.

- Protocols commonly used: WebSocket for app-layer transport, and RTP / SRTP on the telephony leg. Telnyx, for instance, uses a WebSocket-based API to fork media and transmit audio in both directions.

- For encoding, common choices include G.711 μ-law (PCMU, the standard 8-bit telephony codec), Opus, and uncompressed linear PCM. These choices affect latency, bandwidth, and how easily the audio integrates with STT / TTS systems.

By using media streaming instead of periodic polling or file uploads, a voice system can react to user input almost instantly, without buffering large files or waiting for whole segments to finish.
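The codec choice matters because telephony legs usually carry G.711 μ-law (PCMU): one 8-bit companded sample every 1/8000 s, so a 20 ms frame is 160 bytes, whereas many STT engines expect 16-bit linear PCM (320 bytes for the same 20 ms). The sketch below converts between the two in pure Python using the classic G.711 reference algorithm (bias 0x84, clip level 32635). It is illustrative, not Teler's code; note that Python's built-in `audioop` module, which once offered this conversion, was removed in Python 3.13.

```python
import struct

BIAS = 0x84    # 132: added to the magnitude before the segment search (G.711 reference)
CLIP = 32635   # largest magnitude that still fits in 15 bits after biasing


def linear_to_ulaw(sample: int) -> int:
    """Encode one signed 16-bit PCM sample as an 8-bit G.711 mu-law byte."""
    sign = 0x80 if sample < 0 else 0x00
    magnitude = min(-sample if sample < 0 else sample, CLIP) + BIAS
    # Exponent = position of the highest set bit among bits 7..14, minus 7.
    exponent = 7
    mask = 0x4000
    while exponent > 0 and not (magnitude & mask):
        exponent -= 1
        mask >>= 1
    mantissa = (magnitude >> (exponent + 3)) & 0x0F
    # mu-law bytes are transmitted bit-inverted.
    return ~(sign | (exponent << 4) | mantissa) & 0xFF


def ulaw_to_linear(byte: int) -> int:
    """Decode one G.711 mu-law byte to a signed 16-bit PCM sample."""
    byte = ~byte & 0xFF
    sign = byte & 0x80
    exponent = (byte >> 4) & 0x07
    mantissa = byte & 0x0F
    magnitude = (((mantissa << 3) + BIAS) << exponent) - BIAS
    return -magnitude if sign else magnitude


def pcmu_to_pcm16(payload: bytes) -> bytes:
    """Convert a PCMU payload to little-endian 16-bit linear PCM (for STT)."""
    samples = [ulaw_to_linear(b) for b in payload]
    return struct.pack(f"<{len(samples)}h", *samples)


def pcm16_to_pcmu(pcm: bytes) -> bytes:
    """Convert little-endian 16-bit linear PCM (e.g. TTS output) to PCMU."""
    count = len(pcm) // 2
    samples = struct.unpack(f"<{count}h", pcm[: count * 2])
    return bytes(linear_to_ulaw(s) for s in samples)
```

For example, `pcmu_to_pcm16(bytes([0xFF]) * 160)` turns a 20 ms frame of μ-law silence into 320 bytes of zero-valued linear PCM.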

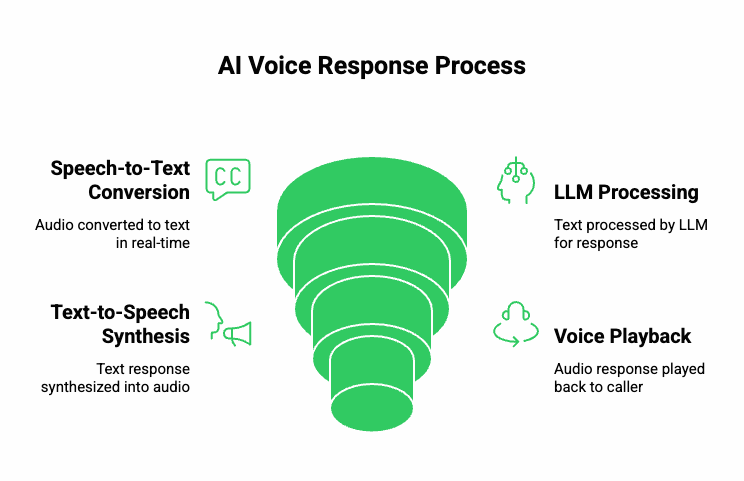

How Does a Voice AI Agent Work Behind the Scenes?

To see why FreJun Teler’s streaming architecture matters, it’s helpful to break down the AI voice response flow: how an LLM-based agent listens, thinks, and speaks, all over a live call.

Here is a simplified step-by-step picture of the typical voice agent stack:

- Real-time Speech-to-Text (STT)
  - As soon as the caller speaks, FreJun Teler captures the audio via media streaming and forwards it to a transcription engine.
  - The STT engine converts the audio into text in small chunks.


- LLM / Dialog Manager + Context Management
  - The transcribed text is sent to an LLM or conversational agent.
  - This agent may use retrieval-augmented generation (RAG), external tool-calling, or other logic to decide how to respond.
  - Crucially, the agent tracks the conversation state so it can maintain context across multiple turns.


- Text-to-Speech (TTS)
  - Once the LLM generates a response (in text), that text is passed to a TTS engine.
  - The TTS system produces audio, often in real time, by streaming synthesized speech in frames or chunks.


- Back to the Caller – Voice Playback
  - FreJun Teler receives the TTS audio frames and injects them into the live call, again via media streaming.
  - The caller hears the response as a natural, human-like voice with minimal delay.


In short, a voice agent built on FreJun Teler combines STT, an LLM, TTS, RAG, and tool-calling, all tied together by Teler’s real-time voice streaming layer.
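The four stages can be wired together as a small asynchronous pipeline. In this sketch the engines are stand-ins: `fake_stt`, `fake_llm`, and `fake_tts` are hypothetical placeholders, not Teler or vendor APIs; in a real deployment each would wrap your chosen provider's streaming client, and `outbound` would be the call's outbound media stream.

```python
import asyncio
from typing import AsyncIterator, List


async def fake_stt(frames: AsyncIterator[bytes]) -> AsyncIterator[str]:
    """Hypothetical STT stand-in: treats each inbound frame as one recognized word."""
    async for frame in frames:
        yield frame.decode()


async def fake_llm(transcript: str) -> AsyncIterator[str]:
    """Hypothetical LLM stand-in: streams a canned reply token by token."""
    for token in ["You", " said:", " ", transcript]:
        yield token


async def fake_tts(text: str) -> bytes:
    """Hypothetical TTS stand-in: 'synthesizes' text by encoding it to bytes."""
    return text.encode()


async def handle_turn(inbound: AsyncIterator[bytes], outbound: List[bytes]) -> None:
    """One conversational turn: STT -> LLM -> TTS -> playback."""
    # 1. STT: collect the caller's words (a real agent would act on partial results).
    words = [word async for word in fake_stt(inbound)]
    transcript = " ".join(words)
    # 2. LLM: stream the reply; 3. TTS: synthesize each chunk as it arrives.
    async for token in fake_llm(transcript):
        audio = await fake_tts(token)
        # 4. Playback: append to the outbound stream (stands in for sending to the call).
        outbound.append(audio)
```

Real agents overlap these stages, feeding partial transcripts to the LLM and starting TTS before the reply is complete; the sequential version simply makes the data flow explicit.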

Why Can’t Traditional Telephony Platforms Alone Deliver Low-Latency AI Voice?

Many telephony platforms – like Twilio, Telnyx, or Bandwidth – are designed first and foremost for voice calling, not AI orchestration. While they provide media streaming primitives, they do not inherently manage LLM logic, context retention, or model orchestration.

Because traditional telephony platforms are not built around the requirements of LLM-based agents, relying on them alone often leads to higher latency, increased development complexity, and fragile orchestration. That’s where FreJun Teler adds real value.

Where Does FreJun Teler Fit Into This AI Voice Pipeline?

FreJun Teler is the real-time voice infrastructure layer for AI-first applications. It is not just a voice-calling service – it is media streaming infrastructure architected for LLM-based voice agents.

Here’s what Teler provides at its core:

- Model-agnostic voice transport: You can plug in any LLM, STT, or TTS engine.

- Optimized streaming architecture: Teler’s streaming architecture minimizes delay, enabling fast media forwarding and efficient audio routing.

- Developer-first SDKs: With SDKs for your backend and front-end, you don’t have to build low-level media handling from scratch.

- Global footprint & edge points: Teler deploys media ingestion and routing close to major telephony regions, reducing first-mile latency.

- Enterprise-grade reliability: Built for failover, packet loss mitigation, and real-world scaling.

In brief, FreJun Teler bridges the gap between voice infrastructure and AI orchestration, giving engineering teams a reliable, high-performance foundation for building low-latency voice AI applications.


How Does FreJun Teler Stream Voice Input in Real Time With Sub-Second Latency?

Now that we have context, let’s dive deeper into how Teler’s media streaming architecture enables near-instant ingestion of caller audio.

- Call Initiation & Media Forking
  - When a call arrives (via SIP, PSTN, or VoIP), Teler’s edge nodes intercept the media stream.
  - These edge nodes replicate the audio into a WebSocket stream that is forwarded to the customer’s backend or AI orchestrator.
  - Forking ensures the original call remains intact while a parallel audio path supports real-time processing.


- WebSocket Transport Layer
  - The WebSocket connection remains open for the duration of the call. This persistent link avoids repeating connection setup and handshakes for every audio frame.
  - Incoming audio frames use a small packet size (for example, 20–40 ms frames) to reduce latency.
  - Each frame is encoded (e.g., PCM or Opus), base64-encoded, and then sent as a media message, much as Twilio Media Streams delivers audio.


- Buffering & Jitter Management
  - Teler applies an adaptive jitter buffer: it keeps just enough audio buffered to absorb small network jitter, but not so much that it introduces noticeable delay.
  - This balance ensures that occasional network spikes don’t cause audible dropouts, while delay stays below the threshold for natural conversation.


- Codec Handling
  - On the call side, Teler handles common telephony codecs (e.g., G.711 μ-law, also known as PCMU) and can transcode if needed.
  - On the WebSocket side, audio can be streamed in a format optimized for AI (for instance, 16-bit linear PCM). This reduces downstream overhead in STT systems and avoids unnecessary transcoding.
  - Choosing the right codec for each leg keeps the streaming path efficient and tuned for voice-AI agents.


- Metadata & Control Events
  - Alongside audio frames, Teler embeds metadata in WebSocket messages (e.g., sequence numbers, timestamps) to maintain stream order and timing.
  - It also supports control events (such as connected, start, and stop), similar to other media streaming APIs. Twilio, for example, sends a “connected” message when the WebSocket is established.
  - These events let the backend know exactly when media started, which format is in use, and when the caller hangs up.

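To illustrate how sequence numbers and control events are typically consumed, here is a backend-side sketch. The message shape (`event`, `sequenceNumber`, `media.payload`, `start.mediaFormat.encoding`) is modeled loosely on Twilio Media Streams and is an assumption, not Teler's documented schema; the sketch also assumes media frames are numbered from 1. Frames that arrive early are held until the gap before them is filled.

```python
import base64
import json
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class StreamState:
    """Backend-side view of one media stream (hypothetical message schema)."""
    started: bool = False
    stopped: bool = False
    encoding: Optional[str] = None
    next_seq: int = 1                                          # assumes numbering starts at 1
    pending: Dict[int, bytes] = field(default_factory=dict)    # frames that arrived early
    audio: List[bytes] = field(default_factory=list)           # frames released in order


def handle_message(state: StreamState, raw: str) -> None:
    """Apply one JSON WebSocket message to the stream state."""
    msg = json.loads(raw)
    event = msg.get("event")
    if event == "start":
        state.started = True
        state.encoding = msg["start"]["mediaFormat"]["encoding"]
    elif event == "media":
        seq = int(msg["sequenceNumber"])
        state.pending[seq] = base64.b64decode(msg["media"]["payload"])
        # Release frames strictly in sequence order; hold any that arrive early.
        while state.next_seq in state.pending:
            state.audio.append(state.pending.pop(state.next_seq))
            state.next_seq += 1
    elif event == "stop":
        state.stopped = True
    # "connected" and unrecognized events need no state change in this sketch.
```

A production handler would also stop waiting for a missing frame after a short timeout (the jitter buffer's job) rather than holding later frames indefinitely.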

How Does FreJun Teler Handle AI Processing and Retain Conversation Context?

Once the inbound audio is streaming reliably, the next step is processing. Here’s how FreJun Teler supports that:

- Token-by-token Transcription
  - As Teler forwards audio, your STT system (e.g., Google Speech, Deepgram, or open-source ASR) transcribes small frames in near real time.
  - The transcription engine may return partial transcripts (interim results) to feed into the LLM as the user speaks.


- Streaming LLM / Dialog Manager
  - Using a model-agnostic approach, you can connect any LLM (OpenAI, Anthropic, or your own).
  - The LLM receives live text input, either partial or complete transcripts, and can stream its reply back token by token.
  - Working from partial transcripts also allows speculative generation, where the agent starts preparing a response before the caller finishes, which reduces the time between user speech and the response decision.


- Context & RAG / Tool Calls
  - Teler is designed to let your backend orchestrator manage full conversational state. It does not impose a specific memory architecture.
  - You can call external knowledge sources (RAG) or use tool-calling for API access (e.g., CRM lookups, calendar scheduling, DB queries).
  - This flexibility means your conversational agent is not limited by Teler, but empowered by it.


- Audio Feedback Loop Setup
  - Once the LLM produces a text response, it is sent to a TTS engine.
  - TTS may run on your own engine or on a third-party service, depending on your cost and quality requirements.
  - Importantly, Teler accepts streamed TTS audio, so your TTS output can be piped back in real time rather than delivered as a complete file.

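One common way to keep this feedback loop fast is to hand text to TTS in sentence-sized pieces as LLM tokens stream in, instead of waiting for the whole reply. A minimal sketch follows; the boundary rule (split after `.`, `?`, or `!` followed by whitespace) is a simplifying assumption, and abbreviations such as "Dr." would need more care.

```python
import re
from typing import Iterable, Iterator

# A sentence ends at ., ? or ! followed by whitespace (simplifying assumption).
_BOUNDARY = re.compile(r"(?<=[.?!])\s+")


def sentence_chunks(tokens: Iterable[str]) -> Iterator[str]:
    """Group streamed LLM tokens into sentences that can be sent to TTS early."""
    buffer = ""
    for token in tokens:
        buffer += token
        parts = _BOUNDARY.split(buffer)
        # Every part except the last is a complete sentence.
        for sentence in parts[:-1]:
            if sentence.strip():
                yield sentence.strip()
        buffer = parts[-1]
    if buffer.strip():
        yield buffer.strip()  # flush whatever remains when the stream ends
```

For example, with the tokens `"Sure", ".", " Your", " table", ...` the generator yields `"Sure."` as soon as the token after the period arrives, so TTS can start speaking while the LLM is still generating.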

How Does FreJun Teler Deliver Outbound Audio to the Caller With Minimal Delay?

After the LLM decides the next response, the system must turn that text into speech and play it back without noticeable delay. This section explains how FreJun Teler manages this path.

1. TTS Stream Reception

Most modern TTS engines support two modes:

- Batch TTS – send full text, receive full WAV/MP3

- Streaming TTS – send text gradually and receive audio frames continuously

For instant replies, streaming is essential. FreJun Teler’s architecture is built to accept real-time TTS audio frames and inject them into the live call flow without buffering large segments.

2. Frame-Level Playback

Instead of waiting for full sentences, Teler processes TTS audio in frames such as:

- 20 ms

- 40 ms

- 60 ms

This reduces perceived delay, allowing the caller to hear the beginning of the sentence while the rest is still being generated.
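Frame size in bytes follows directly from the audio format: sample rate × frame duration × bytes per sample. For 8 kHz μ-law (1 byte per sample) a 20 ms frame is 8000 × 0.020 × 1 = 160 bytes; for 16 kHz, 16-bit PCM it is 640 bytes. A small helper that slices a TTS buffer into fixed-duration frames might look like this (the function names are illustrative, not part of any Teler SDK):

```python
from typing import Iterator


def frame_bytes(sample_rate: int, frame_ms: int, bytes_per_sample: int) -> int:
    """Bytes per frame: samples in the frame times bytes per sample."""
    return sample_rate * frame_ms // 1000 * bytes_per_sample


def split_frames(audio: bytes, sample_rate: int = 8000, frame_ms: int = 20,
                 bytes_per_sample: int = 1, pad: bytes = b"\xff") -> Iterator[bytes]:
    """Yield fixed-duration frames, padding the final partial frame.

    The default pad byte 0xFF is silence in G.711 mu-law; pass a zero byte
    for linear PCM.
    """
    size = frame_bytes(sample_rate, frame_ms, bytes_per_sample)
    for start in range(0, len(audio), size):
        frame = audio[start:start + size]
        yield frame + pad * (size - len(frame))
```

Larger frames mean fewer messages but add their full duration to the delay before the first audio is sent, which is why 20 ms is a common default.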

3. Outbound Media Injection

Once Teler receives a frame, it immediately:

- Normalizes the format (e.g., 16-bit PCM at 8 kHz → G.711 μ-law)

- Places the frame into the RTP stream

- Transmits the audio packet to the caller

Because this pipeline is optimized for streaming, latency remains extremely low, supporting natural turn-taking in AI conversations.
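These steps map onto a standard RTP packet: a 12-byte header (RFC 3550) followed by the encoded payload. The sketch below assumes the frames are already G.711 μ-law and uses PCMU's static payload type 0 from RFC 3551. It shows the packet layout only; it is not Teler's internal implementation, and it omits SRTP encryption and the actual network send.

```python
import struct
from typing import Iterable, Iterator

PCMU_PAYLOAD_TYPE = 0  # static RTP payload type for PCMU (RFC 3551)


def rtp_packets(frames: Iterable[bytes], ssrc: int, seq: int = 0,
                timestamp: int = 0) -> Iterator[bytes]:
    """Wrap PCMU frames in minimal RTP headers (RFC 3550, no CSRCs or extensions)."""
    for i, payload in enumerate(frames):
        marker = 0x80 if i == 0 else 0x00   # mark the first packet of a talkspurt
        header = struct.pack(
            "!BBHII",
            0x80,                       # version 2, no padding, no extension, CC=0
            marker | PCMU_PAYLOAD_TYPE,
            seq & 0xFFFF,               # sequence number wraps at 16 bits
            timestamp & 0xFFFFFFFF,     # timestamp wraps at 32 bits
            ssrc,
        )
        yield header + payload
        seq += 1
        timestamp += len(payload)       # PCMU: one byte per sample, so +160 per 20 ms
```

The timestamp advances in sample units (160 per 20 ms frame at 8 kHz), which is what lets the receiver's jitter buffer schedule playback independently of when packets arrive.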

How Does Teler Maintain Natural Turn-Taking Without Cutting the Caller Off?

One of the hardest engineering problems in voice AI is barge-in – the ability for the caller to interrupt the AI, and for the AI to stop speaking instantly. If barge-in does not work well, the experience feels robotic.

FreJun Teler uses a combination of techniques to support natural interruption:

1. Continuous Inbound Audio Listening

Even while sending outbound audio, Teler keeps the inbound media stream open. This allows it to detect:

- sudden rise in RMS energy

- voice frequency patterns

- speech onset

- word boundaries

2. Playback Suspension Mechanism

When the system detects that the caller has started speaking:

- the AI orchestrator receives an event

- it immediately stops the TTS stream

- Teler halts outbound RTP packets

- the call audio “switches direction” back to the caller’s voice

This ensures callers can jump into the conversation fluidly, just like a real human call.
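The energy cue from the detection list can be sketched as a simple onset detector: compute the RMS level of each inbound 16-bit PCM frame and declare speech only after a few consecutive loud frames. The threshold (500) and the three-frame requirement are illustrative assumptions, not Teler parameters; production systems typically use a trained voice-activity detector and cancel the echo of their own playback first. When the detector fires, the orchestrator would stop the TTS stream as described above.

```python
import math
import struct
from typing import Iterable, Optional


def rms(frame: bytes) -> float:
    """Root-mean-square level of a little-endian 16-bit PCM frame."""
    count = len(frame) // 2
    if count == 0:
        return 0.0
    samples = struct.unpack(f"<{count}h", frame[: count * 2])
    return math.sqrt(sum(s * s for s in samples) / count)


def detect_barge_in(frames: Iterable[bytes], threshold: float = 500.0,
                    min_frames: int = 3) -> Optional[int]:
    """Return the index of the frame at which caller speech onset is confirmed.

    Requiring several consecutive frames above the threshold avoids stopping
    playback on a single click or burst of line noise.
    """
    run = 0
    for i, frame in enumerate(frames):
        run = run + 1 if rms(frame) > threshold else 0
        if run >= min_frames:
            return i
    return None
```

With 20 ms frames, a three-frame requirement adds about 60 ms before playback is halted, which is the trade-off between responsiveness and false interruptions.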

3. Low Buffer Design

Traditional IVR systems introduce heavy jitter buffers that make interruption feel slow.

Teler’s lightweight buffer allows:

- sub-200 ms reaction time

- faster backpressure handling

- smoother conversational flow

This is a core requirement for low-latency voice AI, and a key part of Teler’s real-time voice streaming architecture.

How Does Teler Scale Media Streaming Across Global Regions?

As enterprises adopt AI voice agents for sales, support, and operations, a platform must scale across large call volumes. FreJun Teler’s architecture is designed with this requirement in mind.

Edge Ingestion

Teler uses localized media nodes that capture RTP audio near the caller’s region. This reduces first-mile latency and improves call quality.

Distributed WebSocket Gateways

Media frames are routed through regional gateways before being forwarded to the customer’s backend. This reduces:

- packet loss

- jitter

- network hops

- cross-region delays

Autoscaling Workers

As concurrent calls increase, Teler automatically scales:

- media handlers

- WebSocket processors

- transcoding modules

- metadata routers

This prevents congestion and keeps real-time voice performance stable even at high load.

Multi-Codec Compatibility

Teler can stream a range of codecs, such as:

- G.711

- Opus

- Linear PCM

- GSM

Codec flexibility enables enterprises to optimize for bandwidth, quality, or cost depending on their deployment model.

How Does Teler Solve the Biggest Reliability Challenges in Voice-AI Streaming?

In any voice system, incoming audio quality depends on network conditions such as packet loss, jitter, and congestion. Teler addresses them with platform-level safeguards.

1. Packet Loss Recovery

Teler uses:

- concealment strategies

- forward error correction where applicable

- dynamic retransmission

- redundancy markers

This allows live conversations to continue even in imperfect network conditions.
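Of the techniques listed, concealment is the simplest to illustrate. This sketch assumes 20 ms frames of 16-bit PCM keyed by sequence number (320 bytes at 8 kHz); when a frame is missing it repeats the previous frame at half volume, so consecutive losses fade toward silence instead of producing gaps or clicks. Codecs such as Opus ship much better built-in concealment; this is only a minimal example of the idea, and it ignores sequence-number wraparound.

```python
import struct
from typing import Dict, List


def conceal_losses(received: Dict[int, bytes], first_seq: int, last_seq: int,
                   frame_len: int = 320, decay: float = 0.5) -> List[bytes]:
    """Rebuild a sequence of 16-bit PCM frames, filling gaps by repeating the
    previous frame at reduced volume (simple packet-loss concealment).

    Assumes every received frame is exactly `frame_len` bytes.
    """
    out: List[bytes] = []
    previous = bytes(frame_len)  # start from silence if the first frame is lost
    for seq in range(first_seq, last_seq + 1):
        frame = received.get(seq)
        if frame is None:
            count = frame_len // 2
            samples = struct.unpack(f"<{count}h", previous)
            frame = struct.pack(f"<{count}h", *(int(s * decay) for s in samples))
        out.append(frame)
        previous = frame  # repeated losses keep attenuating
    return out
```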

2. Adaptive Jitter Buffer

A fixed jitter buffer has to be sized for the worst network conditions, which adds delay even when the network is healthy. Teler therefore uses adaptive algorithms that adjust buffer size based on:

- observed jitter

- network congestion

- current packet arrival rate

As a result, the system stays both responsive and resilient.
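One standard way to drive such an adaptive buffer is the interarrival jitter estimator from RFC 3550 (section 6.4.1): for each packet, compare its transit time (arrival time minus media timestamp) with the previous packet's and update a running average with gain 1/16. The sketch below sizes the playout delay as a multiple of that estimate, clamped to a range; the multiplier of 3 and the 20–200 ms bounds are illustrative assumptions, not Teler settings.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AdaptiveJitterBuffer:
    """Estimates interarrival jitter (RFC 3550, section 6.4.1) and derives a
    playout delay from it. All times are in milliseconds.

    The multiplier and the delay bounds are illustrative assumptions.
    """
    multiplier: float = 3.0
    min_delay_ms: float = 20.0
    max_delay_ms: float = 200.0
    jitter_ms: float = 0.0
    _last_transit: Optional[float] = None

    def on_packet(self, sent_ms: float, arrived_ms: float) -> None:
        """Update the estimate from one packet's media timestamp and arrival time."""
        transit = arrived_ms - sent_ms  # clock offset cancels in the difference below
        if self._last_transit is not None:
            d = abs(transit - self._last_transit)
            # RFC 3550 running estimate: J += (|D| - J) / 16
            self.jitter_ms += (d - self.jitter_ms) / 16
        self._last_transit = transit

    @property
    def target_delay_ms(self) -> float:
        """Buffer enough audio to cover a few multiples of the current jitter."""
        return min(self.max_delay_ms,
                   max(self.min_delay_ms, self.multiplier * self.jitter_ms))
```

On a steady network the estimate decays toward zero and the buffer shrinks to its floor; when arrival times start to wobble, the target grows within a few dozen packets.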

3. Real-Time Monitoring

Teler continuously monitors:

- round-trip time

- frame arrival order

- codec mismatch

- jitter spikes

- silence duration

Alerts are generated automatically when any of these metrics exceeds its safe threshold.

4. Failover Routes

If a media node becomes unstable, Teler triggers:

- in-call rerouting

- gateway handover

- WebSocket reconnection with state retention

This allows the call to survive edge failures without restarting the session.
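On the application side, the complementary pattern is to keep per-call state outside the WebSocket object and retry the connection with capped exponential backoff. This sketch assumes a hypothetical `connect` coroutine that you supply (for example, one that opens a new socket and reports `last_seq` so streaming can resume); `SessionState`, `backoff_delays`, and `reconnect` are illustrative names, not Teler APIs.

```python
import asyncio
import random
from dataclasses import dataclass
from typing import Awaitable, Callable, Iterator, Optional


@dataclass
class SessionState:
    """Per-call state kept outside the socket so it survives reconnects."""
    call_id: str
    last_seq: int = 0  # last media frame the backend processed


def backoff_delays(base_s: float = 0.1, cap_s: float = 2.0, attempts: int = 5,
                   rng: Optional[random.Random] = None) -> Iterator[float]:
    """Capped exponential backoff with full jitter: wait ~ U(0, min(cap, base * 2**n))."""
    rng = rng or random.Random()
    for n in range(attempts):
        yield rng.uniform(0, min(cap_s, base_s * 2 ** n))


async def reconnect(connect: Callable[[SessionState], Awaitable[bool]],
                    state: SessionState, **backoff) -> bool:
    """Retry the caller-supplied `connect` coroutine until it succeeds or attempts run out."""
    for delay in backoff_delays(**backoff):
        if await connect(state):
            return True
        await asyncio.sleep(delay)
    return False
```

Randomizing the wait ("full jitter") keeps many calls that lost the same gateway from reconnecting in lockstep.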

How Does FreJun Teler Keep Conversations Secure and Policy-Compliant?

AI voice interactions often handle sensitive data. Teler includes several safeguards.

Secure Transport

- SRTP for call audio

- TLS for WebSocket streaming

- Hardened endpoints for orchestrator callbacks

Authentication

- role-based access

- signed streaming requests

- private WebSocket URLs
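Signed requests and private URLs are commonly implemented with an HMAC over the request parameters plus an expiry time. The scheme below (query parameters `call_id`, `expires`, `sig`) is a hypothetical illustration of that pattern, not Teler's documented format; it uses Python's standard `hmac` module and a constant-time comparison.

```python
import hashlib
import hmac
import time
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlsplit


def _signature(secret: bytes, call_id: str, expires: int) -> str:
    """HMAC-SHA256 over the call ID and expiry (hypothetical signing string)."""
    return hmac.new(secret, f"{call_id}:{expires}".encode(), hashlib.sha256).hexdigest()


def sign_stream_url(base_url: str, call_id: str, secret: bytes, expires: int) -> str:
    """Append the call ID, an expiry (Unix seconds), and a signature to a WebSocket URL."""
    sig = _signature(secret, call_id, expires)
    return f"{base_url}?{urlencode({'call_id': call_id, 'expires': expires, 'sig': sig})}"


def verify_stream_url(url: str, secret: bytes, now: Optional[float] = None) -> bool:
    """Reject URLs that are unsigned, expired, or tampered with."""
    params = {k: v[0] for k, v in parse_qs(urlsplit(url).query).items()}
    try:
        call_id, expires, sig = params["call_id"], int(params["expires"]), params["sig"]
    except (KeyError, ValueError):
        return False
    if (time.time() if now is None else now) > expires:
        return False
    # compare_digest avoids leaking how many characters matched via timing.
    return hmac.compare_digest(_signature(secret, call_id, expires), sig)
```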

Data Handling Controls

Enterprises can choose retention settings such as:

- do not store media frames

- store metadata only

- encrypted conversational logs

Furthermore, Teler does not enforce vendor lock-in for compliance workflows – customers can run their own ASR/LLM/TTS pipelines inside private VPCs.

How Does FreJun Teler Compare With Telephony-First Platforms?

Although Twilio, Telnyx, and Bandwidth offer strong media streaming features, their core DNA is still classic telephony, not AI orchestration.

Teler, however, is voice infrastructure built for AI-first pipelines.

| Capability | Telephony Platforms | FreJun Teler |
| --- | --- | --- |
| Primary focus | Calling & connectivity | AI voice responsiveness |
| Media streaming | Yes | Yes, optimized for LLM loops |
| Context/state support | Manual setup | Built-in support for AI workflows |
| Barge-in | App-dependent | Native |
| Real-time TTS injection | Limited | Core |
| LLM-agnostic | Yes | Yes (model-agnostic by design) |
| End-to-end voice agent flow | Not native | Native |

This makes Teler ideal for teams building real-time AI agents, not just telephony applications.

What AI Architectures Can You Connect With Teler?

Because FreJun Teler is model-agnostic, you can plug in any AI stack:

LLMs

- OpenAI

- Anthropic

- Llama

- Mistral

- Gemini

- Custom on-prem transformers

STT Engines

- Deepgram

- Google Cloud

- Whisper

- AssemblyAI

TTS Engines

- ElevenLabs

- Azure TTS

- Google TTS

- Open-source TTS models

Tool-Calling

Teler does not dictate the orchestration method. You can integrate:

- CRM lookups

- RAG pipelines

- customer databases

- scheduling or booking APIs

- payment processors

- logistics systems

This flexibility allows engineering teams to deploy any conversational logic without limitations.

What Practical Use Cases Can Teler’s Media Streaming Unlock?

FreJun Teler enables a wide range of applications that depend on instant voice responses:

- AI receptionists

- smart IVR

- sales qualification bots

- logistics delivery confirmation agents

- appointment scheduling bots

- payment reminders

- lead verification calls

- customer satisfaction surveys

- internal support agents

- voice-enabled task automation

Each of these requires sub-second speech processing and accurate turn-taking, which is exactly what Teler’s streaming architecture enables.

Final Takeaway: Why FreJun Teler Matters for the Future of Voice AI

As organizations shift toward AI-first operations, the expectations from automated voice interactions continue to rise. Users now want immediate responses, consistent clarity, and conversations that feel naturally paced. Engineering teams, however, know that delivering this requires more than a powerful LLM – it demands a reliable media streaming backbone that keeps latency predictable, maintains conversational context, and integrates seamlessly with STT, TTS, and custom AI logic.

FreJun Teler provides that foundation. Its optimized streaming architecture, distributed edge network, and developer-ready APIs simplify everything between the phone call and your AI engine. As a result, companies can deploy real-time voice agents that respond instantly, scale confidently, and operate with enterprise-grade reliability.

Ready to build real-time voice AI?

Schedule a demo with Teler

FAQs

1. How does media streaming reduce latency in AI voice agents?

It sends audio packets instantly over WebSockets instead of waiting for call completion events, enabling faster recognition and response generation.

2. Can I use any LLM with FreJun Teler?

Yes. Teler is model-agnostic and allows integrating any LLM, custom model, or enterprise AI stack through simple streaming APIs.

3. Does streaming require specialized telephony hardware?

No. Everything runs over cloud telephony or VoIP networks, so engineering teams integrate using APIs instead of maintaining physical systems.

4. What makes low-latency streaming crucial for voice automation?

It prevents unnatural pauses, ensures faster turn-taking, and keeps the interaction aligned with real human conversation pacing.

5. Does Teler support full-duplex or interruption-friendly speech?

Yes. Teler’s streaming layer enables bidirectional, real-time audio flow so AI agents can detect interruptions and respond instantly.

6. How does FreJun Teler ensure call reliability at scale?

It uses distributed edge locations, load-balanced media servers, and automatic failover to keep calls stable during traffic spikes.

7. Can I plug in my existing STT and TTS engines?

Yes. Teler supports BYO-STT/TTS so teams can choose any provider or switch models without changing call infrastructure.

8. What is the typical latency budget for real-time voice AI?

Most systems aim for under 300 ms end-to-end to maintain natural conversational flow without noticeable delay.

9. How secure is Teler’s streaming architecture?

All streams run over encrypted transport with strict authentication, ensuring audio and metadata remain protected during every session.

10. Is Teler suitable for both inbound and outbound AI calls?

Yes. The platform handles both directions, providing the same low-latency streaming pipeline across all call scenarios.