Just a few years ago, the process of building voice bots was a niche discipline, a specialized domain reserved for a small group of telecom engineers and PhDs in speech recognition. A developer who could build a web application was a world away from building a conversational voice agent. Today, that world is changing at a breathtaking pace.

The rise of powerful APIs, sophisticated AI models, and developer-first infrastructure platforms has democratized the world of voice. The good news is that you no longer need to be a telecom expert to build a powerful voice bot. The challenging news is that you need to be a much more versatile and well-rounded engineer.

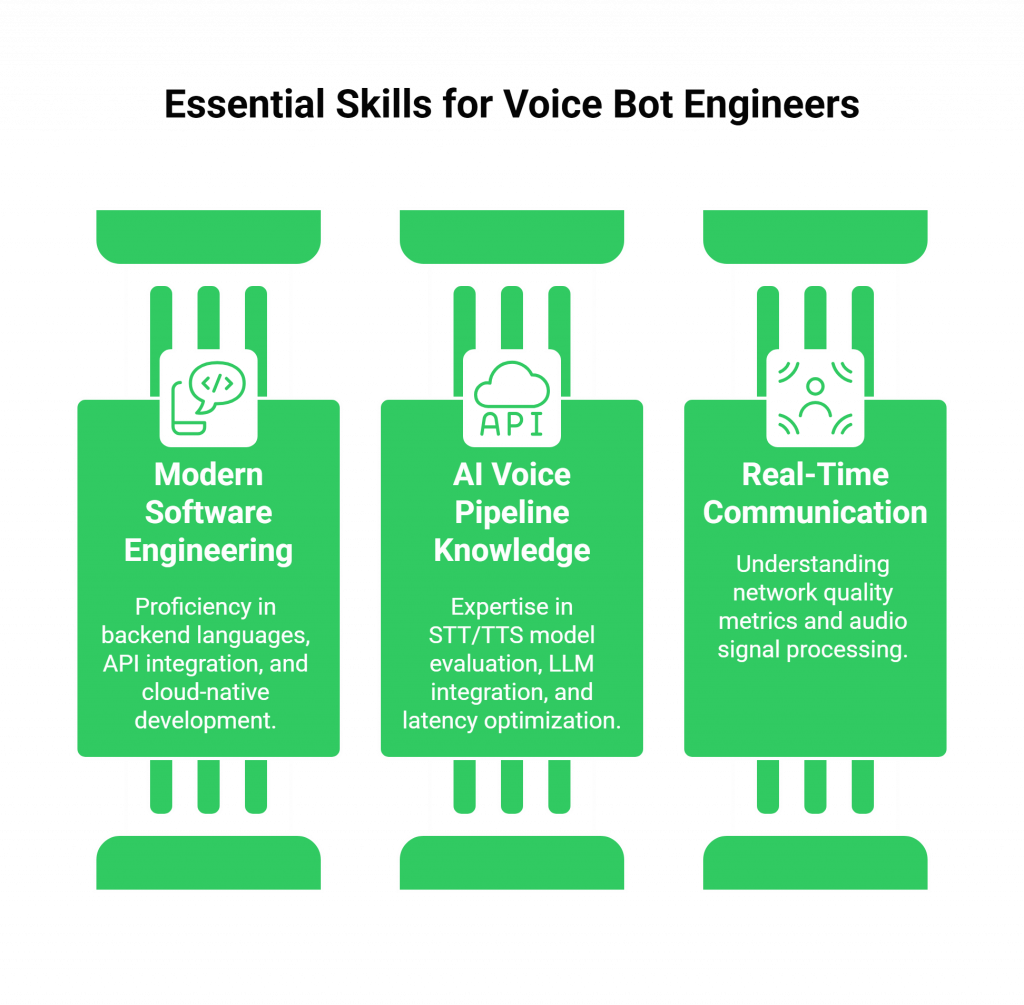

As we look toward 2026, the demand for engineers who can build intelligent, reliable, and natural-sounding voice experiences is set to explode. But the skillset required is a unique and fascinating hybrid. It is a blend of modern software engineering, a deep understanding of the AI/ML pipeline, and a foundational knowledge of the physics and protocols of real-time communication.

The engineers who will lead this revolution will not be siloed specialists; they will be full-stack conversational AI developers. This guide will break down the essential skills that engineers need to master before they start building voice bots in the new era of voice.

The Old World vs. The New World of Voice Development

To understand the skills of the future, we must first appreciate how the job has changed.

The Old World (Pre-2022)

- The Focus: The primary challenge was just making the basic mechanics of speech recognition and telephony work.

- The Tools: This was the world of complex, on-premise IVR platforms, rigid state machines, and a deep, unavoidable dive into telephony engineering fundamentals like SIP and RTP. The AI was often a simple, intent-based NLU that was brittle and difficult to train.

- The Skillset: The ideal developer was a telecom specialist who had learned a bit of scripting.

The New World (2026 and Beyond)

- The Focus: The basic mechanics of telephony have been abstracted away by modern platforms. The new challenge is to build a seamless, low-latency, and incredibly intelligent conversational experience. The focus has shifted from “can we make it work?” to “can we make it feel human?”

- The Tools: This is the world of developer-first voice APIs, serverless functions, and, most importantly, powerful, general-purpose Large Language Models (LLMs).

- The Skillset: The ideal developer is a strong, full-stack software engineer who has a deep appreciation for the unique challenges of real-time AI and is willing to learn the fundamentals of the voice medium.

What Are the Essential, Must-Have Skills for 2026?

The modern voice bot developer is a “T-shaped” individual: they have deep expertise in one area (like backend development or machine learning) and a broad, functional knowledge across the entire stack.

This table provides a high-level overview of the key skill domains.

| Skill Domain | Core Competency | Why It’s Essential for Building Voice Bots |
| --- | --- | --- |
| Modern Software Engineering | Backend development, API integration, cloud-native architecture. | This is the foundational skill. You are building a real-time, scalable, and resilient software application. |
| The AI Voice Pipeline | A deep, practical understanding of the STT-LLM-TTS workflow. | You must be able to select, integrate, and optimize the different AI models that make up your bot’s “brain” and “senses.” |
| Real-Time Communication Fundamentals | A working knowledge of latency, jitter, packet loss, and audio codecs. | You are not just processing data; you are managing a live, real-time audio stream. Understanding the physics of voice is crucial for debugging and optimization. |
| Conversational Design & UX | The ability to think about the user experience of a conversation. | A technically perfect bot with a frustrating or unnatural conversational flow is a failed product. |

How Do These Skills Break Down in Detail?

Let’s do a deep dive into each of these domains to understand the specific, practical skills that an engineer will need.

Mastering Modern Software Engineering

This is the bedrock. You cannot build a great voice bot if you are not a great software engineer first.

- Strong Backend Skills: You will need to be proficient in a modern backend language like Python, Node.js, Go, or Java. You will be building the core application logic that orchestrates the entire conversation.

- Expertise in API Integration: A voice bot is a system of systems. You will be constantly making and receiving API calls to the voice platform, to your AI models, and to your internal business systems (like a CRM). A deep understanding of REST APIs, webhooks, and authentication is non-negotiable.

- Cloud-Native and Serverless Mindset: A voice bot that needs to handle a massive, unpredictable spike in calls is a perfect use case for a serverless architecture (like AWS Lambda or Google Cloud Functions). Understanding how to build and deploy scalable, event-driven applications in the cloud is a critical skill.
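To make the webhook and authentication point concrete, here is a minimal sketch of verifying that an incoming webhook really came from your voice platform. It assumes the platform signs the raw request body with HMAC-SHA256 and a shared secret, which is a common pattern but not something this article confirms for any specific provider. The function names and the example payload are hypothetical.

```python
import hashlib
import hmac


def sign_payload(secret: bytes, body: bytes) -> str:
    """Return the hex-encoded HMAC-SHA256 of the raw request body."""
    return hmac.new(secret, body, hashlib.sha256).hexdigest()


def is_valid_webhook(secret: bytes, body: bytes, received_signature: str) -> bool:
    """Check a received signature against the one we compute ourselves.

    Assumption: the sender uses the same scheme as sign_payload().
    compare_digest runs in constant time, which avoids leaking how many
    leading characters of the signature matched.
    """
    expected = sign_payload(secret, body)
    return hmac.compare_digest(expected.encode(), received_signature.encode())


if __name__ == "__main__":
    secret = b"example-shared-secret"        # hypothetical value
    body = b'{"event": "call.started"}'      # hypothetical payload
    signature = sign_payload(secret, body)   # what the sender would attach
    print(is_valid_webhook(secret, body, signature))
    print(is_valid_webhook(secret, body + b" ", signature))
```

Always verify against the raw bytes of the request, not a re-serialized copy of the parsed JSON, because re-serializing can change whitespace or key order and break the signature.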

Deep AI Voice Pipeline Knowledge

It is no longer enough to just call a black-box AI service. A great voice engineer needs a practical, hands-on understanding of the entire AI voice pipeline.

- STT/TTS Model Evaluation: Not all Speech-to-Text and Text-to-Speech models are created equal, so the ability to evaluate them is an essential skill. Can your STT model handle noisy backgrounds or different accents? Does your TTS model have a low “time to first byte” so the conversation feels snappy? You need to be able to benchmark and choose the right models for your specific use case.

- LLM Integration Skills: This is the most important new skill. You need to be an expert in “prompt engineering” to guide the LLM’s behavior. More importantly, you need to master integration techniques such as Retrieval-Augmented Generation (RAG), which connects the LLM to your company’s private knowledge bases so that answers stay grounded in real data and “hallucinations” are reduced.

- Latency Optimization: You need to understand the performance characteristics of each part of the pipeline and how they contribute to the overall latency of the conversation.
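One way to reason about pipeline latency is to write down a per-turn budget and see which stage dominates. The sketch below is only an illustration: the stage names, the example numbers, and the 800 ms target are assumptions chosen for the example, not measurements or recommendations from any platform.

```python
from dataclasses import asdict, dataclass


@dataclass
class TurnTimings:
    """Delays for one conversational turn, in milliseconds (hypothetical stages)."""
    stt_final_ms: float        # end of user speech -> final transcript
    llm_first_token_ms: float  # transcript sent -> first LLM token
    tts_first_byte_ms: float   # first text sent -> first audio byte
    network_ms: float          # transport overhead to and from the caller

    def total_ms(self) -> float:
        """Time from the user going silent to the bot's first audio."""
        return sum(asdict(self).values())

    def slowest_stage(self) -> str:
        """Name of the stage that contributes the most delay."""
        stages = asdict(self)
        return max(stages, key=stages.get)


def within_budget(timings: TurnTimings, budget_ms: float) -> bool:
    """True if the whole turn fits inside the chosen latency budget."""
    return timings.total_ms() <= budget_ms


if __name__ == "__main__":
    # Made-up numbers for illustration only.
    turn = TurnTimings(stt_final_ms=200, llm_first_token_ms=350,
                       tts_first_byte_ms=150, network_ms=80)
    print(turn.total_ms(), turn.slowest_stage(), within_budget(turn, 800))
```

Tracking the stages separately tells you where to spend optimization effort: shaving 50 ms off the slowest stage is usually easier than shaving it off one that is already fast.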

Understanding Real-Time Communication Fundamentals

You do not need to be a traditional telecom engineer, but you do need to understand the fundamental physics and protocols of a voice call.

- The Holy Trinity of Network Quality: You must understand what latency, jitter, and packet loss are, how to measure them, and how they impact the user’s audio quality.

- Audio Signal Processing Basics: A foundational knowledge of audio signal processing is a huge advantage. Understanding concepts like audio codecs (e.g., G.711 vs. Opus), sampling rates, and noise cancellation will help you make much more informed decisions when configuring your voice platform and debugging audio quality issues.

- Telephony Engineering Fundamentals (Lite): You do not need to be able to write a SIP parser from scratch. But you should understand the basic roles of SIP (for signaling) and RTP (for media) and be able to look at a high-level call log from a platform like FreJun AI and understand the lifecycle of a call. This level of telephony knowledge is essential for effective debugging.
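If you want to see how jitter and packet loss can be measured, the sketch below uses the running interarrival jitter estimate defined in the RTP specification (RFC 3550) and a simple loss ratio from sequence numbers. It is a simplified illustration: timestamps are plain milliseconds rather than RTP clock units, sequence-number wraparound is ignored, and the function names are hypothetical.

```python
from typing import Sequence


def interarrival_jitter(send_ms: Sequence[float], recv_ms: Sequence[float]) -> float:
    """Running jitter estimate in the style of RFC 3550.

    For each consecutive pair of packets, D is the change in transit time:
    (receive spacing) - (send spacing). The estimate moves 1/16 of the way
    toward |D| on every packet, which smooths out single spikes.
    """
    jitter = 0.0
    for i in range(1, min(len(send_ms), len(recv_ms))):
        d = (recv_ms[i] - recv_ms[i - 1]) - (send_ms[i] - send_ms[i - 1])
        jitter += (abs(d) - jitter) / 16.0
    return jitter


def packet_loss_ratio(received_seq: Sequence[int]) -> float:
    """Fraction of packets missing between the lowest and highest sequence seen.

    Simplification: assumes sequence numbers do not wrap around.
    """
    if not received_seq:
        return 0.0
    expected = max(received_seq) - min(received_seq) + 1
    return 1.0 - len(set(received_seq)) / expected


if __name__ == "__main__":
    send = [0, 20, 40, 60]          # packets sent every 20 ms
    recv = [50, 70, 95, 110]        # third packet arrives 5 ms late
    print(interarrival_jitter(send, recv))
    print(packet_loss_ratio([1, 2, 4, 5]))  # packet 3 never arrived
```

A steady one-way delay produces zero jitter here, which matches the intuition that it is the variation in arrival time, not the delay itself, that makes audio choppy.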

Ready to start building on a platform that abstracts away the hardest parts of telephony and lets you focus on your AI? Sign up for FreJun AI and explore our developer-first platform.

Conclusion

The era of the voice developer is here. The process of building voice bots in 2026 is set to be one of the most exciting and in-demand fields in software engineering. But it will require a new kind of engineer, a versatile “polyglot” who is as comfortable fine-tuning an LLM as they are debugging a network packet capture.

The journey to becoming a full-stack conversational AI developer is a challenging one, but for those who are willing to master this unique blend of skills, the opportunity to build the next generation of human-computer interaction is immense. The tools and the platforms are finally here. The only remaining question is: are you ready to build?

Want to get a head start on your learning journey? Schedule a demo for FreJun Teler!

Frequently Asked Questions (FAQs)

You do not need a traditional telecom background to build voice bots, and this is the key change. With a modern, developer-first voice platform like FreJun AI, a strong software engineer who understands APIs can get started. However, learning the telephony engineering fundamentals at a high level will make you a much more effective developer.

Alongside LLM integration, one of the most important new skills is a deep understanding of, and obsession with, latency. A voice conversation is a real-time, synchronous interaction, and every millisecond of delay matters. You must learn to design your entire application with the goal of minimizing this delay.

AI voice pipeline knowledge means understanding the end-to-end workflow that an AI uses to have a conversation. This includes Speech-to-Text (STT) to understand the user, a Large Language Model (LLM) to “think,” and Text-to-Speech (TTS) to respond.

LLM integration skills are critical because modern voice bots are moving away from simple, rigid, intent-based models to more powerful and flexible LLMs. An engineer needs to know how to effectively “prompt” these models and, more importantly, how to connect them to private data sources using techniques like Retrieval-Augmented Generation (RAG).

An STT (Speech-to-Text) model is the “ears” of the AI; it converts spoken audio into written text. A TTS (Text-to-Speech) model is the “mouth” of the AI; it converts written text into spoken audio. The ability to evaluate STT and TTS models and choose the right ones for your use case is a key skill.
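A common way to compare STT models is word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the model's transcript into a human reference, divided by the number of words in the reference. The sketch below is a minimal, dependency-free version. The lowercase-and-split normalization is a simplifying assumption, and the example sentences are made up.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance between two transcripts, divided by reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words (classic Levenshtein, one row at a time).
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1        # reference word missing from hypothesis
            insertion = curr[j - 1] + 1   # extra word in hypothesis
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    reference = "book a table for two"
    # One dropped word ("a") and one wrong word ("you"): 2 errors over 5 words.
    print(word_error_rate(reference, "book table for you"))
```

Running the same set of recorded calls, with their accents and background noise, through each candidate model and comparing WER gives you a like-for-like benchmark instead of relying on vendor claims.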

You do not need to be an audio engineer, but a basic understanding of audio signal processing, such as codecs (e.g., Opus vs. G.711) and the impact of packet loss, will help you debug audio quality issues much more effectively.

FreJun AI provides the foundational platform that abstracts away the most complex parts of the telephony layer. This allows a developer to focus on learning the AI and conversational design parts first. Our detailed logs, analytics, and documentation also provide a great learning tool for understanding the real-world mechanics of a voice call.

For building voice bots, it is currently more important to be a strong software engineer who is a proficient user of machine learning models. The job is primarily about integrating these models into a scalable and resilient real-time application.

Jitter is the variation in the arrival time of the audio packets over the network. High jitter is a major cause of choppy or garbled audio, and understanding how to diagnose it is a key part of troubleshooting.

The best place to start is by signing up for a developer account with a modern voice API provider like FreJun AI. The hands-on experience of making your first API-driven call and experimenting with the tools is the fastest and most effective way to learn.