For the past few years, we have been living in a world of silent AIs. Large Language Models (LLMs) have demonstrated an astonishing ability to understand, reason, and generate human-like text. They can write poetry, debug code, and summarize complex documents.

But for all their intelligence, they have been trapped behind a keyboard. That era is now definitively over. The next great frontier in artificial intelligence is the spoken word.

The future of voice AI is not about clunky, robotic phone menus; it is about fluid, natural, and deeply intelligent conversations. The technology that is finally breaking down the wall between the silent world of LLMs and the audible world of human conversation is the modern voice calling SDK.

We are witnessing more than an integration; we are witnessing a fusion, a new kind of application where the most powerful language models on the planet operate through the most universal and natural interface of all: a simple phone call.

A voice calling SDK is no longer just a tool for making and receiving calls. It is evolving into the essential, high-performance nervous system that connects the AI “brain” to the global voice network, and its role is becoming more critical and more sophisticated with each passing day.

This article will explore the voice SDK evolution and the indispensable role it will play in the coming era of LLM-powered calls.

Table of contents

Where We’ve Been: The Voice SDK as a Simple “Phone”

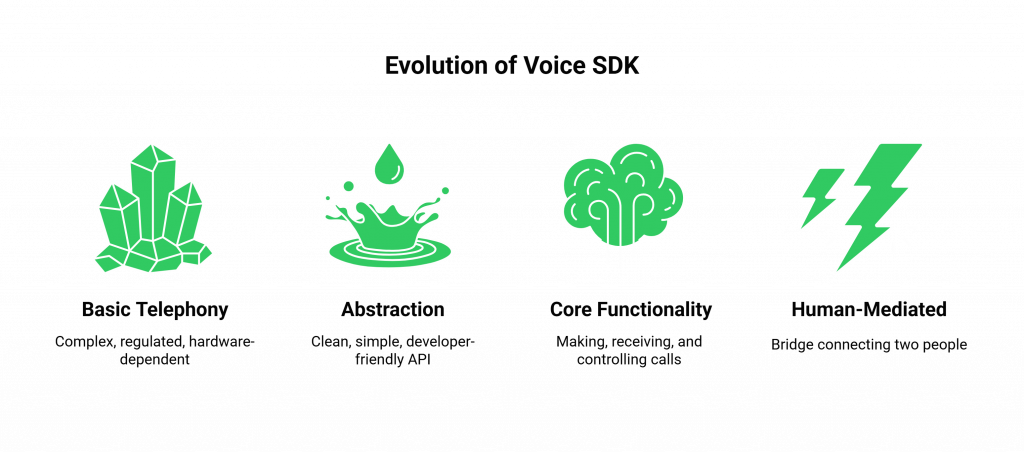

To understand where we are going, we must first appreciate where we have been. The first generation of the voice calling SDK was a revolution in its own right. It was a powerful tool that did for telephony what Stripe did for payments: it took an incredibly complex, regulated, and hardware-dependent industry and abstracted it away behind a clean, simple, developer-friendly API.

The primary job of this first-generation SDK was to solve the basic mechanics of a phone call:

- Making Calls: Programmatically initiate an outbound call.

- Receiving Calls: Be notified of and answer an inbound call.

- Basic In-Call Control: Perform simple actions like hold, mute, and transfer.

This was a massive leap forward. It allowed developers who were not telecom experts to embed basic voice functionality into their applications. But in this model, the “intelligence” was still entirely human. The SDK was simply a bridge to connect two people.

Where We Are Now: The Voice SDK as a Real-Time “Data Pipe”

The current, second generation of the voice calling SDK is defined by a single, game-changing capability: real-time media streaming. This is the feature that has enabled the first wave of truly interactive voice AI.

The Power of Programmable Media

A modern voice calling SDK from a provider like FreJun AI does not just connect a call; it gives the developer deep, programmatic access to the raw audio stream of the call in real time. This is the critical “input” that an AI needs to “hear.” The workflow it enables is the foundation of today’s voice AI:

- The SDK establishes a call.

- It uses a real-time media streaming API to fork the live audio and send it to a Speech-to-Text (STT) engine.

- The resulting text is sent to an LLM for processing.

- The LLM’s text response is sent to a Text-to-Speech (TTS) engine.

- The SDK is used to “inject” this synthesized audio response back into the live call.

This “data pipe” model is incredibly powerful, and it is the standard for the AI voice architecture 2025 landscape. A recent report from Gartner predicts that by 2026, conversational AI will have reduced contact center agent labor costs by $80 billion, a saving that is being driven almost entirely by this second-generation architecture.

Also Read: How FreJun Teler Delivers the Best Voice API Experience for Businesses?

Where We’re Going: The Voice SDK as an “Intelligent Co-Processor”

The future of voice AI is not just about making this data pipe faster; it is about making the pipe itself smarter. The third generation of the voice calling SDK is evolving from a passive conduit into an active, intelligent co-processor that works in tandem with the LLM. It is about offloading certain real-time, conversational tasks to the network edge, where the SDK lives, to create an even faster and more fluid experience.

The Evolution from “Dumb Pipe” to “Smart Endpoint”

The voice SDK evolution is about pushing more intelligence closer to the user. Here are some of the capabilities that will define the next generation of the voice calling SDK:

| Capability | What It Is | Why It Matters for LLM-Powered Calls |

| Edge-Based Barge-In and Interruption Detection | The SDK itself can detect the instant a user starts speaking, even while the AI is talking, and can immediately signal the AI to stop. | This is the key to natural turn-taking. It allows a user to interrupt the AI, making the conversation feel much less robotic and more like a real human dialogue. |

| Real-Time Sentiment and Tone Analysis | The SDK’s underlying platform can analyze the raw audio stream for emotional cues (frustration, happiness, etc.) in real-time. | It can provide this metadata to the LLM along with the text, giving the AI a much richer, more empathetic understanding of the user’s emotional state. |

| On-the-Fly TTS Switching | The SDK can allow for dynamic, real-time changes to the AI’s voice, perhaps switching to a more empathetic tone if it detects user frustration. | This allows the AI to modulate its response in a more human-like way, which is a critical part of advanced conversational design. |

| Intelligent Packet Loss Concealment (PLC) | The SDK can use AI-powered algorithms to predict and fill in small gaps in the audio stream caused by poor network conditions. | This dramatically improves the perceived audio quality and ensures that the STT engine receives a cleaner audio stream, which improves transcription accuracy. |

The AI voice architecture of 2025 will use a hybrid model. The powerful LLM in the cloud will handle the core reasoning, while the intelligent voice calling SDK at the edge will increasingly manage the split-second, real-time mechanics of the conversation.

Ready to build on an SDK that is designed for the future of voice AI? Sign up for FreJun AI.

Also Read: What Is Elastic SIP Trunking and How Does It Power Modern Voice AI?

How FreJun AI is Building the Future of the Voice SDK?

At FreJun AI, we build our entire architectural philosophy around this vision for the future of voice AI. Our Teler engine operates as a globally distributed, real-time media processing platform, and our voice calling SDK gives developers powerful access to it.

We focus on the two things that matter most for LLM-powered calls:

- Minimizing Latency: Our edge-native architecture is design from the ground up to shorten the distance that data has to travel, which is the key to a fast, responsive conversation. A recent industry benchmark on cloud services performance showed that edge computing can reduce network latency by as much as 75% compared to a centralized cloud model.

- Maximizing Developer Control: We believe developers will build the best voice experiences when they have granular, real-time control over every aspect of the call. Our Real-Time Media APIs and SDKs give them this level of power and flexibility.

We aren’t just providing a connection; we are providing the high-performance, intelligent conversational infrastructure that will power the next generation of voice AI.

Also Read: How Elastic SIP Trunking Works Behind Every AI Voice Conversation?

Conclusion

The fusion of Large Language Models and voice is set to be one of the most transformative technology trends of the coming decade. As we move from simple, command-based voice assistants to truly open-ended, conversational AI, the role of the underlying communication infrastructure becomes more critical than ever.

The voice calling SDK is at the very heart of this transformation. It is evolving from a simple tool for making phone calls into a sophisticated, intelligent co-processor that is essential for creating the seamless, low-latency, and natural-sounding LLM-powered calls that will define the future of human-to-machine interaction.

For developers and enterprises looking to lead in this new era, choosing a voice calling SDK is the most important decision they will make.

Want to get a technical deep dive into our real-time media APIs and discuss the future of AI voice architecture? Schedule a demo with our team at FreJun Teler.

Also Read: United Kingdom Country Code Explained

Frequently Asked Questions (FAQs)

A voice calling SDK is a set of software libraries and tools. It lets developers add voice calling features easily. They can make and receive calls inside web or mobile apps. It also helps them manage live conversations smoothly.

LLM-powered calls are phone calls where a user is having a conversation with an AI agent whose “brain” is a Large Language Model (LLM), like OpenAI’s GPT-4. This allows for very sophisticated, natural, and open-ended conversations.

The biggest technical challenge in the future of voice AI is reducing latency. The entire round-trip, from a user speaking to the AI responding, must happen in a fraction of a second to feel like a natural, real-time conversation.

The voice SDK evolution is moving from being a simple tool for connecting calls to a more intelligent platform. The future SDK will handle more real-time conversational mechanics like interruption and tone analysis, at the network edge.

The AI voice architecture of 2025 will likely use a hybrid model. A large cloud LLM will handle complex reasoning. Smaller edge models will manage fast, real-time processing. An intelligent voice calling SDK will keep conversations smooth and responsive. This setup brings processing closer to the user.

It is the ability for a user to interrupt the AI agent while it is speaking. This is a critical feature for a natural conversation, and a next-generation SDK can help manage this at a low level for faster response.

The world of AI is changing incredibly fast. A model-agnostic SDK like FreJun AI lets you always use best AI models from any provider, OpenAI, Google, Anthropic, and others.

FreJun AI is building the foundational, low-latency conversational infrastructure this future requires. Our Teler engine and voice calling SDK power the high-performance nervous system.

“The edge” refers to a network of smaller, globally distributed data centers that are physically closer to the end-users. Processing a call at the edge dramatically reduces the data travel time, which is the key to lowering latency.