For decades, the holy grail of automated customer service has been a simple, yet elusive, goal: to have a natural, intelligent, and genuinely helpful conversation with a machine. The clunky, frustrating “press one for sales” phone menus of the past were a constant reminder of how far we were from that goal. But today, we are at a profound inflection point.

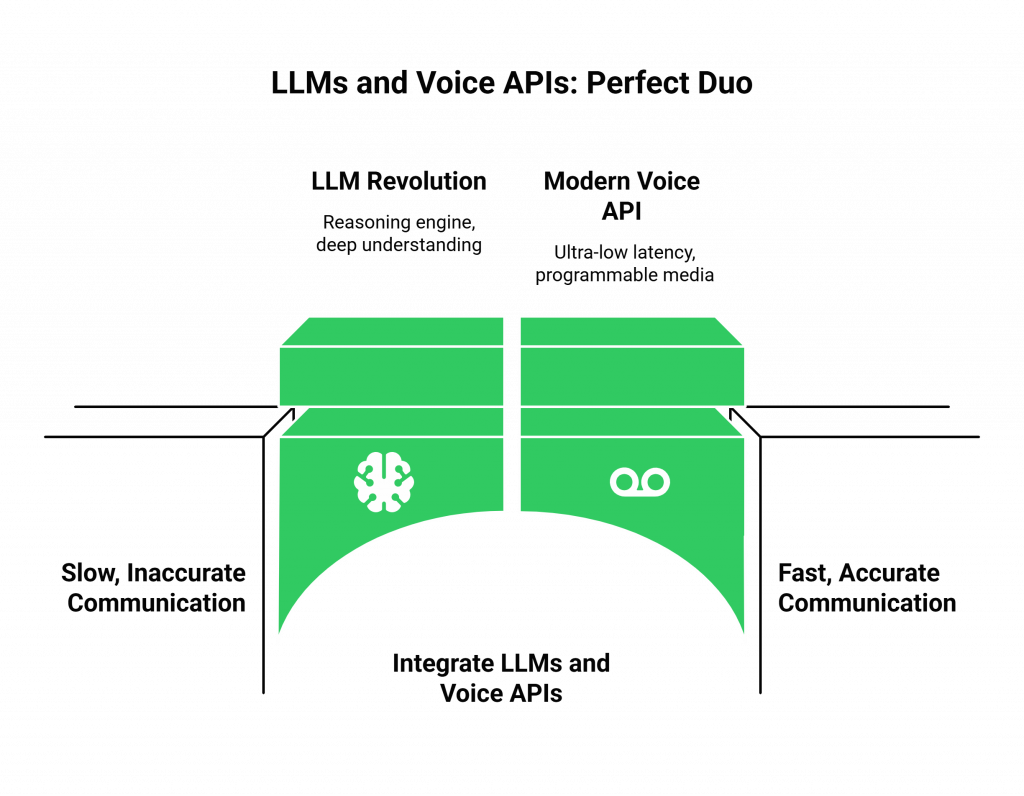

The convergence of two powerful technologies, the breathtaking intelligence of Large Language Models (LLMs) and the real-time, programmable power of modern voice APIs, is finally making that dream a reality. This is not just an incremental improvement; it is the dawn of a new era in business communication.

The combination of LLMs and voice APIs is creating a perfect duo, a synergy where the “brain” of the AI is finally being given a worthy “voice.” This is enabling the creation of LLM-driven voice bots that can do more than just follow a rigid script; they can understand context, show empathy, and solve complex problems in real time.

For businesses, this moment offers a transformative opportunity to reinvent their customer experience, and they can unlock that potential by choosing the best voice API, one architected for the unique demands of this new conversational world.


Why Was This So Difficult Before? The Missing Pieces of the Puzzle

To appreciate the power of this new duo, we must first understand why previous attempts at conversational AI so often fell short. The problem was twofold: the “brain” was not smart enough, and the “voice” was not fast enough.

The “Brain” Problem: The Limits of Pre-LLM AI

Before the rise of models like GPT-4, conversational AI was based on rigid, intent-based systems. Developers had to painstakingly map out every possible conversational path and manually program every response.

- Brittle and Inflexible: These systems would break the moment a user deviated from the expected script. They could not handle nuance, sarcasm, or complex, multi-part questions.

- Incapable of True Understanding: They were pattern-matching machines, not thinking machines. They could not reason, infer, or remember the context of a conversation from one turn to the next.
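To see why these systems were so brittle, consider a minimal sketch of a keyword-driven intent matcher. The intent names and trigger phrases below are hypothetical, chosen only for illustration, and real pre-LLM platforms were more elaborate, but the failure mode is the same.

```python
# Hypothetical intent table for a pre-LLM bot: an intent fires only when
# one of its hand-written trigger phrases appears in the utterance.
INTENT_KEYWORDS = {
    "check_balance": ["balance", "how much money"],
    "return_item": ["return", "refund"],
    "speak_to_agent": ["agent", "representative"],
}

FALLBACK = "fallback"


def match_intent(utterance: str) -> str:
    """Return the first intent whose trigger phrase occurs in the utterance."""
    text = utterance.lower()
    for intent, phrases in INTENT_KEYWORDS.items():
        if any(phrase in text for phrase in phrases):
            return intent
    return FALLBACK


if __name__ == "__main__":
    # On-script phrasing is recognized.
    print(match_intent("I want to return my order"))
    # The same need, phrased off-script, falls through to the fallback.
    print(match_intent("This sweater is the wrong size, can I send it back?"))
    # A two-part request collapses into whichever intent is checked first.
    print(match_intent("Refund the sweater and then tell me my balance"))
```

The second and third calls show the two weaknesses described above: unexpected wording is not understood at all, and a multi-part question loses one of its parts.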

The “Voice” Problem: The Latency of Traditional Telephony

Even if you had a brilliant AI, connecting it to a phone call in real time was a massive technical hurdle. Traditional telephony and early-stage voice APIs were not built for the speed that a natural conversation demands.

- High Latency: The round trip, from the user speaking, to processing the audio, to generating the AI’s response, to playing that response back, was often painfully slow. These long, awkward pauses of dead air were a giveaway that you were talking to a machine, and they made a fluid conversation impossible.

- Lack of Programmable Media: Early voice APIs were often just call control APIs. They could make a call or hang up a call, but they did not give developers the deep, real-time access to the raw audio stream needed to effectively power an AI.
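The round trip described above can be made concrete with a little arithmetic. The sketch below adds up per-stage delays for a pipeline in which every stage waits for the previous one to finish. All of the millisecond figures are illustrative assumptions, not measurements of any real system.

```python
from typing import Dict

# Illustrative per-stage latencies in milliseconds. These numbers are
# assumptions chosen for the example, not measurements.
SEQUENTIAL_PIPELINE_MS: Dict[str, int] = {
    "network_to_server": 150,
    "speech_to_text": 800,
    "response_generation": 1200,
    "text_to_speech": 700,
    "network_to_caller": 150,
}


def round_trip_ms(stages: Dict[str, int]) -> int:
    """Total delay when each stage runs only after the previous one ends."""
    return sum(stages.values())


def slowest_stage(stages: Dict[str, int]) -> str:
    """Name of the stage contributing the most delay."""
    return max(stages, key=stages.get)


if __name__ == "__main__":
    print(f"round trip: {round_trip_ms(SEQUENTIAL_PIPELINE_MS)} ms")
    print(f"slowest stage: {slowest_stage(SEQUENTIAL_PIPELINE_MS)}")
```

With these assumed figures the caller waits 3000 ms for a reply, which is the kind of dead air that makes a conversation feel mechanical. A budget like this also shows where to look first when trimming delay.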


The Perfect Duo: How LLMs and Modern Voice APIs Solve the Puzzle

The current revolution is happening because both of these problems have been solved simultaneously.

The LLM Revolution: A Brain That Can Think

Modern LLMs are a quantum leap forward. They are not just pattern-matchers; they are reasoning engines.

- Deep Contextual Understanding: They can understand the full context of a real-time AI conversation, remembering previous turns and grasping complex, nuanced language.

- Human-like Generative Power: They can generate responses that are not just accurate, but are also empathetic, creative, and perfectly tailored to the user’s tone and sentiment.

- Dynamic Problem-Solving: They can be given access to tools (like a company’s internal APIs) and can learn to use them to solve problems on the fly, just like a human agent would.
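Tool use is easier to picture with a small example. The sketch below assumes the model emits a tool request as a JSON string and shows how an application might execute it and record the result in the conversation history. The `get_order_status` function, its fake data, and the message format are all hypothetical; real LLM providers each define their own tool-calling format.

```python
import json
from typing import Callable, Dict, List


def get_order_status(order_id: str) -> dict:
    """Hypothetical internal API, backed here by hard-coded fake data."""
    fake_orders = {"A100": "shipped", "A200": "processing"}
    return {"order_id": order_id, "status": fake_orders.get(order_id, "unknown")}


# Registry of tools the model is allowed to request, keyed by name.
TOOLS: Dict[str, Callable[..., dict]] = {"get_order_status": get_order_status}


def run_tool_call(history: List[dict], tool_call_json: str) -> dict:
    """Execute a model-requested tool call and append the result to history."""
    call = json.loads(tool_call_json)
    name = call["name"]
    args = call.get("arguments", {})
    if name not in TOOLS:
        result = {"error": f"unknown tool: {name}"}
    else:
        result = TOOLS[name](**args)
    # Keeping the result in the history is what lets later turns refer to it.
    history.append({"role": "tool", "name": name, "content": json.dumps(result)})
    return result


if __name__ == "__main__":
    history = [{"role": "user", "content": "Where is order A100?"}]
    # In a real system the model would produce this request; it is hard-coded here.
    requested = '{"name": "get_order_status", "arguments": {"order_id": "A100"}}'
    print(run_tool_call(history, requested))
```

Restricting execution to a named registry is a deliberate choice: the model can only ask for actions the application has explicitly exposed.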

The Modern Voice API: A Voice That Can Keep Up

In parallel with the LLM revolution, a new generation of voice infrastructure has emerged. The best voice API for business communications is now a sophisticated, real-time media platform.

- Ultra-Low Latency: Modern voice platforms, like FreJun Teler, are built on a globally distributed, edge-native architecture. This means they can process the audio of a call at a location physically close to the user, dramatically reducing network latency and enabling a fluid, back-and-forth conversation. The importance of this speed is hard to overstate: Google’s published experiments on web search latency found that added delays of as little as 100 to 400 milliseconds measurably reduced how much people searched.

- Programmable Real-Time Media: A modern voice API gives developers the power to programmatically access and manipulate the raw audio stream of a live call in real time. This is the critical missing piece for connecting LLMs to telephony. It allows the user’s voice to be streamed directly to a Speech-to-Text engine the instant they start speaking.
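The idea of forwarding audio the instant it arrives, rather than waiting for the caller to finish, can be sketched with Python's `asyncio`. Everything here is a stand-in: `call_audio` simulates a live media stream, `StubTranscriber` is a hypothetical streaming STT client that only counts frames, and the 20 ms frame size is an assumption. None of it represents a specific vendor's API.

```python
import asyncio
from typing import AsyncIterator, List

FRAME_MS = 20  # assumed frame duration for this example


async def call_audio(frames: List[bytes]) -> AsyncIterator[bytes]:
    """Stand-in for a live call's media stream, yielding one frame at a time."""
    for frame in frames:
        await asyncio.sleep(0)  # yield control, as a real network read would
        yield frame


class StubTranscriber:
    """Hypothetical streaming STT client; counts audio instead of transcribing."""

    def __init__(self) -> None:
        self.frames_received = 0
        self.partials: List[str] = []

    async def send(self, frame: bytes) -> None:
        self.frames_received += 1
        # Pretend a partial result is available after every 5 frames (100 ms).
        if self.frames_received % 5 == 0:
            elapsed = self.frames_received * FRAME_MS
            self.partials.append(f"partial after {elapsed} ms")


async def stream_call_to_stt(frames: List[bytes], stt: StubTranscriber) -> None:
    """Forward each frame as it arrives instead of buffering the utterance."""
    async for frame in call_audio(frames):
        await stt.send(frame)


def demo() -> List[str]:
    stt = StubTranscriber()
    frames = [b"\x00" * 160] * 10  # ten 20 ms frames of 8 kHz, 8-bit audio
    asyncio.run(stream_call_to_stt(frames, stt))
    return stt.partials


if __name__ == "__main__":
    print(demo())
```

Because the transcriber sees audio frame by frame, it can start producing partial results while the caller is still talking, which is where much of the latency saving comes from.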

This powerful combination, a brain that can think like a human and a voice infrastructure that can operate at the speed of a human conversation, is what is enabling the next generation of business communication.

Ready to connect your LLM to a voice platform that was built for the demands of real-time AI? Sign up for FreJun AI and explore our powerful, low-latency infrastructure.

What Does This New Generation of Business Communication Look Like?

The synergy between LLMs and a high-quality voice API is unlocking a new class of LLM-driven voice bots that are more than just simple IVRs. They are true conversational agents. This table shows the evolution of automated voice systems.

| Characteristic | Old IVR System | Next-Gen LLM-Powered Voice Agent |
| --- | --- | --- |
| Interaction Model | Rigid, menu-driven (“Press 1…”). | Conversational, natural language. |
| Understanding | Keyword-based, easily confused. | Deep contextual and semantic understanding. |
| Problem-Solving | Limited to pre-programmed, simple tasks. | Dynamic, can use tools and APIs to solve complex problems. |
| User Experience | Often frustrating and a barrier to service. | Helpful, empathetic, and a preferred channel for many tasks. |
| Underlying Tech | Basic telephony and pre-recorded audio. | Best voice API for business communications, STT, LLM, TTS. |


A Real-World Example: The “Autonomous Contact Center Agent”

Imagine a customer calling an e-commerce company to return a product.

- The Conversation Begins: The call is answered instantly by an LLM-driven voice bot. The AI greets the customer and asks how it can help.

- The LLM Understands: The customer says, “Hi, I’d like to return the blue sweater I bought last week, it’s the wrong size.” The LLM understands the core intent (return), the specific item (blue sweater), and the reason (wrong size) all from this single, natural sentence.

- The LLM Acts: The LLM uses the company’s order management API to authenticate the user, find the order, confirm the item, and initiate the return process in the backend system.

- The LLM Confirms and Upsells: The AI then says, “Okay, I’ve processed that return for you. You’ll receive a shipping label in your email in the next two minutes. I see that we do have that same sweater in the next size up in stock. Would you like me to process an exchange for you instead?”
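The backend side of this scenario can be sketched in a few lines. The example assumes the LLM has already extracted the customer, item, and reason from the caller's sentence. The in-memory `ORDERS` and `STOCK` tables are hypothetical stand-ins for an order management API and an inventory system.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass
class Order:
    order_id: str
    item: str
    size: str
    returned: bool = False


# Hypothetical in-memory stand-ins for the order and inventory systems.
ORDERS: Dict[str, Order] = {"cust-42": Order("A100", "blue sweater", "M")}
STOCK: Dict[Tuple[str, str], int] = {("blue sweater", "L"): 3}
NEXT_SIZE_UP = {"S": "M", "M": "L", "L": "XL"}


def handle_return(customer_id: str, item: str, reason: str) -> str:
    """Process a return and, for sizing problems, offer the next size up."""
    order = ORDERS.get(customer_id)
    if order is None or order.item != item:
        return "I couldn't find that order. Let me connect you with a colleague."
    order.returned = True
    reply = "Okay, I've processed that return for you."
    bigger = NEXT_SIZE_UP.get(order.size)
    if reason == "wrong size" and bigger and STOCK.get((item, bigger), 0) > 0:
        reply += (
            f" We have the {item} in size {bigger} in stock."
            " Would you like an exchange instead?"
        )
    return reply


if __name__ == "__main__":
    print(handle_return("cust-42", "blue sweater", "wrong size"))
```

In a real deployment the reply text would usually be generated by the LLM from the structured result, and the lookup would be gated behind proper caller authentication.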

Why FreJun AI is the Best Voice API for This New Era

At FreJun AI, our entire Teler voice infrastructure was architected with this future in mind. We provide the essential, high-performance foundation that developers need to build these sophisticated LLM-driven voice bots.

- We are Obsessed with Low Latency: Our globally distributed, edge-native architecture is designed from the ground up to minimize the round-trip time, ensuring your real-time AI conversations feel natural and fluid.

- We are Radically Developer-First: We provide the powerful, easy-to-use APIs and real-time media access that give you the granular control you need to build a truly integrated system.

- We are Model-Agnostic: We believe in giving you the freedom to choose. We provide the best “voice,” but we allow you to connect any “brain” (any STT, LLM, or TTS) you choose, ensuring your application is always powered by best-in-class AI.
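As a rough illustration of what model-agnostic means in application code, the sketch below defines small interfaces for the three AI stages and an agent that accepts any implementation of them. The interface names, method names, and stub classes are assumptions made for this example. They are not FreJun's API.

```python
from typing import Protocol


class SpeechToText(Protocol):
    def transcribe(self, audio: bytes) -> str: ...


class LanguageModel(Protocol):
    def reply(self, text: str) -> str: ...


class TextToSpeech(Protocol):
    def synthesize(self, text: str) -> bytes: ...


class VoiceAgent:
    """Runs one conversational turn through whichever components it is given."""

    def __init__(self, stt: SpeechToText, llm: LanguageModel, tts: TextToSpeech) -> None:
        self.stt = stt
        self.llm = llm
        self.tts = tts

    def handle_turn(self, audio: bytes) -> bytes:
        text = self.stt.transcribe(audio)
        answer = self.llm.reply(text)
        return self.tts.synthesize(answer)


# Trivial stubs standing in for real providers.
class EchoSTT:
    def transcribe(self, audio: bytes) -> str:
        return audio.decode("utf-8")


class UpperLLM:
    def reply(self, text: str) -> str:
        return text.upper()


class BytesTTS:
    def synthesize(self, text: str) -> bytes:
        return text.encode("utf-8")


if __name__ == "__main__":
    agent = VoiceAgent(EchoSTT(), UpperLLM(), BytesTTS())
    print(agent.handle_turn(b"hello"))
```

Swapping a provider then means writing one new class that satisfies the relevant interface, with no change to the agent itself.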

This is our core promise: “We handle the complex voice infrastructure so you can focus on building your AI.”


Conclusion

We are at the beginning of a paradigm shift in how businesses and their customers interact. The powerful duo of intelligent LLMs and high-performance voice APIs is finally delivering on the long-held promise of truly conversational AI. This is a technology that will move from a competitive advantage to a fundamental expectation in a remarkably short period of time.

For businesses aiming to lead this transformation, the strategic imperative is clear: choose the best voice API for business communications, one architected for the speed, intelligence, and scale of this new conversational world.

Want to see how our low-latency infrastructure can bring your LLM to life? Schedule a demo for FreJun Teler.


Frequently Asked Questions (FAQs)

What is a Large Language Model (LLM)?

An LLM is a type of artificial intelligence that has been trained on a massive amount of text data. This allows it to understand, generate, and respond to human language in a very sophisticated and context-aware manner. The most famous example is OpenAI’s GPT series.

What is a voice API?

A voice API is a set of programming tools that allows developers to add voice communication features to their applications. The best voice API for business communications provides not just call control, but also deep, real-time access to the call’s audio stream.

How do LLMs and voice APIs work together?

The LLM provides the “brain” (the intelligence to understand and respond), while the voice API provides the “voice” (the real-time connection to the telephone network). Together, they allow you to create intelligent LLM-driven voice bots that can have natural conversations.

What is the biggest challenge in building an LLM-driven voice bot?

The biggest challenge is latency. The entire process of listening, thinking, and responding must happen in a fraction of a second for the conversation to feel natural. This requires a low-latency AI voice platform.

What are real-time AI conversations?

These are conversations between a human and an AI that happen with no perceptible delay. The AI is able to listen and respond instantly, mimicking the natural back-and-forth rhythm of a human conversation.

Can I use my own LLM?

Yes. With a model-agnostic provider like FreJun AI, you have the complete freedom to connect your own custom-trained or fine-tuned LLM to our voice infrastructure.

How does a modern voice API handle sudden spikes in call volume?

A modern, cloud-native voice API is built on an elastic infrastructure that can scale automatically. It can handle a massive, sudden spike in call volume (for example, during a service outage) without any degradation in performance.

What role does FreJun AI play?

FreJun AI provides the foundational voice infrastructure. Our Teler engine is the powerful, low-latency platform for connecting LLMs to telephony. We provide the APIs and the real-time media streaming capabilities that developers need to build their AI voice applications.