As a developer, you live in a world of APIs, microservices, and infinite cloud scalability. You can spin up a hundred servers with a single command and process a billion data points with a few lines of code. But when your brilliant AI application needs to make or receive a simple phone call, you often hit a wall.

Suddenly, you are thrust into the arcane, rigid world of traditional telecommunications, a world of channels, carriers, and protocols that feels a century removed from the agile, on-demand universe you are used to. This is the chasm that modern, developer-first elastic SIP trunking is designed to bridge.

For a developer tasked with building voice AI with SIP, the challenge is not just about making a call; it is about building a system that can handle a thousand simultaneous, low-latency, conversational data streams. It is about creating a voice infrastructure that is as scalable, programmable, and intelligent as the AI it is designed to serve.

Traditional SIP trunking, with its fixed channels and GUI-based configurations, is a non-starter. The future of voice AI development belongs to a new class of provider that treats voice not as a phone call, but as a real-time, programmable data service.

This developer's guide to SIP trunking explores the architectural principles and practical steps for using a modern elastic SIP trunking platform to build and scale production-grade voice AI systems.


Why is Traditional SIP Trunking a Dead End for AI Developers?

To understand why a new approach is needed, we must first recognize why the old one fails. The first generation of SIP trunking was a breakthrough, but it was designed to solve a different problem: replacing the physical PRI lines that connected to a hardware PBX. It was built for IT administrators, not for software developers.

The Architectural Mismatch

The core problem with traditional SIP trunking for an AI developer is an architectural mismatch.

- The “Black Box” Problem: A traditional SIP trunk is a black box. It receives a call and is configured to “terminate” that call to a single, static IP address (your PBX). As a developer, you have no granular control over the call in real-time. More importantly, you have no easy, programmatic way to access the raw audio stream (the media), which is the lifeblood of any voice AI application.

- The “Rigid Channel” Problem: The channelized model, where you purchase a fixed number of call paths, is the antithesis of a modern, scalable application. An AI-powered outbound campaign might need to go from zero to 5,000 concurrent calls in ten seconds. A traditional trunk would simply reject every call beyond its purchased channel count.

- The “Manual Configuration” Problem: Everything from provisioning numbers to changing call routing is done through a web-based GUI. This is a slow, manual process that does not fit into an automated, CI/CD development workflow.


What is a Developer-First Elastic SIP Trunking Platform?

A modern, developer-first elastic SIP trunking platform is a complete rethinking of what a voice provider should be. It is not just a connection to the phone network; it is a full-fledged Communication Platform as a Service (CPaaS) that is designed to be controlled by code.

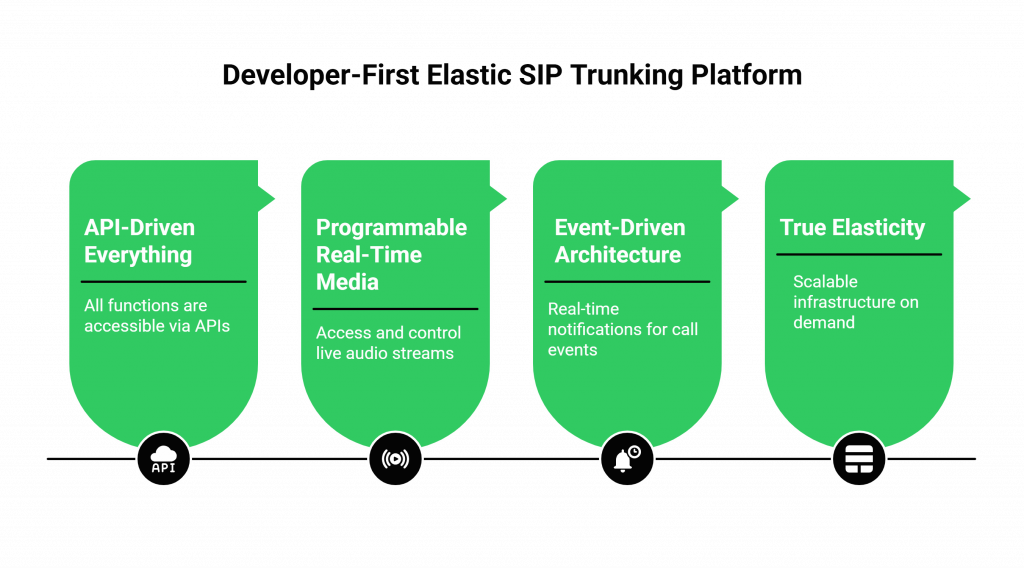

The Core Principles of a Developer-First Platform

- API-Driven Everything: Every single function of the platform, from buying a phone number to manipulating a live call’s media, is exposed through a clean, well-documented, and robust API. The API is not an afterthought; it is the primary interface to the system.

- Programmable Real-Time Media: This is the game-changer. The platform gives you the power to programmatically access and control the raw audio stream (RTP) of a live call in real time. This is the essential capability for integrating SIP with LLMs.

- Event-Driven Architecture: The platform communicates with your application through real-time event notifications (webhooks). It tells your application everything that happens on a call (“ringing,” “answered,” “speech detected,” “hung up”), allowing your code to react and orchestrate the workflow step by step.

- True Elasticity: The platform is built on a massive, globally distributed infrastructure that can handle virtually any level of scale on demand. Capacity is not something you provision; it is something you use.
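The event-driven principle is easiest to see in code. Below is a minimal Python sketch of how an application might route incoming event notifications to handlers. The event names mirror the ones listed above, but the JSON payload shape (an `event` field plus a `call_id`) and the snake_case event identifiers are assumptions for illustration, not FreJun AI's documented webhook format.

```python
import json
from typing import Callable, Dict

# Hypothetical payload shape: {"event": "<name>", "call_id": "<id>", ...}
Handler = Callable[[dict], str]

_handlers: Dict[str, Handler] = {}


def on(event_name: str) -> Callable[[Handler], Handler]:
    """Register a function as the handler for one event type."""
    def register(func: Handler) -> Handler:
        _handlers[event_name] = func
        return func
    return register


@on("ringing")
def handle_ringing(payload: dict) -> str:
    return f"call {payload['call_id']} is ringing"


@on("answered")
def handle_answered(payload: dict) -> str:
    return f"call {payload['call_id']} answered; start the conversation"


@on("speech_detected")
def handle_speech(payload: dict) -> str:
    return f"call {payload['call_id']}: caller is speaking"


@on("hung_up")
def handle_hangup(payload: dict) -> str:
    return f"call {payload['call_id']} ended; release resources"


def dispatch(raw_body: str) -> str:
    """Decode one webhook body and hand it to the matching handler."""
    payload = json.loads(raw_body)
    handler = _handlers.get(payload.get("event", ""))
    if handler is None:
        # Unknown events are acknowledged but otherwise ignored.
        return "ignored"
    return handler(payload)


if __name__ == "__main__":
    print(dispatch('{"event": "answered", "call_id": "c-123"}'))
```

Keeping each reaction in its own small handler means new event types can be supported by registering one more function, without touching the dispatcher.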

This is the core philosophy of the FreJun AI platform. Our Teler engine is not a traditional SIP trunk; it is a powerful, API-driven scalable voice SDK that gives you the foundational building blocks to create any voice experience imaginable.

This table highlights the fundamental shift in mindset and capability.

| Feature | Traditional SIP Trunking | Developer-First Elastic SIP Trunking |
|---|---|---|
| Primary Interface | Web-based GUI | REST API |
| Media Access | Hidden; terminated to a PBX | Programmable and exposed in real time |
| Workflow Control | Static and pre-configured | Dynamic and orchestrated by your application code |
| Scalability | Manual and limited by purchased channels | Automatic and virtually unlimited |
| Target User | IT administrator | Software developer |
| Integration Model | Configuration | Programming |

How Do You Architect a Scalable Voice AI System? A Step-by-Step Guide

Using a platform like FreJun AI, a developer can architect a voice AI system that is as scalable and resilient as any other modern cloud application. The key is a decoupled, event-driven architecture.

Step 1: Decouple the “Voice” from the “Brain”

The first and most important architectural principle is to separate your concerns.

- The “Voice” (Handled by FreJun AI’s Teler Engine): This is the voice infrastructure. Its job is to manage the phone numbers, connect the calls, and handle the real-time audio streaming with low latency.

- The “Brain” (Your AgentKit): This is your application. It is where your AI's intelligence lives: your STT, LLM, and TTS models, along with your business logic and conversational state.

Your AgentKit tells the Teler engine what to do via API calls. The Teler engine tells your AgentKit what is happening via webhooks. This clear separation makes your system more flexible, more scalable, and far easier to debug. This decoupling is a cornerstone of modern software design.
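The “API calls” half of that contract can be sketched in a few lines. This example builds, but does not send, a request asking the voice layer to place an outbound call and fetch its instructions from your application. The base URL, the `/calls` path, the JSON field names, and the bearer-token header are all hypothetical placeholders; the real endpoints and authentication scheme come from the platform's API reference.

```python
import json
import urllib.request

# Hypothetical values for illustration only; consult the platform's API
# reference for the real base URL, paths, and authentication scheme.
API_BASE = "https://api.example.com/v1"
API_KEY = "YOUR_API_KEY"


def build_outbound_call_request(to_number: str, from_number: str,
                                voice_url: str) -> urllib.request.Request:
    """Build (but do not send) a REST request asking the voice layer to dial out.

    The voice layer is expected to fetch call instructions from voice_url
    once the call connects, which is how the "Brain" keeps control of it.
    """
    body = json.dumps({
        "to": to_number,
        "from": from_number,
        "voice_url": voice_url,
    }).encode("utf-8")
    return urllib.request.Request(
        url=f"{API_BASE}/calls",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {API_KEY}",
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    req = build_outbound_call_request("+15550100", "+15550199",
                                      "https://agent.example.com/voice")
    print(req.get_method(), req.full_url)
    # Sending it would be: urllib.request.urlopen(req)
```

Keeping request construction separate from sending makes it easy to unit-test the “Brain” without touching the network.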

A recent report on microservices adoption found that over 90% of organizations that have adopted microservices have seen a significant improvement in their application’s scalability and resilience.


Step 2: Configure Your Webhooks

The webhook is the “front door” to your application. In the FreJun AI dashboard or via our API, you will purchase a phone number and configure it with a “Voice URL.” When a call comes in to that number, our Teler engine will make an HTTP request to that URL. This is the trigger that starts your workflow.
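A Voice URL is just an HTTP endpoint. The sketch below uses only Python's standard-library WSGI support to show the shape of that endpoint: it reads the form-encoded request the platform sends and answers with XML call instructions. The `From` field name, the `<Response>` root element, and the use of form encoding are assumptions borrowed from common voice-platform conventions, not confirmed details of FreJun AI's webhook.

```python
from urllib.parse import parse_qs
from wsgiref.simple_server import make_server
from xml.sax.saxutils import escape


def voice_app(environ, start_response):
    """Answer the platform's initial webhook with call instructions."""
    if environ.get("REQUEST_METHOD") != "POST":
        start_response("405 Method Not Allowed", [("Content-Type", "text/plain")])
        return [b"POST only"]

    # Read the form-encoded body; CONTENT_LENGTH may be missing or empty.
    length = int(environ.get("CONTENT_LENGTH") or 0)
    form = parse_qs(environ["wsgi.input"].read(length).decode("utf-8"))
    caller = form.get("From", ["unknown caller"])[0]  # assumed field name

    xml = (
        "<Response>"  # assumed root element
        f"<Say>Hello, {escape(caller)}. Thanks for calling.</Say>"
        "</Response>"
    )
    body = xml.encode("utf-8")
    start_response("200 OK", [("Content-Type", "application/xml"),
                              ("Content-Length", str(len(body)))])
    return [body]


def serve(port: int = 8000) -> None:
    """Run the endpoint locally; blocks until interrupted."""
    with make_server("", port, voice_app) as server:
        server.serve_forever()
```

Calling `serve()` starts a local server; the platform needs a publicly reachable URL, so in development you would typically expose it through a tunnel or deploy it behind HTTPS.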

Step 3: Orchestrate the Call with a Markup Language

Your application’s response to that initial webhook is a set of instructions. At FreJun AI, we use a simple but powerful XML-based language called FML (FreJun AI Markup Language). Your code dynamically generates this FML to control the call.

A simple “hello world” response contains little more than a single <Say> instruction with a greeting. When our Teler engine receives it, it performs Text-to-Speech synthesis on the greeting text and plays it to the caller.
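Because FML is XML-based, your code can generate it with an XML library rather than by string concatenation, which takes care of escaping characters such as `&` and `<`. This sketch uses Python's `xml.etree.ElementTree`. The `<Say>` verb is named in this article; the `<Response>` root element is an assumption modeled on similar call-control markup, so check FreJun AI's FML reference for the exact document structure.

```python
import xml.etree.ElementTree as ET


def hello_world_fml(greeting: str) -> str:
    """Return a one-instruction FML-style document that speaks a greeting."""
    root = ET.Element("Response")      # assumed root element name
    say = ET.SubElement(root, "Say")   # <Say>: text-to-speech playback
    say.text = greeting                # ElementTree escapes &, <, > for us
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(hello_world_fml("Hello from my AI agent!"))
    # <Response><Say>Hello from my AI agent!</Say></Response>
```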

Step 4: Access the Real-Time Media and Engage the AI

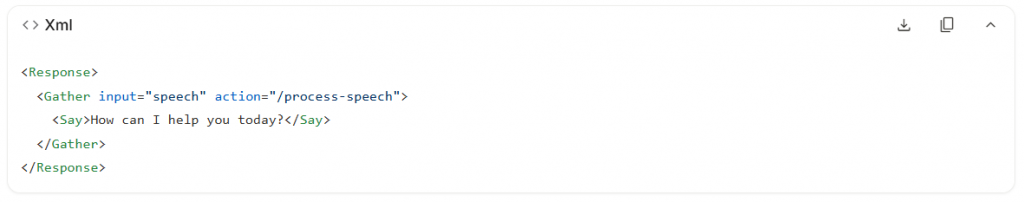

To have a conversation, you need to listen. This is where the <Gather> verb in FML comes in.

A single block of FML built around the <Gather> verb tells our Teler engine to do several things in sequence:

- Speak: Say the phrase “How can I help you today?”

- Listen: Immediately after, start listening for the caller to speak.

- Stream & Transcribe: As the caller speaks, Teler can be configured to stream that audio in real time to your chosen STT engine.

- Notify: Once the caller stops speaking, Teler will make another HTTP request (a webhook) to the URL you specified in the action attribute (/process-speech). This request will contain the transcribed text of what the user said.
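A comparable sketch for the listening step follows. Only the `<Gather>` and `<Say>` verbs and the `action` attribute pointing at `/process-speech` come from this article; the `<Response>` root element and nesting the prompt inside `<Gather>` are assumptions, so treat the output as illustrative rather than guaranteed-valid FML.

```python
import xml.etree.ElementTree as ET


def gather_fml(prompt: str, action_url: str = "/process-speech") -> str:
    """Speak a prompt, then listen and have the caller's speech posted to action_url."""
    root = ET.Element("Response")  # assumed root element
    # action: the webhook the engine calls once the caller stops speaking.
    gather = ET.SubElement(root, "Gather", {"action": action_url})
    say = ET.SubElement(gather, "Say")  # prompt nested inside <Gather> (assumed)
    say.text = prompt
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(gather_fml("How can I help you today?"))
```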

Step 5: The Conversational Loop

Your application receives the webhook at the /process-speech endpoint. This is where the core of your AI logic lives.

- Process with LLM: You take the transcribed text and send it to your LLM for processing.

- Formulate a Response: Your LLM returns a text-based response.

- Continue the Conversation: Your application generates a new FML response with another <Say> and <Gather> to continue the conversation.

This loop continues until the call is complete. This event-driven, request-response model is powerful and scalable. Because no individual server has to remember the conversation (its history can live in a shared store such as a database or cache, keyed by call), your application servers can be stateless, meaning you can easily scale up the number of instances of your “Brain” to handle any number of concurrent conversations.
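The loop can be sketched as a single handler function. It appends the caller's words to the conversation history, asks a model for a reply, and returns the next `<Say>` plus `<Gather>` instructions. The `call_id` and `transcript` field names are hypothetical, the dictionary stands in for a shared store such as Redis, the `<Response>` root and nesting are assumptions, and `echo_llm` is a toy substitute for a real LLM client.

```python
import xml.etree.ElementTree as ET
from typing import Callable, Dict, List

# Stand-in for a shared store such as Redis; a plain dict only works in one process.
ConversationStore = Dict[str, List[dict]]


def process_speech(form: Dict[str, str], store: ConversationStore,
                   llm: Callable[[List[dict]], str]) -> str:
    """Handle one /process-speech webhook and return the next FML-style document.

    `form` is the already-decoded webhook body. The "call_id" and "transcript"
    field names are hypothetical placeholders for whatever the platform sends.
    """
    call_id = form["call_id"]
    history = store.setdefault(call_id, [])
    history.append({"role": "user", "content": form.get("transcript", "")})

    reply = llm(history)  # transcript history goes in, reply text comes out
    history.append({"role": "assistant", "content": reply})

    # Speak the reply, then listen again so the loop continues.
    root = ET.Element("Response")  # assumed root element
    gather = ET.SubElement(root, "Gather", {"action": "/process-speech"})
    ET.SubElement(gather, "Say").text = reply
    return ET.tostring(root, encoding="unicode")


def echo_llm(history: List[dict]) -> str:
    """Toy stand-in for a real LLM call."""
    return f"You said: {history[-1]['content']}"
```

Because the handler reads and writes all state through `store`, any instance behind a load balancer can serve the next turn of any call.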



How Does This Enable True Scale for Voice AI?

This architecture is the key to solving the scalability problem.

- The Teler Engine Scales Automatically: Our globally distributed elastic SIP trunking infrastructure is designed to handle millions of simultaneous calls. This layer scales without you ever having to think about it.

- Your Application Scales Like Any Other Web Service: Because your “Brain” (your AgentKit) is a standard web application that responds to HTTP requests, you can scale it using the same tools and techniques you use for any other web service. You can run it in a container, put it behind a load balancer, and scale the number of instances up or down based on traffic.

This is how you go from a prototype that can handle one call to a production system that can handle a hundred thousand.
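Sizing the “Brain” tier then reduces to simple arithmetic: with C peak concurrent calls, k calls that one instance can carry, and a headroom fraction h, you need ⌈C·(1+h)/k⌉ instances. The sketch below computes this with integer math to avoid floating-point rounding at the ceiling. The 50-calls-per-instance figure in the usage note and the 20% default headroom are placeholders for numbers from your own load tests.

```python
def instances_needed(concurrent_calls: int, calls_per_instance: int,
                     headroom_pct: int = 20) -> int:
    """Replicas of the "Brain" needed for a given number of live calls.

    calls_per_instance should come from a load test of your own service;
    headroom_pct reserves spare capacity for traffic spikes.
    """
    if calls_per_instance <= 0:
        raise ValueError("calls_per_instance must be positive")
    if concurrent_calls <= 0:
        return 0
    demand = concurrent_calls * (100 + headroom_pct)  # calls scaled by 100
    capacity = calls_per_instance * 100               # per-instance, same scale
    return -(-demand // capacity)                     # integer ceiling division
```

For the 5,000-call outbound campaign mentioned earlier, `instances_needed(5000, 50)` returns 120; an autoscaler would apply the same rule continuously as traffic changes.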

Conclusion

For a developer, the world of telecommunications no longer has to be an intimidating black box. The advent of developer-first elastic SIP trunking has transformed it into a fully programmable, API-driven service. This is a monumental shift. It means that the same developer who builds your web application can now build your enterprise-grade, globally scalable voice AI system.

By understanding the decoupled architecture of a modern voice platform and by mastering the event-driven workflow of APIs and webhooks, you are no longer just a software developer; you are a communications developer.

You have been given the tools to teach your AI to speak, and with that, the power to automate and reshape the future of human-to-machine interaction.

Want to walk through a live coding session and see how you can connect your AI application to our elastic SIP trunking infrastructure in just a few minutes? Schedule a personalized demo for FreJun Teler.


Frequently Asked Questions (FAQs)

From a developer’s perspective, it is a cloud-based service, accessible via an API, that provides the foundational connectivity to the global telephone network. It allows your application to programmatically make and receive phone calls at scale.

The main advantage is control. Instead of being a static, pre-configured service, a developer-first platform gives you granular, real-time, programmatic control over every aspect of the call, which is essential for building voice AI with SIP.

A webhook is an automated HTTP request that the voice platform sends to your application to notify it of a real-time event (like an incoming call). It is the trigger that allows your application to react and control the call flow, making it the core of this event-driven architecture.

Yes. A key benefit of this decoupled architecture is that it is model-agnostic. The job of the elastic SIP trunking platform is to handle the voice and media; you are free to use whichever STT, LLM, and TTS models you choose in your application.

This is typically done through a high-level command in the platform’s markup language (like the <Gather> verb in FreJun AI’s FML) or through a more advanced, lower-level Real-Time Media API that allows you to directly stream the audio packets.
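At the lower level, the audio typically arrives as RTP packets. Whatever transport a given platform wraps them in (this article does not specify it), the packet format itself is standardized in RFC 3550. As an illustration, this sketch strips the RTP header so that only the encoded audio payload remains; decoding that audio (for example G.711 μ-law, payload type 0) and forwarding it to an STT engine are left out.

```python
import struct
from dataclasses import dataclass


@dataclass
class RtpPacket:
    version: int
    marker: bool
    payload_type: int
    sequence: int
    timestamp: int
    ssrc: int
    payload: bytes


def parse_rtp(packet: bytes) -> RtpPacket:
    """Parse an RTP packet (RFC 3550 fixed header) and return its audio payload."""
    if len(packet) < 12:
        raise ValueError("packet shorter than the 12-byte RTP header")
    b0, b1, seq, ts, ssrc = struct.unpack("!BBHII", packet[:12])
    version = b0 >> 6
    if version != 2:
        raise ValueError(f"unsupported RTP version {version}")
    has_padding = bool(b0 & 0x20)
    has_extension = bool(b0 & 0x10)
    csrc_count = b0 & 0x0F

    offset = 12 + 4 * csrc_count  # skip contributing-source identifiers
    if has_extension:
        if offset + 4 > len(packet):
            raise ValueError("truncated RTP header extension")
        # Extension header: 16-bit profile field, 16-bit length in 32-bit words.
        (ext_words,) = struct.unpack("!H", packet[offset + 2:offset + 4])
        offset += 4 + 4 * ext_words
    end = len(packet)
    if has_padding:
        end -= packet[-1]  # last byte holds the padding count (itself included)
    if offset > end:
        raise ValueError("malformed RTP packet")

    return RtpPacket(version, bool(b1 & 0x80), b1 & 0x7F, seq, ts, ssrc,
                     packet[offset:end])
```

The sequence number lets you detect lost or reordered packets, and for G.711 the timestamp advances at 8,000 ticks per second, so a 160-byte payload represents 20 ms of audio.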

A scalable voice SDK is a set of software libraries and tools provided by the platform that makes it even easier for a developer to interact with the voice API. It provides pre-built functions and handles the low-level HTTP requests, allowing you to build your application faster.

FreJun AI provides the complete, developer-first platform. We provide the globally scalable elastic SIP trunking infrastructure (our Teler engine), a powerful and well-documented API, a simple markup language (FML), and the scalable voice SDKs you need. We handle all the underlying telecom complexity, allowing you to focus on your AI’s logic.

While open-source platforms are powerful, they require you to manage the entire infrastructure yourself. You are responsible for the servers, the carrier connections, the security, and the scalability. A platform like FreJun AI is a managed service; we handle all of that for you, allowing you to get to market much faster and with greater reliability.