Designing AI agents is easy.

Designing production-grade AI agents that can reliably talk to humans in real time is not.

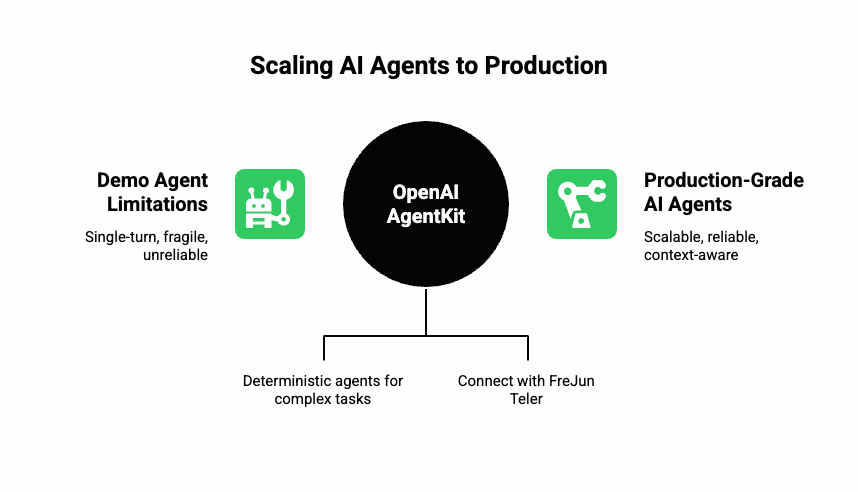

Most teams quickly discover the gap between “demo-ready” and “deployment-ready”: latency spikes, missing context, brittle tool calls, inconsistent state management, and voice pipelines that collapse under real customer traffic. This is where AgentKit and Teler’s realtime voice layer fundamentally change the architecture.

AgentKit gives you structured control over reasoning, planning, memory, and tool execution. Teler provides the ultra-low-latency media and call infrastructure required to deliver those decisions as live, natural conversations on any phone network.

This guide shows you how to combine both into a seamless, scalable, production-grade workflow that can support real customers – not just sandbox demos.

Why Do AI Teams Struggle to Move from Demo Agents to Production-Grade Workflows?

Over the past year, many engineering teams have experimented with language-based agents. Most proofs of concept look impressive in controlled demos, yet they fail when scaled to real users or live environments.

The main reason is that demo agents are designed for single-turn interactions, not for continuous, context-aware workflows. When real-world data, phone calls, or asynchronous triggers are introduced, these prototypes face latency spikes, missing context, and fragile error handling.

Common pain points when moving to production

- High latency: speech or event delays break conversational flow.

- No orchestration: tools execute in isolation, without workflow logic or retry policies.

- Limited observability: debugging becomes difficult without traces or logs per step.

- Unreliable context: session data is lost between turns or after system restarts.

- Operational complexity: multiple services (LLM, STT, TTS, telephony) must be synchronized.

As product teams seek enterprise-grade reliability and scalable AI agents, they realize the need for structured workflow automation and a robust voice infrastructure.

This is exactly where OpenAI AgentKit helps teams design multi-step, deterministic agents that can later connect with real-time voice through FreJun Teler.

What Exactly Are Production-Grade Agent Workflows?

A production-grade agent workflow is not just a chatbot prompt. It is an orchestrated system that handles decision logic, external actions, retries, and monitoring, all while maintaining context across long-running interactions.

The five pillars of a production-ready workflow

- Reliable orchestration: every tool or API call is coordinated through defined steps, not ad-hoc prompts.

- Context continuity: session memory and variables persist across actions.

- Low latency: optimized for real-time responses, especially in voice use cases.

- Scalability: supports parallel calls and thousands of concurrent sessions.

- Security and guardrails: limits agent access, validates inputs, and prevents unintended actions.

| Aspect | Prototype Agent | Production-Grade Agent |
| --- | --- | --- |
| State management | Stored in prompt | Persisted in structured session |
| Error handling | Manual retries | Automated policies & alerts |
| Testing | Manual | Continuous evaluation & trace grading |
| Deployment | Local or dev | Cloud-native, distributed |
| Voice support | None | Realtime layer integration |

These characteristics help a workflow scale safely and recover gracefully from network or API failures.

How Does OpenAI AgentKit Simplify Building Scalable AI Agents?

OpenAI AgentKit provides a structured foundation for building multi-step AI workflows that enterprises can trust. It introduces a formal way to describe, execute, and monitor agent behavior using code instead of loose prompt chaining.

Key building blocks of AgentKit

- Agents and Workspaces: Each agent resides inside a workspace, defining its tools, context schema, and evaluation parameters. This separation enables multiple workflows (sales, support, onboarding) to run in isolation.

- Tool Schemas and Validation: Tools are defined with JSON schemas that enforce input and output consistency. Validation ensures that every function call follows expected data types and contracts.

- Shared State and Context Persistence: Session variables (e.g., customer_id, call_stage, summary_notes) allow long-running processes to maintain continuity without rewriting prompts.

- Routing and Confidence Thresholds: AgentKit supports conditional routing: if model confidence is low, the workflow can reroute to a secondary model or a human agent. This improves reliability and compliance.

- Guardrails and Trace Grading: Built-in evaluation tools record every decision and output. Engineers can review traces, grade them, and refine workflows using structured datasets.

Because of these design principles, AgentKit turns AI prototypes into scalable AI agents that operate with predictability.
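To make the validation and routing ideas concrete, here is a minimal sketch in plain Python. The schema format, the `validate_args` and `route` helpers, and the 0.7 threshold are all illustrative assumptions; none of this is AgentKit's actual API.

```python
from typing import Any

# Hypothetical tool contract: field name -> required Python type.
LOOKUP_ORDER_SCHEMA: dict[str, type] = {"customer_id": str, "order_number": int}


def validate_args(schema: dict[str, type], args: dict[str, Any]) -> list[str]:
    """Return a list of contract violations; an empty list means the call is valid."""
    errors = []
    for field, expected in schema.items():
        if field not in args:
            errors.append(f"missing field: {field}")
        elif not isinstance(args[field], expected):
            errors.append(f"{field}: expected {expected.__name__}")
    for field in args:
        if field not in schema:
            errors.append(f"unexpected field: {field}")
    return errors


def route(confidence: float, threshold: float = 0.7) -> str:
    """Send low-confidence turns to a human instead of acting on them."""
    return "primary_agent" if confidence >= threshold else "human_handoff"
```

Rejecting a malformed tool call before it reaches an external system is usually cheaper than recovering from it afterwards, which is why validation sits in front of execution here.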


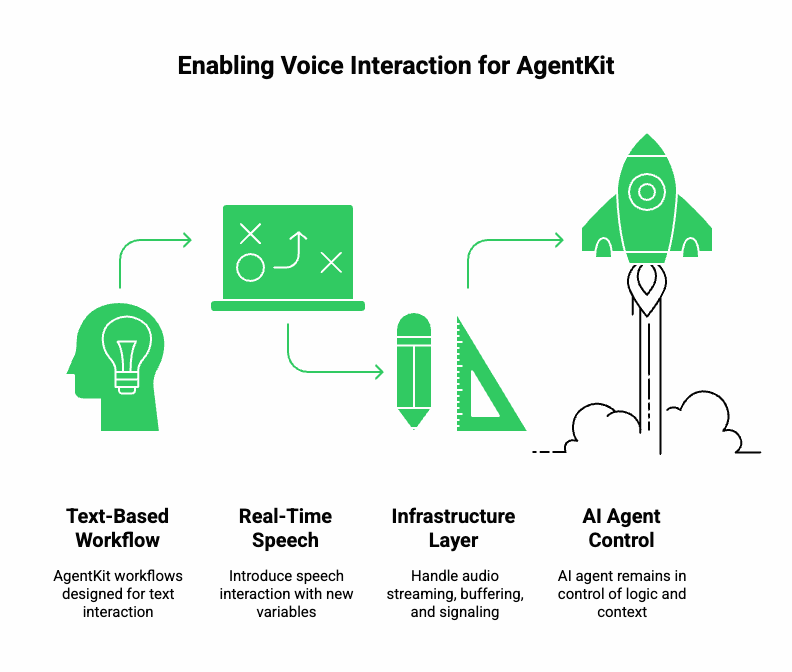

How Do You Bring AgentKit Workflows to the Voice Layer?

After designing reliable workflows, the next challenge is enabling real-time speech interaction. Moving from text to voice introduces several new variables:

- Latency: Every millisecond matters in conversation.

- Bidirectional streaming: Speech-to-text and text-to-speech must work simultaneously.

- Telephony integration: PSTN or VoIP networks add transport complexity.

- Error handling: dropped packets, jitter, or call transfers must be managed gracefully.

Traditional voice APIs or CPaaS platforms can handle calls, but they are not designed for AI-driven dialogue loops. They treat audio as static media, not as part of a dynamic decision workflow.

To achieve a seamless voice experience, engineers need an infrastructure layer that handles audio streaming, buffering, and telephony signaling, while letting the AI agent remain in control of logic and context.

That infrastructure is introduced through FreJun Teler, which we explore later in this guide.

Before we reach that point, it’s essential to understand how to combine the orchestration power of AgentKit with a real-time transport layer.

How Do You Architect the Workflow Between LLM, STT, and TTS?

Designing production-grade voice agents requires connecting multiple services in a controlled pipeline. Each service (speech recognition, language reasoning, and speech synthesis) must interact through a shared context.

Typical workflow components

- Speech-to-Text (STT): converts incoming audio into partial transcripts.

- AgentKit Workflow: consumes transcripts, decides the next action, and generates text output.

- Text-to-Speech (TTS): converts the agent’s response into natural audio.

- Voice Transport Layer: streams audio back to the caller with minimal delay.

- Context Store: tracks conversation state and tool outputs.

Recommended design pattern

User speech → Streaming STT (partial transcripts) → AgentKit Workflow (LLM + tools + context) → TTS Engine (audio chunks) → Voice Transport Layer (user playback)

This architecture lets the agent start processing while the user is still speaking, which keeps responses fast and the rhythm natural. Moreover, each component (LLM, STT, or TTS) can be replaced independently, making the system modular and scalable.
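The pattern above can be sketched with `asyncio` queues. This is a toy under stated assumptions: `fake_stt`, `fake_agent`, and `fake_tts` are hypothetical stand-ins for real providers, and the "agent" simply acknowledges each partial transcript instead of reasoning about it.

```python
import asyncio

END = None  # sentinel that marks the end of a stream


async def fake_stt(words: list[str], out: asyncio.Queue) -> None:
    """Stand-in for a streaming STT engine: emits one partial transcript per word."""
    for word in words:
        await out.put(word)
    await out.put(END)


async def fake_agent(inp: asyncio.Queue, out: asyncio.Queue) -> None:
    """Stand-in for the agent step: replies to each partial as soon as it arrives."""
    while (partial := await inp.get()) is not END:
        await out.put(f"ack:{partial}")
    await out.put(END)


async def fake_tts(inp: asyncio.Queue, played: list[str]) -> None:
    """Stand-in for TTS plus transport: 'plays' each chunk by recording it."""
    while (text := await inp.get()) is not END:
        played.append(text.upper())


async def run_pipeline(words: list[str]) -> list[str]:
    transcripts: asyncio.Queue = asyncio.Queue(maxsize=8)
    replies: asyncio.Queue = asyncio.Queue(maxsize=8)
    played: list[str] = []
    # All three stages run concurrently; bounded queues provide backpressure.
    await asyncio.gather(
        fake_stt(words, transcripts),
        fake_agent(transcripts, replies),
        fake_tts(replies, played),
    )
    return played


if __name__ == "__main__":
    print(asyncio.run(run_pipeline(["book", "a", "table"])))
```

Because each stage only talks to its neighbors through a queue, swapping a provider means replacing one coroutine, not rewriting the pipeline.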

Best practices for low-latency orchestration

- Stream everything: send partial transcripts and TTS chunks instead of waiting for complete responses.

- Use concurrent pipelines: run STT, LLM reasoning, and TTS generation in parallel where possible.

- Maintain context tokens: reuse variables (session_id, intent_stage) across turns.

- Budget latency: target <300 ms round-trip for natural voice flow.

- Instrument every layer: collect logs and metrics for each component separately.

When teams follow these guidelines, they can achieve smooth, near-real-time interactions that feel human yet remain programmatically controlled.
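A latency budget is easiest to reason about as simple arithmetic. The sketch below uses made-up per-stage timings (they are assumptions, not measurements) and checks them against the 300 ms target mentioned above. It sums stages sequentially, which is a worst case; streaming and overlap between stages would reduce the real figure.

```python
# Target from the guideline above; the stage timings are hypothetical.
BUDGET_MS = 300

stage_ms = {"transport": 60, "stt": 90, "agent": 100, "tts": 80}


def over_budget(stages: dict[str, int], budget_ms: int) -> int:
    """Return how many milliseconds the turn exceeds the budget (0 if within it)."""
    return max(0, sum(stages.values()) - budget_ms)


def slowest_stage(stages: dict[str, int]) -> str:
    """Name the stage to optimize first."""
    return max(stages, key=stages.get)


if __name__ == "__main__":
    print(over_budget(stage_ms, BUDGET_MS), slowest_stage(stage_ms))
```

With these example numbers the turn totals 330 ms, so it is 30 ms over budget, and the agent step is the first candidate for optimization.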

How Do You Ensure Workflow Reliability and Observability?

Even the most advanced workflow fails without visibility and recovery mechanisms. In production systems, reliability comes from monitoring, logging, and automated fallback logic.

Key techniques

- Structured event logging – each workflow step should emit structured logs (JSON or protobuf) for observability tools.

- Trace correlation – link each call, transcript, and action through a common request_id.

- Health checks and heartbeats – verify that STT, TTS, and agent endpoints remain online.

- Automated fallback routes – define default actions when latency exceeds thresholds.

- Evaluation datasets – replay conversations in test mode to verify deterministic results.
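As a rough illustration of structured logging and trace correlation, the sketch below emits one JSON line per workflow step and then rebuilds a single call's trace from a shared `request_id`. The step names (`stt.partial`, `tool.call`, `call.start`) and field names are hypothetical.

```python
import json
import time
import uuid


def make_event(request_id: str, step: str, **fields) -> str:
    """Serialize one workflow step as a single JSON log line."""
    record = {"ts": time.time(), "request_id": request_id, "step": step, **fields}
    return json.dumps(record, sort_keys=True)


def events_for(request_id: str, lines: list[str]) -> list[str]:
    """Reconstruct a call's trace: the ordered step names sharing one request_id."""
    parsed = [json.loads(line) for line in lines]
    return [e["step"] for e in parsed if e["request_id"] == request_id]


# Two interleaved calls writing to the same log stream.
call_a, call_b = str(uuid.uuid4()), str(uuid.uuid4())
log = [
    make_event(call_a, "stt.partial", text="I need to"),
    make_event(call_b, "call.start"),
    make_event(call_a, "tool.call", tool="lookup_order", latency_ms=42),
]
```

Filtering on `request_id` is what lets an observability tool separate interleaved calls into per-call timelines.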

Engineers can apply trace grading, a practice within AgentKit, to measure decision quality and detect regressions after workflow updates.

For long-running calls or multi-step interactions, a durable orchestration framework such as Temporal can manage retries and background tasks while AgentKit handles conversational turns.

Example: Durable agent workflow

| Stage | Orchestrator (e.g., Temporal) | AgentKit | Voice Layer |
| --- | --- | --- | --- |
| Call Start | Create workflow ID | Start session | Accept call |
| Intent Handling | Wait for signal | Parse transcripts | Stream audio |
| Tool Action | Execute external task | Update context | Maintain call |
| Response | Notify workflow | Generate reply | Play audio |
| Completion | Close state | End session | Hang up |

This division of labor allows each subsystem to perform its role reliably while remaining loosely coupled.
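The retry and fallback behavior that an orchestrator provides can be approximated in a few lines. This is a simplified sketch, not how Temporal or AgentKit implement it: `with_retries`, `flaky_crm_update`, and `always_slow` are hypothetical names, and a real durable orchestrator would also persist state across process restarts.

```python
import asyncio


async def with_retries(task, attempts: int = 3, base_delay: float = 0.01,
                       timeout: float = 0.5, fallback=None):
    """Run an async task with a per-attempt timeout and exponential backoff.

    Returns the fallback value if every attempt fails or times out.
    """
    for attempt in range(attempts):
        try:
            return await asyncio.wait_for(task(), timeout=timeout)
        except (asyncio.TimeoutError, ConnectionError):
            if attempt < attempts - 1:
                # Back off: base_delay, 2x, 4x, ...
                await asyncio.sleep(base_delay * 2 ** attempt)
    return fallback


calls = {"n": 0}


async def flaky_crm_update() -> str:
    """Hypothetical external task that fails twice, then succeeds."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("CRM unavailable")
    return "updated"


async def always_slow() -> str:
    """Hypothetical task that never finishes within a tight timeout."""
    await asyncio.sleep(1)
    return "too late"
```

In a voice context the fallback is often a short holding phrase, so the caller hears something while the workflow recovers.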

How Do You Plan for Scalability from Day One?

Designing scalable AI agents involves both horizontal and vertical scaling strategies. Without proper planning, systems hit resource bottlenecks long before product adoption.

Practical scalability patterns

- Stateless AgentKit sessions: persist session context externally (e.g., Redis, DynamoDB).

- Distributed media nodes: deploy voice transport servers regionally to minimize latency.

- Async processing queues: decouple STT/TTS workloads using message brokers.

- Rate limiting and throttling: protect external APIs from overload.

- Autoscaling policies: monitor active calls and spin up containers dynamically.

A well-designed system can handle thousands of concurrent sessions without perceptible delay, ensuring consistent performance for every user.
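Rate limiting is one of the simpler patterns to show in code. Below is a minimal token-bucket sketch; the class name and parameters are illustrative, and the injectable `clock` exists only to make the behavior easy to test.

```python
import time


class TokenBucket:
    """Allow short bursts up to `capacity`, refilling at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A bucket like this would typically sit in front of each external API (CRM, STT, TTS) so that a burst of calls degrades into queued or rejected requests rather than provider-side throttling errors.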


How Do You Maintain Security and Data Integrity?

Because production-grade agents handle sensitive voice data, every layer must be secured by design.

Core security practices

- TLS encryption for media and control channels.

- Token-based authentication for all API requests.

- Access scopes limiting agent permissions to specific tools or datasets.

- Audit logging for every external action or tool call.

- Compliance settings for call recording and retention policies.

By following these principles, enterprises can deploy compliant, auditable, and resilient systems without sacrificing agility.
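Access scopes and audit logging can be combined in one small wrapper. The sketch below is hypothetical (`ScopedAgent` is not part of AgentKit or Teler); it restricts an agent to an allow-list of tools and records every attempt, including denied ones.

```python
from dataclasses import dataclass, field


@dataclass
class ScopedAgent:
    """Hypothetical wrapper that restricts an agent to an allow-list of tools."""
    name: str
    allowed_tools: frozenset[str]
    audit_log: list[dict] = field(default_factory=list)

    def call_tool(self, tool: str, **args) -> bool:
        allowed = tool in self.allowed_tools
        # Record every attempt, including denied ones. Only argument names are
        # logged, so sensitive values do not end up in the audit trail.
        self.audit_log.append({"agent": self.name, "tool": tool,
                               "args": sorted(args), "allowed": allowed})
        return allowed
```

Logging argument names but not values is a deliberate choice here: the audit trail stays useful for review without becoming a second copy of customer data.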

What Is FreJun Teler’s Realtime Layer and Why Does It Matter?

Once your AgentKit workflows are stable in a text environment, the next milestone is to make them conversational in real time. This is where FreJun Teler’s realtime layer becomes essential.

FreJun Teler acts as the voice and media infrastructure that allows your AI agent to interact over actual phone calls, VoIP sessions, or in-app voice channels – all while maintaining low latency and high fidelity.

What makes Teler technically unique

- Low-latency bidirectional media streaming: Teler keeps round-trip audio delivery under 300 ms using optimized WebRTC and SIP trunking layers.

- LLM-agnostic architecture: It can connect to any reasoning engine (OpenAI, Anthropic, Mistral, local models, or RAG pipelines).

- Dynamic audio routing: Streams voice packets between the user, STT, and TTS engines in real time.

- Session management: Preserves metadata (session_id, caller_id, context_state) throughout the conversation.

- Secure telephony interface: Works with enterprise PSTN or VoIP providers under full compliance.

Essentially, Teler abstracts the entire voice transport layer so that engineers can focus on workflow logic, not telephony or audio infrastructure.

How Does the Integration Between AgentKit and Teler Work Technically?

At a high level, the architecture merges two worlds:

- AgentKit – orchestrates reasoning, decision-making, and external tool calls.

- Teler – handles media streaming, voice events, and connection stability.

Let’s see how the two layers communicate:

Step-by-step workflow

- Call initiation:
  - User dials a number or triggers an outbound campaign.
  - Teler accepts the call and establishes a media session (WebRTC/SIP).

- Realtime transcription:
  - Teler streams the incoming audio to an STT engine (OpenAI Realtime API, Whisper, or AssemblyAI).
  - Partial transcripts are sent to AgentKit as structured events.

- AgentKit reasoning:
  - The transcript updates the agent’s state.
  - AgentKit executes logic (intent classification, database lookup, or tool call).
  - The output text is sent back to Teler via webhook or socket.

- Speech synthesis:
  - Teler calls the configured TTS engine (Azure Neural, ElevenLabs, Play.ht, etc.) to generate speech chunks.
  - The response audio is streamed to the user immediately – no waiting for full output.

- Continuous loop:
  - Teler synchronizes STT input and TTS output streams in near real time.
  - The agent maintains context and flow until the call ends.

| Layer | Responsibility | Example Services |
| --- | --- | --- |
| Transport | Media, signaling, sessions | Teler Realtime Layer |
| Perception | Speech recognition | OpenAI Realtime, Whisper |
| Reasoning | Decision logic | AgentKit + LLM |
| Output | Speech synthesis | Azure TTS, ElevenLabs |
| Orchestration | Context, tools, memory | AgentKit workflow logic |

This modular approach ensures fault isolation – if one layer fails, others continue gracefully without breaking the entire conversation.
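The event flow between the transport layer and the agent can be sketched as a small dispatcher. Everything about the message shapes here is an assumption made for illustration: the event types (`call.started`, `transcript.final`, `call.ended`), the `speak` reply, and the field names are hypothetical and do not describe Teler's or AgentKit's real interfaces.

```python
import json
from typing import Optional

sessions: dict[str, dict] = {}  # in-memory stand-in for the context store


def handle_event(raw: str) -> Optional[dict]:
    """Process one hypothetical transport event and return a reply message, if any."""
    event = json.loads(raw)
    sid = event["session_id"]
    if event["type"] == "call.started":
        sessions[sid] = {"transcript": []}
        return {"session_id": sid, "type": "speak", "text": "Hi, how can I help?"}
    if event["type"] == "transcript.final":
        sessions[sid]["transcript"].append(event["text"])
        # A real agent would run reasoning and tool calls here.
        return {"session_id": sid, "type": "speak", "text": f"You said: {event['text']}"}
    if event["type"] == "call.ended":
        # Drop per-call state once the call is over.
        sessions.pop(sid, None)
    return None
```

Keeping the handler a pure function of the incoming event plus session state makes it straightforward to replay recorded calls in tests.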

How Does Teler Handle Latency and Concurrency at Scale?

In live calls, even small audio delays feel unnatural. FreJun Teler’s infrastructure is optimized to meet enterprise-grade latency benchmarks.

Technical optimizations under the hood

- Edge media nodes: Deployed regionally to minimize physical distance from the caller.

- Packet prioritization: Adaptive jitter buffering ensures smooth playback under network stress.

- Concurrent streams: Each call session can manage multiple sub-streams (STT, TTS, background noise) in parallel.

- Dynamic bitrate adjustment: Automatically optimizes audio quality for bandwidth conditions.

- Async orchestration: Each agent runs in an event-driven mode with non-blocking I/O.

Performance snapshot

| Metric | Typical Benchmark |
| --- | --- |
| Round-trip audio latency | 200–300 ms |
| STT to AgentKit turnaround | < 250 ms |
| Average concurrent calls per node | 1,000+ |
| Audio packet loss tolerance | < 0.5% |
| Workflow recovery rate | 99.98% uptime |

Because of this infrastructure, AI agents built on Teler + AgentKit can sustain thousands of concurrent voice sessions while keeping responses fluid and contextual.
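For capacity planning, the per-node figure above translates into a quick back-of-the-envelope calculation. The 30% headroom and the two-node minimum in this sketch are assumptions chosen for illustration, not Teler recommendations.

```python
def nodes_needed(peak_calls: int, calls_per_node: int = 1000,
                 headroom_pct: int = 30, min_nodes: int = 2) -> int:
    """Media nodes required for a peak load, keeping spare capacity on each node."""
    usable = calls_per_node * (100 - headroom_pct) // 100
    # Integer ceiling division avoids floating-point rounding surprises.
    required = -(-peak_calls // usable)
    return max(min_nodes, required)


if __name__ == "__main__":
    print(nodes_needed(5000))
```

At 1,000 calls per node with 30% held in reserve, each node carries 700 calls, so a peak of 5,000 concurrent calls needs 8 nodes.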

How to Architect Voice Agent Deployment with Teler + AgentKit

Designing the deployment architecture properly ensures smooth scaling and monitoring. Below is a simplified blueprint of how the pieces fit together.

System architecture overview

User ↔ Teler Realtime Layer ↔ STT ↔ AgentKit (LLM + Tools) ↔ TTS ↔ Teler ↔ User

↓

Context Store (DB)

↓

Observability Stack

Deployment tiers

- Frontend media edge – handles WebRTC and SIP connections.

- Processing tier – connects to STT and TTS providers.

- Agent orchestration tier – hosts AgentKit workflows, memory, and APIs.

- Monitoring tier – tracks logs, metrics, and traces.

- Storage tier – stores conversation summaries, transcripts, and analytics.

This tiered structure isolates workloads and ensures that media-heavy traffic never interferes with reasoning latency.

How Does the Combined Stack Enable Workflow Automation?

When both systems run in sync, you gain an end-to-end automation framework for real-time interactions.

Workflow automation examples

- Customer callbacks: AgentKit handles intent logic; Teler manages redial and connection flow.

- Lead qualification: AgentKit validates CRM data; Teler delivers voice prompts dynamically.

- Interview scheduling: LLM collects timeslots; Teler executes the voice conversation automatically.

- Post-call analytics: Session data from Teler feeds into RAG pipelines for summary generation.

Automation flow

| Phase | Handled by | Description |
| --- | --- | --- |
| Input capture | Teler | Streams and normalizes audio |
| Intent reasoning | AgentKit | Interprets transcript, selects tools |
| Action execution | External tools | Updates CRM, calendar, etc. |
| Feedback loop | AgentKit + Teler | Confirms to user via voice |

This orchestration makes it possible to turn any enterprise process into a live, autonomous, voice-based workflow – measurable, repeatable, and secure.

How to Monitor and Optimize Live Voice Agents

Observability is crucial in production-grade systems. Both AgentKit and Teler expose telemetry hooks that can be connected to monitoring tools like Prometheus, Grafana, or Datadog.

Monitoring essentials

- Latency metrics: Measure per-stage timings (STT, reasoning, TTS).

- Conversation quality: Track speech overlap and interruption ratio.

- Error distribution: Log disconnections, failed API calls, and retries.

- Usage analytics: Aggregate duration, cost, and completion rates.

- Trace sampling: Capture 1% of sessions for manual review.

These metrics help product teams identify slow stages, fine-tune buffering, and optimize cost versus performance trade-offs.
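Two of the monitoring items above lend themselves to a short sketch: tail-latency measurement and trace sampling. The function names are hypothetical. Hash-based sampling is one possible approach, chosen here because it is deterministic per session.

```python
import hashlib
import statistics


def p95(samples_ms: list[float]) -> float:
    """95th-percentile latency; tail latency matters more than the mean in voice."""
    return statistics.quantiles(samples_ms, n=100)[94]


def should_sample(session_id: str, percent: int = 1) -> bool:
    """Deterministically pick roughly `percent`% of sessions for manual review.

    Hashing the session ID means every service makes the same decision for a
    given call, so sampled traces are complete across STT, agent, and TTS.
    """
    digest = hashlib.sha256(session_id.encode()).digest()
    return int.from_bytes(digest[:4], "big") % 100 < percent
```

Random per-service sampling would leave most sampled traces with missing stages, which is the main reason to derive the decision from the session ID instead.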

How to Future-Proof Your Voice AI Infrastructure

Voice AI is evolving quickly, and new models or codecs appear every few months. By keeping your stack modular (AgentKit for logic, Teler for voice transport), you ensure adaptability without major rewrites.

Future-ready strategies

- Adopt pluggable STT/TTS architectures.

- Use API gateways for version control and feature rollout.

- Build observability-first pipelines for continuous optimization.

- Keep RAG and memory components externalized for portability.

- Integrate real-time feedback loops to self-tune responses.

This modularity transforms your implementation into a sustainable, long-term asset rather than a short-lived prototype.
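A pluggable STT/TTS architecture mostly comes down to coding against interfaces. The sketch below uses `typing.Protocol`; the interface names and the toy `EchoSTT` and `BytesTTS` providers are hypothetical and exist only to show the shape of the design.

```python
from typing import Protocol


class SpeechToText(Protocol):
    def transcribe(self, audio: bytes) -> str: ...


class TextToSpeech(Protocol):
    def synthesize(self, text: str) -> bytes: ...


class EchoSTT:
    """Toy provider: treats the audio bytes as UTF-8 text."""
    def transcribe(self, audio: bytes) -> str:
        return audio.decode("utf-8")


class BytesTTS:
    """Toy provider: 'synthesizes' speech by encoding the text."""
    def synthesize(self, text: str) -> bytes:
        return text.encode("utf-8")


class VoiceAgent:
    """Depends only on the interfaces, so providers can be swapped via config."""
    def __init__(self, stt: SpeechToText, tts: TextToSpeech):
        self.stt, self.tts = stt, tts

    def turn(self, audio: bytes) -> bytes:
        heard = self.stt.transcribe(audio)
        return self.tts.synthesize(f"You said: {heard}")
```

Swapping vendors then means writing one adapter class per provider, while `VoiceAgent` and the workflow logic stay unchanged.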

Final Thoughts

By merging OpenAI AgentKit’s structured workflow orchestration with FreJun Teler’s realtime layer, enterprises can transform AI prototypes into production-grade voice agents. This integration bridges reasoning and real-time communication, enabling seamless, low-latency conversations that scale reliably across customer engagement and automation use cases. Together, AgentKit and Teler offer a unified path to build, monitor, and deploy enterprise-grade voice AI infrastructure with measurable performance and control.

If you’re building the next generation of intelligent voice systems, now is the time to experience it firsthand.

Schedule a demo with FreJun Teler to see how our real-time layer can bring your AgentKit workflows to life – with true production reliability.

FAQs

- What is AgentKit used for?

AgentKit helps developers build structured, multi-step AI workflows with integrated state management, tool execution, and orchestration.

- Can I use Teler with my existing LLM setup?

Yes. Teler is model-agnostic and connects with any LLM, TTS, or STT engine for real-time interaction.

- How does Teler maintain low latency in calls?

Teler uses edge nodes, adaptive jitter buffering, and optimized audio codecs to keep latency under 300 milliseconds.

- Do I need to use OpenAI models to integrate AgentKit?

No. AgentKit supports any reasoning or RAG system that follows standard API communication and tool-calling schemas.

- How scalable is Teler’s realtime layer for enterprise workloads?

Teler supports thousands of concurrent sessions with distributed media nodes, ensuring global reliability and consistency.

- Can I deploy Teler on my private infrastructure?

Yes. Teler supports hybrid or on-premise deployments for enterprises requiring strict data residency and compliance.

- What happens if the STT or TTS API fails during a live call?

Teler’s failover logic retries automatically and replays the last session context seamlessly.

- How secure is communication through Teler’s APIs?

All communication is encrypted in transit with TLS, protecting audio, transcripts, and metadata.

- Does AgentKit help in monitoring workflow performance?

Yes. It exposes logs, metrics, and trace data for every workflow step, aiding debugging and optimization.

- How long does it take to integrate Teler and AgentKit?

Integration typically takes a few days using available SDKs, APIs, and developer documentation for both platforms.