For the past decade, the story of elastic SIP trunking has been one of profound but predictable evolution. It was the powerful force that liberated enterprises from the rigid, costly world of PRI lines, offering a future of scalability, flexibility, and significant cost savings. It was the digital bridge to the Public Switched Telephone Network (PSTN), a more efficient pipe for carrying human conversations.

But today, the traffic traversing that bridge is changing. The packets are no longer just carrying the voices of people; they are carrying the voices of artificial intelligence. This marks a fundamental paradigm shift.

The rise of sophisticated, LLM-powered voice agents is no longer a futuristic concept; it is a present-day reality. The global conversational AI market is exploding: it is projected to grow from around $17 billion in 2025 to over $50 billion by 2031, a clear signal that businesses are betting big on automated voice. This surge in AI-driven business calls means that the underlying network infrastructure must evolve.

The future of elastic SIP trunking is not just about being a cost-effective utility; it is about becoming an intelligent, low-latency, and highly programmable conversational infrastructure for the next generation of voice experiences.


What Was the Original Promise of Elastic SIP Trunking?

To understand where we are going, we must first appreciate where we have been. Before elastic SIP trunking, enterprise telephony was defined by physical hardware.

In North America, Primary Rate Interface (PRI) lines were the standard, each delivering 23 voice channels over a physical T1 circuit (European E1 PRIs carry 30). This model was incredibly rigid. If you needed a 24th channel, you had to purchase an entire second PRI line with 23 more channels, whether you needed them or not.
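The arithmetic behind that rigidity is easy to sketch. The snippet below assumes 23 bearer channels per North American (T1) PRI and compares the whole circuits you would have to buy against the call paths an elastic trunk would provision; the function names are illustrative only.

```python
import math

# Assumption: a North American (T1) PRI carries 23 bearer (B) channels.
CHANNELS_PER_PRI = 23


def pri_lines_needed(concurrent_calls: int) -> int:
    """Whole PRI circuits required to carry a given number of simultaneous calls."""
    return math.ceil(concurrent_calls / CHANNELS_PER_PRI)


def idle_channels(concurrent_calls: int) -> int:
    """Channels paid for but unused when capacity only comes in 23-channel blocks."""
    return pri_lines_needed(concurrent_calls) * CHANNELS_PER_PRI - concurrent_calls


for calls in (23, 24, 50):
    print(
        f"{calls} calls -> {pri_lines_needed(calls)} PRI(s), "
        f"{idle_channels(calls)} idle channels; elastic trunk -> {calls} call paths"
    )
```

With 24 concurrent calls, the PRI model buys two circuits and leaves 22 channels idle, while an elastic trunk simply provisions 24 call paths.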

Elastic SIP trunking shattered this model by leveraging the power of the internet. It replaced physical wires with virtual connections, offering a set of transformative benefits that drove its widespread adoption:

- Cost Savings: It eliminated the need for expensive hardware and the associated maintenance contracts, drastically reducing telecommunication expenses.

- Unmatched Scalability: The “elastic” nature meant businesses could scale their call capacity up or down in an instant, paying only for the concurrent call paths they actually used.

- Geographic Flexibility: It decoupled phone numbers from physical locations. A business in New York could have a local number in London, all running through the same virtual trunk.

For years, these benefits were more than enough. But the arrival of voice AI has introduced a new set of requirements that the first generation of SIP trunking was never designed to handle.


Why Do LLMs Place Unprecedented Demands on Voice Infrastructure?

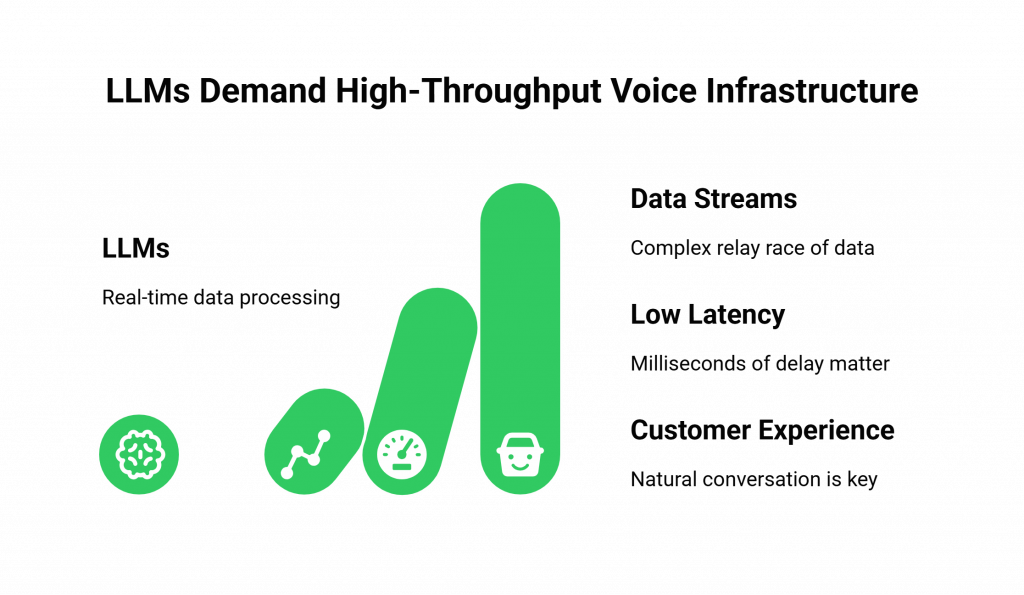

In the traditional model, a phone call was a relatively simple, low-bandwidth event. An AI-powered conversation is a fundamentally different kind of workload. It is not just a voice call; it is a high-throughput, real-time data processing event.

The new definition of capacity is not just about how many calls you can handle, but how well you can handle the data within those calls.

From Simple Call Paths to High-Throughput Data Streams

A human conversation is relatively straightforward. An AI conversation, however, is a complex relay race of data. The raw audio from the call (the RTP stream) must be captured instantly and sent to a Speech-to-Text (STT) engine.

The system sends the resulting text to a Large Language Model (LLM) for processing, sends the LLM’s text response to a Text-to-Speech (TTS) engine for conversion into audio, and streams the audio back to the caller.
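A minimal sketch of that relay for a single conversational turn follows. The three stage functions are hypothetical placeholders, not the API of any particular STT, LLM, or TTS product; they only show how the output of each stage becomes the input of the next.

```python
from dataclasses import dataclass


# Hypothetical stand-ins for real STT, LLM and TTS services. A production
# system would call external engines here, usually over streaming APIs.
def speech_to_text(audio: bytes) -> str:
    return "where is my package"  # placeholder transcript


def llm_reply(transcript: str) -> str:
    return f"Let me check on that for you. You said: {transcript}"


def text_to_speech(text: str) -> bytes:
    return text.encode("utf-8")  # placeholder: real TTS returns audio samples


@dataclass
class Turn:
    transcript: str
    reply_text: str
    reply_audio: bytes


def handle_turn(caller_audio: bytes) -> Turn:
    """One conversational turn: caller audio in, synthesized reply audio out."""
    transcript = speech_to_text(caller_audio)  # 1. captured audio -> text
    reply_text = llm_reply(transcript)         # 2. text -> LLM response
    reply_audio = text_to_speech(reply_text)   # 3. response text -> audio
    return Turn(transcript, reply_text, reply_audio)  # 4. audio is streamed back


turn = handle_turn(b"\x00" * 160)
print(turn.reply_text)
```

Production systems usually stream partial results between stages (for example, starting TTS on the first sentence of the LLM's reply) rather than waiting for each stage to finish, because every wait adds to the delay the caller hears.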

Each of these steps is a data transaction, and together they must complete in a fraction of a second. A modern elastic SIP trunking provider must be architected to facilitate this high-speed data flow, not just to terminate a call.

The Non-Negotiable Requirement for Ultra-Low Latency

Humans tolerate small pauses in conversation with each other, but a voice AI pipeline has little slack to spare. Every millisecond of latency in the AI workflow, whether from the network, the STT, the LLM, or the TTS, adds up.

Once this total latency crosses a certain threshold (typically around 500-800 milliseconds), the conversation ceases to feel natural. The user starts talking over the AI, the interaction breaks down, and the customer experience is ruined.
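The cumulative effect can be checked with a simple latency budget. The per-stage figures below are illustrative assumptions, not benchmarks of any provider or model; the two thresholds come from the 500-800 millisecond range above.

```python
# Illustrative per-stage latencies in milliseconds (assumptions, not measurements).
stage_latency_ms = {
    "network (caller -> PoP -> AI and back)": 80,
    "speech-to-text (final transcript)": 150,
    "LLM (time to first token)": 250,
    "text-to-speech (time to first audio)": 120,
}

# Bounds of the 500-800 ms range where conversations stop feeling natural.
LOWER_MS, UPPER_MS = 500, 800


def classify(total_ms: int) -> str:
    """Rough verdict on how a given end-to-end delay is likely to feel."""
    if total_ms <= LOWER_MS:
        return "natural"
    if total_ms <= UPPER_MS:
        return "borderline"
    return "too slow"


total = sum(stage_latency_ms.values())
status = classify(total)
print(f"total: {total} ms -> {status}")

# Largest contributors first: these are the stages worth optimizing.
for stage, ms in sorted(stage_latency_ms.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{stage:<40} {ms:>4} ms ({ms / total:.0%})")
```

With these assumed numbers the total is 600 ms, already past the lower bound, so shaving even 100 ms off the largest stage moves the call back toward a natural feel.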

Customer patience is short in any case: a survey by Arise found that nearly two-thirds of customers are only willing to wait on hold for two minutes or less before hanging up. An AI that feels slow is even more frustrating than hold music.

For an elastic SIP trunking provider, this means that simply connecting a call is no longer good enough. The quality of that connection, measured in milliseconds of delay, is now the most critical metric for real-time voice LLMs.


How Must Elastic SIP Trunking Evolve to Support LLM Integration?

For an elastic SIP trunking provider to effectively serve the AI-powered world, it must evolve from a simple connectivity provider into a true voice infrastructure platform. That requires a new set of architectural principles and capabilities designed for developers and machines, not just for traditional phone systems.

Beyond Connectivity: The Need for Programmable Media Access

The most critical need is the ability for developers to get their hands on the raw, real-time audio stream (RTP) of the call. Traditional providers often terminate the call and pass it to a PBX, hiding the underlying media.

An AI-first provider must expose this stream via an API, allowing it to be forked and sent directly to an STT engine in real time. This is the heart of LLM integration with telephony.
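To make programmable media access concrete, here is a sketch that parses an RTP packet according to the fixed-header layout in RFC 3550 and forks the decoded frame to several consumers. It assumes the application already receives raw RTP packets as bytes; how a given provider delivers them (UDP socket, WebSocket stream, or otherwise) is not specified here, and `fork` and the consumer callbacks are hypothetical names.

```python
import struct
from typing import Callable, List

# Fixed 12-byte RTP header in network byte order (RFC 3550, section 5.1).
RTP_FIXED_HEADER = struct.Struct("!BBHII")


def parse_rtp(packet: bytes) -> dict:
    """Split an RTP packet into its main header fields and the audio payload."""
    if len(packet) < RTP_FIXED_HEADER.size:
        raise ValueError("packet shorter than the 12-byte RTP header")
    b0, b1, seq, timestamp, ssrc = RTP_FIXED_HEADER.unpack_from(packet)
    version = b0 >> 6
    if version != 2:
        raise ValueError(f"unsupported RTP version {version}")
    padding = bool(b0 & 0x20)
    extension = bool(b0 & 0x10)
    csrc_count = b0 & 0x0F
    offset = RTP_FIXED_HEADER.size + 4 * csrc_count  # skip CSRC identifiers
    if extension:
        # Header extension: 16-bit profile id, then length in 32-bit words.
        _profile, ext_words = struct.unpack_from("!HH", packet, offset)
        offset += 4 + 4 * ext_words
    end = len(packet)
    if padding:
        end -= packet[-1]  # the last octet holds the padding byte count
    if offset > end:
        raise ValueError("truncated RTP packet")
    return {
        "marker": bool(b1 & 0x80),
        "payload_type": b1 & 0x7F,
        "sequence": seq,
        "timestamp": timestamp,
        "ssrc": ssrc,
        "payload": packet[offset:end],
    }


def fork(packet: bytes, consumers: List[Callable[[dict], None]]) -> None:
    """Parse once, then hand the same frame to every consumer (e.g. recorder, STT)."""
    frame = parse_rtp(packet)
    for consume in consumers:
        consume(frame)


# Usage: a sample PCMU packet (payload type 0) with V=2 and no padding/extension.
recorded, to_stt = [], []
sample = RTP_FIXED_HEADER.pack(0x80, 0, 1234, 160, 0xDEADBEEF) + b"\xff" * 160
fork(sample, [recorded.append, to_stt.append])
print(to_stt[0]["sequence"], len(to_stt[0]["payload"]))  # 1234 160
```

Parsing once and sharing the decoded frame keeps the fork cheap, which matters when the same stream feeds both a compliance recorder and a latency-sensitive STT engine.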

From Centralized to Edge-Native Architecture

To minimize network latency, the provider must have a global network of Points of Presence (PoPs). When a call comes in, the system should handle it at the edge, in a data center physically close to the user, instead of routing it across the country to a centralized server. This architectural choice is one of the most effective ways to combat latency.
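A rough way to see why proximity matters: the sketch below picks the nearest PoP by great-circle (haversine) distance and estimates the best-case round-trip propagation delay, assuming light travels about 200 km per millisecond in optical fiber. The PoP names and coordinates are hypothetical, and real networks typically steer calls with DNS, anycast, or measured latency rather than pure geography, since fiber routes are longer than straight lines.

```python
import math

# Hypothetical PoP locations: name -> (latitude, longitude) in degrees.
POPS = {
    "us-east": (40.71, -74.01),   # New York
    "eu-west": (51.51, -0.13),    # London
    "ap-south": (19.08, 72.88),   # Mumbai
}

EARTH_RADIUS_KM = 6371.0
FIBER_KM_PER_MS = 200.0  # light in optical fiber covers roughly 200 km per ms


def haversine_km(a, b):
    """Great-circle distance between two (lat, lon) points in kilometres."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_pop(caller):
    """Return (pop_name, distance_km, best-case round-trip propagation delay in ms)."""
    name, coords = min(POPS.items(), key=lambda kv: haversine_km(caller, kv[1]))
    km = haversine_km(caller, coords)
    return name, km, 2 * km / FIBER_KM_PER_MS


print(nearest_pop((48.86, 2.35)))  # a caller in Paris
```

For a caller in Paris, the London PoP is a few hundred kilometres away (a few milliseconds round trip), whereas hauling the same call to New York would add tens of milliseconds before any AI processing even begins.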

From GUI to API: The Shift to a Developer-First Mindset

The modern trunk is not something you configure once in a web portal. It must be a dynamic entity that can be controlled by code. Developers need a powerful and flexible API to provision numbers, manage call routing, and control the in-call media flow in real time.
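As an illustration of a trunk that is controlled by code, the sketch below keeps call-routing rules in a table that can be changed at runtime and resolves each dialed number by longest-prefix match, a common technique for number-based routing. The `RoutingTable` class and the destination names are hypothetical and do not represent FreJun's actual API.

```python
from typing import Dict, Optional


class RoutingTable:
    """Hypothetical in-memory call-routing table keyed by E.164 number prefixes.

    Routes can be added or removed while calls are flowing, which is the kind
    of runtime control an API-driven trunk is meant to expose.
    """

    def __init__(self) -> None:
        self._routes: Dict[str, str] = {}

    def set_route(self, prefix: str, destination: str) -> None:
        self._routes[prefix] = destination

    def remove_route(self, prefix: str) -> None:
        self._routes.pop(prefix, None)

    def resolve(self, dialed: str) -> Optional[str]:
        """Longest-prefix match: the most specific matching prefix wins."""
        for length in range(len(dialed), 0, -1):
            destination = self._routes.get(dialed[:length])
            if destination is not None:
                return destination
        return None


table = RoutingTable()
table.set_route("+1", "us-general-agent")
table.set_route("+1212", "nyc-support-agent")
print(table.resolve("+12125550100"))  # nyc-support-agent
table.remove_route("+1212")
print(table.resolve("+12125550100"))  # us-general-agent
```

Because routing is just data behind a function call, an application can reroute traffic (say, to a different AI agent during an outage) without anyone logging into a portal.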

This table summarizes the fundamental shift in what is expected from an elastic SIP trunking provider.

| Feature | Traditional Elastic SIP Trunking Focus | AI-Optimized Elastic SIP Trunking Focus |
| --- | --- | --- |
| Primary Value | Cost savings and channel consolidation. | Enabling low-latency, real-time AI workflows. |
| Capacity Metric | Concurrent call paths for human agents. | High-throughput, low-latency data streams for AI models. |
| Media Access | Terminates audio to a PBX or softswitch. | Provides direct, programmable access to the raw RTP stream. |
| Infrastructure | Centralized, focused on reliability. | Globally distributed (edge-based), focused on low latency. |
| Control Interface | Web-based portal for configuration. | Developer-first, API-driven for real-time orchestration. |
| Target User | IT Administrator. | Software Developer. |

Ready to build on an infrastructure designed for the demands of modern AI? Sign up for FreJun AI.


What Does This New Conversational Infrastructure Look Like in Practice?

This new synergy between elastic SIP trunking and LLMs is enabling a new generation of AI-driven business calls. Consider an AI-powered customer service agent for a large e-commerce company.

The workflow is a perfect example of this new conversational infrastructure:

- The Call Arrives: A customer calls the support number. The call is routed to the nearest edge PoP of a provider like FreJun AI. Our Teler engine answers the call.

- Media is Forked: Our programmable API instantly forks the raw audio stream, sending one copy to the company’s recording servers for compliance and streaming the other in real time to their AI “brain” (AgentKit).

- The AI Brain Engages: AgentKit's STT engine transcribes the audio, the LLM recognizes that the customer wants to track a package and queries the order management system through an internal API, and the TTS engine generates a natural-sounding response.

- The Response is Delivered: This audio response is streamed back to our Teler engine, which plays it back to the customer.
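The fork in this workflow can be modeled with `asyncio` queues: one producer fans every audio frame out to a recording consumer and an AI consumer that run concurrently. This is a simplified sketch under stated assumptions; the frames are dummy 160-byte payloads (20 ms of 8 kHz G.711 audio), and the AI consumer is a placeholder rather than a real STT/LLM/TTS pipeline.

```python
import asyncio


async def media_source(frames, subscribers):
    """Fan each incoming audio frame out to every subscriber queue, then signal end."""
    for frame in frames:
        for queue in subscribers:
            await queue.put(frame)
    for queue in subscribers:
        await queue.put(None)  # end-of-stream marker


async def recorder(queue, archive):
    """Compliance copy: append every frame to storage (a list stands in here)."""
    while (frame := await queue.get()) is not None:
        archive.append(frame)


async def ai_agent(queue, replies):
    """Real-time copy: placeholder for streaming audio into STT, LLM and TTS."""
    while (frame := await queue.get()) is not None:
        replies.append(f"processed {len(frame)} bytes")


async def main():
    frames = [b"\xff" * 160 for _ in range(3)]  # three 20 ms PCMU frames
    rec_q, ai_q = asyncio.Queue(), asyncio.Queue()
    archive, replies = [], []
    # All three coroutines run concurrently; neither consumer blocks the other.
    await asyncio.gather(
        media_source(frames, [rec_q, ai_q]),
        recorder(rec_q, archive),
        ai_agent(ai_q, replies),
    )
    return archive, replies


archive, replies = asyncio.run(main())
print(len(archive), replies[0])  # 3 processed 160 bytes
```

Giving each consumer its own queue means a slow recorder cannot delay the AI path, which is the property that keeps the round trip short.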

This entire round trip happens in less than a second. The elastic SIP trunking layer is not just a dumb pipe; it is an active, programmable participant in the AI workflow, managed and orchestrated by code. This is our core philosophy: “We handle the complex voice infrastructure so you can focus on building your AI.”

Conclusion

The role of elastic SIP trunking is in the midst of a profound transformation. What began as a tool for cost savings and operational convenience is now evolving into the mission-critical foundation for the AI-powered voice revolution.

The future does not belong to the providers who can simply offer the cheapest call path, but to the infrastructure platforms that can offer the fastest, most reliable, and most programmable data path for artificial intelligence.

For the telecom and enterprise communication professionals tasked with building the future, understanding and embracing this shift is not just an option; it is the key to unlocking the true potential of real-time voice LLMs and the next generation of business communication.

Want to discuss how our underlying elastic SIP trunking infrastructure can support your enterprise AI strategy? Schedule a demo with our team at FreJun Teler.


Frequently Asked Questions (FAQs)

Why is low latency so important for voice AI?

A voice AI conversation involves multiple steps (STT, LLM, TTS). Any delay in this process results in a lag between the user speaking and the AI responding. If this lag is too long, the conversation feels unnatural and frustrating, leading to a poor user experience.

What is programmable media access, and why does an AI need it?

Programmable media access refers to the ability to get the raw audio stream (RTP) of a call in real time via an API. An AI needs this so the audio can be sent to a Speech-to-Text engine for transcription, which is the first step in any AI conversation.

How does an edge-native architecture reduce latency?

By running servers (Points of Presence) around the world, a provider can handle a call at a location physically closer to the end user. This shortens the distance the data has to travel, which is one of the most effective ways to lower latency.

How is a conversational infrastructure different from a traditional phone system?

A traditional phone system is designed simply to connect two humans. A conversational infrastructure is a modern, API-first platform designed to connect humans to machines (or machines to machines). It is built for the high-throughput, low-latency data demands of AI.

Can I use my own AI models?

Yes. A key feature of a modern voice infrastructure platform is being model-agnostic. FreJun AI allows you to bring your own AI models (STT, LLM, TTS), giving you complete control over your agent's intelligence.

Can AI voice calls over a SIP trunk be secured?

Yes. Security is a top priority. An enterprise-grade platform will use Transport Layer Security (TLS) to encrypt the signaling (the call setup information) and the Secure Real-time Transport Protocol (SRTP) to encrypt the media (the voice conversation itself).
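For the signaling side, a client-side TLS setup in Python might look like the sketch below, which verifies the server certificate and refuses protocol versions older than TLS 1.2. The host name you pass in is an assumption, port 5061 is the conventional default for SIP over TLS, and SRTP for the media is out of scope here because it needs a dedicated media library.

```python
import socket
import ssl


def make_sip_tls_context() -> ssl.SSLContext:
    """Client-side TLS context for SIP signaling that verifies the server certificate."""
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse legacy SSL/TLS versions
    return ctx


def open_sip_tls(host: str, port: int = 5061) -> ssl.SSLSocket:
    """Open a TLS connection to a SIP server (5061 is the default SIP-over-TLS port)."""
    raw = socket.create_connection((host, port), timeout=5)
    try:
        # server_hostname enables SNI and hostname checking against the certificate.
        return make_sip_tls_context().wrap_socket(raw, server_hostname=host)
    except Exception:
        raw.close()  # avoid leaking the TCP socket if the handshake fails
        raise
```

Keeping certificate and hostname verification on (the defaults from `create_default_context`) is what actually protects the call setup from interception; encryption without verification would not.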

Do I need to be a SIP expert to build on FreJun AI?

No. We provide a high-level, developer-friendly set of APIs that handle the complex, low-level details of the SIP and RTP protocols for you. As a developer, you can think in terms of simple commands like "make a call" or "play audio" without ever needing to become an expert in telecommunications signaling.
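For a sense of what such a command hides, the sketch below assembles the kind of SIP INVITE request (RFC 3261) that a hypothetical "make a call" command might produce under the hood. It omits the SDP body (a delayed offer) and authentication, uses placeholder addresses, and is not a description of how FreJun's APIs generate their messages.

```python
import uuid


def build_invite(to_uri: str, from_uri: str, local_host: str) -> str:
    """Assemble a minimal SIP INVITE (RFC 3261) with no SDP body."""
    branch = "z9hG4bK" + uuid.uuid4().hex[:16]  # RFC 3261 magic-cookie prefix
    tag = uuid.uuid4().hex[:8]                  # identifies the caller's side of the dialog
    call_id = f"{uuid.uuid4().hex}@{local_host}"
    lines = [
        f"INVITE {to_uri} SIP/2.0",
        f"Via: SIP/2.0/TLS {local_host}:5061;branch={branch}",
        "Max-Forwards: 70",
        f"To: <{to_uri}>",
        f"From: <{from_uri}>;tag={tag}",
        f"Call-ID: {call_id}",
        "CSeq: 1 INVITE",
        f"Contact: <sip:{local_host}:5061;transport=tls>",
        "Content-Length: 0",
    ]
    # SIP uses CRLF line endings and a blank line between headers and body.
    return "\r\n".join(lines) + "\r\n\r\n"


print(build_invite("sip:alice@example.com", "sip:agent@example.org", "10.0.0.5"))
```

Even this stripped-down request involves transaction identifiers, dialog tags, and transport details, and a real call adds SDP negotiation, authentication challenges, and retransmission timers, which is the complexity a higher-level API absorbs.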

What does the future hold for elastic SIP trunking and AI?

The future will likely see even tighter integration, with more AI-powered intelligence moving directly into the network layer itself. This could include real-time sentiment analysis, intent detection, and AI-driven fraud prevention happening at the edge, before the call even reaches the main application.