Voice AI is evolving from scripted bots to truly interactive systems. Yet building production-grade voice agents still requires two critical layers: intelligence and communication. OpenAI’s AgentKit brings structured reasoning and automation control, while FreJun Teler delivers real-time voice streaming with minimal latency. Together, they form a developer-ready framework for deploying intelligent, human-like voice agents that respond instantly, understand context, and scale effortlessly.

In this blog, we’ll explore the key benefits of integrating AgentKit with Teler, and how this synergy helps founders, product managers, and engineering leads build reliable voice automation systems faster.

What’s Driving the Next Wave of Voice AI Innovation?

The way people interact with technology is changing fast. Text chatbots once dominated business automation, but real-time voice interfaces are now setting a new standard. Customers expect natural, fluid conversations, not menus or button-based IVRs. Usage data from GWI show that 32% of consumers globally used a voice assistant in the past week, and 21% used one to actively search for information, which points to a shift toward voice-first interaction.

Yet building these advanced voice systems isn’t simple.

Engineering teams must combine:

- A speech recognition engine (STT) to capture human input,

- A language model that understands and decides,

- A text-to-speech layer (TTS) to respond naturally, and

- A telephony or VoIP layer to handle calls at scale.

Each of these parts has its own APIs, protocols, and scaling issues. Integrating them can take months, and the resulting system often breaks when latency or network conditions fluctuate.

That’s the gap where AgentKit, OpenAI’s new agent orchestration framework, comes in. It simplifies how developers build intelligent agents. Paired with FreJun Teler, a real-time voice infrastructure layer, it forms an end-to-end stack that transforms how teams deploy production-grade voice agents.

What Exactly Is OpenAI’s AgentKit and Why Does It Matter?

OpenAI’s AgentKit is more than a toolkit – it’s a structured framework for building, testing, and improving intelligent AI agents. Instead of manually coding agent behaviors, developers can now use prebuilt components to manage every layer of the workflow.

Core Capabilities of AgentKit

| Functionality | Description | Benefit |
| --- | --- | --- |
| Agent Builder | Visual and programmable interface to design agents with defined tools, memory, and goals. | Simplifies orchestration and reduces coding effort. |
| ChatKit | Embeddable chat interface for agent-driven user experiences. | Enables quick integration into existing products. |
| Guardrails | Rule-based safety layer that filters or modifies outputs before delivery. | Ensures compliance and brand safety. |
| Connector Registry | Prebuilt integrations for external APIs and data stores. | Connects agents to business systems instantly. |
| Evals & RFT | Evaluation and reinforcement fine-tuning modules. | Monitors performance and continuously improves response quality. |

Each component works together to automate decision-making while maintaining control and reliability.

This structure is why AgentKit is considered a leap from prompt engineering to full AI workflow automation.

Why AgentKit Is Different from Previous AI Frameworks

Unlike traditional chatbots or scripted assistants, AgentKit agents:

- Can reason and act using structured tools.

- Have contextual memory and can recall past sessions.

- Use Guardrails to prevent unsafe or irrelevant actions.

- Can be evaluated and tuned continuously using real-world metrics.

This approach moves AI closer to “production-ready autonomy.” Instead of writing logic for every possible user input, teams define the framework and let the agent handle dynamic decision-making.

For product teams, that means:

- Faster prototyping cycles.

- Reduced dependency on backend logic for every task.

- Better governance over AI actions.

Why Voice Agents Need More Than Just a Chat Interface

Voice adds both power and complexity to AI-driven experiences.

A simple chat flow that feels seamless in text becomes highly demanding in audio because:

- Timing is critical: Humans notice even small pauses in voice conversations.

- Error tolerance is low: Misheard words or lag cause immediate user frustration.

- Parallel processing is needed: Voice input (STT) and voice output (TTS) must often run concurrently for natural interaction.

Traditional request/response APIs struggle here because they weren’t built for real-time communication. They handle discrete payloads well, but not continuous, low-latency streams.

That’s where a dedicated voice infrastructure – like Teler – becomes essential. But before we bring it in, it’s important to understand how AgentKit enables this integration cleanly.

How Does AgentKit Simplify AI Workflow Automation?

Building intelligent workflows has always required extensive backend engineering. Developers had to:

- Write orchestration logic to call APIs in sequence.

- Maintain memory and context manually.

- Implement safeguards for tool calls and sensitive data.

AgentKit abstracts most of this complexity through its workflow engine.

Developers can define:

- When an agent should call a tool (e.g., CRM lookup, calendar booking).

- How it should handle failures or unexpected responses.

- Which datasets to reference (via Retrieval-Augmented Generation or RAG).

Here’s a simplified example of how AgentKit structures an AI workflow:

User Input → STT → AgentKit Workflow → (Tool Calls / RAG) → LLM Decision → Guardrail → Response → TTS

In this model:

- The AgentKit Workflow acts as the conductor.

- The LLM performs reasoning.

- External tools handle tasks like data retrieval or booking actions.

- Guardrails monitor safety and compliance before the response is finalized.

This modularity lets teams plug in new models, tools, or connectors without rewriting large sections of code.
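To make the modularity point concrete, here is a minimal sketch of a pipeline whose stages are plain callables. It is not AgentKit code: `VoicePipeline` and the `fake_*` stages are hypothetical stand-ins, and a real deployment would replace each one with an actual STT, agent, guardrail, or TTS client.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class VoicePipeline:
    """One conversational turn: audio in, audio out, with swappable stages."""
    stt: Callable[[bytes], str]
    agent: Callable[[str], str]
    guardrail: Callable[[str], str]
    tts: Callable[[str], bytes]

    def handle_turn(self, audio: bytes) -> bytes:
        text = self.stt(audio)        # speech to text
        draft = self.agent(text)      # reasoning, tool calls, RAG
        safe = self.guardrail(draft)  # safety check before anything is spoken
        return self.tts(safe)         # text to speech


# Stand-in stages so the sketch runs without external services.
def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_agent(text: str) -> str:
    return f"You said: {text}"


def passthrough_guardrail(reply: str) -> str:
    return reply


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


pipeline = VoicePipeline(fake_stt, fake_agent, passthrough_guardrail, fake_tts)
reply_audio = pipeline.handle_turn(b"book a meeting")
print(reply_audio)

# Swapping one stage leaves the others untouched.
shouting = VoicePipeline(fake_stt, lambda t: t.upper(), passthrough_guardrail, fake_tts)
print(shouting.handle_turn(b"hi"))
```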

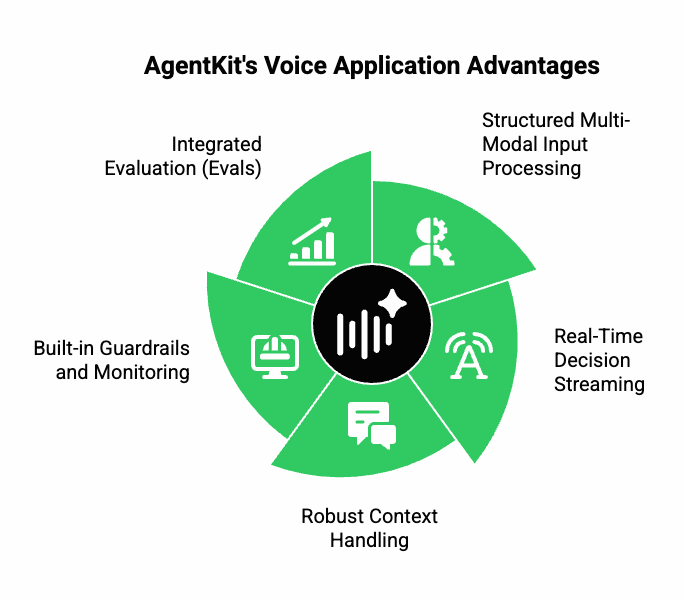

What Technical Advantages Does AgentKit Provide for Voice Applications?

Even though AgentKit was built as a general framework for agents, its structure fits perfectly into voice AI pipelines. Here’s how it helps:

1. Structured Multi-Modal Input Processing

AgentKit allows developers to manage multiple input types – including transcribed speech. That makes it easy to route STT outputs directly into an agent’s reasoning workflow.

2. Real-Time Decision Streaming

Instead of waiting for complete responses, AgentKit supports streamed token output from language models. When connected with a low-latency transport (like Teler), it enables incremental speech synthesis – the agent can “start talking” before finishing the thought.
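One common way to get this effect is to cut the token stream into sentence-sized chunks and hand each chunk to TTS as soon as it is complete. The sketch below assumes tokens arrive as plain strings; `sentence_chunks` is a hypothetical helper, not part of AgentKit or Teler.

```python
from typing import Iterable, Iterator

SENTENCE_END = (".", "!", "?")


def sentence_chunks(tokens: Iterable[str]) -> Iterator[str]:
    """Group streamed tokens into sentences so TTS can start before the reply is finished."""
    buffer = ""
    for token in tokens:
        buffer += token
        if buffer.rstrip().endswith(SENTENCE_END):
            yield buffer.strip()
            buffer = ""
    if buffer.strip():  # flush any trailing text that lacks end punctuation
        yield buffer.strip()


streamed = ["Sure", ",", " I", " can", " help", ".", " What", " time", " works", "?"]
chunks = list(sentence_chunks(streamed))
print(chunks)
```

Splitting on punctuation alone is a simplification: abbreviations and decimals such as "3.5" would need extra handling in a real system.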

3. Robust Context Handling

Agents can retain and use conversational context across sessions. For recurring customer interactions, that means responses can be more consistent and relevant.

4. Built-in Guardrails and Monitoring

Voice interfaces are public-facing by design. Guardrails prevent unsafe or irrelevant speech before it’s spoken. Combined with Evals, teams can quantify how often responses meet accuracy, tone, or compliance standards.
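As a simplified picture of a rule-based check that runs before text reaches TTS, consider the sketch below. The blocked topics, the card-number pattern, and the `apply_guardrail` function are all assumptions made for illustration; AgentKit's Guardrails are configured through its own interface, which is not shown here.

```python
import re

# A digit followed by 12 to 15 more digits, each optionally preceded by a space or hyphen.
CARD_PATTERN = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")
BLOCKED_TOPICS = ("medical diagnosis", "legal advice")
REFUSAL = "I'm not able to help with that, but I can connect you to a person."


def apply_guardrail(reply: str) -> str:
    """Refuse blocked topics and redact card-like numbers before the reply is spoken."""
    lowered = reply.lower()
    if any(topic in lowered for topic in BLOCKED_TOPICS):
        return REFUSAL
    return CARD_PATTERN.sub("[redacted]", reply)


print(apply_guardrail("Your card 4111 1111 1111 1111 is on file."))
print(apply_guardrail("Here is some legal advice for you."))
```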

5. Integrated Evaluation (Evals)

Instead of testing through user feedback alone, AgentKit records traces of each interaction for automated scoring. This makes iterative improvement measurable.
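The idea of scoring recorded traces can be sketched in a few lines. The `Trace` fields and the one-second latency threshold below are assumptions chosen for the example, not AgentKit's trace schema.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Trace:
    """One recorded agent turn (hypothetical fields)."""
    user_text: str
    agent_text: str
    latency_ms: int
    guardrail_triggered: bool


def score_traces(traces: List[Trace], max_latency_ms: int = 1000) -> Dict[str, float]:
    """Summarize how many turns met the latency target and how many tripped a guardrail."""
    total = len(traces)
    if total == 0:
        return {"total": 0, "on_time_rate": 0.0, "guardrail_rate": 0.0}
    on_time = sum(t.latency_ms <= max_latency_ms for t in traces)
    flagged = sum(t.guardrail_triggered for t in traces)
    return {
        "total": total,
        "on_time_rate": on_time / total,
        "guardrail_rate": flagged / total,
    }


traces = [
    Trace("hi", "Hello, how can I help?", 420, False),
    Trace("give me legal advice", "[refusal]", 1350, True),
    Trace("book a demo", "Sure, what day works?", 800, False),
    Trace("tomorrow", "Booked for tomorrow.", 600, False),
]
report = score_traces(traces)
print(report)
```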

Together, these features let teams move from static scripts to adaptive, data-driven voice agents that improve over time.


Why Combining AgentKit with a Real-Time Communication Layer Matters

Even the most capable agent framework can’t handle voice calls directly. It still needs:

- An audio input stream,

- A telephony bridge, and

- A low-latency output channel for synthesized speech.

If you tried to build all that yourself, you’d have to handle:

- Codec negotiation (e.g., Opus, G.711 PCM).

- Media streaming protocols (RTP, WebRTC).

- Call control (start, stop, mute, hold).

- Jitter buffering and network resiliency.

These aren’t small engineering challenges. Every millisecond matters.
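To give a sense of what jitter buffering involves, here is a deliberately small sketch that reorders packets by sequence number before playback. It is an illustration only: the `JitterBuffer` class is hypothetical, and production buffers are time-based, adapt their depth, and conceal lost packets.

```python
import heapq
from typing import List, Tuple


class JitterBuffer:
    """Hold a few packets so out-of-order arrivals can be released in sequence order."""

    def __init__(self, depth: int = 2) -> None:
        self.depth = depth
        self._heap: List[Tuple[int, bytes]] = []

    def push(self, seq: int, payload: bytes) -> List[bytes]:
        """Add a packet and return any packets that are now ready to play."""
        heapq.heappush(self._heap, (seq, payload))
        ready = []
        while len(self._heap) > self.depth:
            ready.append(heapq.heappop(self._heap)[1])  # lowest sequence number first
        return ready

    def flush(self) -> List[bytes]:
        """Release everything still buffered, in order (for example at end of call)."""
        return [heapq.heappop(self._heap)[1] for _ in range(len(self._heap))]


buf = JitterBuffer(depth=2)
played: List[bytes] = []
# Packets 2 and 3 arrive swapped, as do 5 and 6.
for seq in (1, 3, 2, 4, 6, 5):
    played.extend(buf.push(seq, f"p{seq}".encode()))
played.extend(buf.flush())
print(played)
```

The trade-off is visible in `depth`: a deeper buffer tolerates more reordering but adds delay to every packet.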

That’s why the voice infrastructure layer must be as reliable and optimized as the AI layer. Without it, your intelligent agent can’t sound intelligent – it’ll stutter, pause, or misalign responses.

AgentKit provides the logic and control, but not the transport.

To bridge that final mile, you need a purpose-built system that streams audio seamlessly and synchronizes with the agent’s workflow.

That’s exactly what FreJun Teler was designed for – and it’s where the two technologies complement each other perfectly.

Transitioning from Concept to Implementation

Before we dive into the voice integration in the next part, it’s important to visualize the architecture that makes this possible.

A modern AI voice system typically looks like this:

| Layer | Responsibility | Example Tech |
| --- | --- | --- |
| Telephony/Transport | Captures & streams voice data | SIP, WebRTC, or Teler |
| Speech-to-Text (STT) | Converts audio into text | Whisper, Google Speech |
| Agent Logic (AgentKit) | Handles reasoning, tool calls, memory | OpenAI AgentKit |
| Text-to-Speech (TTS) | Converts output to voice | ElevenLabs, Azure TTS |
| Analytics & Evaluation | Tracks quality & compliance | AgentKit Evals |

Each layer can operate independently, but seamless integration is what drives real value.

AgentKit sits at the center – managing logic, context, and orchestration.

The next challenge is ensuring that the voice layer keeps up with its real-time speed and precision.

That’s exactly where Teler enters the stack.

What Is FreJun Teler and Why Does It Matter for Voice AI?

FreJun Teler is a real-time communication layer designed for AI-driven calling and conversational automation.

Unlike generic telephony APIs that simply connect calls, Teler provides intelligent voice infrastructure – optimized for low latency, adaptive streaming, and seamless AI integration.

Think of it as the audio engine that lets your OpenAI AgentKit agents actually “speak and listen” in real time.

Core Capabilities of Teler

| Capability | Technical Focus | Result |
| --- | --- | --- |
| Low-Latency Audio Streaming | Real-time duplex streaming over WebRTC/SIP | Sub-second response time in live conversations |
| Dynamic Media Routing | Intelligent routing of inbound/outbound media | Stable voice quality even under network stress |
| Programmable APIs | REST + WebSocket control for session management | Simple orchestration with AI agents |
| Speech Layer Compatibility | Supports STT and TTS engine integration | Works with any preferred vendor stack |
| Event Hooks and Callbacks | Custom event triggers (start, pause, end) | Enables complex workflows and logging |
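Event hooks like the ones in the last row are typically consumed through a small dispatcher on the application side. The sketch below shows that general pattern; `CallEvents`, the payload fields, and the handler signatures are hypothetical and do not describe Teler's actual SDK.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

Handler = Callable[[Dict], None]


class CallEvents:
    """Register handlers per event name and dispatch payloads to them."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def on(self, event: str, handler: Handler) -> None:
        self._handlers[event].append(handler)

    def emit(self, event: str, payload: Dict) -> int:
        """Run every handler for the event and return how many were called."""
        handlers = self._handlers.get(event, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)


call_log: List[str] = []
events = CallEvents()
events.on("start", lambda p: call_log.append(f"call {p['call_id']} started"))
events.on("end", lambda p: call_log.append(f"call {p['call_id']} ended after {p['seconds']}s"))

events.emit("start", {"call_id": "c-1"})
events.emit("end", {"call_id": "c-1", "seconds": 42})
print(call_log)
```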

How Teler Is Different

Where most platforms stop at “connect a call,” Teler goes further – it handles the entire audio lifecycle with synchronization across AI components.

For developers, this means they can plug in any LLM, STT, or TTS system and achieve real-time bidirectional communication without maintaining media servers or jitter buffers manually.

How Does Teler Technically Integrate with OpenAI’s AgentKit?

At a high level, the integration looks like this:

Caller → Teler → STT Engine → AgentKit → (Tools / APIs / RAG) → TTS Engine → Teler → Caller

Here’s the breakdown:

Step-by-Step Flow

- Call Initiation:

  - Teler receives or initiates a call via SIP/WebRTC.

  - Audio is captured and streamed in real time.

- Speech-to-Text Conversion (STT):

  - Teler passes audio packets to your chosen STT engine (e.g., Whisper, Deepgram).

  - The transcribed text is streamed to the AgentKit workflow endpoint.

- Agent Reasoning via AgentKit:

  - AgentKit receives the text input, processes it through the LLM, and optionally calls external tools or APIs.

  - Context memory and Guardrails ensure controlled and goal-oriented responses.

- Text-to-Speech Conversion (TTS):

  - AgentKit’s response text is sent to a TTS engine (like ElevenLabs or Azure Speech).

  - The generated audio is streamed back through Teler.

- Playback to Caller:

  - Teler sends synthesized speech to the caller with minimal delay (typically under 500 ms).

This creates a fully looped real-time conversation – the user talks, the agent listens, reasons, and replies instantly.
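The loop above can be pictured as concurrent stages connected by queues, so that each stage starts working as soon as its input arrives. This is a sketch under heavy assumptions: the three stage functions are stand-ins that only transform strings, and none of them call Teler, an STT or TTS vendor, or AgentKit.

```python
import asyncio
from typing import List, Optional


async def stt_stage(audio_in: asyncio.Queue, text_out: asyncio.Queue) -> None:
    """Stand-in STT: treat each audio frame as UTF-8 text."""
    while (frame := await audio_in.get()) is not None:
        await text_out.put(frame.decode("utf-8"))
    await text_out.put(None)  # propagate end of call


async def agent_stage(text_in: asyncio.Queue, reply_out: asyncio.Queue) -> None:
    """Stand-in agent: echo the transcript into a reply."""
    while (text := await text_in.get()) is not None:
        await reply_out.put(f"Agent reply to: {text}")
    await reply_out.put(None)


async def tts_stage(reply_in: asyncio.Queue, played: List[bytes]) -> None:
    """Stand-in TTS and playback: encode replies and record them as played audio."""
    while (reply := await reply_in.get()) is not None:
        played.append(reply.encode("utf-8"))


async def run_call(frames: List[bytes]) -> List[bytes]:
    audio_q: asyncio.Queue = asyncio.Queue()
    text_q: asyncio.Queue = asyncio.Queue()
    reply_q: asyncio.Queue = asyncio.Queue()
    played: List[bytes] = []
    for frame in frames:
        audio_q.put_nowait(frame)
    end_of_call: Optional[bytes] = None
    audio_q.put_nowait(end_of_call)  # None marks the end of the call
    await asyncio.gather(
        stt_stage(audio_q, text_q),
        agent_stage(text_q, reply_q),
        tts_stage(reply_q, played),
    )
    return played


played_audio = asyncio.run(run_call([b"hello", b"book a demo"]))
print(played_audio)
```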

What Are the Combined Benefits of Using AgentKit with Teler?

When OpenAI’s AgentKit logic layer meets Teler’s communication infrastructure, the result is a production-ready, high-performance voice AI stack.

Let’s break down the benefits for both engineering and business teams.

Unified Voice AI Architecture

| Without Integration | With Teler + AgentKit |
| --- | --- |
| Multiple fragmented APIs | One cohesive flow |
| Manual error handling | Built-in Guardrails |
| Latency above 2s | Sub-second conversational latency |
| Complex custom code | Reusable agent workflows |

This unified stack reduces complexity for developers and ensures consistent voice quality and agent intelligence in real-world conditions. The AI customer-service market is projected to reach US$47.82 billion by 2030, with up to 95% of customer interactions expected to be AI-powered by 2025.

Faster Development & Deployment

AgentKit automates logic and orchestration.

Teler automates telephony and audio delivery.

Together, they enable rapid prototyping – teams can deploy proof-of-concept voice agents in days, not months.

Developers no longer need to:

- Write media routers.

- Manage call signaling.

- Handle token streaming manually.

Instead, they can focus purely on business logic and experience design – increasing developer productivity by up to 40–50%.

Real-Time Conversational Fluidity

The biggest barrier to believable voice AI is latency.

AgentKit’s streaming output combined with Teler’s real-time routing ensures responses feel naturally timed.

For customer-facing applications like sales calls or appointment scheduling, this difference directly impacts user trust and retention.
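A back-of-the-envelope latency budget shows why streaming matters. Every number below is an assumed, illustrative value rather than a measurement of AgentKit, Teler, or any vendor; the point is only how the stages add up.

```python
from typing import Dict

# Illustrative per-stage latencies in milliseconds (assumptions, not benchmarks).
budget_ms: Dict[str, int] = {
    "network_in": 40,
    "stt_final": 200,
    "llm_first_sentence": 300,  # time until the first speakable sentence is streamed
    "llm_full_reply": 1200,     # time until the whole reply is generated
    "tts_first_audio": 150,     # time until the first audio chunk is synthesized
    "tts_full_audio": 600,      # time until the whole reply is synthesized
    "network_out": 40,
}


def time_to_first_audio(budget: Dict[str, int], streaming: bool) -> int:
    """Sum the stages the caller waits through before hearing anything."""
    if streaming:
        stages = ["network_in", "stt_final", "llm_first_sentence", "tts_first_audio", "network_out"]
    else:
        stages = ["network_in", "stt_final", "llm_full_reply", "tts_full_audio", "network_out"]
    return sum(budget[stage] for stage in stages)


streaming_ms = time_to_first_audio(budget_ms, streaming=True)
batch_ms = time_to_first_audio(budget_ms, streaming=False)
print(f"streaming: {streaming_ms} ms, batch: {batch_ms} ms")
```

With these assumed values, the caller hears the first audio after 730 ms when every stage streams, versus 2080 ms when each stage waits for the previous one to finish.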

Flexible Integration with Any AI Stack

Because both systems are model-agnostic, teams can freely choose their components:

| Layer | Example Tools |
| --- | --- |
| LLM | GPT-4, Claude, Gemini, Mistral |
| STT | Whisper, Deepgram, Google Speech |
| TTS | ElevenLabs, Azure Speech, Play.ht |
| Agent Layer | OpenAI AgentKit |
| Voice Layer | FreJun Teler |

This flexibility makes Teler + AgentKit future-proof – you can upgrade one layer without disrupting the rest.

Enhanced Observability and Debugging

Both AgentKit and Teler include structured logs and event traces.

When integrated, they provide end-to-end visibility across the voice pipeline – from audio packet to LLM decision to speech output.

- Engineers can analyze call-level metrics.

- Product managers can evaluate response accuracy using AgentKit Evals.

- Founders can track ROI through conversion or resolution rates.

This observability turns your voice AI deployment from a “black box” into a transparent, measurable system.

How Does This Integration Improve AI Workflow Automation?

Traditional automation relied on prebuilt flows or triggers.

AgentKit enables context-aware automation, and Teler extends that context into live communication.

Example use case: Automated HR Screening Calls

| Step | Description | Tech Component |
| --- | --- | --- |
| 1 | Candidate receives automated call | Teler |
| 2 | Candidate speaks answers | STT |
| 3 | AgentKit processes and evaluates | LLM + AgentKit |
| 4 | Response recorded and summarized | RAG / Tool call |
| 5 | HR dashboard updated | API integration |

Every layer operates autonomously yet synchronously – delivering AI workflow automation across voice, logic, and data systems.

This pattern can be applied to:

- Sales qualification calls,

- Customer support triage,

- Survey and feedback collection,

- Appointment reminders, and more.

Why Does It Matter for Developer Productivity and Cost Efficiency?

In most AI projects, 60–70% of engineering effort goes into integration overhead – connecting APIs, managing latency, and debugging audio sync issues.

By combining AgentKit and Teler, teams can:

- Reduce codebase size by up to 30%.

- Lower call-handling costs with optimized routing.

- Reuse workflows across different projects.

- Maintain one logic layer instead of fragmented systems.

This lets startups and enterprises alike focus resources on improving business logic rather than managing infrastructure.

How Does This Pairing Support Real-Time Communication Tools?

With Teler as the audio foundation, businesses can embed real-time communication capabilities into existing platforms.

For instance:

- CRM systems can directly trigger voice follow-ups.

- Scheduling apps can use agents for reminder calls.

- Helpdesks can escalate complex voice interactions to humans seamlessly.

These integrations turn static workflows into live, event-driven systems, enhancing responsiveness and voice AI efficiency.

What Are the Strategic Benefits for Founders and Product Teams?

Beyond technical excellence, the Teler + AgentKit pairing drives strategic advantages:

- Faster Time-to-Market: Prototype, test, and deploy faster than competitors.

- Scalability: Handle thousands of concurrent voice sessions with consistent latency.

- Customizability: Choose your LLM, voice models, and guardrails as needed.

- Security: Keep compliance boundaries clear with AgentKit’s controlled API calls.

- Data Ownership: Host Teler on your own infrastructure for data privacy.

Together, they empower teams to own their AI stack end-to-end – without vendor lock-in.

What’s Next for Voice AI Stack Builders?

The future of voice AI will depend on tight integration between reasoning and communication.

AgentKit provides the intelligence; Teler provides the channel.

As both evolve, expect:

- Adaptive latency optimization through token-level streaming.

- Cross-modal workflows that mix voice, chat, and screen interaction.

- Fine-tuned evaluation models that measure “conversation quality.”

These innovations make the combination not just an integration – but a foundation for next-generation, autonomous voice systems.

Final Thoughts: Why AgentKit + Teler Is the New Standard

Building production-ready voice AI systems today demands both intelligence and infrastructure. OpenAI’s AgentKit provides structured reasoning, controlled automation, and seamless tool orchestration: the cognitive layer that drives intelligence. Meanwhile, FreJun Teler powers the communication layer, delivering real-time, reliable voice streaming that ensures every interaction feels natural, fluid, and human-like.

Together, AgentKit and Teler create a unified voice AI architecture designed for scale – offering developer efficiency, consistent low-latency performance, and seamless multi-network voice connectivity. This combination lets product teams and engineering leads focus on innovation instead of operational overhead.

Ready to Build Your First AI Voice Agent?

Integrate OpenAI’s AgentKit with FreJun Teler to unlock real-time, production-grade automation.

Schedule a demo and explore how Teler can bring your intelligent voice agents to life.

FAQs

- What is OpenAI’s AgentKit?

AgentKit is OpenAI’s developer framework for creating autonomous AI agents with structured reasoning and tool integration.

- How does Teler integrate with AgentKit?

Teler connects AgentKit-powered agents to real-time telephony and VoIP, enabling instant, two-way human-like conversations.

- Can I use my own LLM with Teler?

Yes, Teler is model-agnostic, allowing integration with any LLM or AI model via API.

- What benefits does AgentKit add to voice automation?

AgentKit provides structured reasoning, action control, and real-time tool calling for smarter, context-aware automation.

- How does Teler ensure low latency in AI voice calls?

Teler uses optimized real-time media streaming architecture to minimize latency between speech input and response playback.

- Is Teler suitable for enterprise-scale applications?

Yes, it’s built with enterprise-grade reliability, scalability, and security for large-scale deployments.

- How long does integration take with AgentKit and Teler?

Developers can integrate within hours using SDKs and APIs designed for plug-and-play implementation.

- Can Teler handle both inbound and outbound calls?

Yes, it supports real-time bidirectional calling, making it ideal for support, sales, and automation workflows.

- Do I need specific AI models for Teler to work?

No, you can bring your own AI. Teler manages the voice transport layer, not the model logic.

- What support does FreJun offer for integration?

FreJun provides pre-integration guidance, SDK documentation, and dedicated technical support for smooth deployment.