For a developer working on an AI voicebot, the most exciting decision is choosing its “brain.” We are in a golden age of artificial intelligence, with an incredible array of powerful Large Language Models at our fingertips. At the forefront of this revolution are two titans: OpenAI, with its famously powerful GPT series, and Anthropic, with its nuanced and safety-conscious Claude models. Choosing between them is like choosing the engine for a high-performance race car: each offers a unique blend of power, style, and capability.

But a world-class engine is useless without a chassis, a transmission, and a connection to the road. A brilliant, text-based LLM is just a brain in a jar. To create a true voice agent, you must solve a far more fundamental challenge: how do you connect this silent, digital brain to the messy, analog, and incredibly time-sensitive world of a live human conversation?

This guide is for the architect and the engineer. We will explore the distinct characteristics of building with the GPT API versus Anthropic’s Claude, and provide a deep, architectural blueprint for the real-time voice integration that brings these powerful minds to life. This is the playbook for next-generation AI development.

Table of contents

- What Makes OpenAI and Claude the Top Choices for an AI “Brain”?

- Why Can’t These LLMs Just Talk Out of the Box?

- What is the Architectural Blueprint for Voice Integration?

- What is the Step-by-Step Implementation Plan for Developers?

- How Does This Architecture Impact Performance and Flexibility?

- Conclusion

- Frequently Asked Questions (FAQs)

What Makes OpenAI and Claude the Top Choices for an AI “Brain”?

When you’re building a conversational AI, the quality of your LLM is the ceiling for your application’s intelligence. OpenAI and Anthropic have consistently proven to be at the pinnacle of this technology, but they have distinct personalities that make them suited for different tasks.

What Are the Strengths of Building with OpenAI’s GPT Models?

OpenAI’s models, particularly the latest in the GPT-4 series, are renowned for their raw power and versatility.

- Massive General Knowledge: Trained on a vast swath of the internet, GPT models have an encyclopedic knowledge base, making them excellent for open-ended Q&A and generalist assistants.

- State-of-the-Art Reasoning: They excel at complex, multi-step reasoning and are often the top performers in standardized benchmarks for logic and problem-solving.

- Advanced Tool Calling: OpenAI has invested heavily in “function calling,” making their models incredibly adept at interacting with external APIs and tools, which is essential for an AI agent that needs to do things.
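
As a rough illustration of what “function calling” involves: a tool is described to the model as a JSON Schema, the model replies with the tool name and a JSON string of arguments, and your backend runs the matching function. The sketch below uses the shape accepted by the `tools` parameter of OpenAI’s Chat Completions API. The `check_order_status` tool, its parameters, and the `dispatch` helper are hypothetical names invented for this example, not part of any provider’s SDK.

```python
import json

# Hypothetical tool definition: the name, description, and parameters are
# illustrative only. The outer shape follows OpenAI's "tools" format.
CHECK_ORDER_STATUS_TOOL = {
    "type": "function",
    "function": {
        "name": "check_order_status",
        "description": "Look up the shipping status of a customer's order.",
        "parameters": {
            "type": "object",
            "properties": {
                "order_id": {
                    "type": "string",
                    "description": "Order number the caller read out.",
                },
            },
            "required": ["order_id"],
        },
    },
}


def check_order_status(order_id: str) -> dict:
    """Stand-in for a real backend lookup (assumption: replace with your own API call)."""
    return {"order_id": order_id, "status": "shipped"}


# Maps tool names the model may request to the local functions that implement them.
TOOL_REGISTRY = {"check_order_status": check_order_status}


def dispatch(tool_name: str, arguments_json: str) -> str:
    """Run the requested tool and return a JSON string to send back to the model."""
    handler = TOOL_REGISTRY.get(tool_name)
    if handler is None:
        return json.dumps({"error": f"unknown tool: {tool_name}"})
    arguments = json.loads(arguments_json)  # arguments arrive as a JSON string
    return json.dumps(handler(**arguments))
```

Anthropic’s Messages API accepts a similar definition, with the JSON Schema placed under an `input_schema` key instead, so a single internal tool description can usually be translated for either provider.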

What Are the Strengths of Building with Anthropic’s Claude Models?

Anthropic’s Claude family, including the latest Claude 3 models, has carved out a reputation for being more nuanced, creative, and safety-oriented.

- Superior Conversational Nuance: Many developers find that Claude models produce more natural, less robotic-sounding dialogue. They are often better at picking up on subtle user sentiment and maintaining a consistent, engaging persona.

- Large Context Windows: Claude models have been leaders in offering massive context windows, allowing the AI to “remember” extremely long conversations and documents, which is a huge advantage for complex support scenarios.

- “Constitutional AI”: Anthropic’s focus on a safety-first training methodology (“Constitutional AI”) can make Claude a more reliable choice for enterprise applications where brand safety and predictable behavior are paramount.

The growth of enterprise AI adoption is explosive. A recent report from IBM found that 42% of enterprise-scale companies have actively deployed AI in their business, and having a choice between powerful models like these is a major catalyst.

Why Can’t These LLMs Just Talk Out of the Box?

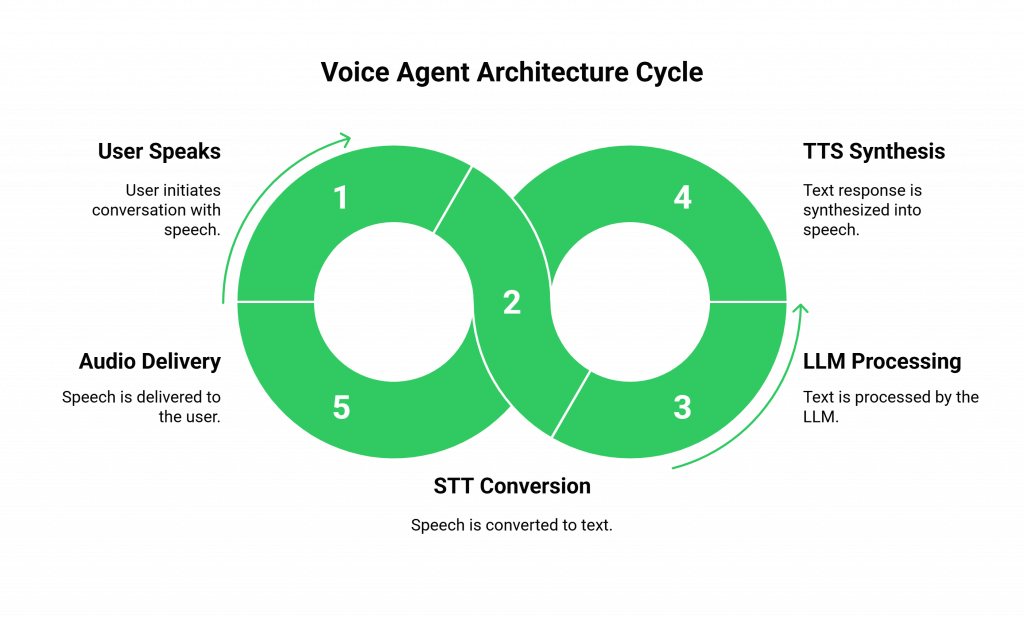

This is the fundamental architectural challenge. Both the GPT API and Anthropic’s API are text-in, text-out systems. They are brilliant writers, but they have no concept of sound. They have no “ears” to listen and no “mouth” to speak. To build a voice agent, you must architect a “body” around this brain.

This body consists of three critical systems:

- The Ears (Speech-to-Text – STT): A specialized AI model that translates the user’s spoken audio into text.

- The Mouth (Text-to-Speech – TTS): A specialized AI model that synthesizes the LLM’s text response into audible, human-like speech.

- The Nervous System (The Voice Infrastructure): This is the high-speed, real-time communication network that carries the signals. It’s the infrastructure that connects to the live phone call, streams the audio to the “ears,” carries the text to the “brain,” and delivers the final audio from the “mouth” back to the user.

What is the Architectural Blueprint for Voice Integration?

Building this “body” is an engineering project that requires a modern, API-driven architecture. The key is to create a backend orchestration service that acts as a central “command center.”

Think of your voice integration as a real-time intelligence operation:

- The Field Agent (The User): Communicating from the real world via a radio (their phone).

- The Radio Operator (The Voice Infrastructure): This is the critical communication hub. This is the role of a platform like FreJun AI. It manages the messy, real-world communication channel (the global phone network), cleans up the signal (the raw audio), and patches it through to your command center with ultra-low latency.

- The Intel Analyst (The STT): This expert listens to the raw radio chatter and instantly transcribes it into a clean, written report (the text transcript).

- The Strategist (Your chosen LLM: OpenAI or Claude): This is the brilliant mind in the command center. It reads the analyst’s report, analyzes the situation based on all prior reports (the conversation history), and writes the next set of clear, concise orders (the text response).

- The Spokesperson (The TTS): This expert takes the written orders from the strategist and reads them back over the radio in a clear, authoritative, and perfectly toned voice.

Your backend application is the “command center” itself, orchestrating this team of experts in a continuous, sub-second loop.
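
One way to picture the command center is as a function that passes a single conversational turn through three swappable components. The following is a minimal sketch, not a production design: the interface names (`SpeechToText`, `LanguageModel`, `TextToSpeech`), the `handle_turn` function, and the stub classes are all assumptions made for illustration, and real providers would stream rather than work on whole utterances.

```python
from typing import Protocol


class SpeechToText(Protocol):
    def transcribe(self, audio: bytes) -> str: ...


class LanguageModel(Protocol):
    def reply(self, history: list[dict]) -> str: ...


class TextToSpeech(Protocol):
    def synthesize(self, text: str) -> bytes: ...


def handle_turn(
    audio: bytes,
    stt: SpeechToText,
    llm: LanguageModel,
    tts: TextToSpeech,
    history: list[dict],
) -> bytes:
    """Run one user utterance through the analyst, strategist, and spokesperson."""
    transcript = stt.transcribe(audio)                     # the Intel Analyst
    history.append({"role": "user", "content": transcript})
    answer = llm.reply(history)                            # the Strategist
    history.append({"role": "assistant", "content": answer})
    return tts.synthesize(answer)                          # the Spokesperson


# Stub components so the sketch runs without any external service.
class Utf8Stt:
    def transcribe(self, audio: bytes) -> str:
        return audio.decode("utf-8")  # pretends the audio is already text


class CannedLlm:
    def reply(self, history: list[dict]) -> str:
        return f"Understood: {history[-1]['content']}"


class Utf8Tts:
    def synthesize(self, text: str) -> bytes:
        return text.encode("utf-8")  # pretends the text is already audio
```

Because `handle_turn` depends only on the three small interfaces, swapping the strategist from one provider to the other means writing one new class with a `reply` method and changing nothing else.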

What is the Step-by-Step Implementation Plan for Developers?

Here is a high-level plan for your AI development process.

- Choose Your “Strategist”: Make a decision between the GPT API and Anthropic’s Claude based on your project’s needs. Get your API keys.

- Select Your “Analyst” and “Spokesperson”: Choose your high-quality, streaming STT and TTS providers. Remember, a model-agnostic voice infrastructure will give you the freedom to choose the best ones.

- Build the “Command Center’s” Communication Hub: This is where you set up your voice infrastructure. With a developer-first platform like FreJun AI, this is a fast process. You’ll sign up, get a phone number, and configure a webhook. This webhook is the “direct line” that the radio operator will use to contact your command center.

- Write the Orchestration Logic: This is your backend code. It’s a real-time event loop that:

  - Receives the live audio stream from the user via FreJun AI.

  - Forwards this audio to your STT engine to get a live transcript.

  - Constructs a prompt for your chosen LLM, including the conversation history and any available tool definitions.

  - Calls the LLM’s API and receives a text response.

  - Streams that text response to your TTS engine to get a live audio stream.

  - Streams that generated audio back to the user via FreJun AI.
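
The orchestration loop described in the last step can be sketched with `asyncio`. This is a simplified illustration under stated assumptions: every `fake_*` function is a stand-in invented for this example (no real FreJun AI, STT, LLM, or TTS call is made), and each inbound chunk is treated as one finished utterance, whereas a real STT stream delivers partial transcripts and needs end-of-speech detection.

```python
import asyncio
from typing import AsyncIterator, Awaitable, Callable

Message = dict[str, str]


async def fake_audio_in() -> AsyncIterator[bytes]:
    """Stand-in for the inbound call audio; each item is one complete utterance."""
    for utterance in (b"hello", b"what are your hours"):
        yield utterance


async def fake_stt(audio: bytes) -> str:
    """Stand-in STT: pretends the audio bytes are already UTF-8 text."""
    return audio.decode("utf-8")


async def fake_llm(messages: list[Message]) -> str:
    """Stand-in LLM: echoes the latest user message."""
    return f"You said: {messages[-1]['content']}. "


async def fake_tts(text: str, chunk_size: int = 8) -> AsyncIterator[bytes]:
    """Stand-in TTS: yields audio in small chunks so playback can start early."""
    data = text.encode("utf-8")
    for start in range(0, len(data), chunk_size):
        yield data[start:start + chunk_size]


async def run_call(
    audio_in: AsyncIterator[bytes],
    send_audio: Callable[[bytes], Awaitable[None]],
    system_prompt: str,
) -> list[Message]:
    """Loop over utterances: transcribe, prompt the LLM, stream speech back."""
    history: list[Message] = [{"role": "system", "content": system_prompt}]
    async for audio in audio_in:
        transcript = await fake_stt(audio)
        history.append({"role": "user", "content": transcript})
        reply = await fake_llm(history)  # prompt includes the full history
        history.append({"role": "assistant", "content": reply})
        async for chunk in fake_tts(reply):
            await send_audio(chunk)  # forward each chunk as soon as it exists
    return history


async def demo() -> tuple[list[Message], bytes]:
    """Run the loop against the stand-ins and collect what would be played."""
    sent: list[bytes] = []

    async def send_audio(chunk: bytes) -> None:
        sent.append(chunk)

    history = await run_call(fake_audio_in(), send_audio, "You are a helpful phone agent.")
    return history, b"".join(sent)
```

Calling `asyncio.run(demo())` exercises the whole loop locally. In a real deployment the stand-ins would be replaced by your providers’ streaming clients, and `send_audio` would write to the connection your voice infrastructure provides.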

Ready to connect your OpenAI or Claude model to a live phone call? Sign up for FreJun AI and start your voice integration.

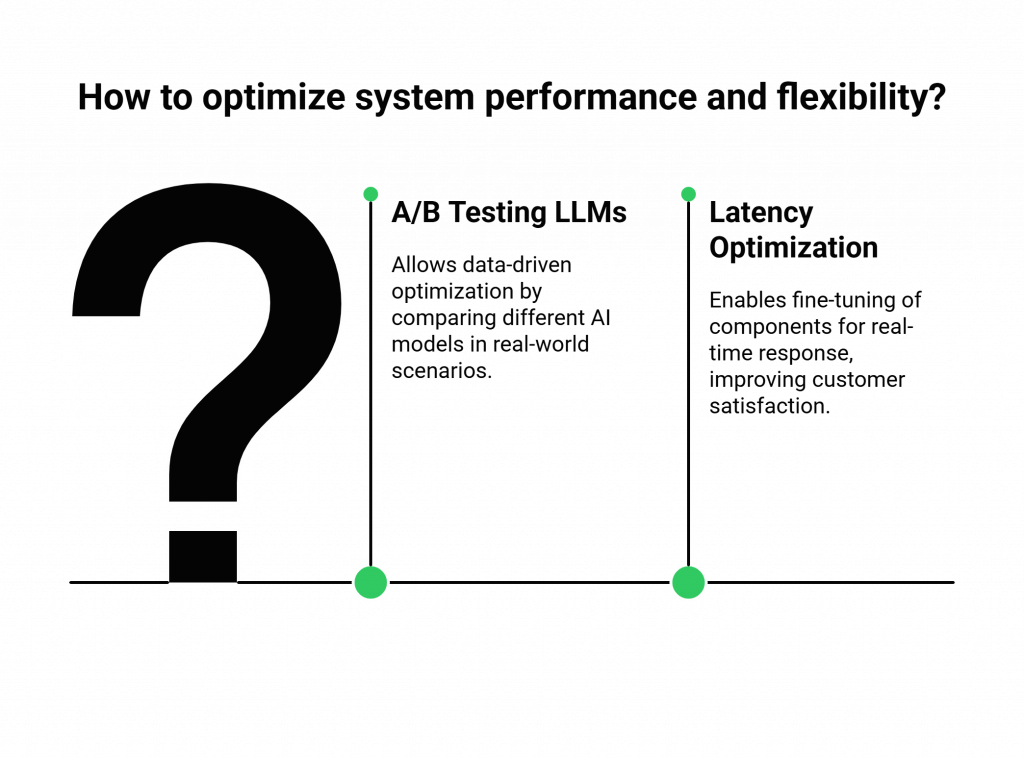

How Does This Architecture Impact Performance and Flexibility?

This modular, API-first approach is not just an implementation detail; it’s a profound strategic advantage.

- The Power of A/B Testing: With this architecture, you are not permanently married to your LLM choice. You can actually build your system to route 50% of your traffic to the GPT API and 50% to Anthropic’s Claude. This allows you to A/B test the two leading AI “brains” on your live, real-world traffic to see which one delivers better results for your specific use case. This is a level of data-driven optimization that is impossible in a closed-platform ecosystem.

- Obsessive Latency Optimization: This “glass box” architecture allows you to measure the performance of every single component. You can see exactly how many milliseconds your STT, LLM, and TTS are taking, allowing you to fine-tune your system for the absolute best real-time response. This is critical, as a recent Salesforce report found that 78% of customers have had to repeat themselves to multiple agents, a frustration that a fast, context-aware AI can eliminate.
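
The 50/50 traffic split can be implemented in a few lines. A minimal sketch, assuming each call has a stable identifier: hashing that identifier keeps a given call on the same model for its whole duration, so results are not muddied by switching brains mid-conversation. The function name `assign_provider` and the `openai_share` parameter are hypothetical.

```python
import hashlib


def assign_provider(call_id: str, openai_share: float = 0.5) -> str:
    """Deterministically assign a call to "openai" or "anthropic".

    The same call_id always maps to the same provider, and across many
    calls roughly `openai_share` of them land on OpenAI.
    """
    digest = hashlib.sha256(call_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # value in [0, 1)
    return "openai" if bucket < openai_share else "anthropic"
```

Logging the assigned provider alongside each call’s outcome (resolution, duration, customer rating) is what turns the split into a usable experiment.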

Conclusion

The choice between building a voice agent with OpenAI or Claude is an exciting one, a testament to the incredible power and diversity of the modern AI landscape. But the choice of the “brain” is only half the battle. The true art of building a great AI voicebot lies in the architecture that surrounds it.

By embracing a modular, API-first approach and building on a foundation of a high-performance, model-agnostic voice infrastructure, you give yourself the ultimate freedom. You can choose the best brain, the best ears, and the best mouth, and connect them all with a flawless nervous system. This is the path to moving beyond a simple AI voicebot and creating a truly intelligent, responsive, and engaging conversational partner.

Want to see a live demo of how our infrastructure seamlessly integrates with both the GPT API and Anthropic? Schedule a demo with FreJun Teler!

Frequently Asked Questions (FAQs)

Generally, OpenAI’s models are known for their raw power, extensive general knowledge, and strong tool-calling abilities. Anthropic’s Claude models are often praised for their more nuanced conversational style, creativity, and a strong focus on AI safety and reliability.

No. A key advantage of a model-agnostic voice integration is that you can mix and match. You can use OpenAI’s GPT for the “brain” but choose a different provider for your STT and TTS if you find they offer better performance or a more suitable voice.

FreJun AI provides the essential voice infrastructure, or the “nervous system.” It handles the telephony (connecting to the phone network) and the ultra-low-latency, real-time streaming of audio between the user and your application’s backend, where you are calling the LLM APIs.

The cost is variable. You will pay a per-use fee to the LLM provider (OpenAI or Anthropic), the STT/TTS providers, and the voice infrastructure provider. However, this pay-as-you-go model is often far more cost-effective than hiring human agents, especially for high-volume tasks.

Yes, if you’ve built on a model-agnostic architecture. Since your application is just making an API call to the LLM, you can easily write your code to switch between the two endpoints, which is great for testing and future-proofing.
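
To make the switch concrete, here is a sketch of a helper that builds the request for either provider from the same inputs. It only constructs the payload and sends nothing. The main differences it captures are that OpenAI’s Chat Completions API takes the system prompt as the first message, while Anthropic’s Messages API takes it as a top-level `system` field and requires `max_tokens`. The helper name `build_request` is hypothetical, model IDs are left to the caller, and both APIs evolve, so check each provider’s current reference before relying on these shapes.

```python
def build_request(
    provider: str,
    model: str,
    system_prompt: str,
    turns: list[dict],
    max_tokens: int = 256,
) -> dict:
    """Return the URL and JSON body for one chat request (nothing is sent)."""
    if provider == "openai":
        return {
            "url": "https://api.openai.com/v1/chat/completions",
            "json": {
                "model": model,
                # System prompt travels as the first message.
                "messages": [{"role": "system", "content": system_prompt}, *turns],
            },
        }
    if provider == "anthropic":
        return {
            "url": "https://api.anthropic.com/v1/messages",
            "json": {
                "model": model,
                # System prompt is a top-level field; max_tokens is required.
                "system": system_prompt,
                "messages": list(turns),
                "max_tokens": max_tokens,
            },
        }
    raise ValueError(f"unsupported provider: {provider}")
```

The response formats differ as well, so a complete adapter would also normalize each provider’s reply into a plain string before handing it to the TTS stage.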

The context window is the amount of conversation history the AI can “remember” at one time. A larger context window is a significant advantage for complex, multi-turn voice conversations, as it allows the AI voicebot to maintain a more coherent and intelligent dialogue.
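
Even with a large window, long calls eventually need their history trimmed. The sketch below keeps the system prompt plus as many of the most recent turns as fit a token budget. The four-characters-per-token estimate is a crude assumption used only to keep the example self-contained; a real system would count tokens with the provider’s own tokenizer. The function names are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    """Very rough heuristic (assumption): about four characters per token."""
    return max(1, len(text) // 4)


def trim_history(messages: list[dict], max_tokens: int) -> list[dict]:
    """Keep system messages plus the newest turns that fit within max_tokens."""
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]
    budget = max_tokens - sum(estimate_tokens(m["content"]) for m in system)

    kept: list[dict] = []
    for message in reversed(turns):  # walk from newest to oldest
        cost = estimate_tokens(message["content"])
        if cost > budget:
            break  # everything older is dropped
        kept.append(message)
        budget -= cost
    return system + kept[::-1]  # restore chronological order
```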

Latency is the most important factor for a good user experience. The entire multi-step process, from the user speaking to the AI responding, must happen in under a second for the conversation to feel natural.
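
A sub-second target is easier to hit when every stage is timed separately. The following is a small sketch of a per-turn timer; the class name `TurnTimer`, its methods, and the default 1000 ms budget (taken from the “under a second” figure above) are assumptions for illustration.

```python
import time
from contextlib import contextmanager


class TurnTimer:
    """Records how long each pipeline stage takes within one conversational turn."""

    def __init__(self, budget_ms: float = 1000.0) -> None:
        self.budget_ms = budget_ms
        self.stages: dict[str, float] = {}

    @contextmanager
    def stage(self, name: str):
        """Time the enclosed block and store the result in milliseconds."""
        start = time.perf_counter()
        try:
            yield
        finally:
            self.stages[name] = (time.perf_counter() - start) * 1000.0

    @property
    def total_ms(self) -> float:
        return sum(self.stages.values())

    def over_budget(self) -> bool:
        return self.total_ms > self.budget_ms

    def slowest_stage(self):
        """Name of the stage that took longest, or None if nothing was timed."""
        if not self.stages:
            return None
        return max(self.stages, key=self.stages.get)
```

Wrapping each call in `with timer.stage("stt"):`, `with timer.stage("llm"):`, and `with timer.stage("tts"):` gives a per-turn breakdown, and `slowest_stage()` points at the component worth optimizing first.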

State management is the process of storing the history of a conversation. For a scalable voicebot, this “state” should be stored in an external, fast database (like Redis), not on the application server itself.
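
A sketch of what external state might look like: each call’s turns are appended to a Redis list keyed by call ID, with an expiry so abandoned calls clean themselves up. `ConversationStore` and the key format are hypothetical. The store uses only the `rpush`, `lrange`, and `expire` commands, which a redis-py client (for example `redis.Redis()`) provides; the `InMemoryClient` below is a stand-in for local experimentation so the example runs without a Redis server.

```python
import json


class ConversationStore:
    """Keeps each call's history in a Redis list so any server can resume it."""

    def __init__(self, client, ttl_seconds: int = 3600) -> None:
        self.client = client
        self.ttl_seconds = ttl_seconds

    def _key(self, call_id: str) -> str:
        return f"call:{call_id}:history"  # hypothetical key naming scheme

    def append(self, call_id: str, role: str, content: str) -> None:
        key = self._key(call_id)
        self.client.rpush(key, json.dumps({"role": role, "content": content}))
        self.client.expire(key, self.ttl_seconds)  # refresh expiry on activity

    def history(self, call_id: str) -> list[dict]:
        # json.loads accepts both str and bytes, so this works whether or not
        # the Redis client is configured to decode responses.
        return [json.loads(item) for item in self.client.lrange(self._key(call_id), 0, -1)]


class InMemoryClient:
    """Tiny stand-in implementing only the three Redis commands used above."""

    def __init__(self) -> None:
        self._lists: dict[str, list] = {}

    def rpush(self, key: str, *values) -> int:
        self._lists.setdefault(key, []).extend(values)
        return len(self._lists[key])

    def expire(self, key: str, seconds: int) -> bool:
        return key in self._lists  # expiry itself is not simulated

    def lrange(self, key: str, start: int, end: int) -> list:
        items = self._lists.get(key, [])
        stop = None if end == -1 else end + 1  # Redis ranges are inclusive
        return items[start:stop]
```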

Yes. A full-featured voice API allows you to programmatically initiate outbound calls. You can use the same backend orchestration logic to power a proactive outbound AI voicebot using either OpenAI or Claude.

You must treat all your API keys (for FreJun AI, OpenAI, Anthropic, etc.) as highly sensitive secrets. They should be stored in a secure vault and never be exposed on the client-side. All API communication should be over an encrypted (HTTPS) connection.
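
As a baseline, keys can be read from environment variables (populated by your secret manager at deploy time) and checked at startup, so that a missing key fails fast instead of surfacing mid-call. In this sketch, `OPENAI_API_KEY` and `ANTHROPIC_API_KEY` are the variable names the providers’ official SDKs conventionally read; the helper functions are hypothetical, and any variable name for the voice infrastructure key would be your own choice.

```python
import os
from typing import Mapping

REQUIRED_KEYS = ("OPENAI_API_KEY", "ANTHROPIC_API_KEY")


def load_api_keys(
    environ: Mapping[str, str] = os.environ,
    required: tuple = REQUIRED_KEYS,
) -> dict:
    """Return the required secrets, or raise if any is missing or empty."""
    missing = [name for name in required if not environ.get(name)]
    if missing:
        raise RuntimeError("Missing required secrets: " + ", ".join(missing))
    return {name: environ[name] for name in required}


def redact(secret: str, visible: int = 4) -> str:
    """Mask a secret for log output, keeping only the last few characters."""
    if len(secret) <= visible:
        return "*" * len(secret)
    return "*" * (len(secret) - visible) + secret[-visible:]
```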