For the better part of a decade, we have been teaching AI systems to be brilliant specialists. We have built masterful writers, capable of drafting complex essays from a simple prompt. We have created gifted artists, able to generate stunning images from a textual description. And we have engineered eloquent speakers, able to hold a natural, spoken conversation.

Each of these “single-mode” agents is a marvel of modern engineering. But they all share a profound, hidden handicap: they are experiencing our world with blinders on.

A text-based AI is trapped in a library, unable to see the world it writes about. A voice AI is having a phone call in the dark, hearing your words but having no idea what you’re looking at. This sensory limitation is the final barrier that has kept AI from being a true partner in our real-world tasks.

Now, that barrier is being shattered. We are witnessing the rise of a new architectural paradigm: the multimodal AI agent. This is not just an incremental improvement; it is a fundamental rewiring of how an AI perceives, understands, and interacts with reality.

This guide will explain this paradigm shift, explore the technological forces driving it, and reveal why this is the most significant leap forward for artificial intelligence since the invention of the Large Language Model itself.

Why is the AI Community Moving Beyond Single Senses?

The move towards multimodal AI agents is a direct response to the limitations of a specialized, single-sense world. While a single-mode AI can be incredibly powerful at its one specific task, the most valuable and complex human problems are rarely, if ever, single-mode.

How Do Single-Mode Agents Fail in the Real World?

Think about trying to get help from a remote expert to fix your car.

- A text-based AI would require you to be a professional mechanic, able to perfectly describe the complex components and the strange sounds in writing.

- A voice-only AI would be a frustrating exercise in trying to verbally describe a visual problem: “It’s the black tube next to the shiny metal thing that’s making a sort of whirring-clicking sound.”

- A vision-only AI could identify the parts but would have no idea which sound you were concerned about.

None of these specialists can solve the problem alone. The real world is a symphony of sight, sound, and language, and an AI that can only perceive one note of that symphony will always be limited. This is a core driver of customer frustration.

A recent Salesforce report found that a staggering 80% of customers now say the experience a company provides is as important as its products, and a seamless, problem-solving experience is at the top of their list.

How Do Multimodal AI Agents Achieve “Grounded Intelligence”?

A multimodal AI achieves a state that researchers call “grounded intelligence.” This means its understanding of a concept is not just based on a statistical analysis of text, but is “grounded” in multiple sensory inputs.

It understands that the word “dog,” the sound of a dog barking, and the image of a dog all refer to the same real-world entity. This fusion of senses gives it a form of digital “common sense” that is far more robust and human-like.

What Technological Breakthroughs Are Fueling This Rise?

This shift is not a sudden accident. It is the culmination of years of research and engineering across multiple domains, all reaching a critical point of maturity at the same time.

The Arrival of Native Multimodal Models

This is the central pillar of the revolution. The new generation of Large Language Models, like Google’s Gemini and OpenAI’s GPT-4o, are not just text models that have had vision capabilities “bolted on.” They were designed from the ground up to be natively multimodal.

Think of it like the difference between someone who learned a second language as an adult and someone who grew up bilingual.

The native multimodal model thinks and reasons across sight and sound as its “first language,” allowing it to understand the deep, contextual relationships between a visual scene and a spoken query.
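From a developer's point of view, "natively multimodal" often shows up as a single request that carries text and an image together, instead of separate calls to separate models. The sketch below only builds such a message; it does not call any service. The layout is modeled on the content-parts format OpenAI documents for image input in its Chat Completions API, and the helper name `build_multimodal_message` is hypothetical. Treat the exact field names as an assumption to verify against your provider's current documentation.

```python
import base64


def build_multimodal_message(question: str, image_bytes: bytes,
                             mime_type: str = "image/jpeg") -> dict:
    """Combine a text question and an image into one user message.

    Hypothetical helper: the dict layout follows the content-parts style
    (a list of typed parts) and may need adjusting for a given provider.
    """
    # Images are commonly sent inline as a base64 data URL.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:{mime_type};base64,{encoded}"
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {"type": "image_url", "image_url": {"url": data_url}},
        ],
    }


# Placeholder bytes stand in for a real camera frame.
message = build_multimodal_message(
    "What alert is shown on this diagnostic panel?",
    b"placeholder-image-bytes",
)
```

The point of the design is that the model receives both parts in one turn, so it can relate the question to what is in the picture rather than reasoning over a text description produced by a separate vision step.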

The Maturation of Real-Time Infrastructure

A multimodal AI “brain” is a data-hungry beast. It needs to process a high-resolution video stream and a high-fidelity audio stream simultaneously and in real-time. This is an immense engineering challenge.

The maturation of real-time communication protocols (like WebRTC) and the rise of specialized, high-performance infrastructure platforms have created the “nervous system” that is capable of supporting this complex, multi-sensory AI “brain.”

This is where a platform like FreJun AI provides a critical, specialized component. While the video stream presents its own challenges, the voice conversation must be a flawless, ultra-low-latency experience.

FreJun AI is the expert in this domain. We provide the high-performance, real-time “auditory nerve,” handling the complex, bidirectional streaming of audio that is essential for the “hearing and speaking” part of a responsive multimodal agent.
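One concrete piece of the real-time problem is keeping what the agent hears aligned with what it sees. The sketch below is a minimal, platform-independent illustration of that idea: given video frames with capture timestamps, it finds the frame closest in time to an audio chunk and rejects the match if the gap is too large. The `Frame` type, the `nearest_frame` function, and the 40 ms tolerance are all assumptions made for illustration, not part of any specific product or protocol.

```python
from bisect import bisect_left
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Frame:
    timestamp_ms: int  # capture time of the video frame
    label: str         # stand-in for the actual image data


def nearest_frame(frames: Sequence[Frame], audio_ts_ms: int,
                  max_skew_ms: int = 40) -> Optional[Frame]:
    """Return the frame closest in time to an audio chunk.

    `frames` must be sorted by timestamp. Returns None when no frame
    lies within `max_skew_ms` of the audio timestamp.
    """
    if not frames:
        return None
    times = [f.timestamp_ms for f in frames]
    i = bisect_left(times, audio_ts_ms)
    # Only the neighbors on either side of the insertion point can be closest.
    candidates = frames[max(i - 1, 0): i + 1]
    best = min(candidates, key=lambda f: abs(f.timestamp_ms - audio_ts_ms))
    if abs(best.timestamp_ms - audio_ts_ms) > max_skew_ms:
        return None
    return best


# Illustrative frames at roughly 30 frames per second.
frames = [Frame(0, "f0"), Frame(33, "f1"), Frame(66, "f2"), Frame(100, "f3")]
match = nearest_frame(frames, audio_ts_ms=70)
```

Here the audio chunk at 70 ms pairs with the frame captured at 66 ms. A production system would also have to handle clock drift, jitter, and dropped packets, which this sketch deliberately leaves out.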

What Can a Multimodal AI Agent Do That a Single-Mode Agent Cannot?

The true excitement of multimodal AI agents lies in the new class of problems they can solve. They are not just better; they are capable of entirely new things.

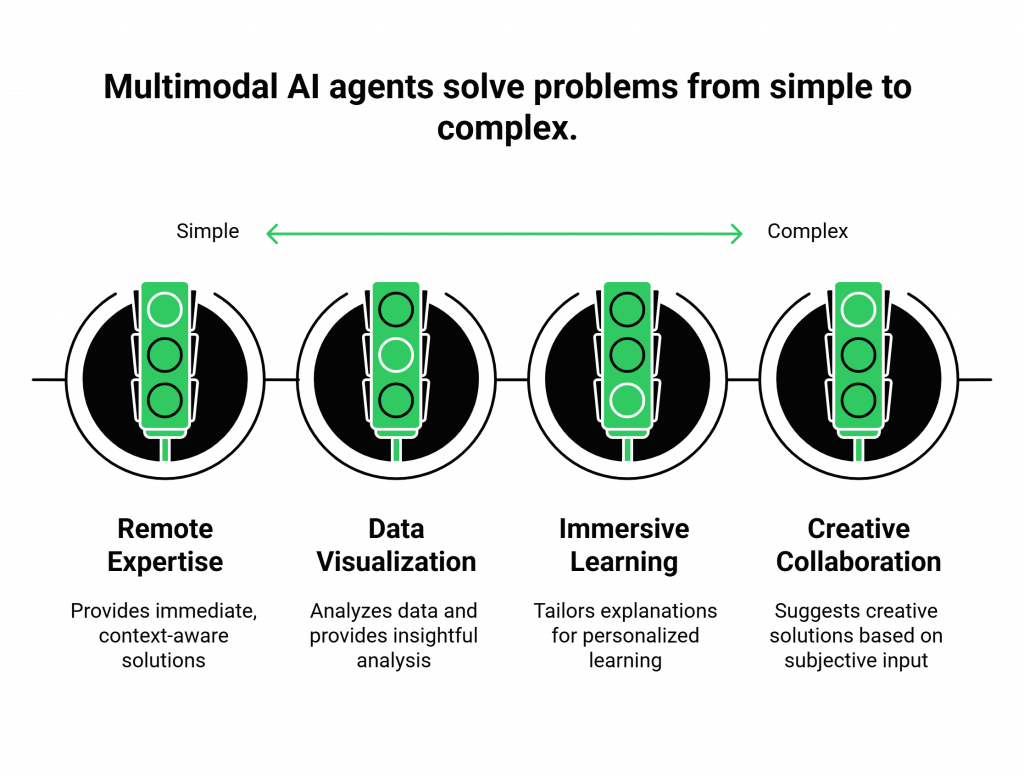

- “See What I See” Remote Expertise: This is the killer app for enterprise. A field technician can point their phone’s camera at a complex piece of industrial machinery and say, “I’m seeing an alert on this diagnostic panel, and I’m hearing a high-pitched whine from this motor. What’s the recommended procedure?” The AI can read the alert, see the motor, and provide the exact right answer from the technical manual.

- Interactive Data Visualization: An executive can pull up a sales dashboard on their screen and have a verbal conversation with an AI about it. “Looking at this chart, why did our sales in the European region drop in Q3, and how did that correlate with our marketing spend in the same period?” The AI can see the chart and cross-reference the data to provide an instant, insightful analysis.

- Rich, Immersive Learning: An AI tutor can watch a student work through a physics problem on a whiteboard, listen to their question, and provide a tailored explanation, creating a one-on-one learning experience at a global scale.

- Creative Collaboration: A designer can show the AI a sketch of a website layout and say, “I like this, but can you suggest a color palette that feels more energetic and modern?” The AI can see the layout, understand the subjective term “energetic,” and suggest a new set of colors.

Ready to architect the future of AI that can see and hear? Sign up for FreJun AI to get started.

What Will the Rise of Multimodal AI Agents Mean for the Future?

The rise of multimodal AI agents in 2025 and beyond is about more than just a new feature; it’s about a new relationship with technology. We are moving from a world where we “use” AI as a tool to a world where we “collaborate” with AI as a partner.

This shift will have a profound impact across every industry. In healthcare, it will enable more sophisticated remote diagnostics. In retail, it will power a new generation of hyper-personalized “conversational commerce.” In manufacturing, it will create safer and more efficient factories.

The business opportunity is staggering. A recent report from Bloomberg Intelligence suggests that the market for generative AI technology could reach a stunning $1.3 trillion by 2032.

Building AI agents with multimodal models is the next great challenge and opportunity for developers. It’s about orchestrating a symphony of AI senses to create experiences that are not just intelligent but are truly perceptive.

Conclusion

We are at a pivotal moment in the history of artificial intelligence. The blind and deaf AI of the past is evolving into a perceptive, aware partner that can engage with the world in the same rich, multisensory way that we do. The rise of multimodal AI agents is a fundamental shift from AI that can process information to AI that can understand reality.

For businesses and developers, now is the time to start exploring, experimenting, and building with this transformative technology. By architecting a solution on a foundation of a flexible, high-performance infrastructure, you can begin to create the next generation of truly intelligent agents.

Want to discuss how a high-performance voice channel fits into your multimodal strategy? Schedule a demo for FreJun Teler!

Frequently Asked Questions (FAQs)

A single-mode agent is an expert in one type of data (e.g., text, voice, or images). A multimodal AI agent can process and understand multiple types of data at the same time, allowing it to have a much richer and more contextual understanding of a situation.

The most common modalities are text (reading/writing), audio (hearing/speaking), and vision (seeing images/video). More advanced models are also beginning to incorporate other data types.

A native multimodal LLM, like OpenAI’s GPT-4o or Google’s Gemini, is a model that was designed from the ground up to accept inputs from multiple modalities in a single, unified way, rather than having separate models for each sense bolted together.

A single-mode voicebot is often a better choice when the user’s task can be fully completed with words alone, and a visual component would add unnecessary complexity. Examples include booking a simple appointment, checking an account balance, or making a proactive reminder call.

The best use cases are those that involve solving problems in the physical world. This includes “see what I see” remote technical support, automated visual damage assessment for insurance claims, and interactive “how-to” guides for product assembly.

The biggest challenges include managing real-time data streams with ultra-low latency. It’s also crucial to keep audio and video perfectly synchronized. Processing video data adds higher computational costs.

The modern, API-driven approach lets you integrate with powerful, pre-trained multimodal models from providers like Google and OpenAI. You can also use open-source alternatives such as LLaVA.

In a multimodal system that involves a voice conversation, FreJun AI provides the essential, high-performance voice infrastructure. It captures the user’s speech and delivers the AI’s spoken response smoothly. It operates with ultra-low latency to ensure a natural, real-time conversation.

A traditional phone call is an audio-only channel, so multimodal interaction over one is more complex. The user usually needs a smartphone app or web-based client with access to the device’s camera.

The outlook for multimodal AI agents in 2025 is one of rapid adoption in specific, high-value enterprise niches. We will see them evolve from experimental “demos” to production-grade, mission-critical tools. They will power industries like field service, insurance, and complex technical support.