For the past decade, the world of AI has been a world of specialists. We’ve built AI that is brilliant at understanding text, AI that is a master of understanding images, and AI that can have a flawless spoken conversation. Each of these “single-mode” agents is a powerful tool in its own right.

A text-based chatbot can answer your questions, and a voicebot can book your appointments. They are efficient, scalable, and have transformed customer service. But they all share a fundamental, hidden limitation: they are experiencing the world with one sense tied behind their back.

A voicebot is blind. A computer vision AI is deaf. This sensory deprivation puts a hard ceiling on the complexity of problems they can solve. Now, a new, more powerful architecture is emerging, one that breaks down these sensory walls. This is the era of the multimodal AI agents.

This is not just an incremental upgrade; it’s a quantum leap in a machine’s ability to understand our world. This guide will provide a clear and definitive comparison between single-mode and multimodal AI agents, exploring the architectural differences and the new class of problems that you can solve when you start building AI agents with multimodal models.

Table of contents

What is the Core Difference Between Single-Mode and Multimodal AI?

To understand the revolution, you first have to understand the old world. A single-mode AI is an expert in one type of data, or “modality.”

- A Text AI (like a traditional chatbot): Its entire universe is text. It can read, write, and understand language with incredible sophistication, but it has no concept of what a “red blinking light” actually looks like.

- A Vision AI: Its entire universe is pixels. It can identify objects in an image with superhuman accuracy, but it can’t understand the spoken question, “Why is my device making this strange buzzing sound?”

- A Voice AI: Its universe is sound waves. It can understand a spoken command, but it has no idea what the user is pointing at when they say, “What’s this button for?”

A multimodal AI agents, on the other hand, is a generalist. It is designed to ingest and reason about multiple, different types of data at the same time. It can see the red blinking light, hear the strange buzzing sound, and read the user’s text message, all in one unified thought process.

Also Read: Voice-Based Bot Examples That Increase Conversions

Why is This Shift to Multimodality Happening Now?

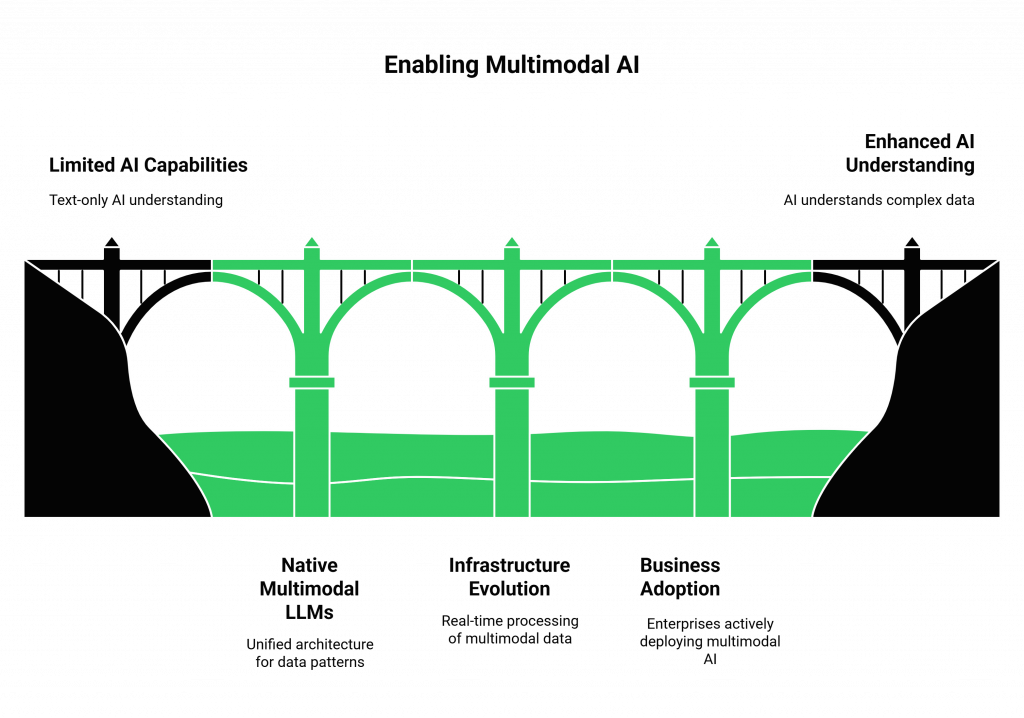

The rise of the multimodal AI has been driven by a perfect storm of technological breakthroughs.

What is the Role of “Native” Multimodal LLMs?

This is the single biggest breakthrough. The new generation of Large Language Models, like Google’s Gemini and OpenAI’s GPT-4o, are not just text models that have had vision capabilities “bolted on.” They were designed from the ground up to be natively multimodal.

This means they have a unified internal architecture that can find the complex patterns and relationships between different types of data. This is what allows an AI to understand that the spoken word “cat” and a picture of a cat refer to the same concept.

How Has Infrastructure Evolved to Support This?

A multimodal agent is a data firehose. It has to process a high-resolution video stream and a high-fidelity audio stream simultaneously and in real-time. This requires an infrastructure that can handle a massive amount of data with ultra-low latency.

The evolution of real-time communication protocols (like WebRTC) and the rise of specialized, high-performance voice infrastructure platforms like FreJun AI have created the “nervous system” that is capable of supporting this complex, multi-sensory AI “brain.”

The business world is taking notice of this shift. A 2024 report from IBM’s Institute for Business Value found that a remarkable 42% of enterprise-scale companies have already actively deployed AI in their business, and these new multimodal capabilities are the next logical step for expanding these deployments into more complex, real-world problems.

Also Read: Voice Bot Online Platforms for Lead Qualification

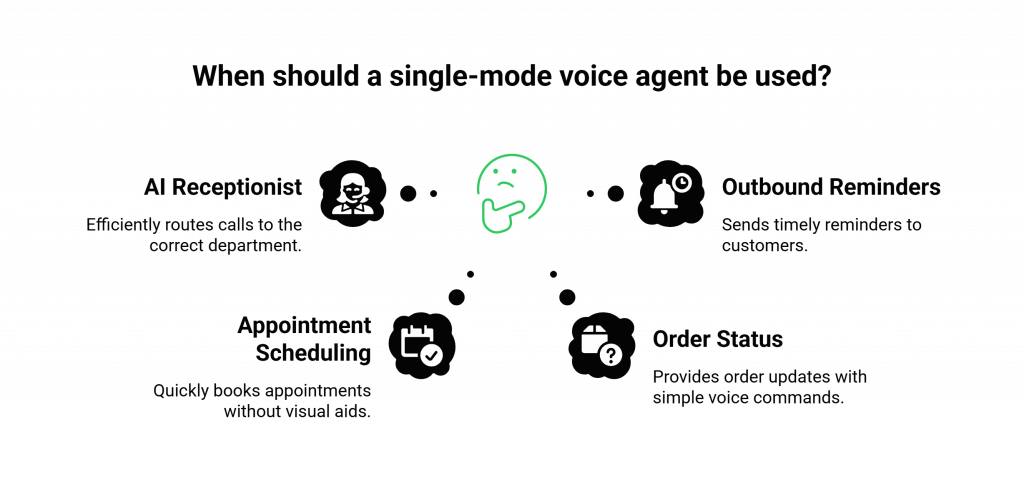

When is a Single-Mode Voice Agent Still the Right Choice?

While the future is multimodal, the present is still largely single-mode, and for many use cases, a specialized voice-only agent is still the perfect tool for the job. A single-mode voice assistant chatbot excels in scenarios where the entire interaction can be completed with words alone.

- AI Receptionist & Call Routing: “I’d like to speak to someone in the billing department.”

- Appointment Scheduling: “I need to book my car for a service next Tuesday.”

- Simple Order Status (WISMO): “What’s the status of my recent order?”

- Proactive Outbound Reminders: “This is a reminder about your dental appointment tomorrow at 10 AM.”

In these cases, adding a visual component would add unnecessary complexity. A well-designed, low-latency voice-only experience is the most efficient and user-friendly solution.

Ready to start building the next generation of AI that can see and hear? Sign up for a FreJun AI to get started with the voice component.

When Do You Need a Multimodal AI Agent?

The need for multimodal AI agents arises when the problem is inherently visual and cannot be easily or accurately described with words alone. Building AI agents with multimodal models is the answer when the user needs to say, “The problem is this thing right here.”

Here are some high-value, enterprise use cases that are unlocked by multimodality:

- “See What I See” Technical Support: A customer can point their phone’s camera at a piece of equipment and say, “This warning light is on. What should I do?” The AI can see the light, identify the model of the equipment, and provide the exact right troubleshooting step.

- Automated Insurance Claim Assessment: A homeowner can take a video of a damaged roof after a storm while verbally describing the situation. The multimodal agent can analyze the video to assess the extent of the damage, transcribe the user’s testimony, and instantly file the initial claim.

- Interactive Product Onboarding: A user trying to assemble a new piece of furniture can show the AI the parts they have and say, “I’m stuck on step 3. I’m not sure how this piece connects.” The AI can see the piece and provide a specific, visual, and verbal instruction.

This ability to solve real-world, physical problems is a game-changer. The broader generative AI market is projected to explode to over $1.3 trillion by 2032, and these powerful, problem-solving multimodal agents will be a primary driver of that value.

Also Read: Best Voice API for Business Communications in 2025

Conclusion

The evolution from single-mode to multimodal AI agents is the next great frontier in artificial intelligence. It is a shift from AI that can process information to AI that can perceive and understand reality.

Specialized single-mode voice bots will continue handling many tasks effectively, but the next generation of AI will solve the most complex, high-value, and human-centric challenges by seeing, hearing, and reasoning all at once.

For enterprises, now is the time to start exploring and investing in this technology. By building AI agents with multimodal models, you are not just creating a new feature; you are building a new and powerful way to connect with your customers and solve their problems in the real world.

Intrigued by the architectural challenges of building a multimodal AI? Schedule a demo to see how FreJun AI can power your voice channel.

Also Read: How Automated Phone Calls Work: From IVR to AI-Powered Conversations

Frequently Asked Questions (FAQs)

A single-mode agent is an expert in one type of data (e.g., text, voice, or images). A multimodal AI agents can process and understand multiple types of data at the same time, allowing it to have a much richer and more contextual understanding of a situation.

The most common modalities are text (reading/writing), audio (hearing/speaking), and vision (seeing images/video).

A native multimodal LLM, such as OpenAI’s GPT-4o or Google’s Gemini, processes text, audio, and vision in a unified way from the start, rather than combining separate models for each modality.

A single-mode voicebot is often a better choice when the user’s task can be fully completed with words alone, and a visual component would add unnecessary complexity. Examples include booking a simple appointment, checking an account balance, or making a proactive reminder call.

The best use cases are those that involve solving problems in the physical world. This includes “see what I see” remote technical support, automated visual damage assessment for insurance claims, and interactive “how-to” guides for product assembly.

The biggest challenges are managing real-time data streams with low latency. Audio and video feeds must stay perfectly synchronized. Processing video data also requires high computational power.

The modern, API-driven approach lets you integrate with powerful, pre-trained multimodal models from providers like Google and OpenAI. You can also use strong open-source alternatives such as LLaVA.

In a multimodal system that involves a voice conversation, FreJun AI provides the essential, high-performance voice infrastructure. It captures the user’s speech quickly and accurately. It delivers the AI’s response with very low latency. This ensures conversations feel natural and happen in real time.

This is more complex. A traditional phone call is an audio-only channel. To have a multimodal interaction, the user would typically need to be using a smartphone app or a web-based client that can access their device’s camera.

The outlook for multimodal AI agents in 2025 is one of rapid adoption in specific, high-value enterprise niches. We will see them evolve from experimental “demos” to production-grade, mission-critical tools. Industries like field service, insurance, and complex technical support will benefit most.