As a developer, you live in a world of APIs and SDKs. When you decide to build a new feature, the first instinct is often to reach for a Software Development Kit. You npm install the SDK for your Speech-to-Text provider, another for your LLM, and yet another for your Text-to-Speech engine. Finally, you layer on the SDK for your voice infrastructure. Before you’ve written a single line of your own logic, your project is already a complex web of third-party dependencies.

This is SDK Overload. It’s the silent tax on modern development, a “convenience” that quickly spirals into a nightmare of bloated application size, steep learning curves, and frustrating limitations. Your dream of building a sleek, high-performance AI Voice Chat Bot gets bogged down by the sheer weight of other people’s code.

But there is a better way. It’s a return to first principles, an approach that prioritizes control, performance, and simplicity. It’s about shedding the weight of heavy SDKs and interacting directly with the clean, powerful APIs that lie beneath. This guide is for the developer who wants to build a professional, lightweight, and truly custom voice AI by mastering the API-first approach.

Table of contents

- What is SDK Overload and Why is it a Problem for Developers?

- What is the API-First Alternative?

- How Does the API-First Architecture for a Voice Chat Bot Work?

- How Does FreJun AI Champion This API-First Philosophy?

- What Are the Long-Term Benefits of an API-First Voice Architecture?

- Conclusion

- Frequently Asked Questions (FAQs)

What is SDK Overload and Why is it a Problem for Developers?

An SDK can feel like a helpful shortcut, a pre-packaged toolkit that gets you started quickly. But for a complex, real-time application like an AI Voice Chat Bot, relying on a stack of different SDKs can create more problems than it solves. Think of it like trying to build a custom piece of furniture using only pre-fabricated, all-in-one kits.

- You’re Stuck with the Kit’s Design: The kit is easy to assemble, but you can’t change the dimensions. An SDK often forces you into an “opinionated” way of doing things, restricting your architectural freedom and making it difficult to implement unique or advanced features.

- The Parts Might Not Fit Together: What happens when the SDK for your STT provider has a dependency that conflicts with the SDK for your LLM? You get thrown into “dependency hell,” spending hours debugging compatibility issues instead of building your application.

- It’s Incredibly “Heavy”: Each SDK adds a significant amount of code to your application. This increases your bundle size, slows down your startup time, and creates a larger surface area for potential security vulnerabilities.

- You Lose Granular Control: A heavy SDK often hides the underlying API calls, making it difficult to debug performance issues or fine-tune network requests for the lowest possible latency.

What is the API-First Alternative?

The API-first alternative is about moving from pre-fabricated kits to a professional workshop. Instead of relying on someone else’s bundled tools, you use the fundamental, universal protocols of the web directly: REST APIs for commands and WebSockets for real-time data streaming.

This approach puts you, the developer, back in complete control. You are not importing a massive library; you are making a clean, lightweight HTTP request to a specific endpoint. You are not wrestling with a complex SDK’s internal state; you are managing a raw, real-time data stream over a WebSocket.

Working directly with APIs is already the norm rather than the exception. The 2023 Postman “State of the API” report underscores this shift, finding that developers spend a majority of their time, nearly 60% of their work week, working directly with APIs. Building API-first results in an application that is:

- Lightweight and Performant: Your application contains your logic, not a mountain of third-party code.

- Framework-Agnostic: You are not tied to any specific programming framework that an SDK might demand.

- Infinitely Flexible: You have the complete freedom to build any logic, handle any edge case, and orchestrate your AI models in exactly the way you want.

How Does the API-First Architecture for a Voice Chat Bot Work?

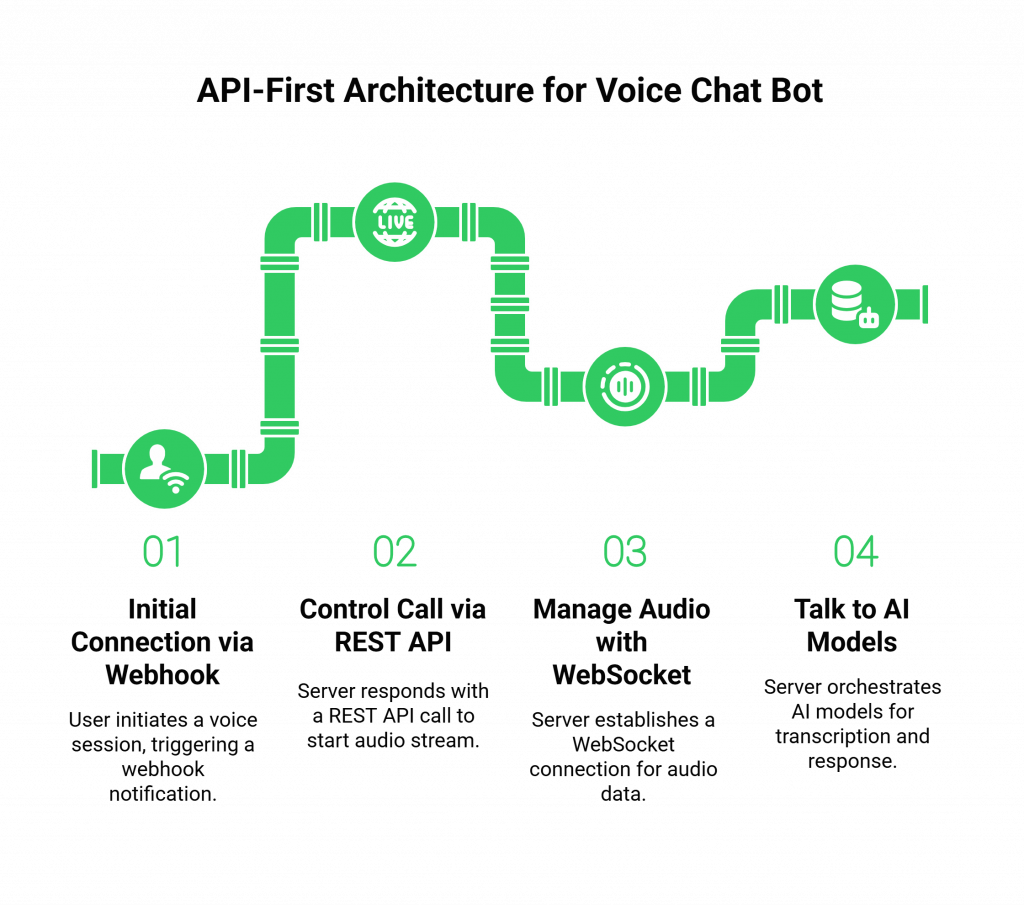

So, what does this “workshop” look like in practice? Let’s walk through the data flow of a real-time AI Voice Chat Bot, built entirely with direct API interactions.

Step 1: How Do You Handle the Initial Connection via Webhook?

It all starts with an event. When a user calls your phone number or initiates a voice session on your website, your voice infrastructure provider doesn’t require you to run a heavy SDK to “listen” for the call.

Instead, it sends a simple, standard HTTP POST request, a webhook, to an endpoint you control. This webhook is a lightweight notification containing the essential metadata about the call. Your backend, which needs nothing more than a simple HTTP endpoint, receives this request.
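To make this concrete, here is a minimal sketch of such an endpoint using only Python’s standard library. The payload field names (`call_id`, `from`, `to`), the port, and the 204 acknowledgement are assumptions for illustration; your provider’s webhook reference defines the real schema and the response it expects.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def parse_call_event(body: bytes) -> dict:
    """Pull the fields we care about out of a webhook body.

    The field names below are hypothetical, not a real provider's schema.
    """
    payload = json.loads(body.decode("utf-8"))
    return {
        "call_id": payload["call_id"],
        "caller": payload.get("from"),
        "callee": payload.get("to"),
    }


class WebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        try:
            event = parse_call_event(self.rfile.read(length))
        except (ValueError, KeyError, TypeError):
            # Malformed JSON, a non-object body, or a missing call_id.
            self.send_response(400)
            self.end_headers()
            return
        print(f"Incoming call {event['call_id']} from {event['caller']}")
        # Acknowledge quickly; heavier work can happen after responding.
        self.send_response(204)
        self.end_headers()


def serve(port: int = 8080) -> None:
    """Block forever, handling webhook POSTs on the given port."""
    HTTPServer(("0.0.0.0", port), WebhookHandler).serve_forever()
```

Keeping the parsing in a plain function, separate from the HTTP handler, means you can unit test it without starting a server.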

Step 2: How Do You Control the Call via a REST API?

Once it has received the webhook, your server makes a direct REST API call back to the voice platform. Instead of calling a complex function in an SDK, you just send a standard HTTP request. For example, your code might construct a JSON payload and POST it to https://api.yourvoiceprovider.com/v1/calls/{call_id}/start_stream. This command tells the platform to establish a real-time audio stream. This is the beauty of an API-first approach: you are communicating with simple, universal verbs and nouns.
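As a rough illustration, that call could look like the sketch below, again using only the standard library. The host is the placeholder URL from the text, and the `stream_url` payload field and Bearer-token header are assumptions; substitute whatever your provider documents.

```python
import json
import urllib.parse
import urllib.request

# Placeholder base URL taken from the example in the text, not a real service.
API_BASE = "https://api.yourvoiceprovider.com/v1"


def build_start_stream_request(
    call_id: str, api_key: str, stream_url: str
) -> urllib.request.Request:
    """Build (but do not send) the POST that asks the platform to start streaming."""
    # Hypothetical payload: where the platform should connect its audio stream.
    body = json.dumps({"stream_url": stream_url}).encode("utf-8")
    safe_id = urllib.parse.quote(call_id, safe="")
    return urllib.request.Request(
        url=f"{API_BASE}/calls/{safe_id}/start_stream",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
    )


def start_stream(
    call_id: str, api_key: str, stream_url: str, timeout: float = 5.0
) -> int:
    """Send the request with an explicit timeout and return the HTTP status."""
    request = build_start_stream_request(call_id, api_key, stream_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

Because the request is an ordinary object, you can log it, inspect its headers, or adjust the timeout per call, which is exactly the kind of visibility an SDK wrapper tends to hide.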

Step 3: How Do You Manage the Raw Audio with a WebSocket?

Once the stream starts, you are not dealing with a complex object from an SDK. You are dealing with a raw WebSocket connection. Your server opens a persistent, bidirectional channel with the voice platform.

Over this channel, you receive a clean, continuous stream of raw audio data packets from the user’s microphone. Your code is now a high-speed traffic controller, responsible for routing these packets.
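The “traffic controller” role can be sketched independently of any particular WebSocket library. The example below assumes the connection is exposed as an async iterator of messages (a pattern many WebSocket clients offer), that binary frames carry audio, and that text frames carry control messages; all three are assumptions to verify against your provider’s streaming documentation.

```python
import asyncio
from typing import AsyncIterator, Awaitable, Callable, Union


async def route_audio(
    frames: AsyncIterator[Union[bytes, str]],
    forward: Callable[[bytes], Awaitable[None]],
) -> int:
    """Forward each binary frame to `forward` and return the bytes routed."""
    total = 0
    async for frame in frames:
        if isinstance(frame, str):
            # Assumed convention: text frames are control messages, not audio.
            continue
        await forward(frame)
        total += len(frame)
    return total


async def demo() -> int:
    """Run the router against a fake stream instead of a live connection."""

    async def fake_stream() -> AsyncIterator[Union[bytes, str]]:
        yield b"\x00\x01"
        yield '{"event": "mark"}'
        yield b"\x02\x03\x04"

    async def print_sink(chunk: bytes) -> None:
        print(f"forwarding {len(chunk)} bytes")

    return await route_audio(fake_stream(), print_sink)
```

In a real service, `forward` would write each chunk to your STT provider’s streaming connection. Calling `asyncio.run(demo())` exercises the routing logic without any network access.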

Step 4: How Do You Talk to Your AI Models?

With the raw audio stream in hand, your server’s job is to orchestrate the AI.

- It forwards the incoming audio packets directly to the streaming API endpoint of your chosen STT provider.

- It receives the transcribed text back from the STT.

- It then makes a standard HTTP POST request to the API endpoint of your chosen LLM.

- It receives the text response from the LLM.

- It makes another HTTP POST request to the API endpoint of your chosen TTS provider, sending the text and receiving back a stream of generated audio data.

The generated audio then travels back to the caller over the same bidirectional WebSocket, completing the loop. Every step is a clean, direct call that you fully control: you can set custom headers, manage timeouts, and handle errors with surgical precision.
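The orchestration above can be sketched as a small function that takes the transcript and two callables, one per provider. Everything provider-specific here is hypothetical: the request and response shapes (`prompt`, `reply`), the Bearer header, and the fallback sentence are placeholders, not any vendor’s actual API.

```python
import json
import urllib.request
from typing import Callable


def post_json(url: str, payload: dict, api_key: str, timeout: float = 5.0) -> dict:
    """POST a JSON body and decode the JSON reply, with an explicit timeout."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())


def make_llm_client(url: str, api_key: str) -> Callable[[str], str]:
    """Wrap post_json for a hypothetical LLM endpoint: {"prompt"} -> {"reply"}."""
    return lambda text: post_json(url, {"prompt": text}, api_key)["reply"]


def handle_turn(
    transcript: str,
    ask_llm: Callable[[str], str],
    synthesize: Callable[[str], bytes],
    fallback_text: str = "Sorry, could you repeat that?",
) -> bytes:
    """Run one conversational turn: transcript -> LLM reply -> audio bytes."""
    try:
        reply = ask_llm(transcript)
    except OSError:
        # urllib's URLError and socket timeouts are both OSError subclasses,
        # so a failed or slow LLM call lands here and gets a canned reply.
        reply = fallback_text
    return synthesize(reply)
```

Passing the providers in as plain callables keeps the turn logic testable with fakes, and makes swapping one STT, LLM, or TTS vendor for another a one-line change.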

Ready to build a voice assistant that your customers will love? Sign up for FreJun AI’s developer-first voice API.

How Does FreJun AI Champion This API-First Philosophy?

This lightweight, API-first philosophy is the very core of our identity at FreJun AI. We believe that a developer’s greatest asset is their own creativity, not their ability to navigate a vendor’s bloated SDK. That’s why we’ve built our entire platform to be the perfect “workbench” for the professional voice developer.

Our philosophy is simple: “We handle the complex voice infrastructure so you can focus on building your AI.”

- A Clean, Well-Documented REST API: Our API is designed to be powerful yet simple. Every feature of our platform, from call control to real-time streaming, is accessible through a clean, predictable RESTful interface.

- Raw WebSocket Access: We don’t force you into a proprietary streaming format. We give you direct access to the raw audio stream over a standard WebSocket, giving you the ultimate flexibility to integrate with any STT engine or custom audio processing logic.

- We Are Not Another Heavy Framework: FreJun AI is not another complex framework you have to learn. We are the un-opinionated, high-performance infrastructure layer. We provide the essential, reliable “power source” for your AI “tools,” but we never tell you how you have to build.

What Are the Long-Term Benefits of an API-First Voice Architecture?

Adopting an API-first approach for your AI Voice Chat Bot is more than just a development preference; it’s a strategic decision that pays long-term dividends.

- Enhanced Performance and Reliability: By removing the layers of abstraction an SDK creates, you can build a more direct and efficient data path. This often results in lower latency and a more reliable system. This is crucial for enterprise-grade applications, as a recent report on IT modernization found that API-led integration can result in a 35% increase in operational efficiency.

- Simplified Maintenance: A system with fewer external dependencies is easier to maintain, debug, and secure.

- Greater Innovation: When the limitations of an SDK do not constrain you, your team is free to experiment and innovate, building the unique, groundbreaking features that will set your voice bot apart from the competition.

Conclusion

The journey to building a truly exceptional AI Voice Chat Bot is a journey of control. While the “quick start” promise of a heavy SDK can be tempting, the professional path lies in embracing the power, simplicity, and flexibility of a direct, API-first approach. It’s about moving from being an assembler of pre-fabricated parts to being a true craftsman.

By building your application on a foundation of a developer-first, API-driven voice infrastructure, you give yourself the freedom to choose the best tools, the control to optimize for the best performance, and the flexibility to build a voice AI that is not just functional, but a true work of art.

Want to learn more about the infrastructure that powers the most advanced audio chat bots? Schedule a demo with FreJun AI today.

Frequently Asked Questions (FAQs)

What is the difference between an API and an SDK?

An API (Application Programming Interface) is a set of rules and definitions that allows two applications to communicate with each other. An SDK (Software Development Kit) is a collection of tools, libraries, and code that is designed to make it easier to work with a specific API, but it often adds a layer of abstraction and can be “heavy.”

Is an API-first approach harder than using an SDK?

It can have a slightly steeper initial learning curve, as you need to handle HTTP requests and WebSocket connections directly. However, for any experienced web developer, these are standard, everyday tasks, and the long-term benefits in control and performance often far outweigh the initial effort.

Do I have to give up SDKs entirely?

No. A good strategy is to use direct APIs for the critical, real-time path (like audio streaming) where you need maximum control and performance, and you might use a lightweight SDK for less critical, non-real-time tasks (like looking up call logs).

How is authentication handled when calling APIs directly?

Authentication is typically handled by including a secret API key in the headers of your HTTP requests. Any reputable API provider will have clear documentation on their specific authentication scheme.

How do I handle the raw audio that arrives over the WebSocket?

The audio data will arrive as a stream of binary packets. Most programming languages have built-in or widely used libraries for handling WebSocket connections and binary data. Your application will simply need to take these packets and forward them to your STT provider’s streaming API, which is designed to accept this format.

Is an API-first approach more secure?

It can be. By having fewer third-party dependencies, you reduce the potential attack surface of your application. You also have full control over the security of every single API request your application makes.

Can I use frameworks like LangChain with an API-first voice infrastructure?

Yes, absolutely. Frameworks like LangChain are designed to work with direct API calls to LLMs and other tools. An API-first voice infrastructure is the perfect complement to these frameworks, as it provides the clean, real-time data stream that they need to operate.

What is a webhook?

A webhook is an automated notification sent from one application to another when a specific event occurs. In a voice API, webhooks are used to inform your application about real-time events, such as an incoming call, so your code can react instantly.

How does FreJun AI simplify the API-first approach?

FreJun AI simplifies the process by handling all the deep, complex telephony. We provide a clean, well-documented REST API for call control and a standard WebSocket interface for audio streaming. We’ve done the hard work of building the carrier-grade infrastructure, so you can focus on the simple, powerful APIs.

What is the first step in building an API-first voice bot?

The very first step is to thoroughly read the API documentation of your chosen voice infrastructure provider. Understand their authentication methods, their webhook structure, and how they handle real-time streaming. A solid understanding of the API is the foundation of your entire project.