Adding voice to chatbots is no longer experimental – it is becoming a core requirement for teams building customer-facing products. Modern users expect to talk, interrupt, and get answers naturally, just as they would with a human. To achieve this, developers need more than just text-based logic; they need the top programmable voice AI APIs with low latency that can handle real-time streaming, connect with speech recognition, and deliver natural Text-to-Speech output. This guide explains how to build a chatbot voice assistant step by step, why a voice API for developers matters, and what pitfalls to avoid when moving from prototype to production.

What Does a Chatbot Voice Assistant Actually Need?

A chatbot that speaks is not a different product from a text bot; it is the same logic wrapped in an additional layer of real-time audio. But this extra layer comes with new requirements.

At a high level, the journey looks like this:

- The user speaks into a phone or device microphone.

- Audio is captured and streamed to a speech recognition service.

- The recognized text is passed to the chatbot’s dialog engine or large language model.

- A response is generated.

- The response is sent to a TTS engine that converts it into speech.

- Audio is streamed back to the user in the same call or app.

Each step must work in less than a second to avoid awkward pauses. For a voice agent to succeed, the transition from speech in to speech out must feel as seamless as a human conversation.

How Text-to-Speech (TTS) Powers Real-Time Conversations

Text-to-Speech is more than a utility that reads text aloud. It determines how natural and trustworthy the assistant sounds. If TTS is slow, flat, or inaccurate with pronunciation, users immediately sense the system is artificial.

Why TTS is critical

- Timing: The assistant must respond quickly, ideally starting playback within a fraction of a second.

- Clarity: The voice must handle different languages, accents, and technical terms.

- Engagement: Prosody features such as pitch, tone, and pauses make the difference between robotic speech and a human-like interaction.

Streaming vs batch TTS

There are two ways TTS systems generate audio:

- Batch generation creates the full audio file before playing it. This is fine for static content like pre-recorded messages, but too slow for live conversations.

- Streaming generation produces audio in small segments as soon as the text is ready. This allows the assistant to start speaking almost immediately, which is essential for live calls.

For real-time chatbot voice assistants, streaming TTS is the only viable approach.

Implementation best practices

- Choose neural voices that capture natural rhythm and intonation.

- Keep latency under 300 milliseconds from text to audio output.

- Use pronunciation dictionaries to handle brand names, medical terms, or local words.

These practices ensure that the assistant not only speaks but also speaks in a way that builds user confidence.

Where Does Speech-to-Text (STT) Fit In?

If TTS is the output, STT is the input. Without accurate transcription, even the best dialog engine cannot produce relevant answers. The automatic speech recognition (ASR) market alone is forecast to grow from USD 9.1 billion in 2024 to USD 30.5 billion by 2033, reflecting rising demand for reliable STT in voice systems.

What STT must deliver

- Real-time transcription so that words appear as they are spoken, not after a delay.

- Interim results to allow the system to start processing before the user finishes speaking.

- Noise handling to work reliably in real-world environments such as a busy street or a call center.

- Accent adaptability since global deployments must understand diverse speech patterns.

Why latency matters

The conversion from speech to text should take a few hundred milliseconds at most. Longer delays create unnatural gaps and can cause users to interrupt or repeat themselves. This is where careful tuning of STT services becomes important, especially in telephony use cases where bandwidth may fluctuate.

How the LLM and Dialogue Brain Work With Voice

Between STT and TTS sits the heart of the assistant: the dialog brain. In many modern implementations, this is a large language model (LLM) or a combination of rules and models. Its role is to understand intent, keep track of context, and decide the best response.

Key functions of the dialog brain

- Intent recognition: Interpreting what the user really wants.

- Context tracking: Remembering earlier turns in the conversation.

- Knowledge access: Using retrieval techniques to bring in information from databases or documents.

- Tool execution: Calling APIs or services to perform tasks such as booking a slot or checking an order.

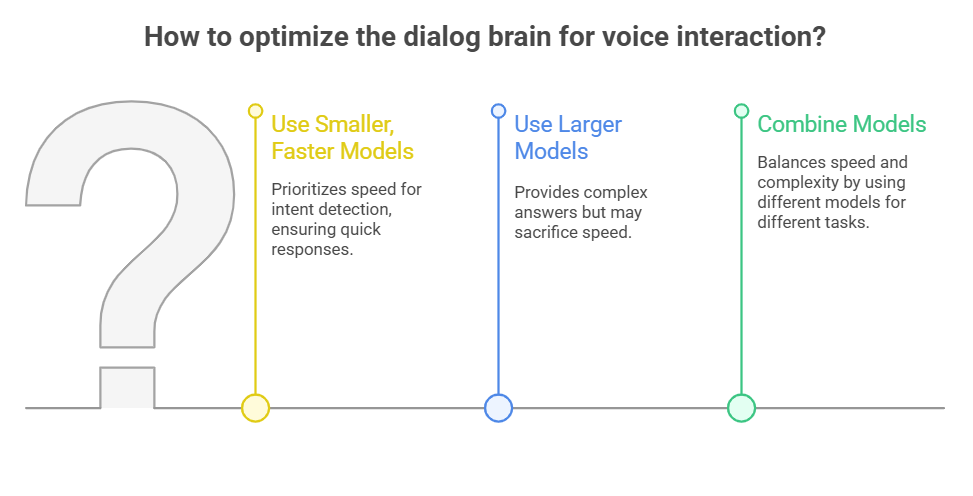

In a voice assistant, these functions must be optimized for speed. A slow model response increases the overall round-trip time and breaks the natural rhythm of dialogue. Teams often use smaller, faster models for intent detection combined with larger models for complex answers.

Architectures for Adding Voice to Chatbots

There are two common deployment patterns depending on where the assistant will be used.

Voice inside apps or websites

In this setup, the microphone captures audio directly in the browser or mobile app. The audio stream is sent to an STT service, the dialog engine processes it, and the response is returned through a TTS engine. The synthesized speech is then played back on the device.

This architecture is straightforward when you control the user interface. It is common for education apps, embedded assistants in mobile banking, or customer support widgets.

Voice over phone calls or telephony

Here the assistant must connect with public telephone networks or VoIP lines. The audio is captured at the network edge, streamed through a voice API integration, and routed to STT. Once the dialog engine generates a response, TTS output is streamed back over the call.

This approach is more complex but opens use cases such as automated receptionists, customer helplines, and outbound campaigns. Handling media streams, latency, and call routing requires specialized infrastructure, which is why dedicated voice APIs are often used.

Understand the key differences between programmable voice APIs and traditional cloud telephony to choose the right foundation for your chatbot assistant.

Step-by-Step: How To Add Voice To Your Chatbot With TTS

For teams looking to move from concept to working prototype, the process can be broken into clear steps.

- Select your dialog engine. This could be an LLM like GPT, Claude, or a local open-source model. Decide how you will manage context and whether you need retrieval from external data.

- Integrate STT. Choose a service that supports streaming results and test it with your expected user accents and noise environments.

- Add TTS. Ensure it can stream audio, supports the languages you need, and gives control over tone and pacing.

- Connect with users through voice API integration. For apps, this may be WebRTC; for telephony, you need APIs that handle PSTN or SIP reliably.

- Test latency and flow. Run simulations to confirm that each turn of conversation happens smoothly. Measure from user speech to audio reply.

Following these steps gives a working chatbot voice assistant that can scale from simple demos to production scenarios.

How FreJun Teler Helps You

In earlier sections, we saw that adding voice to a chatbot requires four parts working together: Speech-to-Text, a dialog brain, Text-to-Speech, and reliable audio delivery. For prototypes, developers often connect these components with simple scripts or cloud services. But when you move to production, the real complexity emerges in the voice infrastructure layer.

Challenges like streaming live calls over PSTN or VoIP, handling jitter, keeping latency under one second, and ensuring uptime across geographies are difficult to solve in-house.

This is where FreJun Teler provides a purpose-built solution. It delivers:

- Real-time media streaming that ensures every spoken word is captured without delay.

- Low-latency audio transport so TTS responses feel instant.

- Model-agnostic flexibility to use any STT, TTS, or LLM stack.

- Enterprise-grade reliability and security, so voice assistants stay available and compliant at scale.

In practice, this means you can focus on building conversational intelligence, while Teler ensures the voice pipeline is seamless, fast, and production-ready.

Use Cases of Voice-Enabled Chatbots

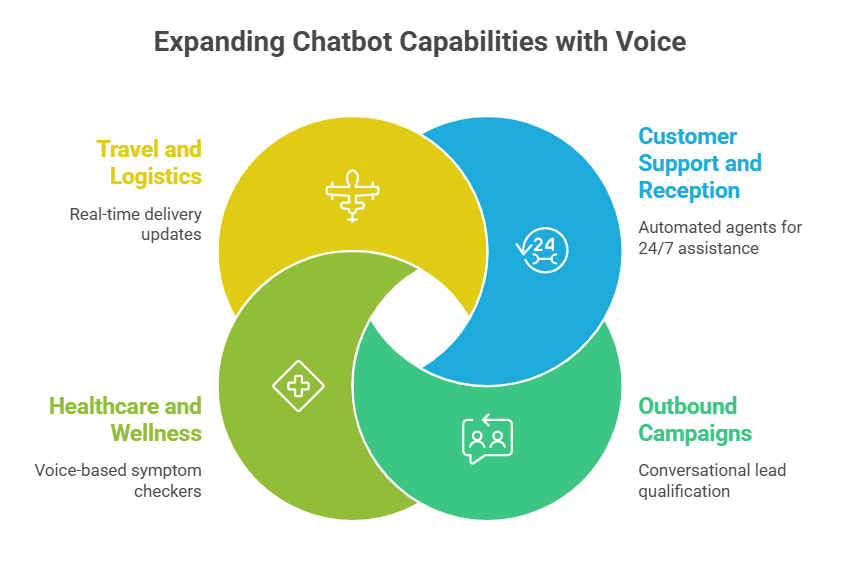

Adding voice to chatbots unlocks new categories of applications. While text chatbots are often limited to web widgets or apps, voice assistants can reach users wherever they are-on the phone, in their car, or using a smart device at home.

Customer Support and Reception

- Automated front-line agents that can answer common questions 24/7.

- Intelligent call routing based on natural language requests.

- Reduced wait times and lower staffing costs.

Outbound Campaigns

- Appointment reminders and follow-ups.

- Lead qualification with natural, conversational tone.

- Post-purchase feedback calls.

Healthcare and Wellness

- Voice-based symptom checkers.

- Medication reminders for elderly patients.

- Secure inbound lines for telehealth pre-screening.

Travel and Logistics

- Flight and booking confirmations through phone calls.

- Package delivery updates with real-time voice notifications.

These examples highlight how voice assistants extend chatbot capabilities into scenarios where typing is inconvenient or impossible. According to Gartner, 85 % of customer service leaders are set to explore or pilot conversational AI (voice-enabled) solutions in 2025, reflecting how core these agents are becoming in support operations.

Technical Challenges and How to Solve Them

Deploying a chatbot voice assistant is not without obstacles. Teams often underestimate how real-world environments affect performance.

Latency

The most critical challenge. Each step-STT, dialog engine, TTS, and audio streaming-adds milliseconds. When combined, these can cause delays of several seconds if not managed well. The target is to keep the full round-trip under one second.

Solutions:

- Use streaming STT and TTS services.

- Deploy dialog engines close to where calls are processed.

- Avoid unnecessary audio transcoding between codecs.

Accents and Languages

Assistants must work for global users, which means handling diverse speech patterns.

Solutions:

- Choose STT providers with wide language and accent support.

- Provide pronunciation dictionaries and lexicons for TTS.

- Continuously test with user samples from different regions.

Noise and Interruptions

Background noise and users speaking over the assistant (known as barge-in) can break the flow.

Solutions:

- Implement voice activity detection (VAD) to filter silence and noise.

- Support barge-in handling so the assistant can pause mid-response.

- Use noise-cancelled audio capture on client devices.

Security and Compliance

Voice conversations may involve sensitive data such as account numbers or medical details.

Solutions:

- Encrypt all media streams in transit.

- Mask or redact sensitive phrases in transcripts.

- Follow regional compliance frameworks such as GDPR and HIPAA.

By preparing for these challenges early, teams can build assistants that work reliably in production, not just in the lab.

Discover proven strategies to reduce delays in speech processing and create seamless chatbot voice assistants. Read our guide on lowering latency now.

Future of Voice in AI Agents

The evolution of voice assistants is only beginning. A few trends are shaping the next wave:

Multi-Modal Interaction

Assistants will combine voice, text, and visual elements in one flow. For example, a banking assistant may explain options over the phone and send a summary via SMS or app notification.

Emotional and Adaptive TTS

Next-generation TTS engines are starting to add emotional tones-sounding empathetic when delivering bad news or cheerful when confirming success. This will reduce the “robotic” feel that still exists today.

Deeper Integration with Tools

Voice assistants will not only answer but also take action. With tool calling, they can reschedule appointments, process payments, or initiate workflows directly during the conversation.

Edge and On-Device Models

To reduce latency and improve privacy, smaller models for STT and NLU will increasingly run on-device, especially in mobile and IoT settings.

Unified Voice Infrastructure

Instead of piecing together separate providers for telephony, STT, TTS, and dialog, we will see more unified platforms. This is where solutions like FreJun Teler demonstrate how integration and reliability become competitive advantages.

Conclusion

Voice is no longer an optional layer for chatbots – it is becoming the most natural interface for customer interaction. The foundation is clear: capture speech with STT, process with your chosen dialog engine, generate responses with TTS, and deliver them through reliable voice API integration. The true challenge is execution at scale: minimizing latency, handling diverse languages, and maintaining enterprise-grade reliability.

This is where FreJun Teler adds real value. By managing the complex voice infrastructure, Teler allows your teams to focus on building intelligent, context-aware assistants while ensuring the audio layer remains seamless and dependable.

Ready to add voice to your chatbot?

Schedule a demo with FreJun Teler and see it in action.

FAQs –

1: How can developers quickly add voice to an existing chatbot?

Answer: Integrate Speech-to-Text, dialog engine, and Text-to-Speech through a low-latency voice API for developers to enable seamless conversations.

2: What’s the biggest technical challenge in voice chatbot integration?

Answer: Latency is the main challenge; real-time streaming APIs and optimized infrastructure ensure chatbot voice assistants respond naturally without awkward delays.

3: Why use a programmable voice API instead of cloud telephony?

Answer: Programmable voice APIs give developers flexibility, low-latency media streaming, and model-agnostic integration, unlike rigid cloud telephony setups with higher delays.

4: Can chatbot voice assistants scale across multiple languages?

Answer: Yes, with multilingual STT and TTS engines plus reliable voice API integration, assistants can serve diverse regions without sacrificing conversation quality.